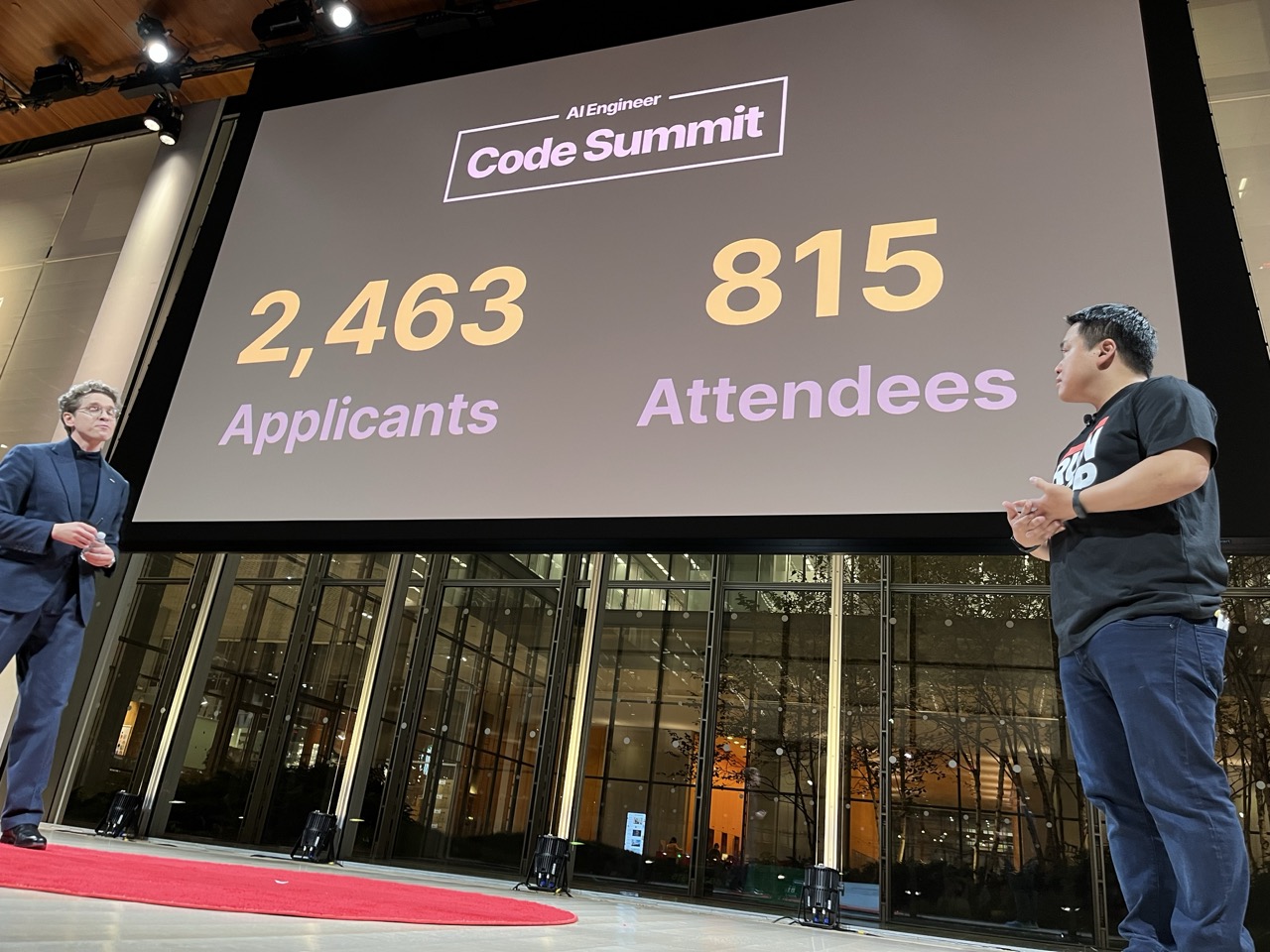

AI Engineering Code Summit

Themes

(AI Generated from the rest of the notes below)

Engineering Perspective Themes

Context Window Management & Progressive Discovery

- Skills over monolithic agents (Anthropic’s approach)

- Keeping models in the “smart zone” vs “dumb zone” of context windows

- Progressive discovery through SKILL.md files rather than exhaustive tool descriptions

- Context compaction and subagent delegation strategies

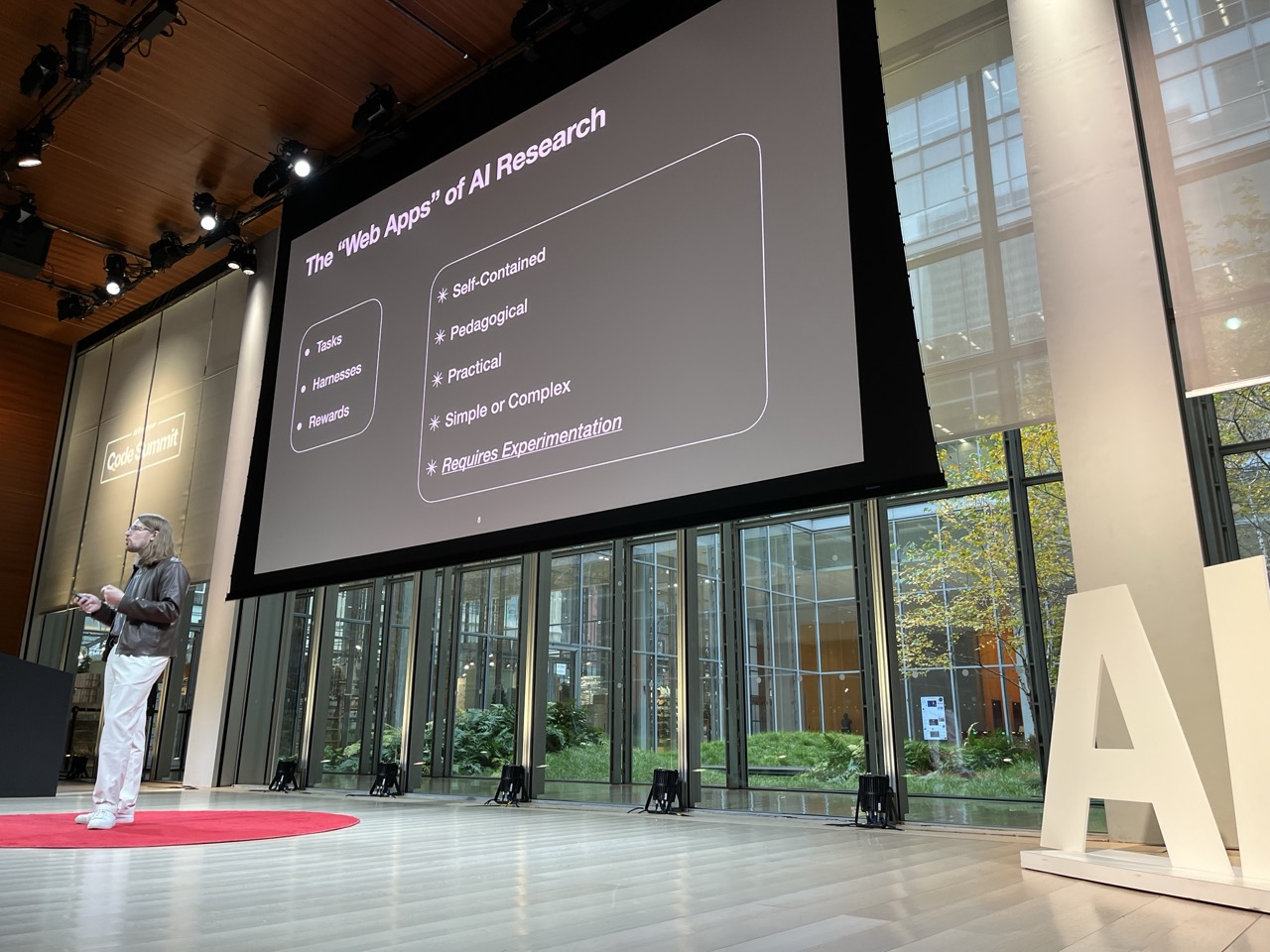

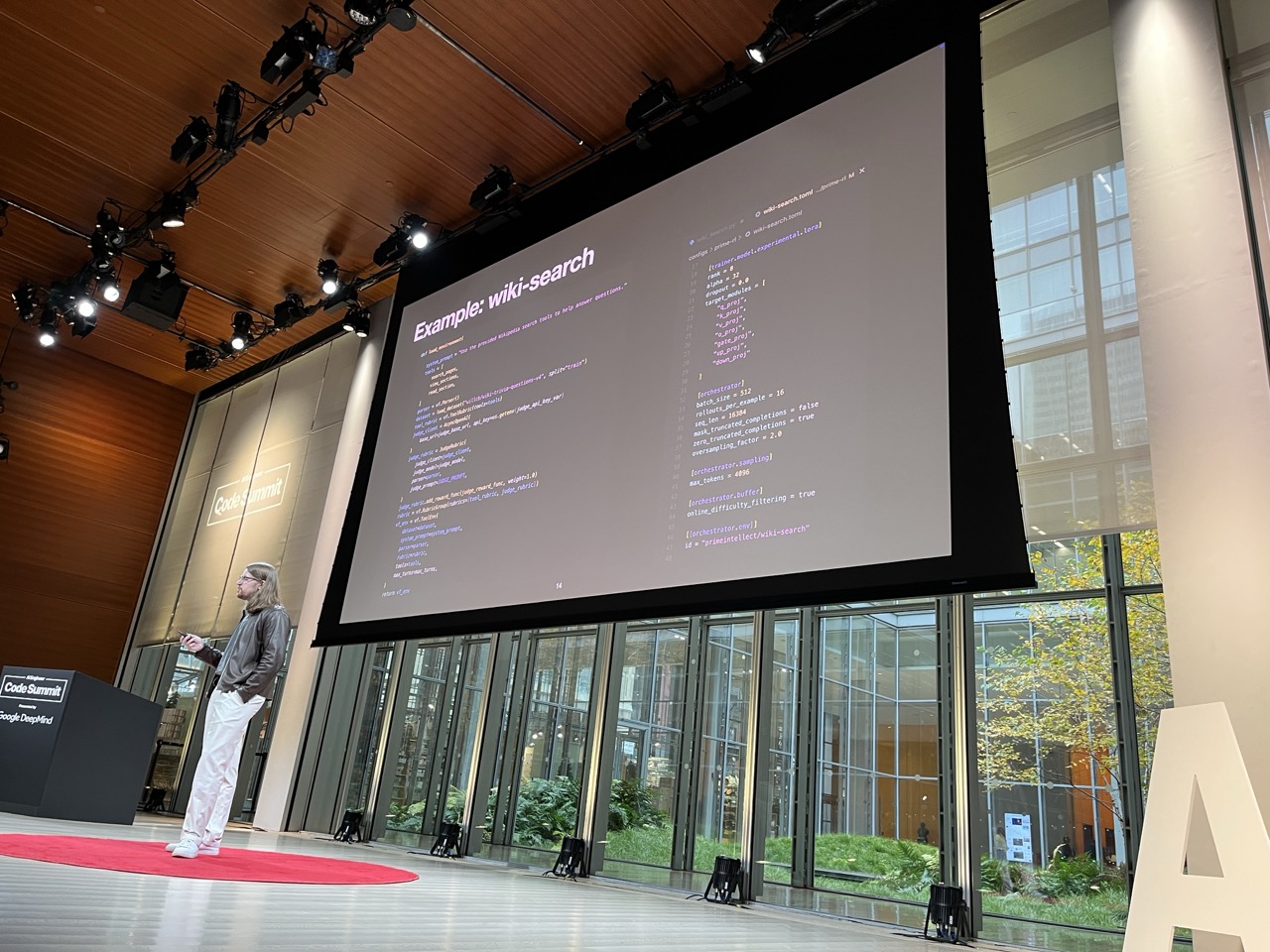

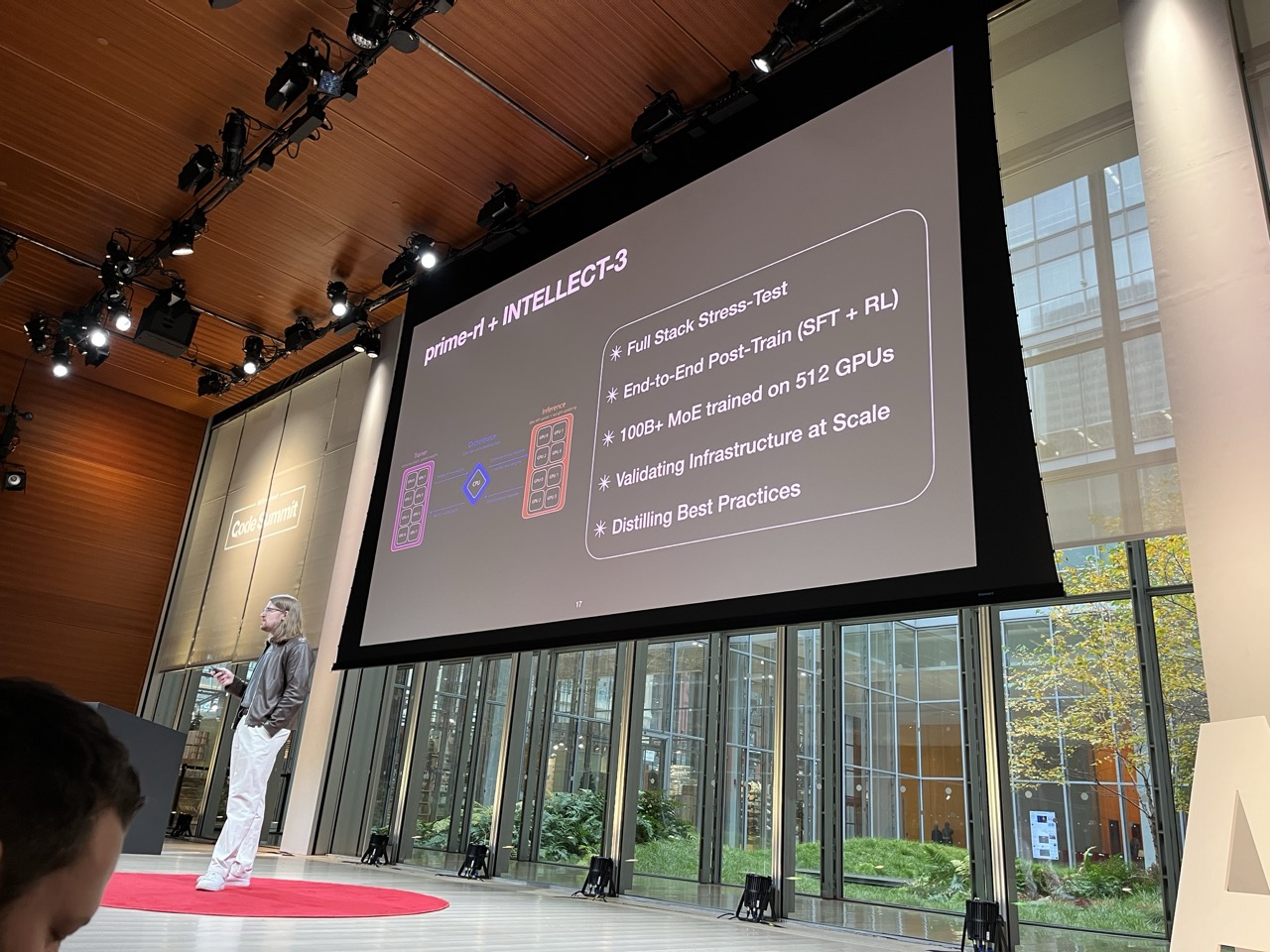

RL Infrastructure & Training Optimization

- Compute-efficient reinforcement learning as fundamental requirement

- Async RL tools to maximize GPU utilization

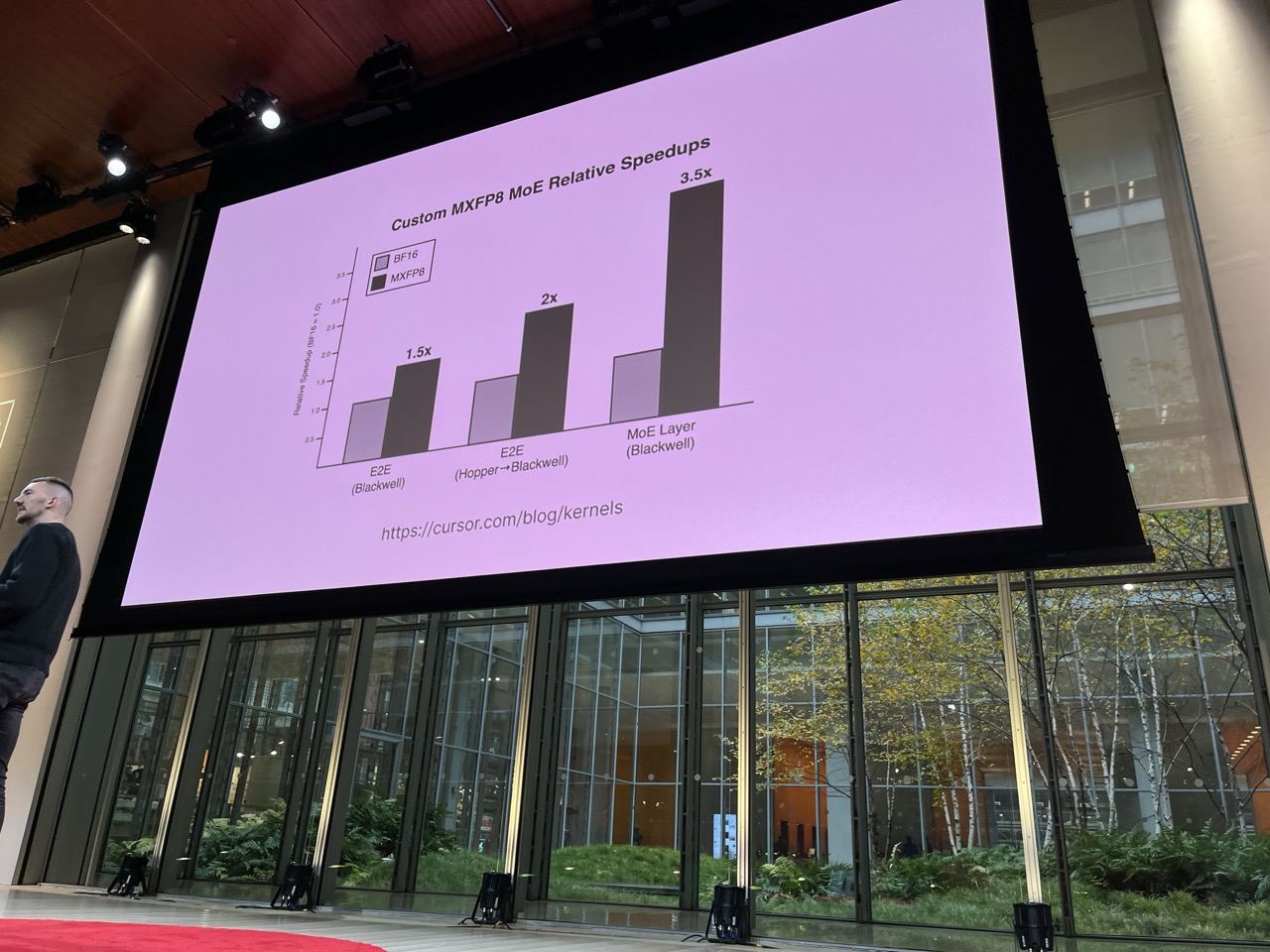

- Custom kernels for inference speedups (Cursor achieving several-fold improvements)

- Environment-training alignment as critical success factor

Evaluation & Benchmarking

- Need for non-cheatable, real-world benchmarks (cline-bench)

- RL Environment Factory concept for automated environment creation

- Importance of tight feedback cycles (code/coding ideal for RL)

- Monitoring RL reward hacking

- Gap between self-reported and actual efficacy gains

Agent Architecture Patterns

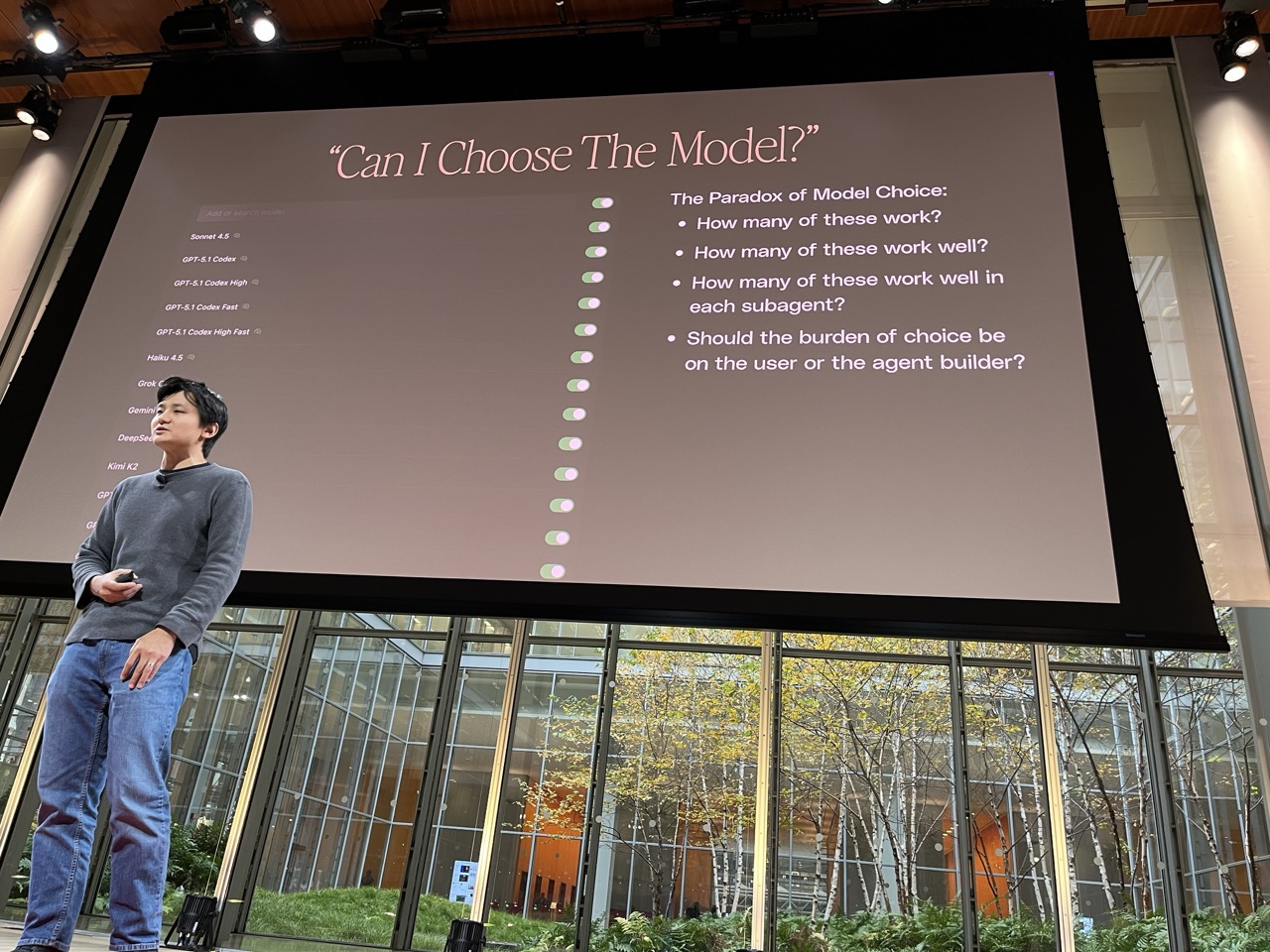

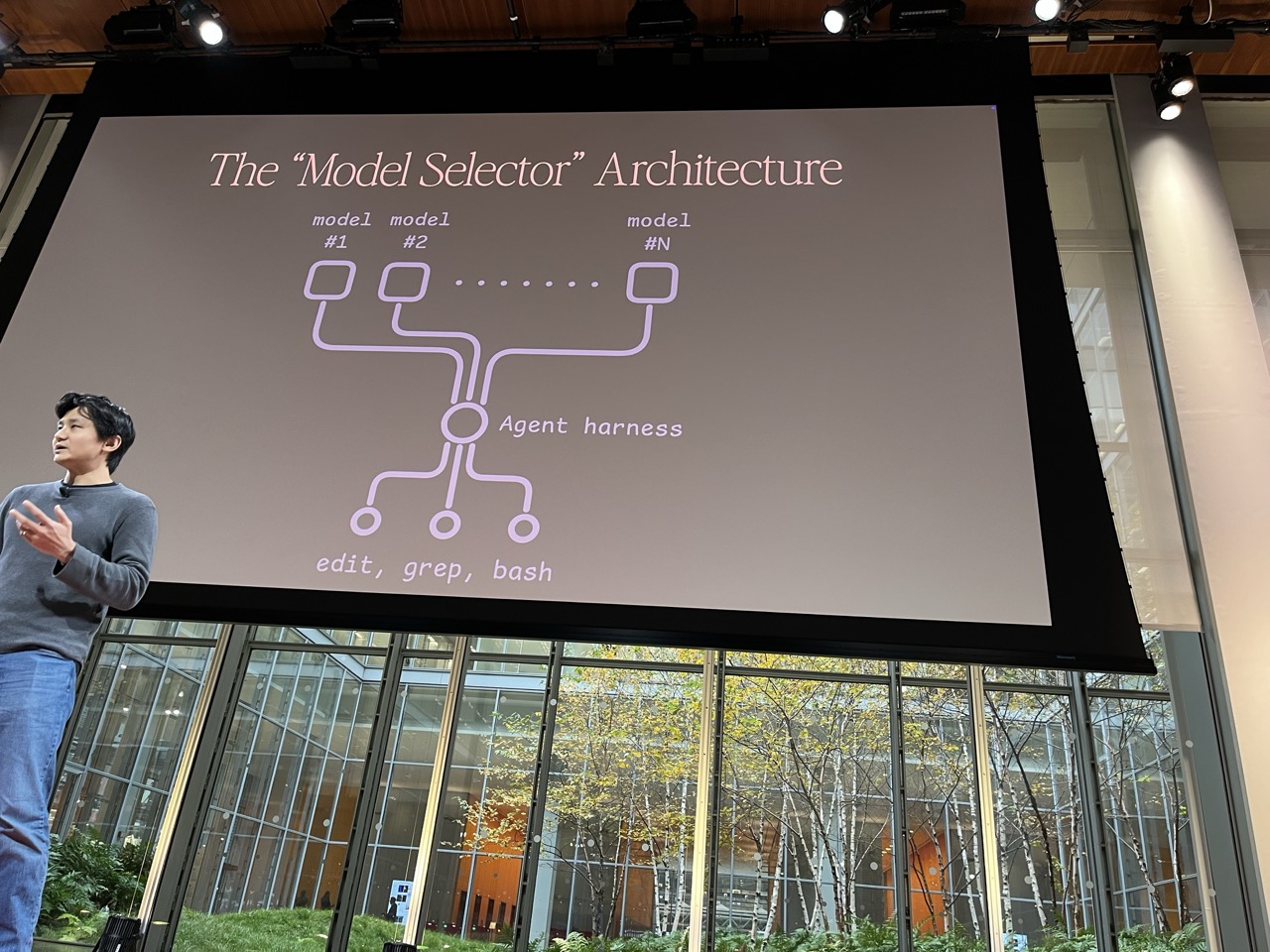

- Harness-model co-optimization

- Bash as foundational layer (“Bash is all you need”)

- Tools > Bash > CodeGen / Skills hierarchy

- Multi-agent systems with specialized roles (spec/implementation/tests triangle)

Code Quality & Production Readiness

- Deterministic validation everywhere

- Clear, actionable error messages

- Framework standardization across organizations

- CLI/API-first design for agent compatibility

- Code simplicity enabling better reasoning

- Comprehensive documentation requirements

Platform & Infrastructure

- Model serving via gateways

- MCP directories and hubs

- PaaS for tool creation/deployment

- Standardized development environments

- Cross-hardware kernel optimization

Organizational Perspective Themes

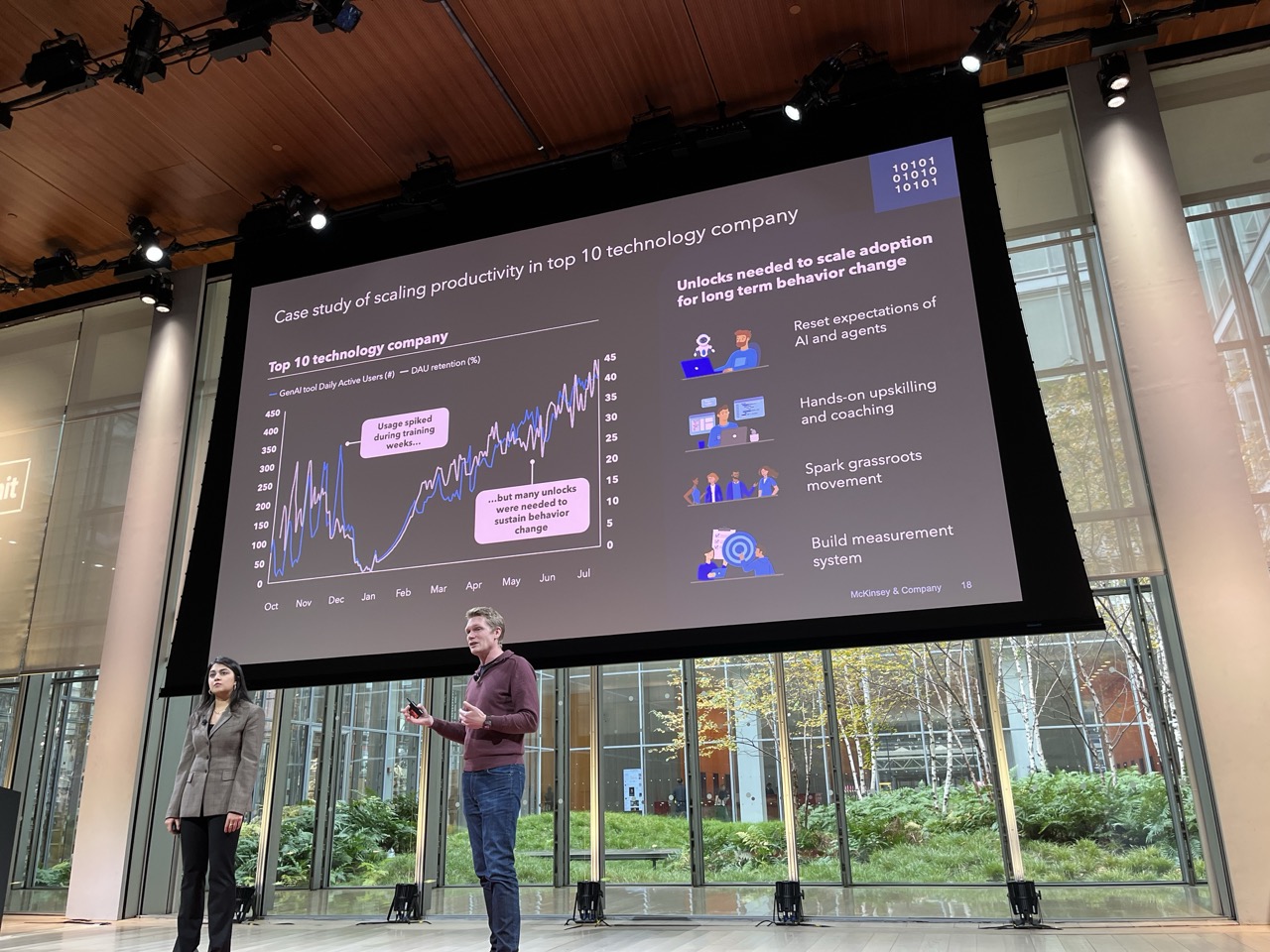

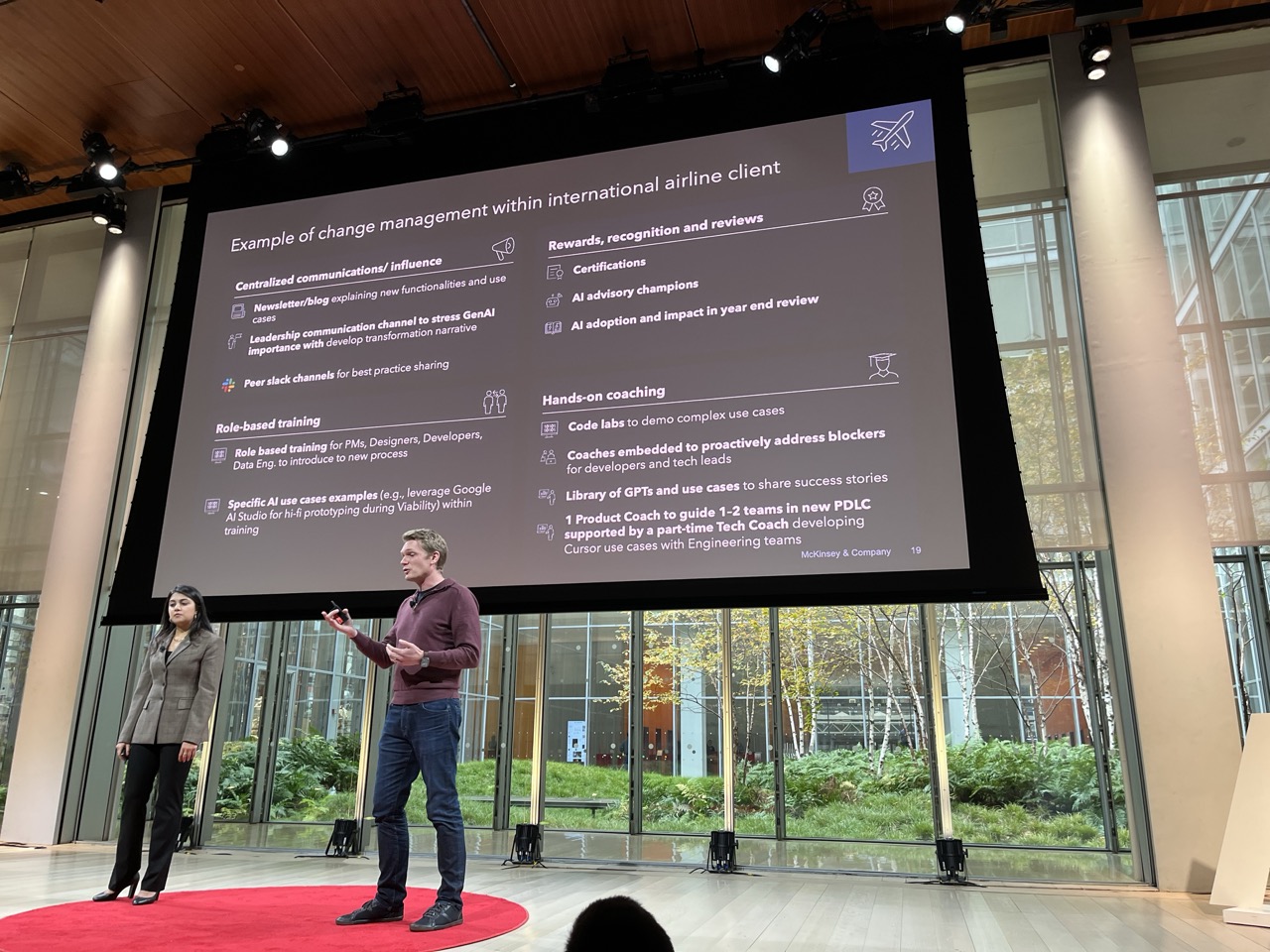

Change Management & Adoption

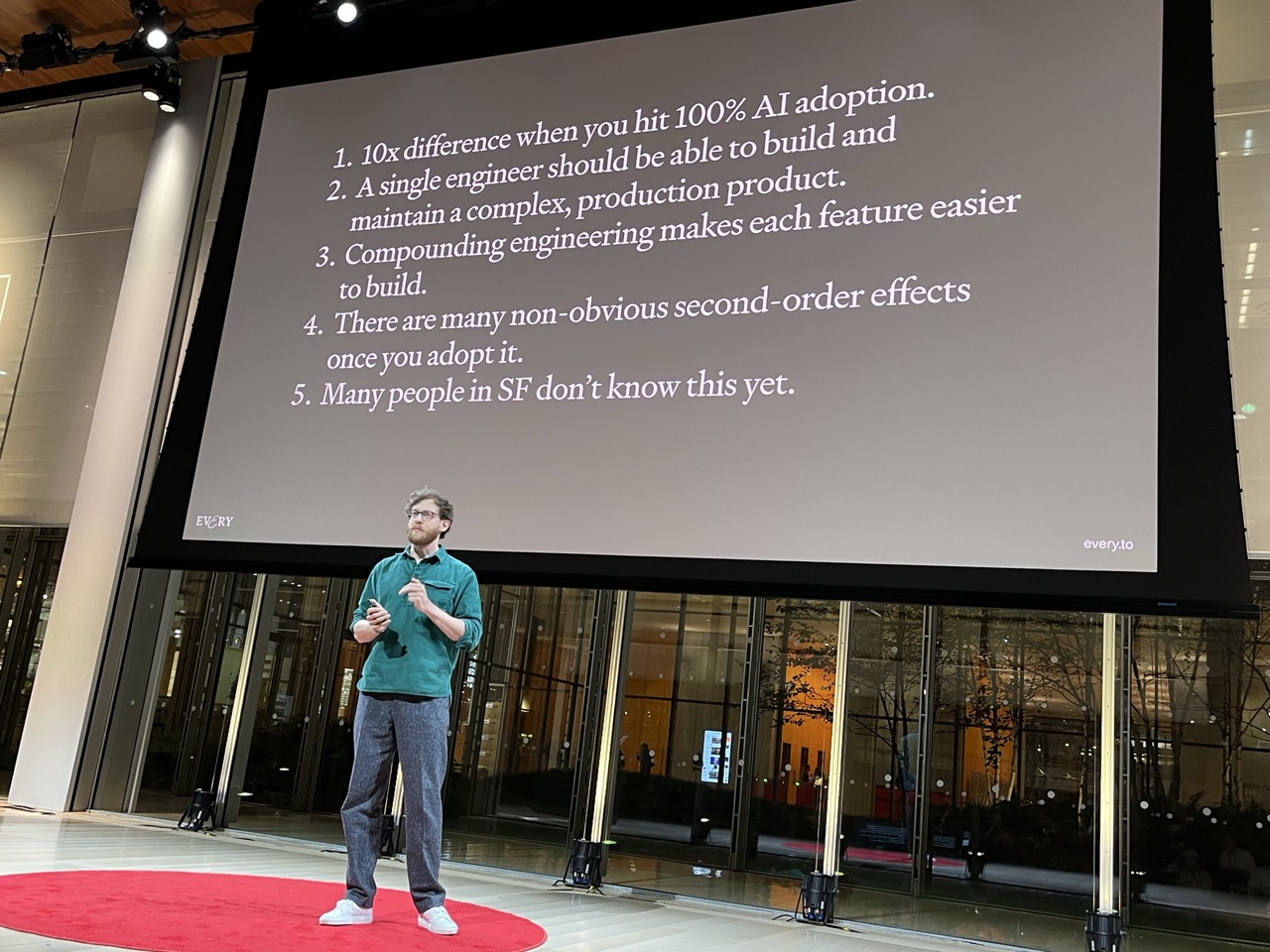

- “All-in” vs. 90% adoption makes a 10x difference

- Shift from written culture to demo culture

- Structured adoption strategies:

- Centralized communication

- Peer Slack channels for best practice sharing

- Role-based training

- Specific use cases

- Rewards programs

- Coaching and library resources

Organizational Workflow Transformation

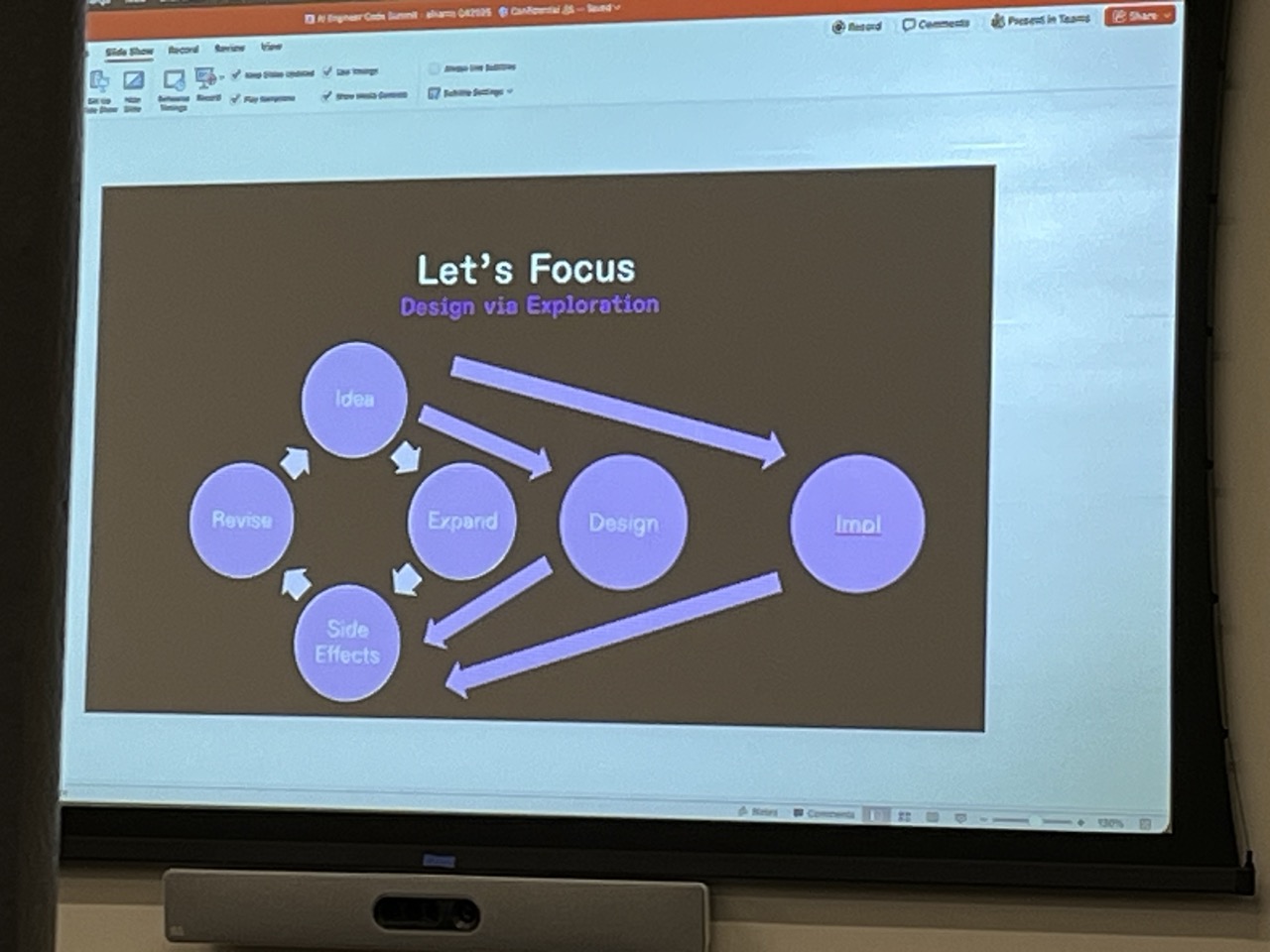

- Moving beyond Agile to AI-native workflows

- 2-person teams building production products

- Organizations less productive than individuals (needs addressing)

Developer Experience Principles

- “Freedom and Responsibility” with guardrails

- “Golden Paths” with Platform Enablement

- Democratized infrastructure via Inner Source

- Service abstraction and strong contracts

- Code review velocity optimization (clear ownership, not Slack groups)

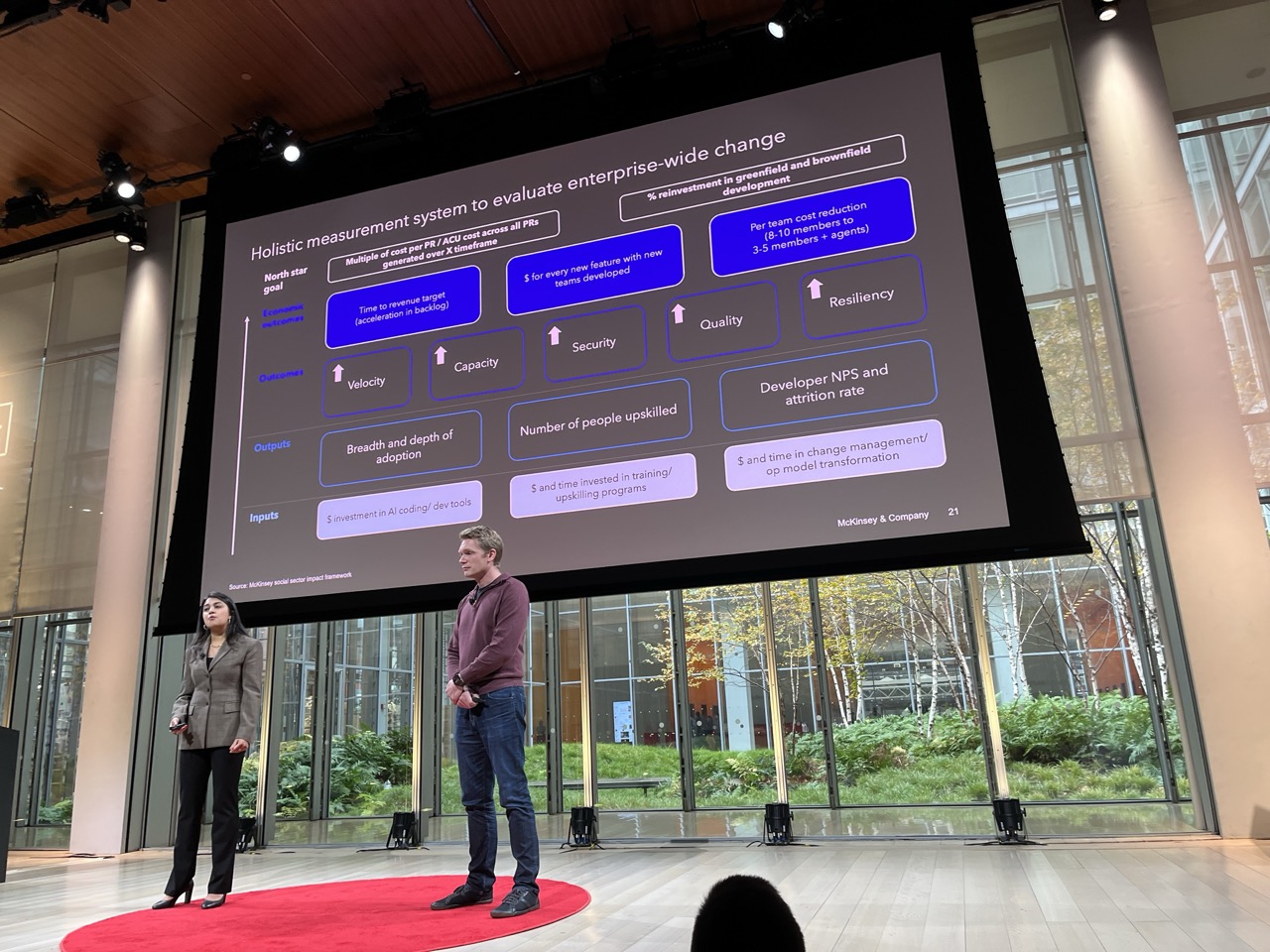

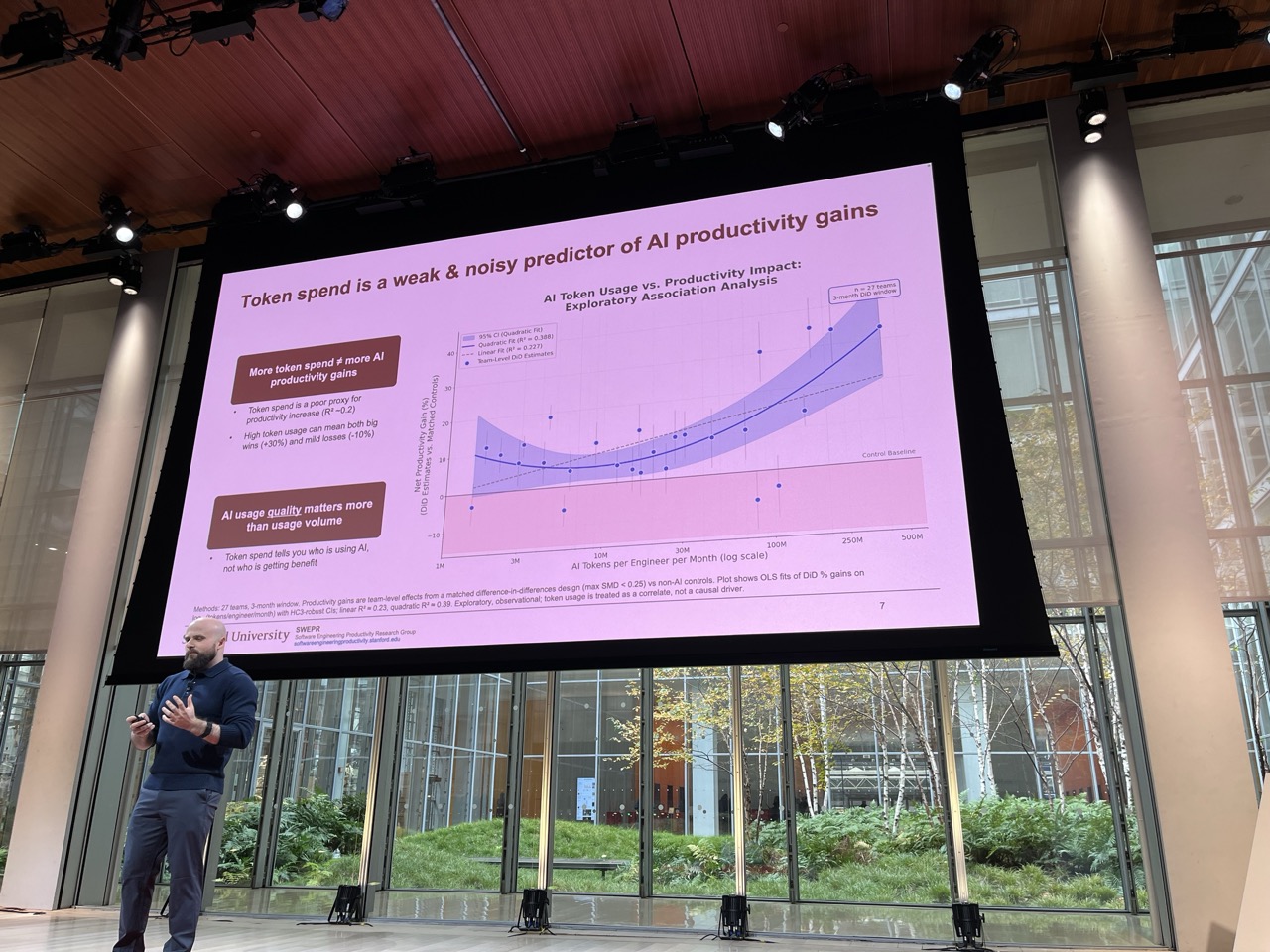

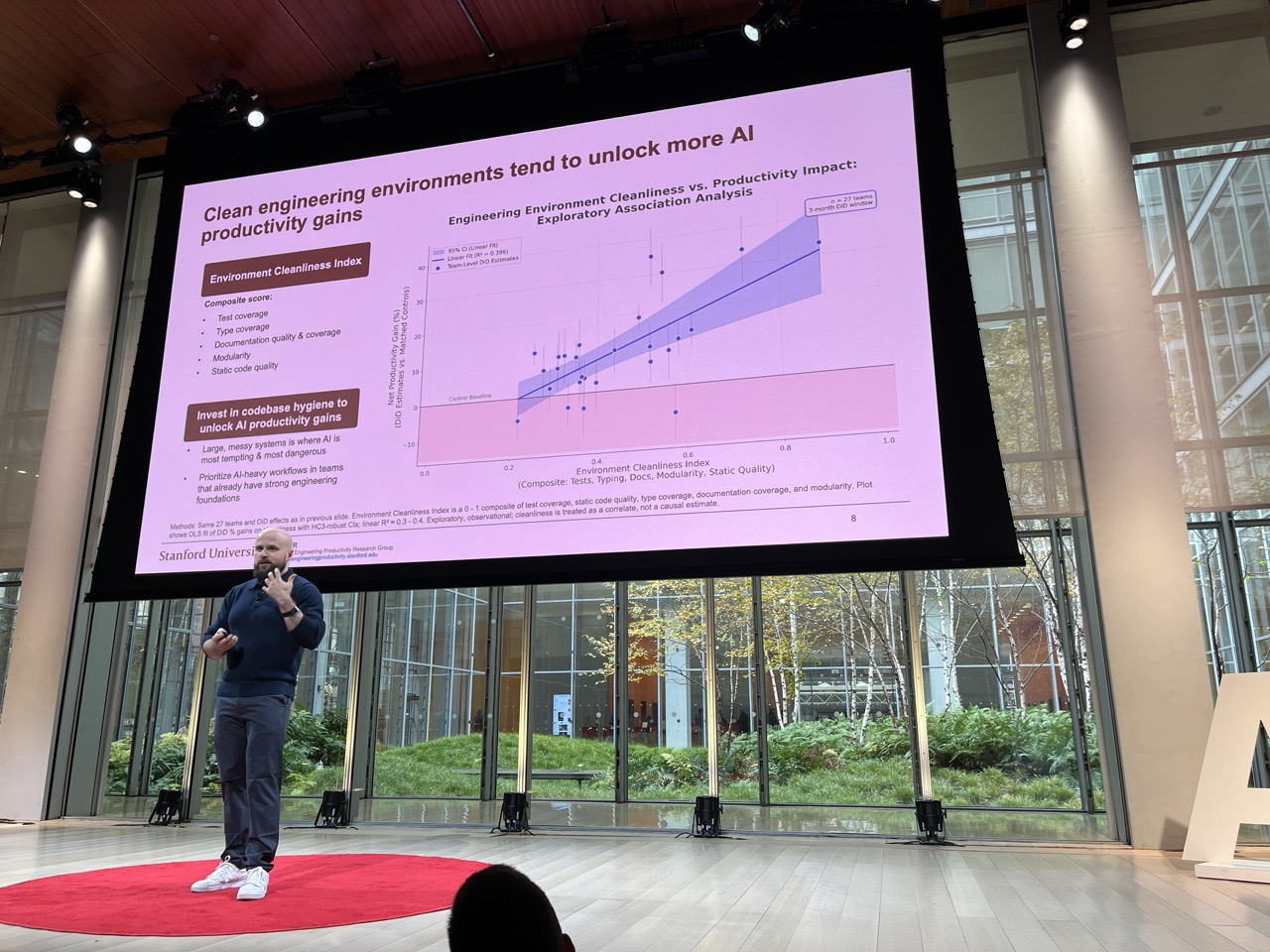

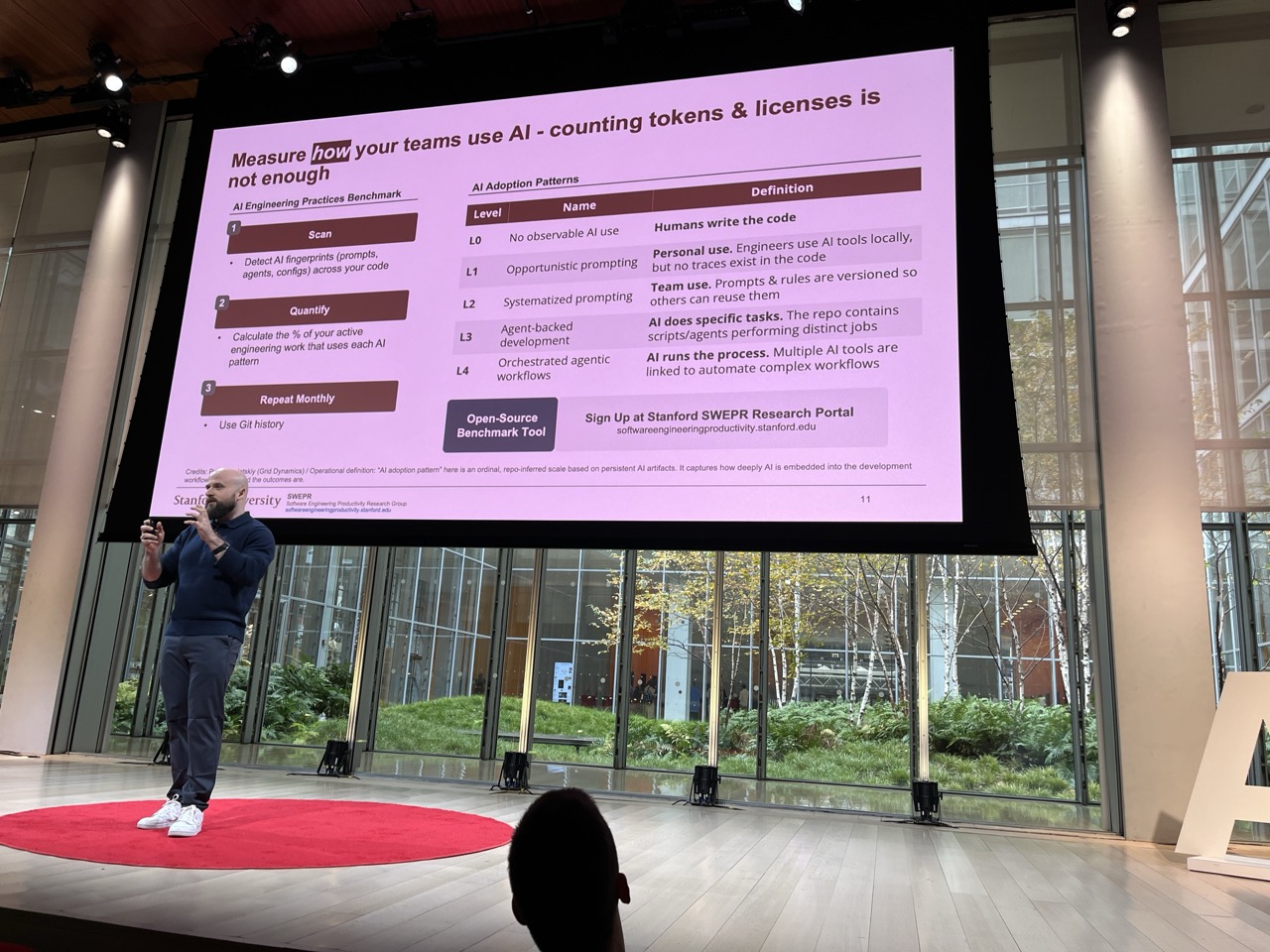

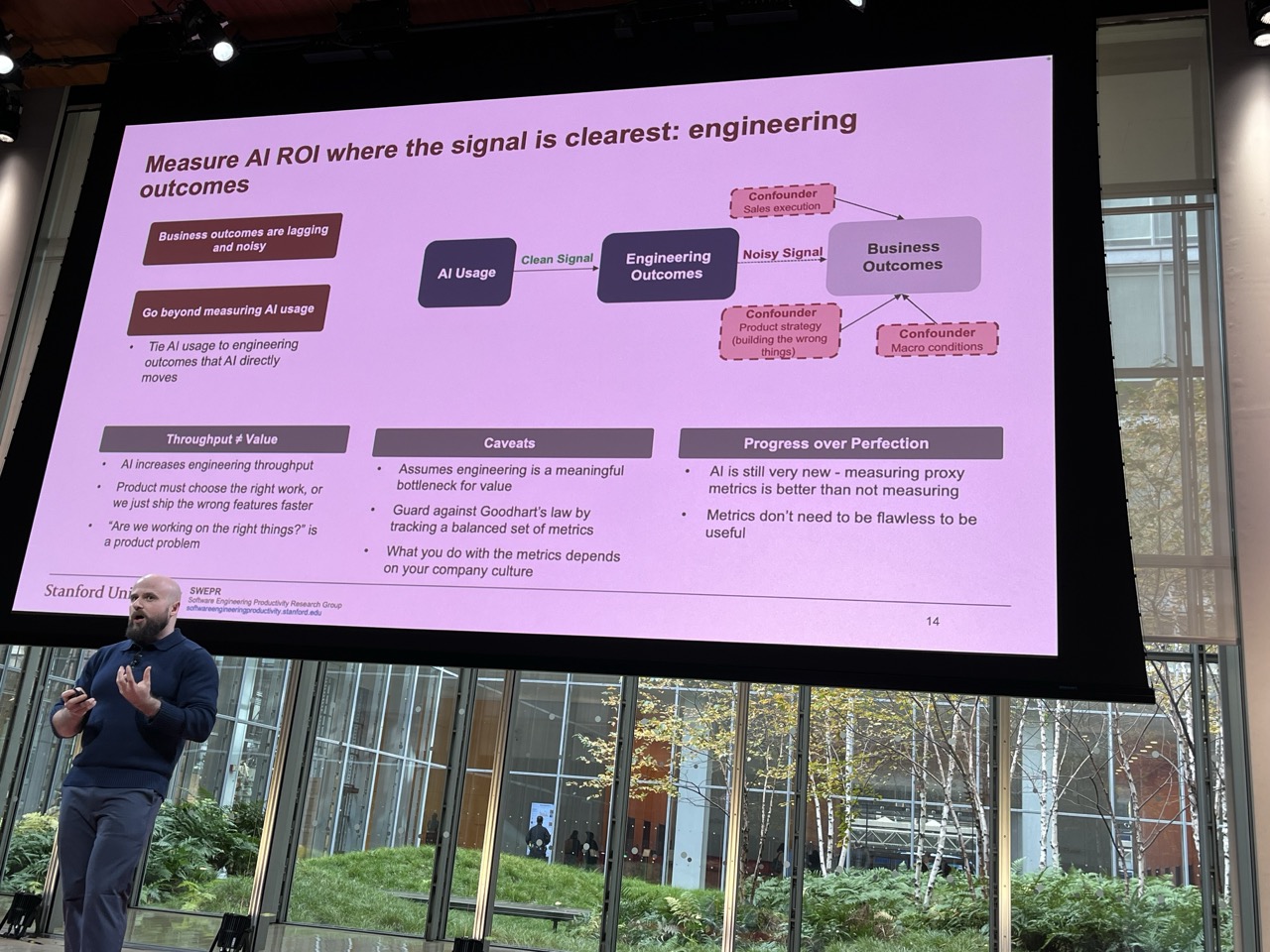

ROI & Metrics

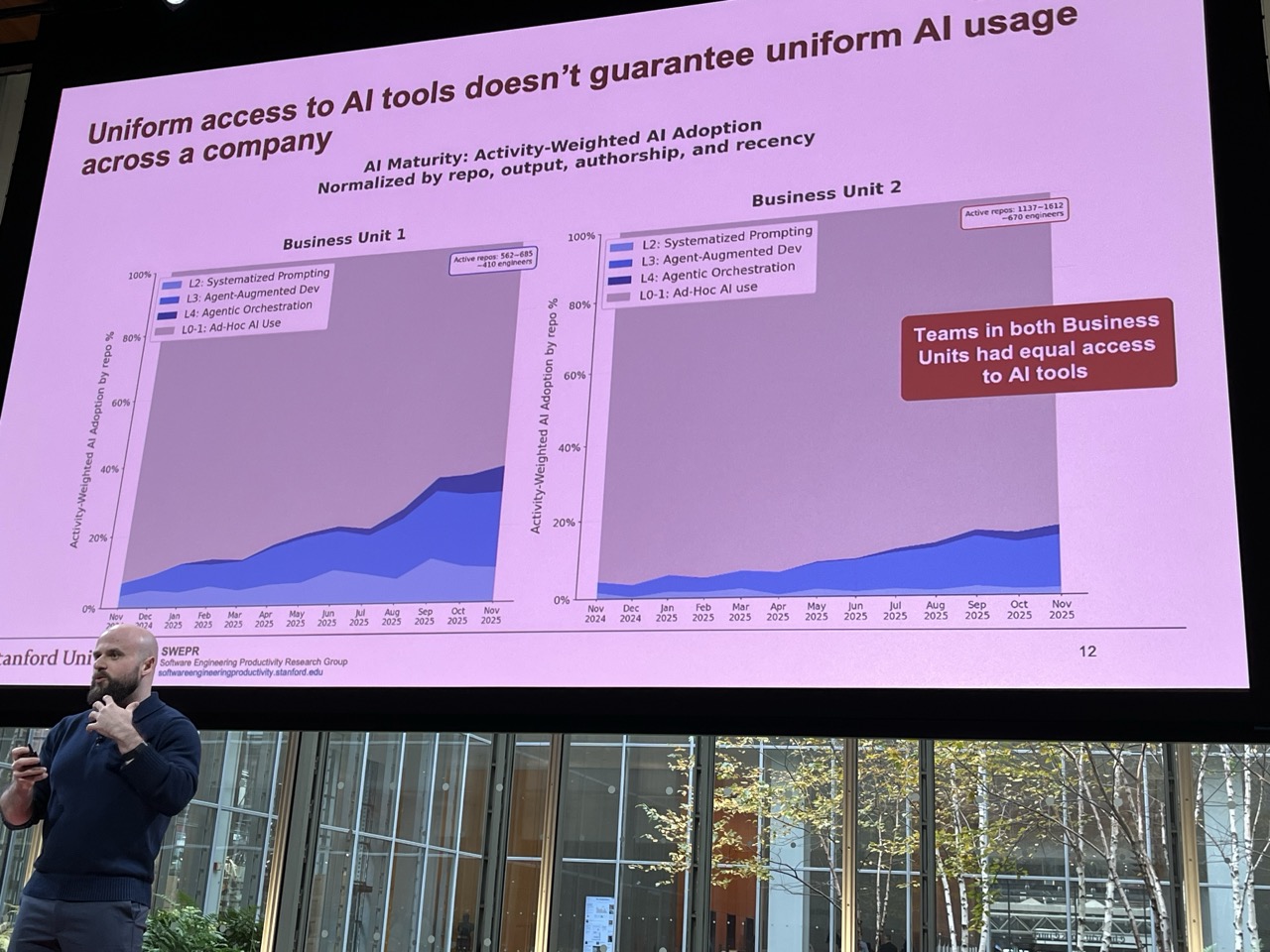

- Tool access ≠ tool usage

- Engineering outcomes easiest to measure

- Per-line data collection (as Cursor provides)

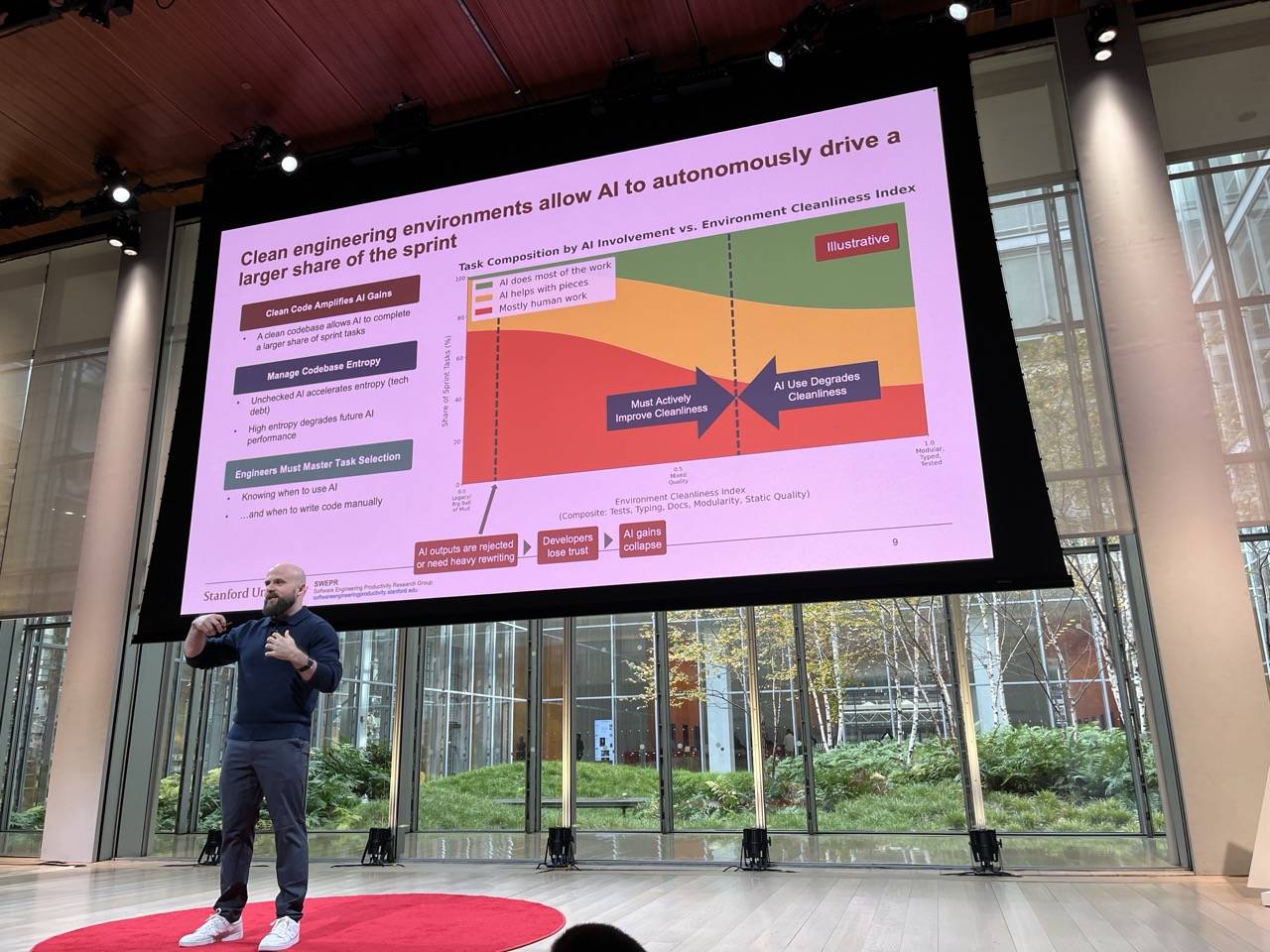

- Clean coding environments correlate with better outcomes (tests/types/docs/modularity)

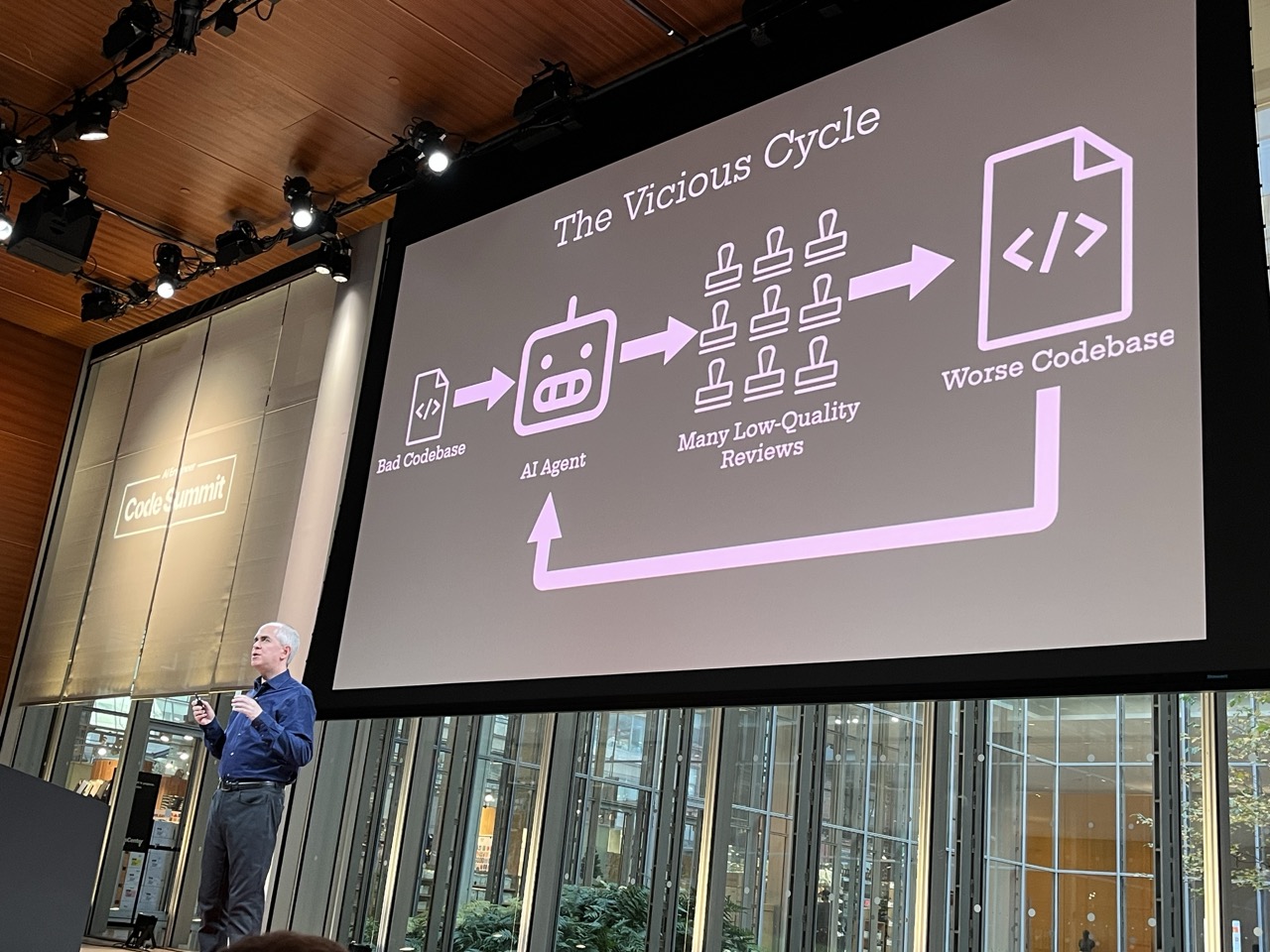

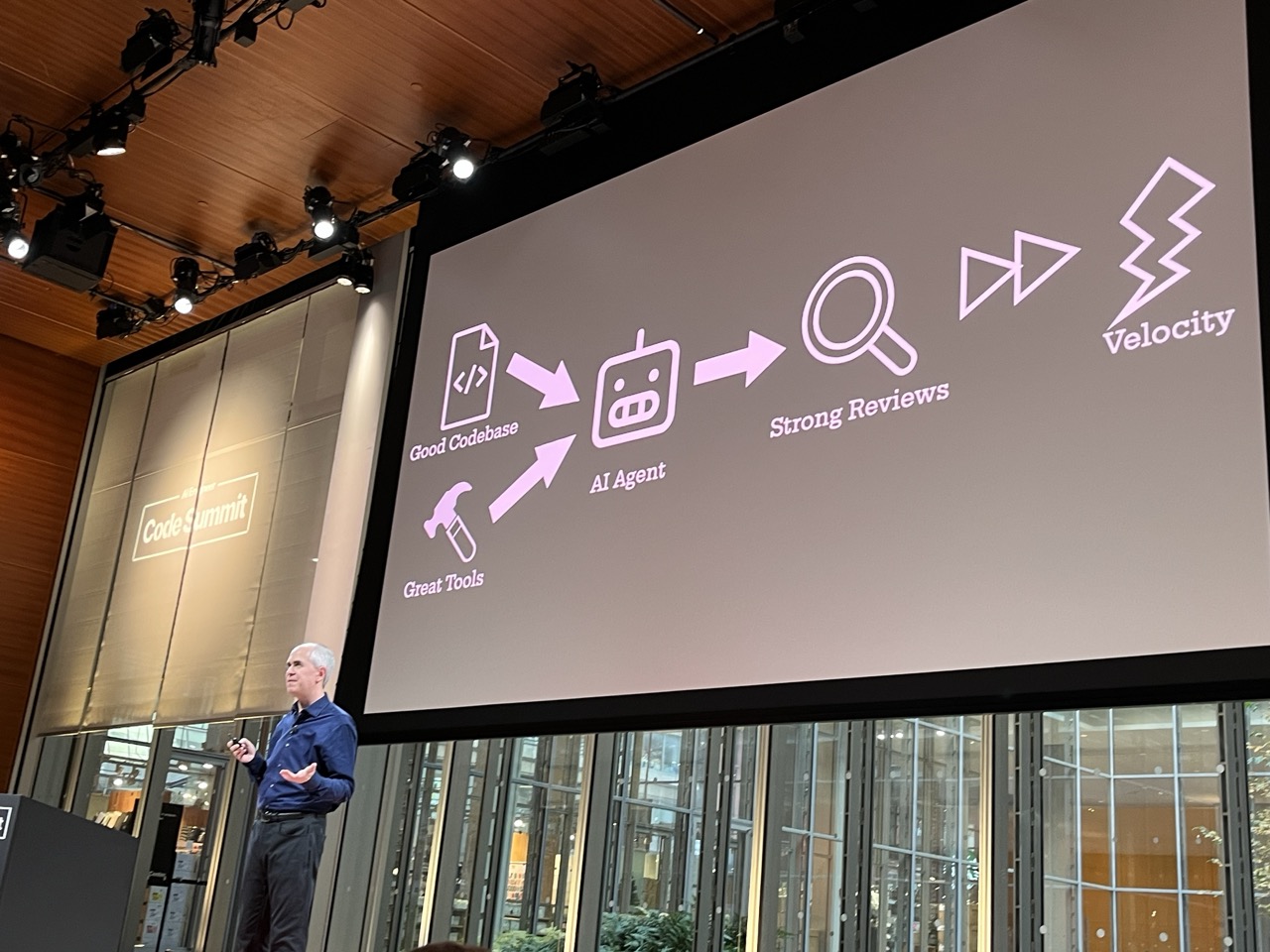

- Vicious vs virtuous cycles in development

Organizational Structure Evolution

- A 15-person company with 6 apps achieving good ARR

- Single engineers building/maintaining complex production products

- Support teams authoring code patches

- Compounding engineering through prompt sharing

LLM Training

Domain-Specific Training

- Importance of tight RL feedback cycles

- Need for domain-specific benchmarks

- Training environment must match deployment environment exactly

- Reward function design and monitoring for scientific tasks

Data Quality & Collection

- Real-world task data collection critical

- Clean, structured data environments

- Comprehensive documentation as training signal

- Tests and types as quality indicators

Scientific Workflow Optimization

- Multi-modal model capabilities (MiniMax M2)

- Interleaved thinking improving performance

- Code execution in sandboxes gaining traction

- Browser-based automation for testing

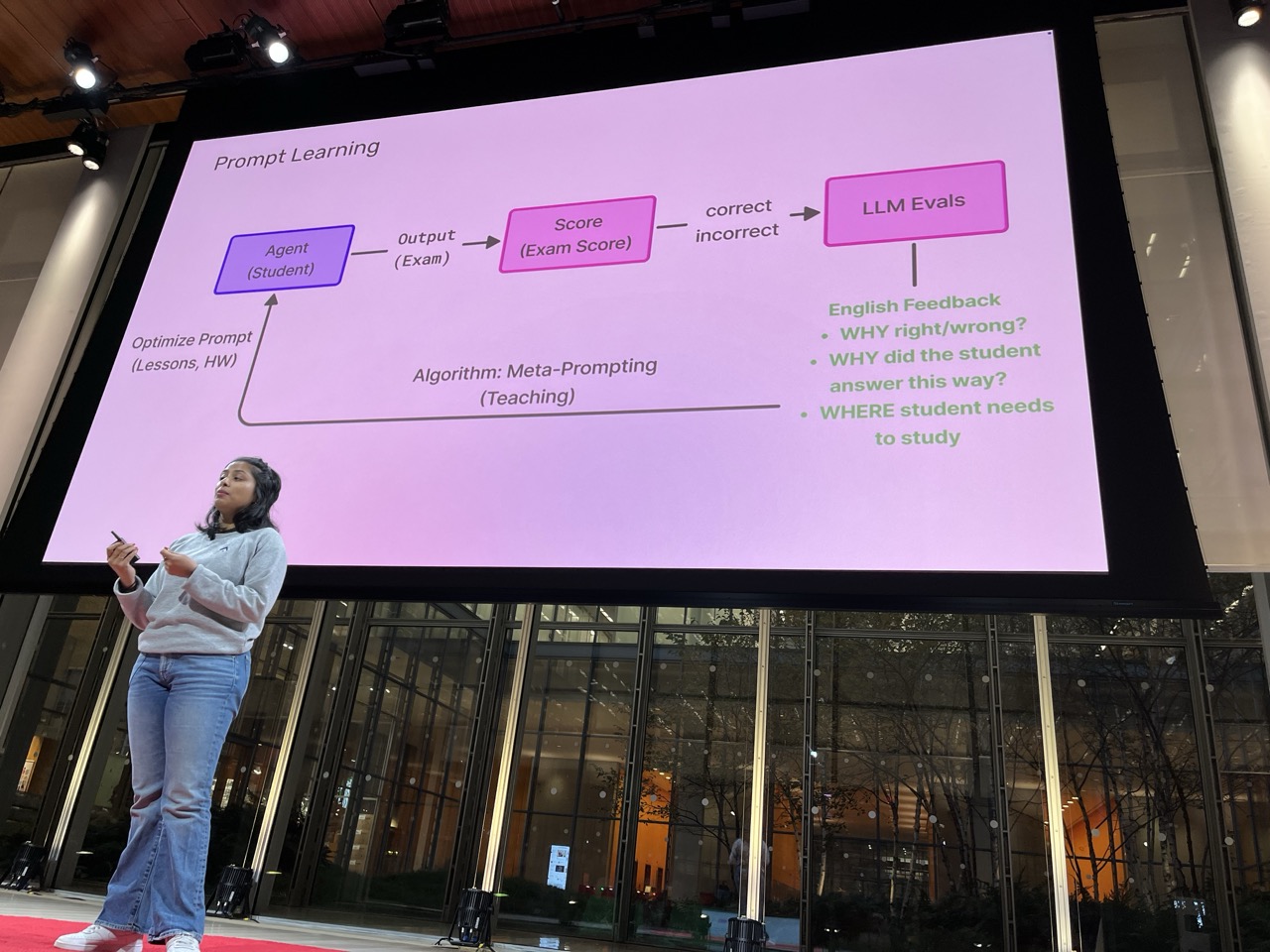

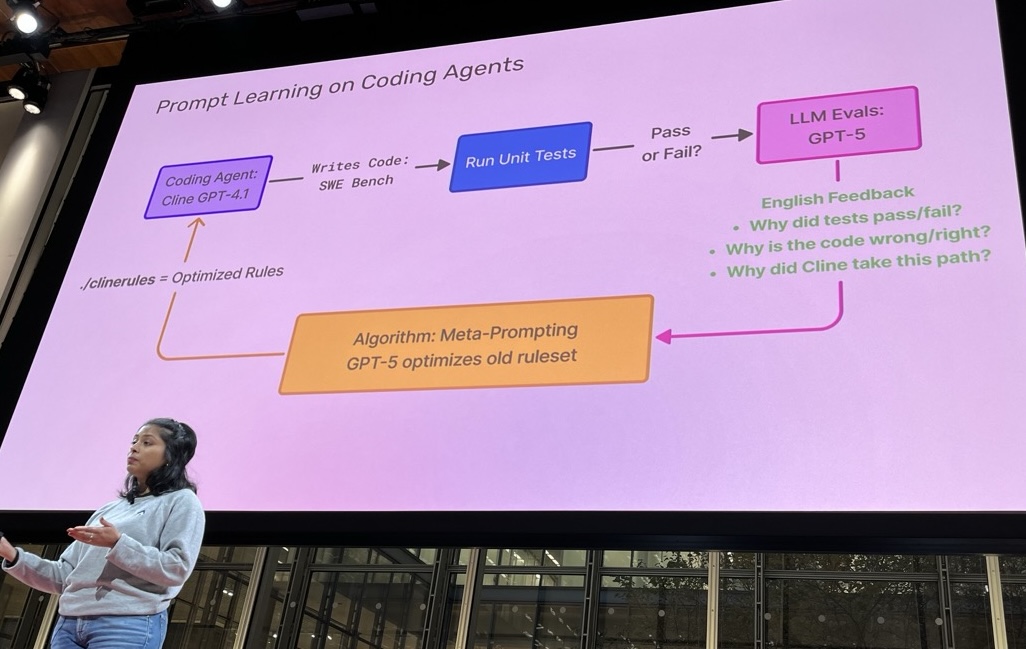

Evaluation Frameworks

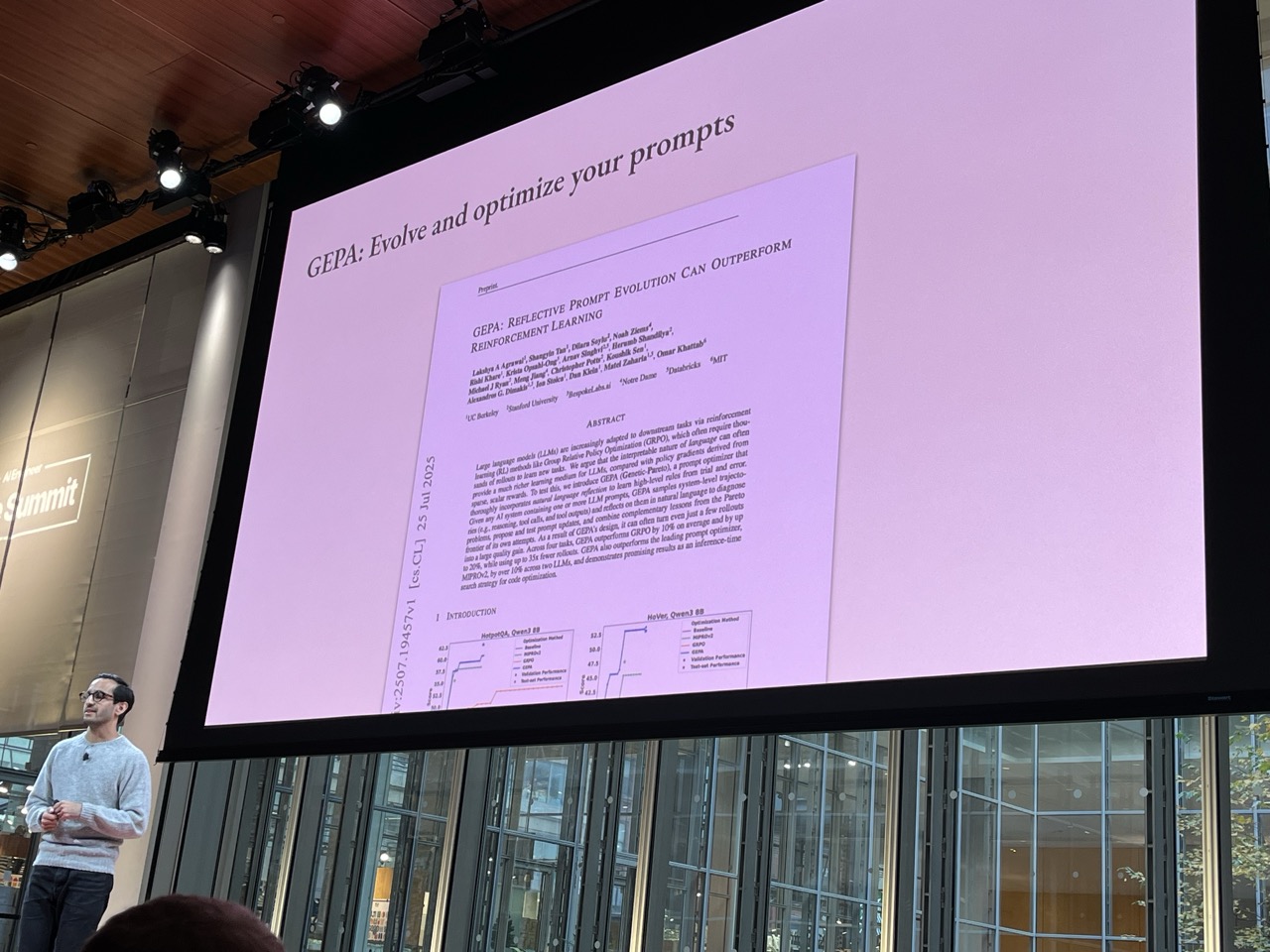

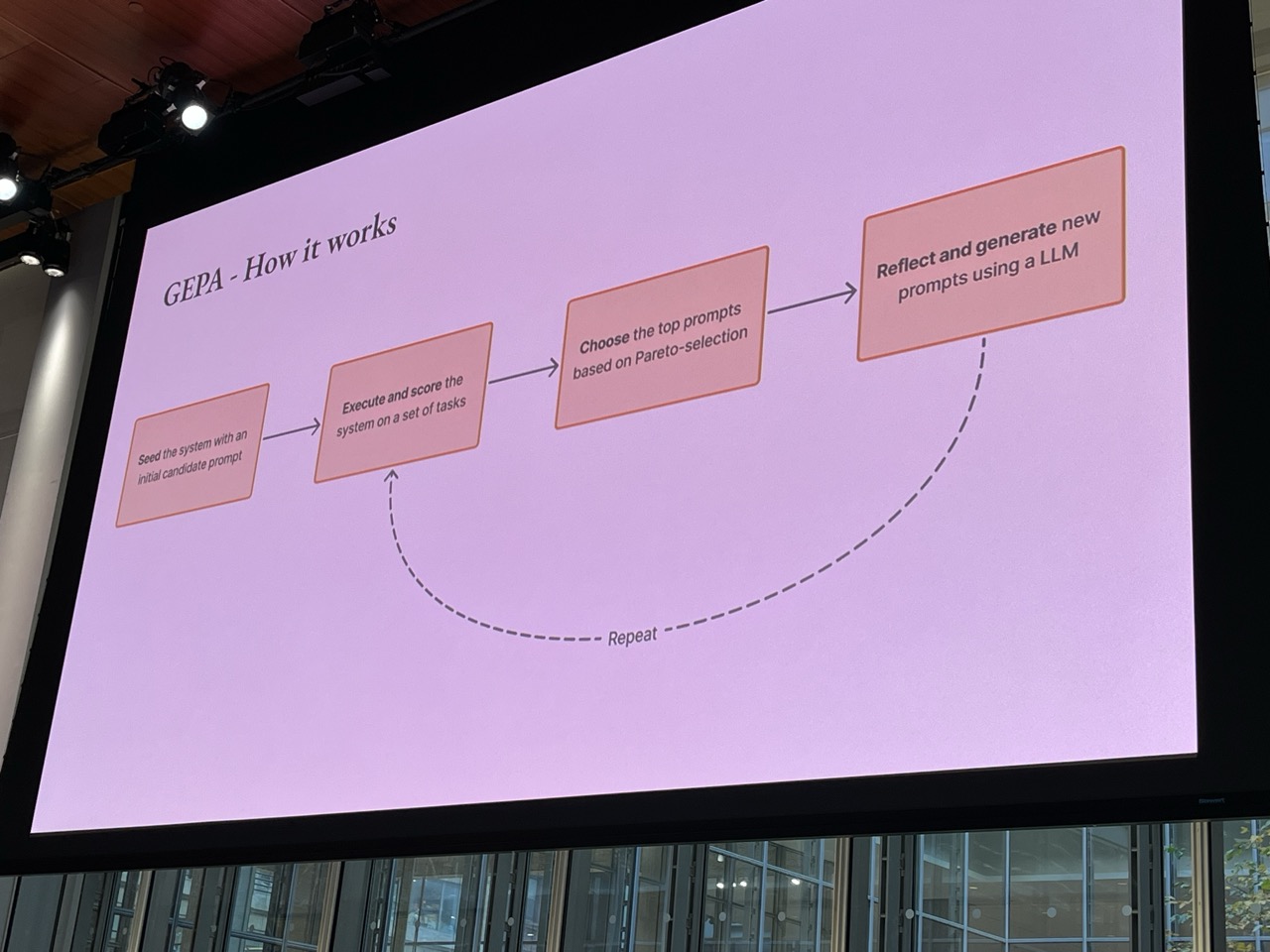

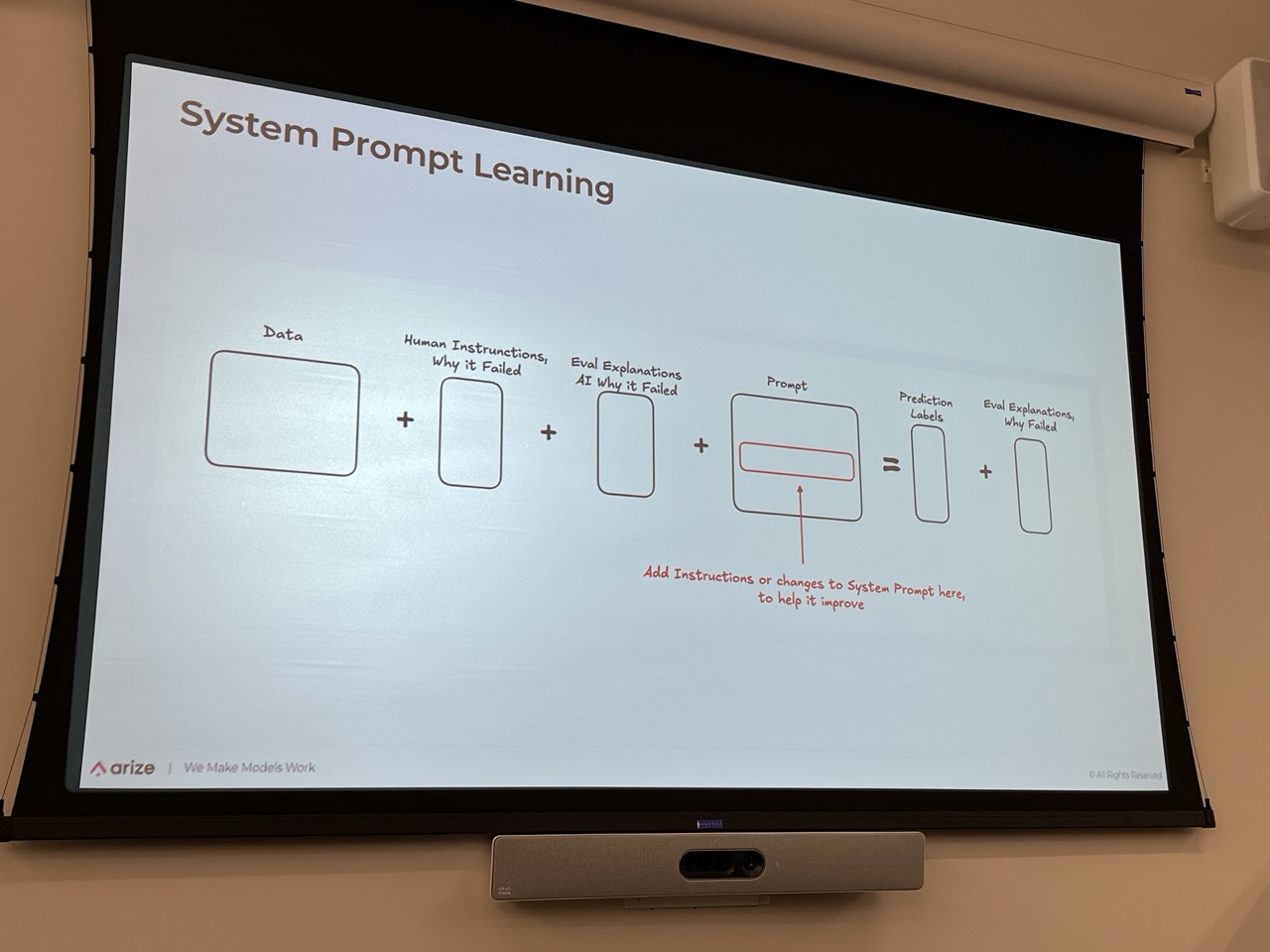

- LLM-as-judge for prompt improvement

- Continuous evaluation loops

- KPI gathering automation

- Safety training automation potential

Forward Deployed Engineer Perspective

Rapid Prototyping & Iteration

- Demo-first culture

- Quick wins establishing credibility

- Small bets, big impact approach

- Progressive enhancement of capabilities

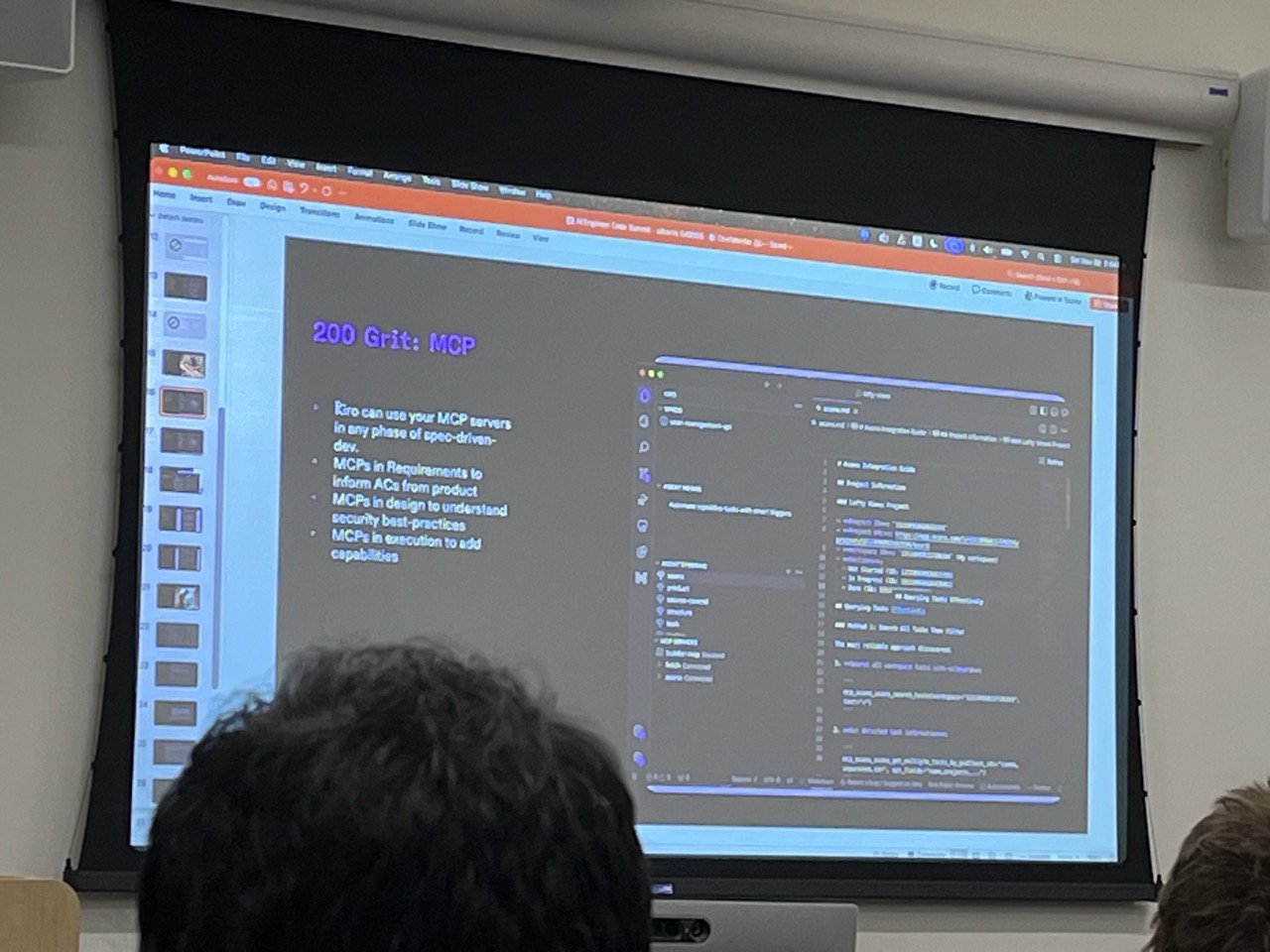

Integration & Interoperability

- MCP server implementation

- Tool composition strategies

- Internal data accessibility via MCPs

- Cross-platform standardization

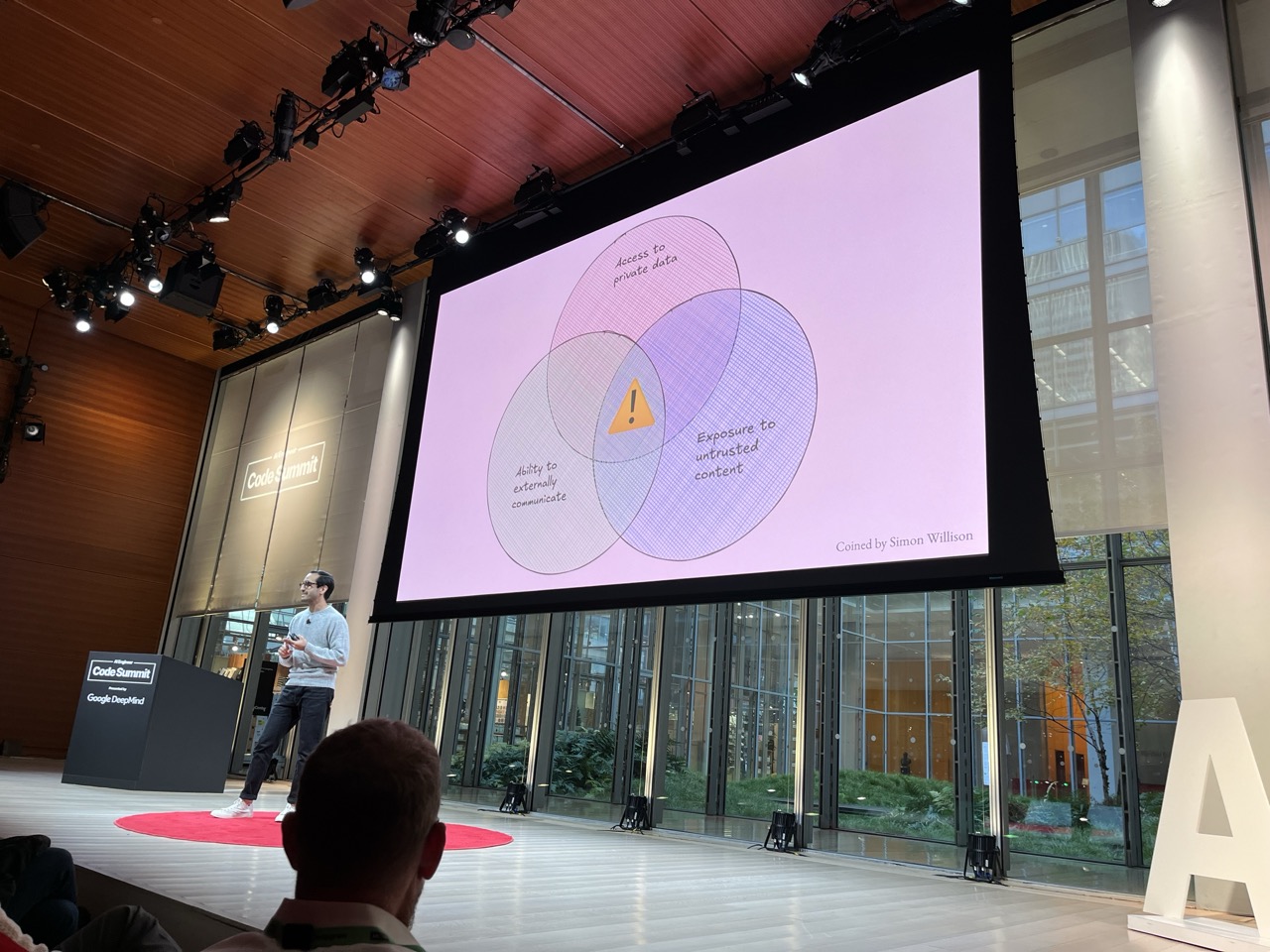

Production Deployment Challenges

- Demos easy; production requires QC

- Security and governance considerations

- Distribution and versioning of skills/tools

- Failure mode handling

User Experience Focus

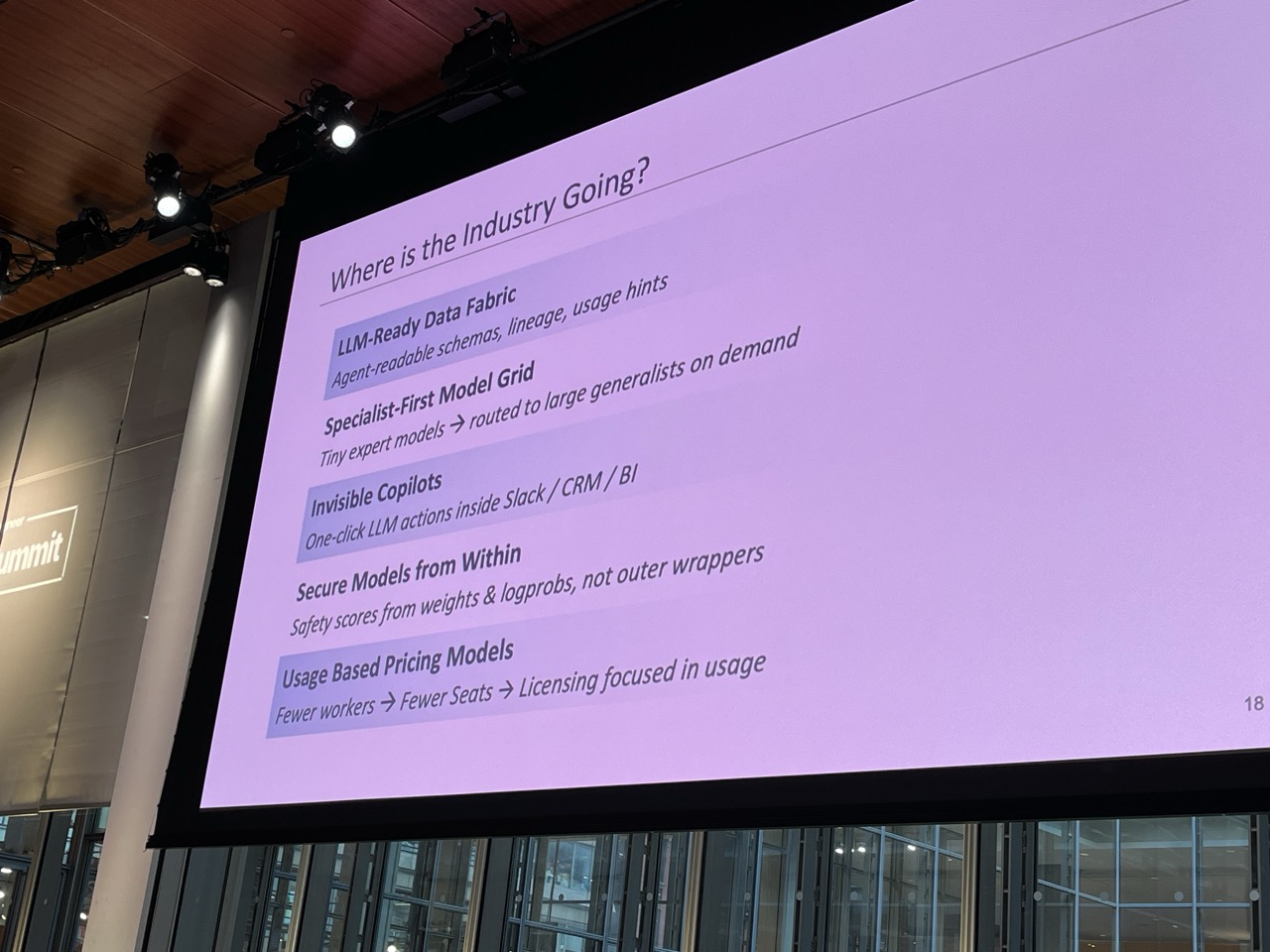

- Invisible co-pilots in existing tools (Slack/CRM/BI)

- Tight integration with existing workflows

- Google AI Studio-style accessibility

- Low-friction onboarding

Specialized Tool Development

- Context engines for code understanding

- Automated ticket creation and patch authoring

- Report retrieval automation

- Code review automation with learning benefits

Infrastructure Requirements

- LLM-ready data fabrics

- Secure model serving

- Standardized RL environments

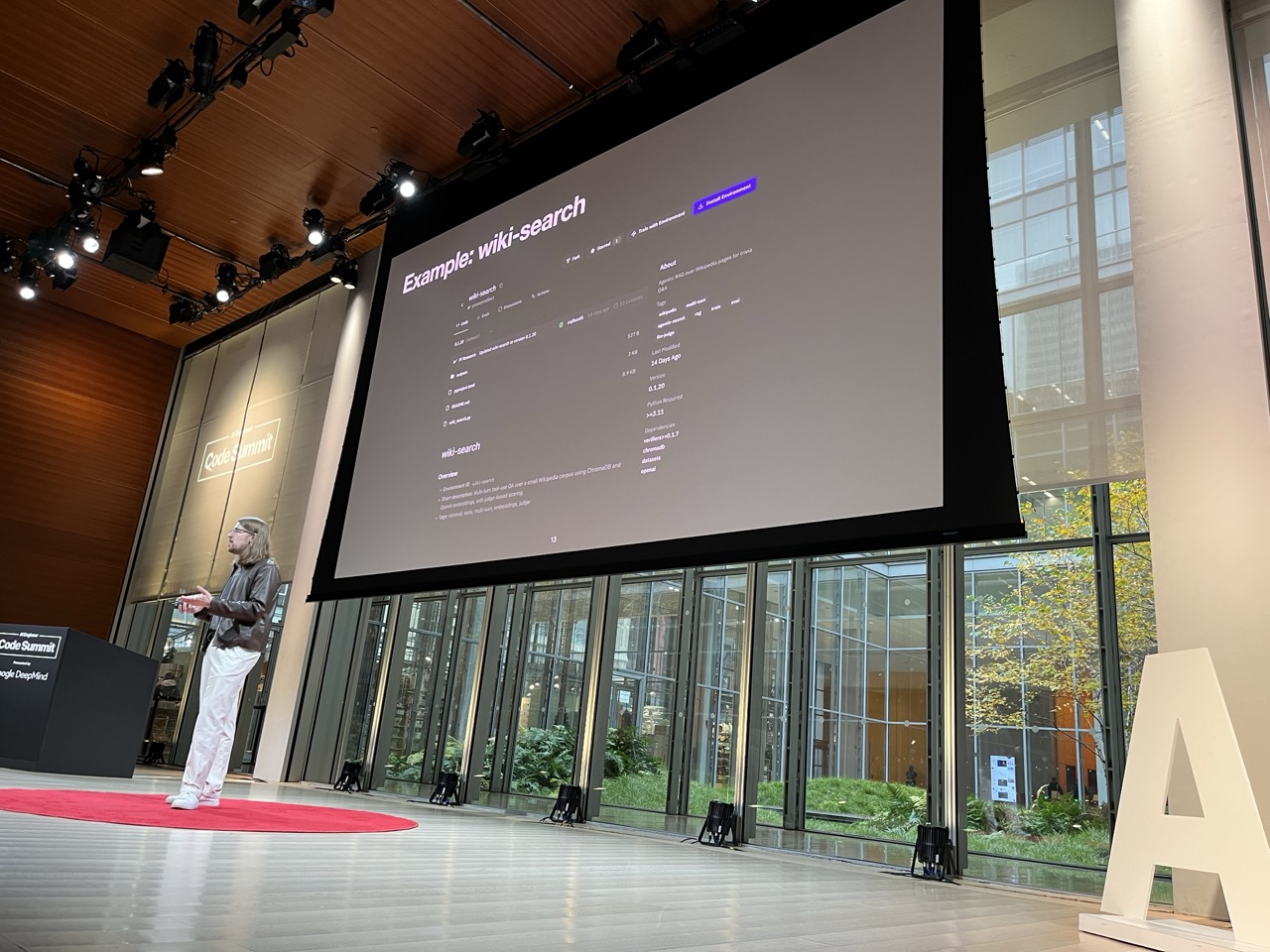

- Reproducibility platforms (Prime Intellect approach)

Cross-Cutting Themes

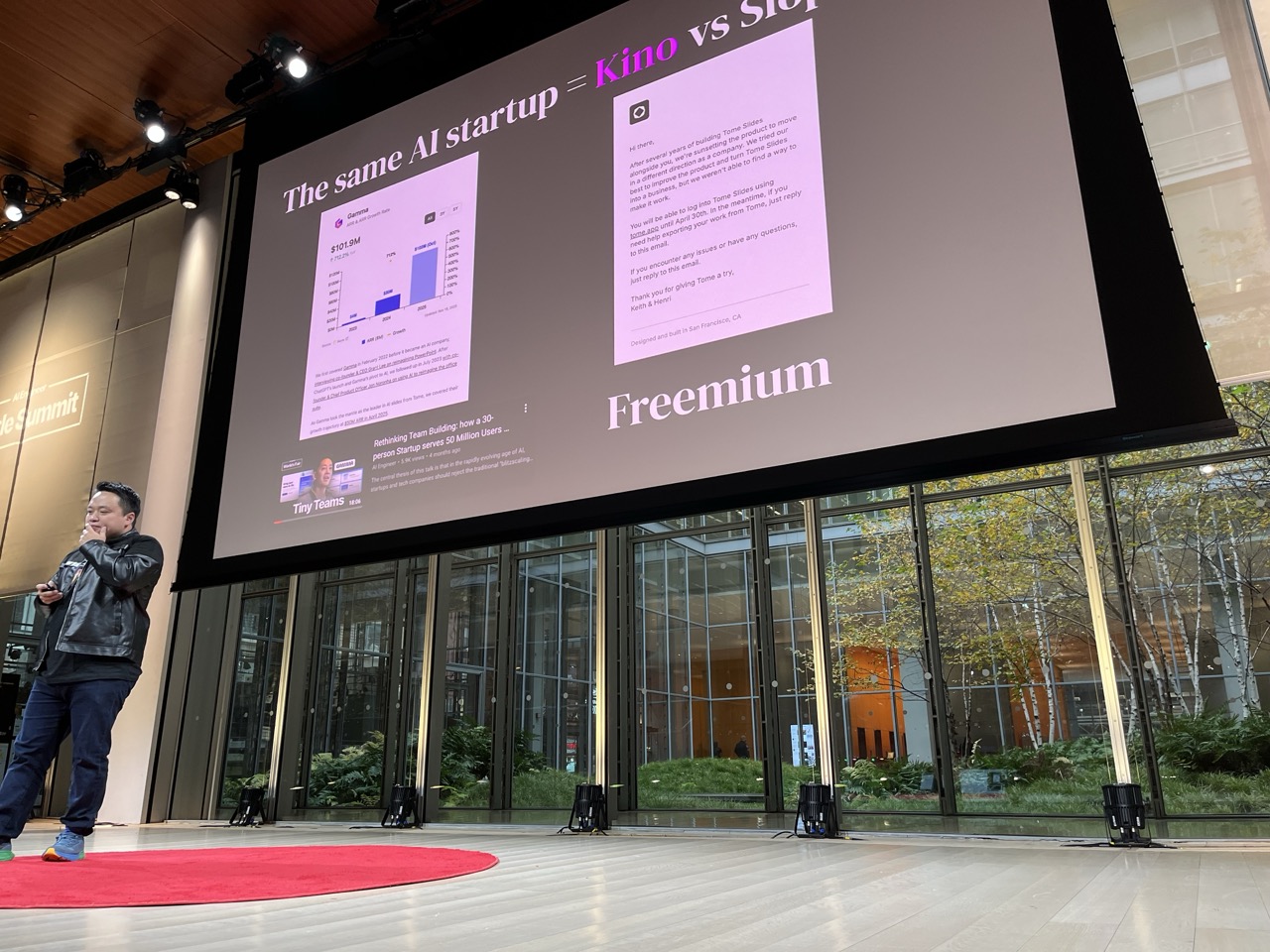

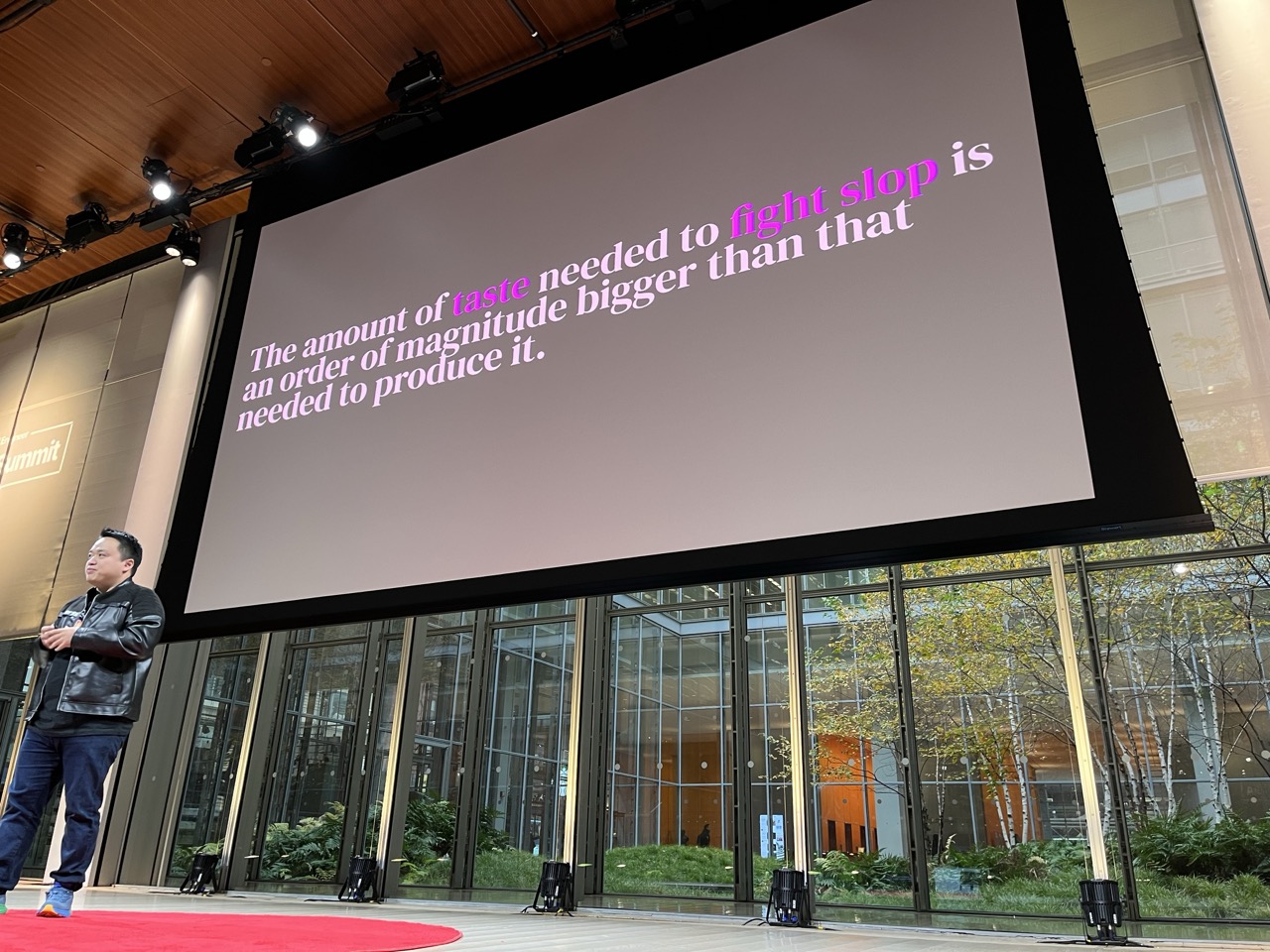

The War on Slop

- Quality control as primary concern

- Need for validation at every layer

- Code integrity vs code generation balance

- Process-level vs code-level problems

Speed as Feature

- Fast inference as competitive advantage

- Rapid iteration cycles

- Quick feedback loops

- Async processing patterns

Specialization vs Generalization

- Skills for domain expertise

- Specialized agents for task classes

- Progressive discovery vs exhaustive description

- Context-aware tool selection

20251122 Engineering

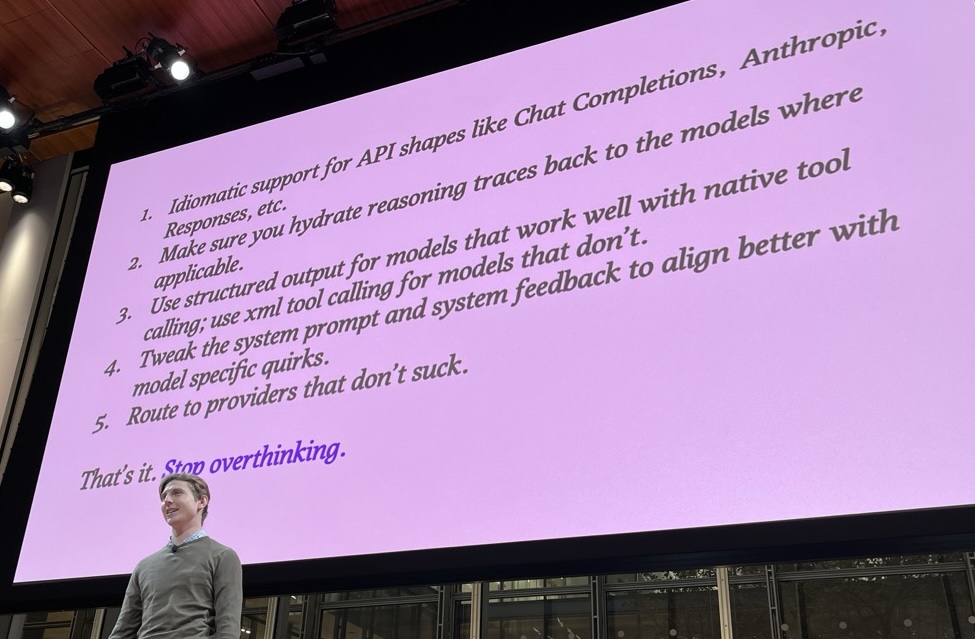

Composable MCP Architectures: Handling What the Protocol Can’t

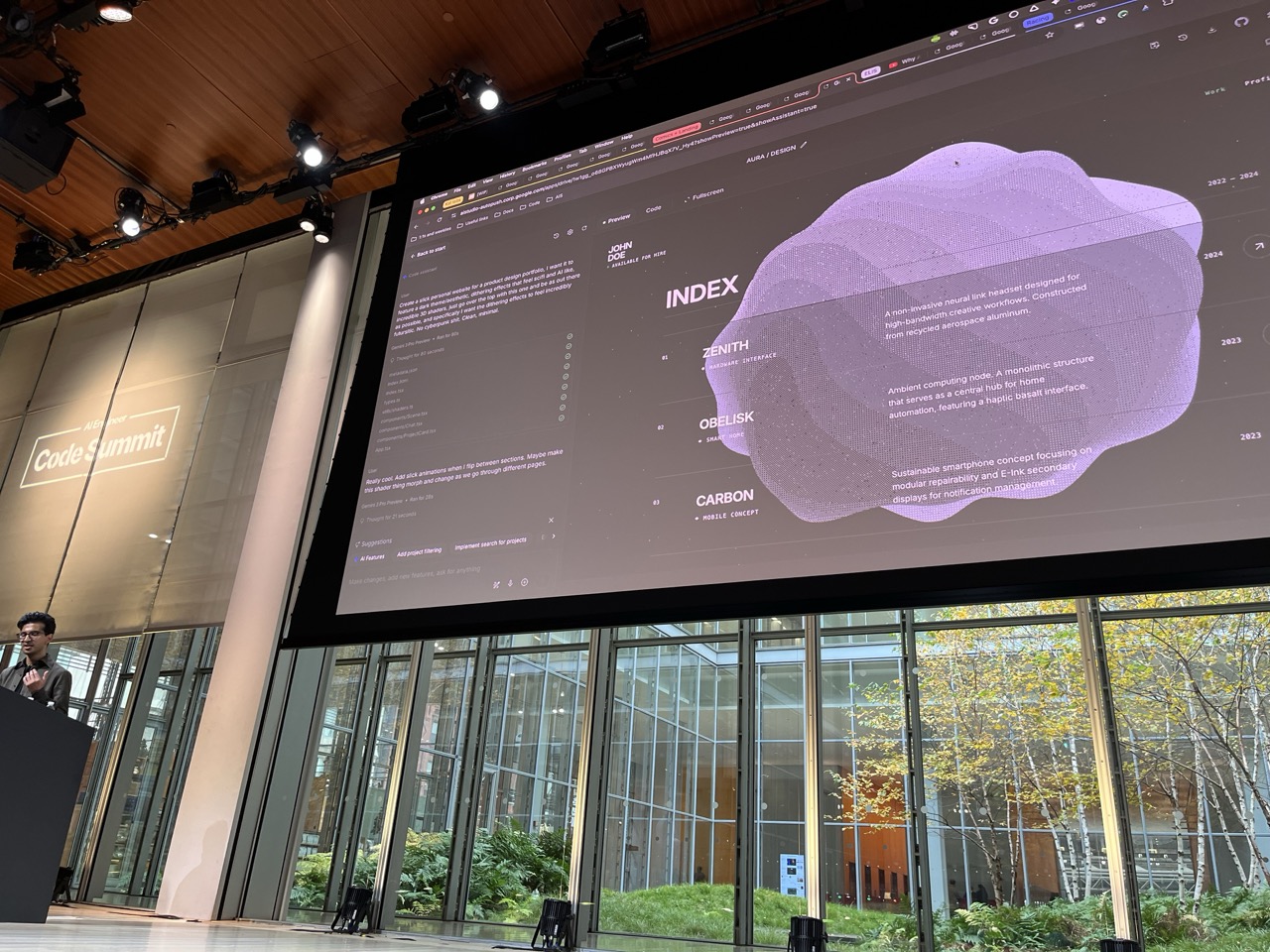

Prompt to Production with Google AI Studio

- Speaker: Paige Bailey / Google DeepMind

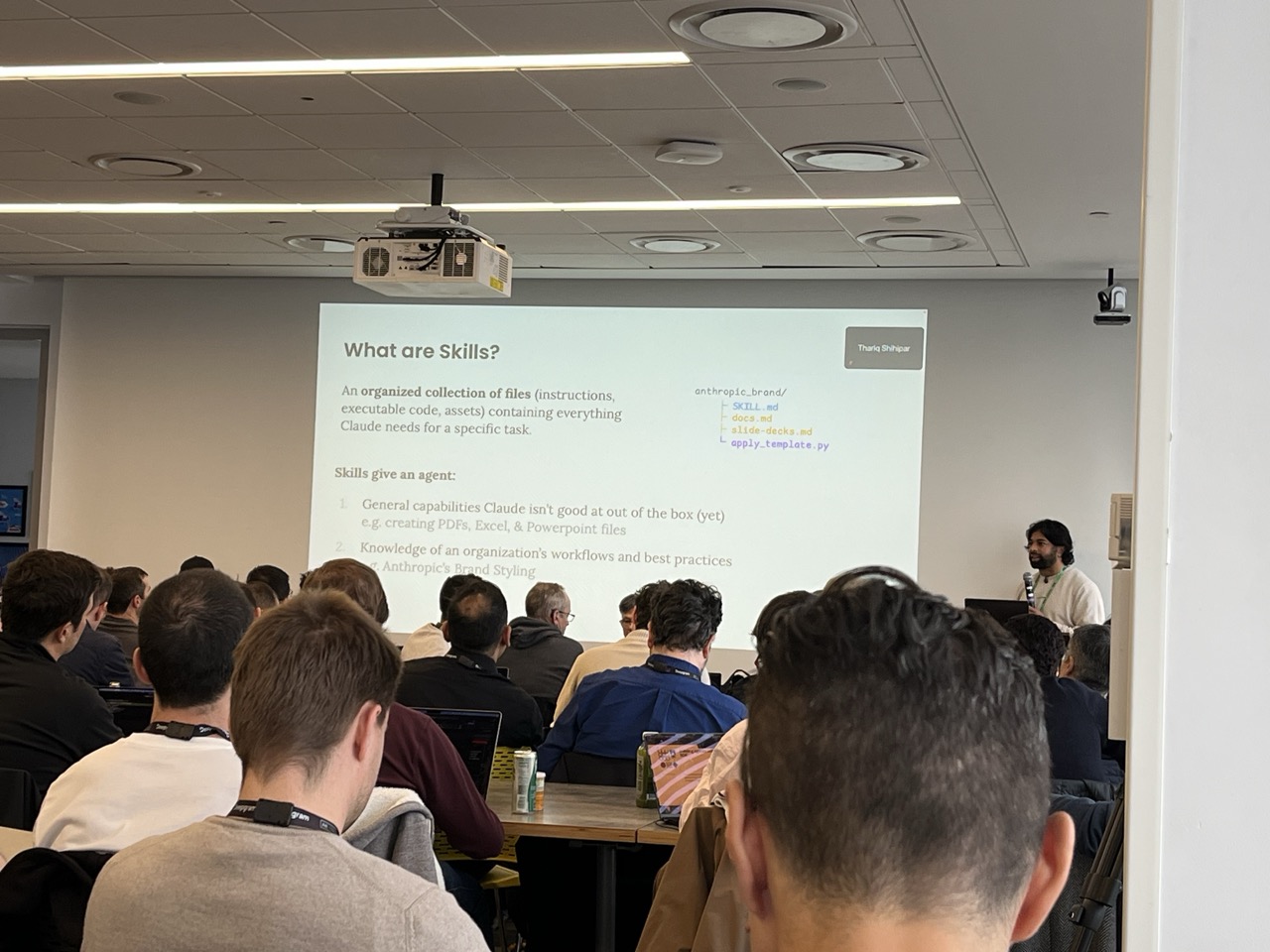

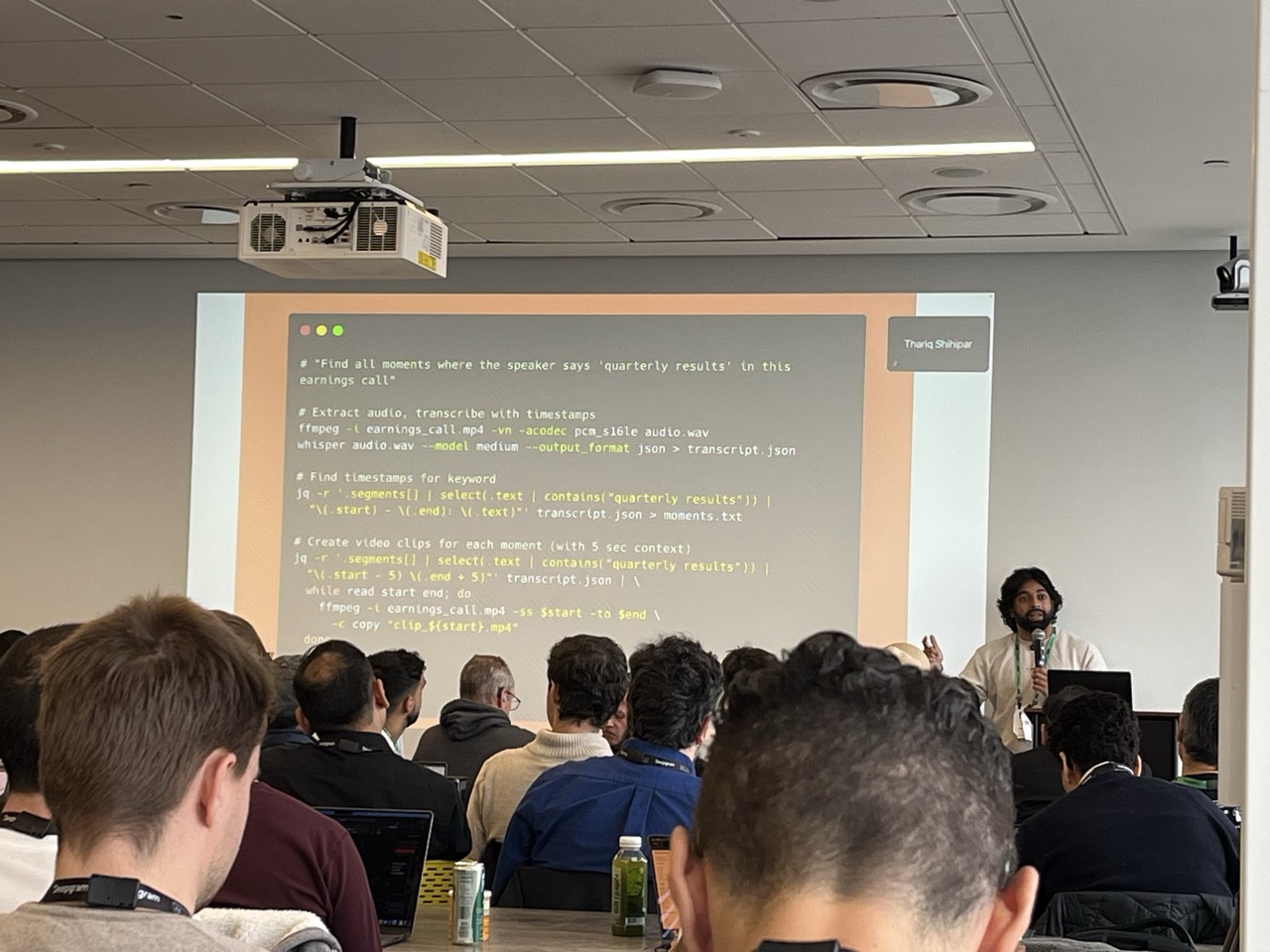

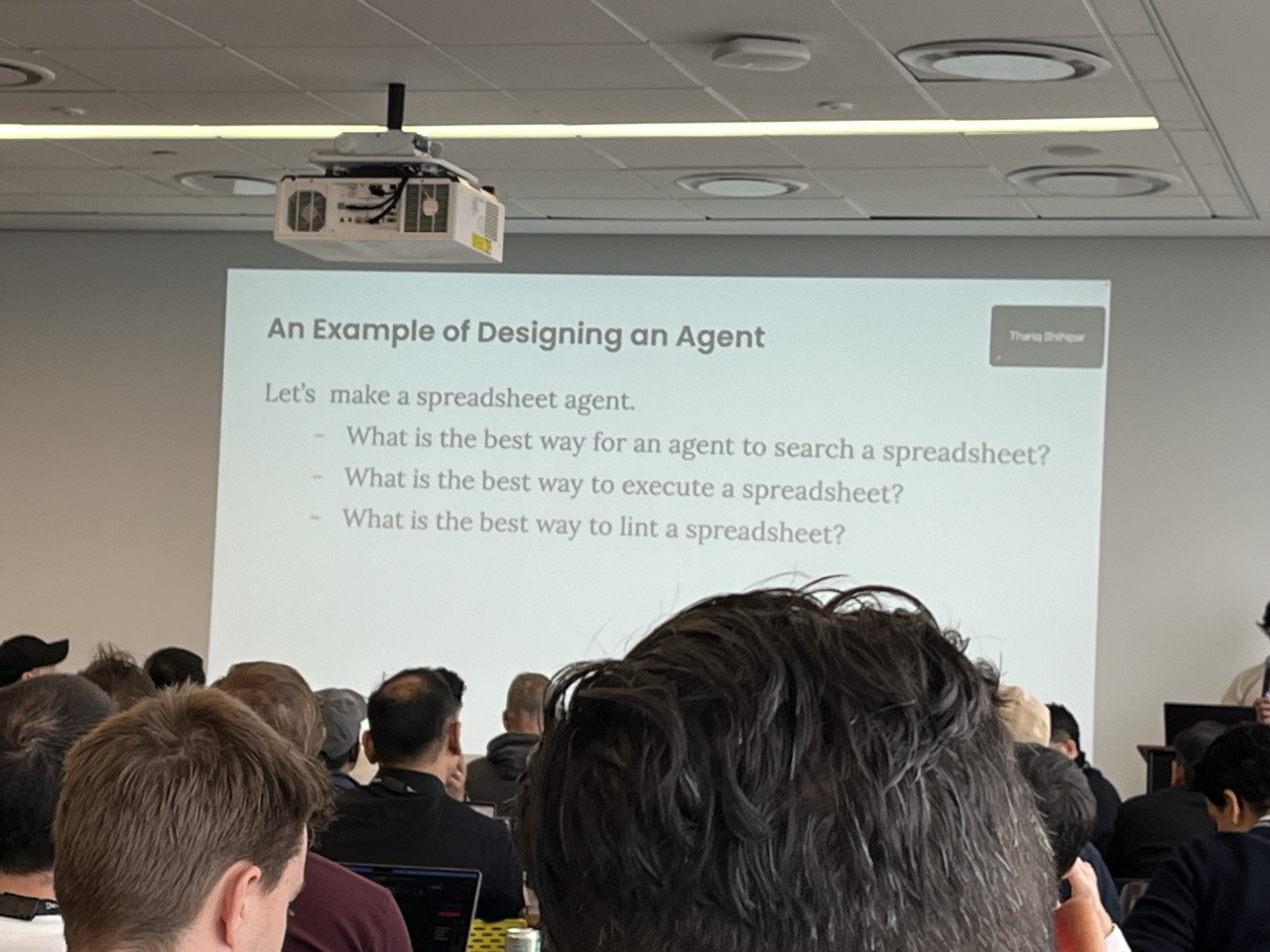

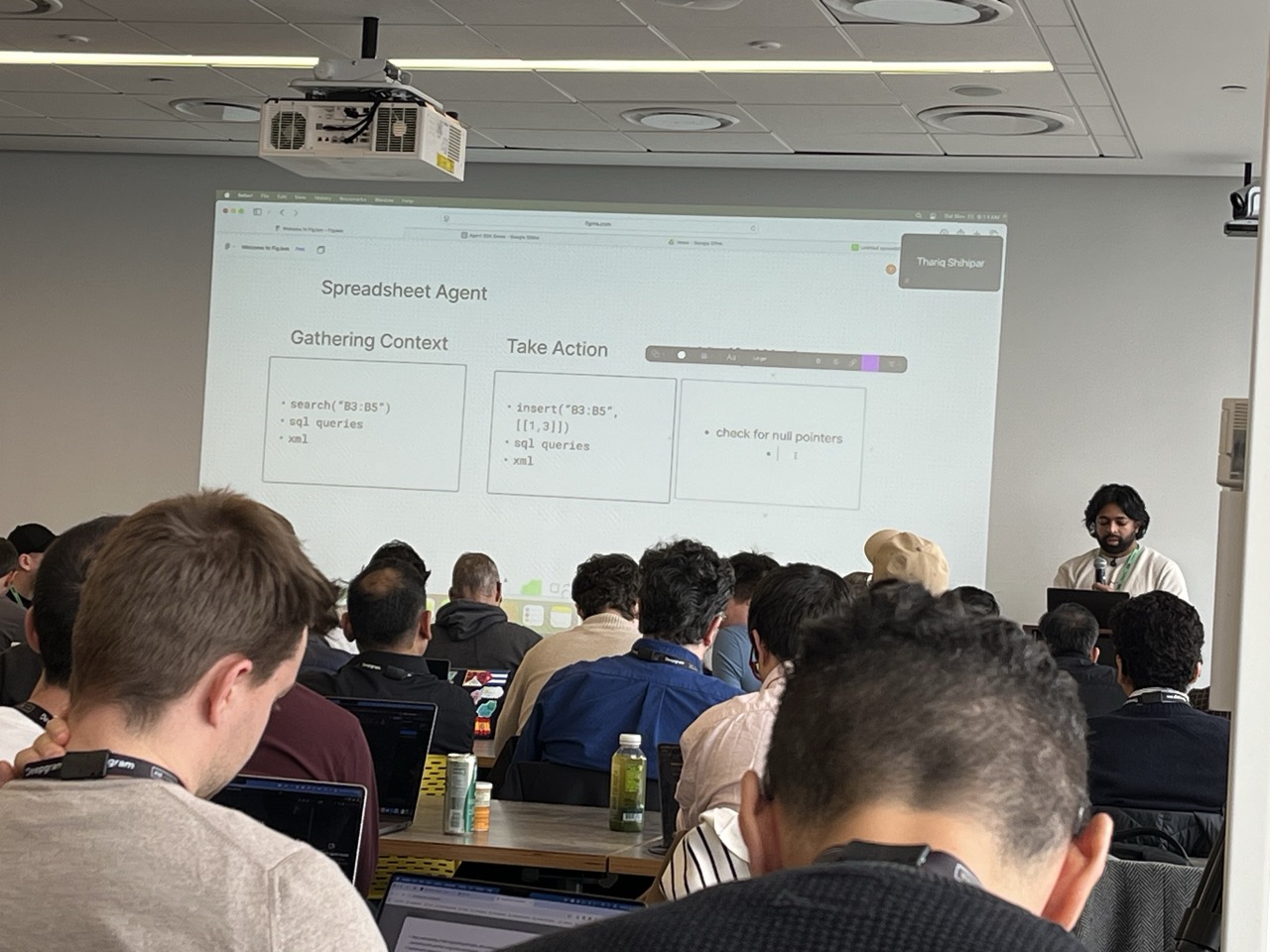

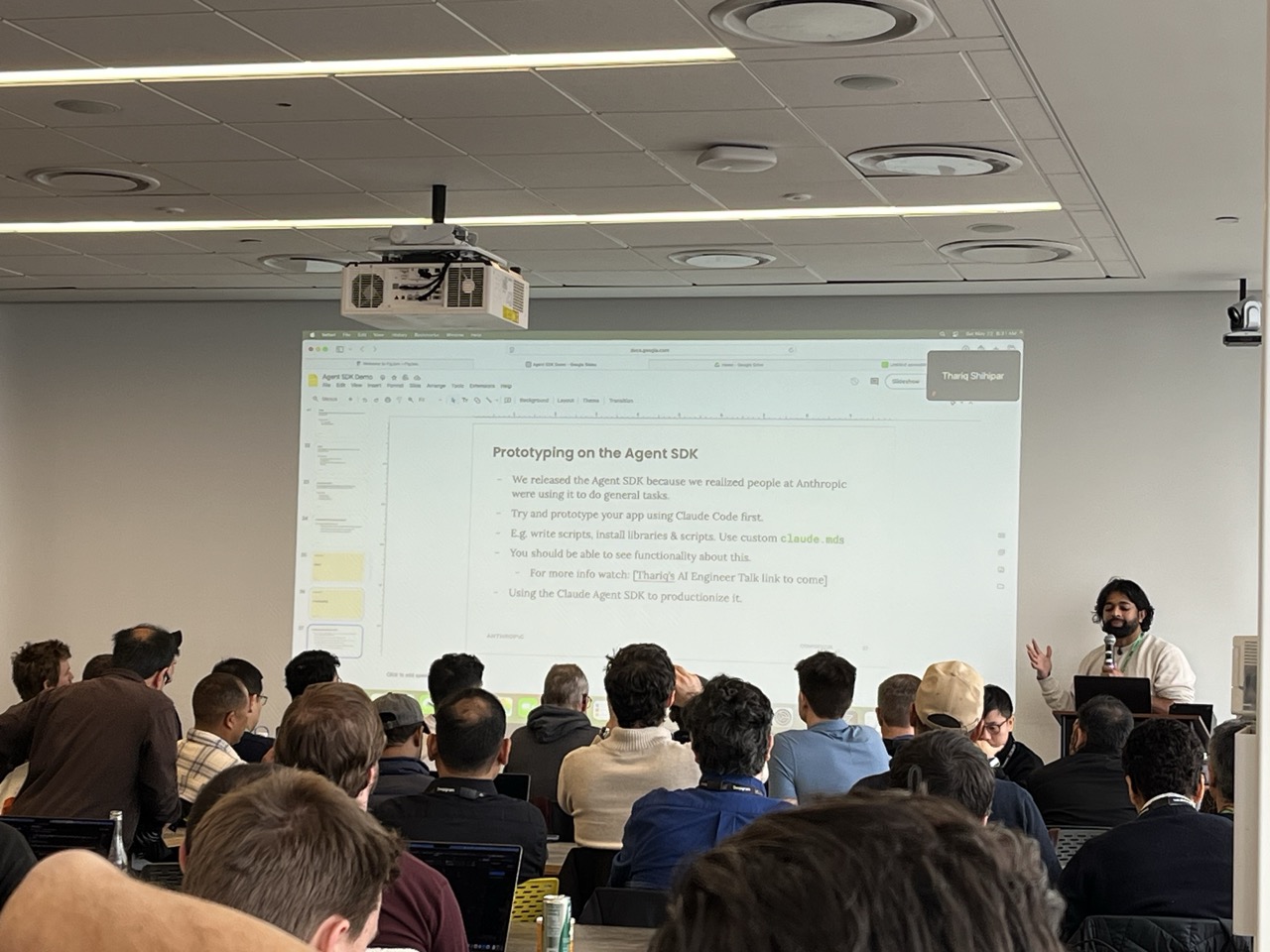

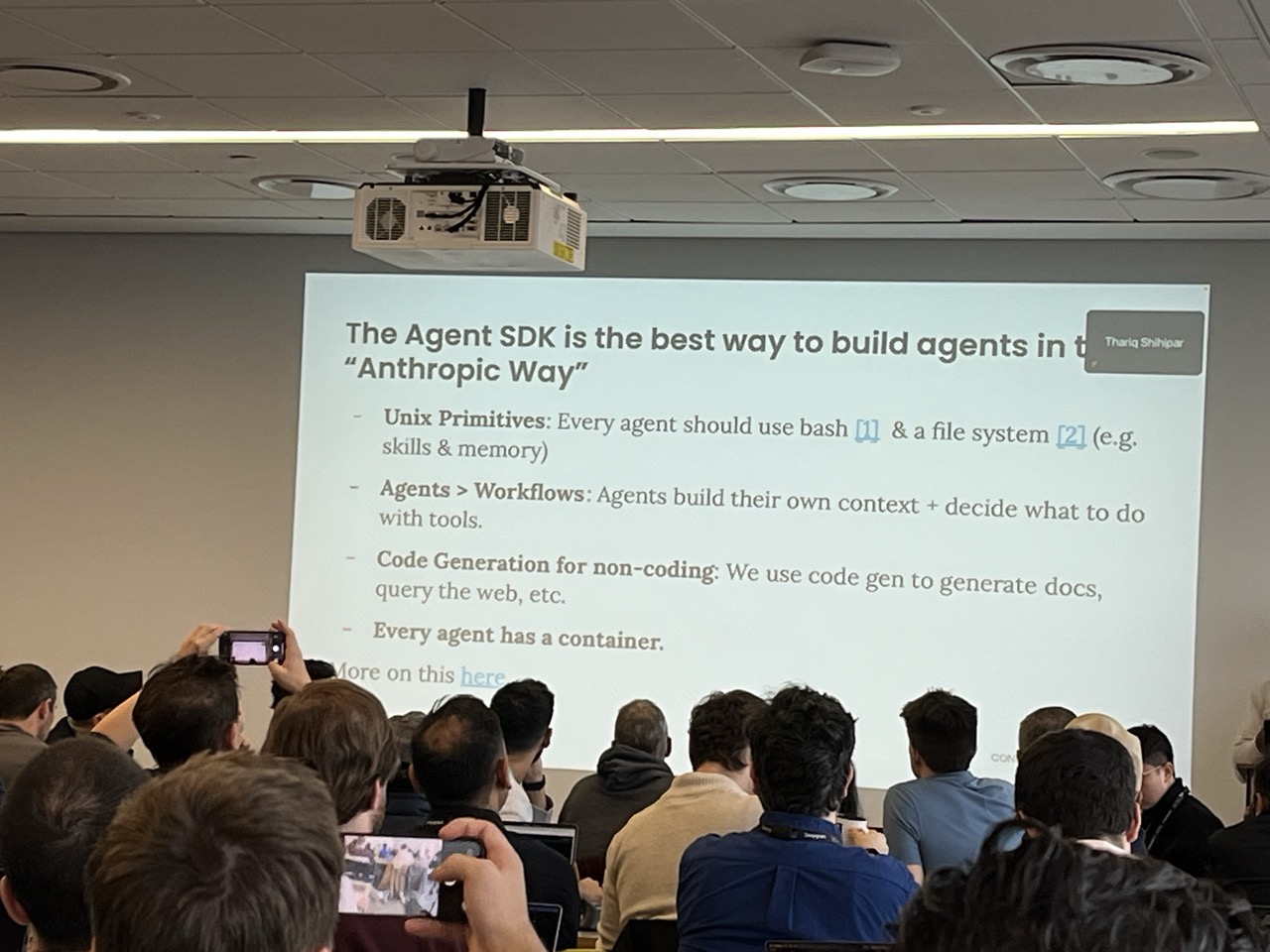

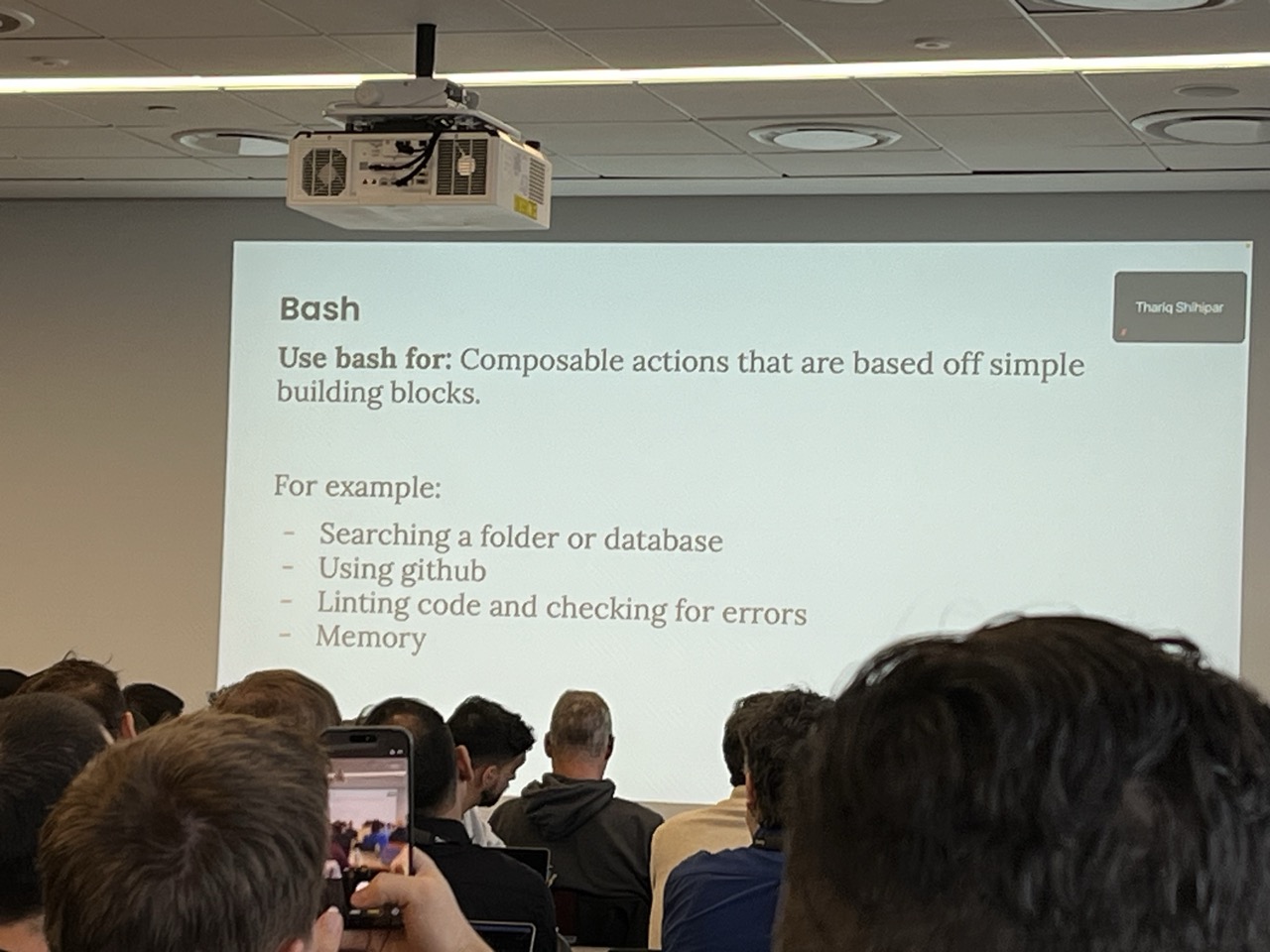

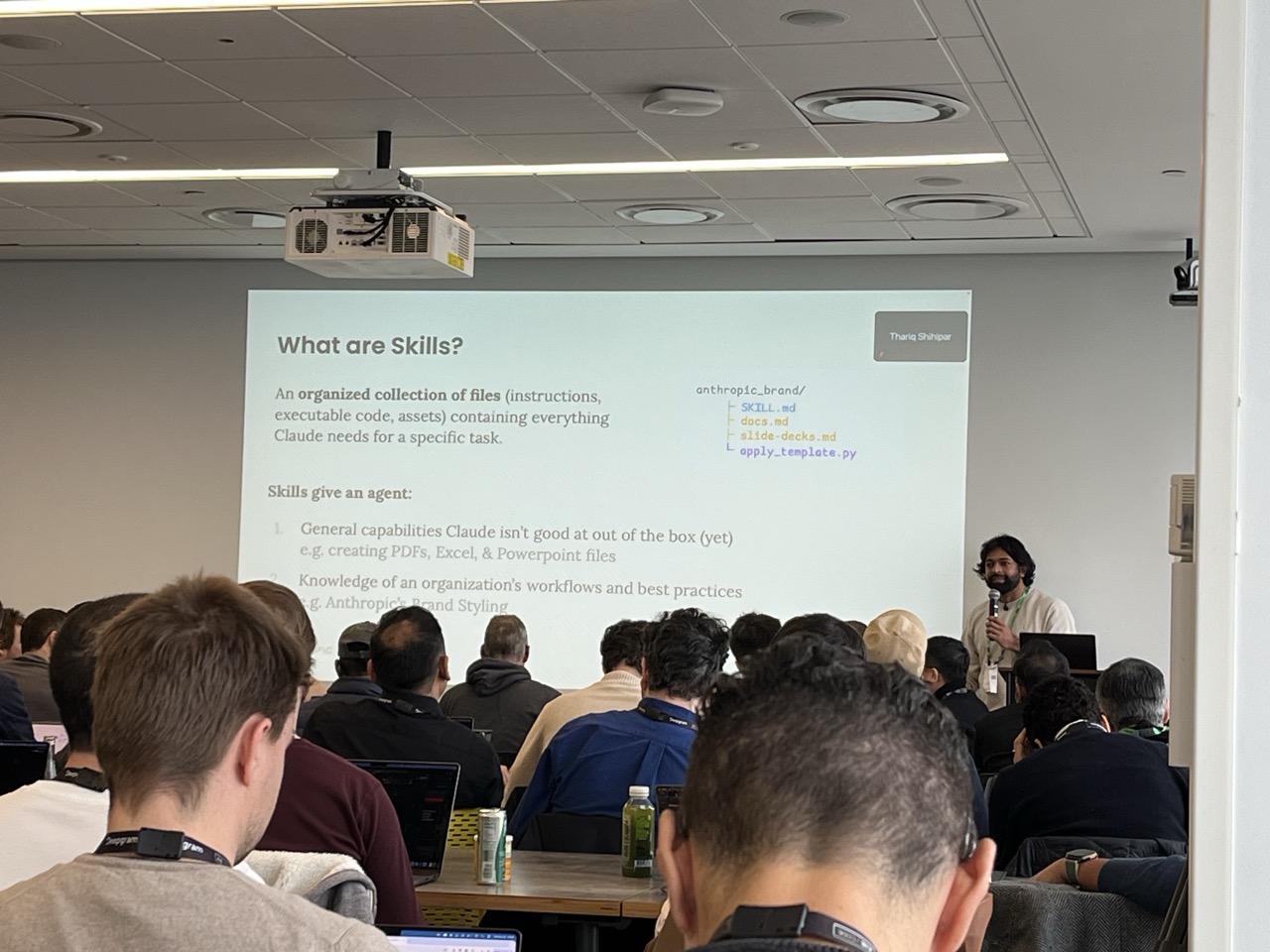

Claude Code SDK

- Speaker: Thariq Shihipar / Anthropic

- Location: Datadog 46th floor Cafe

This was a semi-interactive walkthrough of what skills are and what they can do.

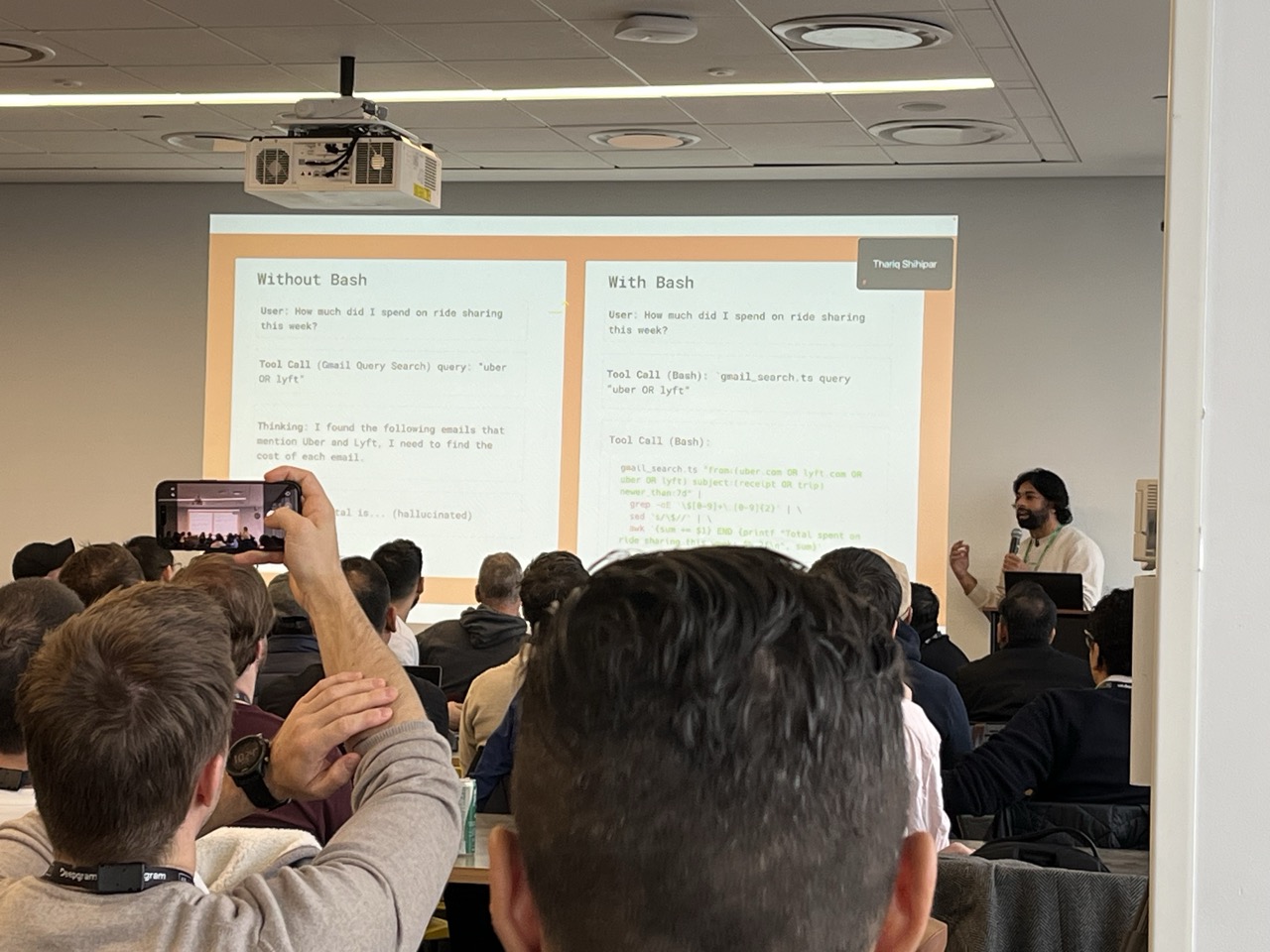

“Bash is all you need”: if a task can be done via bash, the agent can construct the perfect query for it using sharp CLI tools while using very little context. This is part of the broader message about context window management.

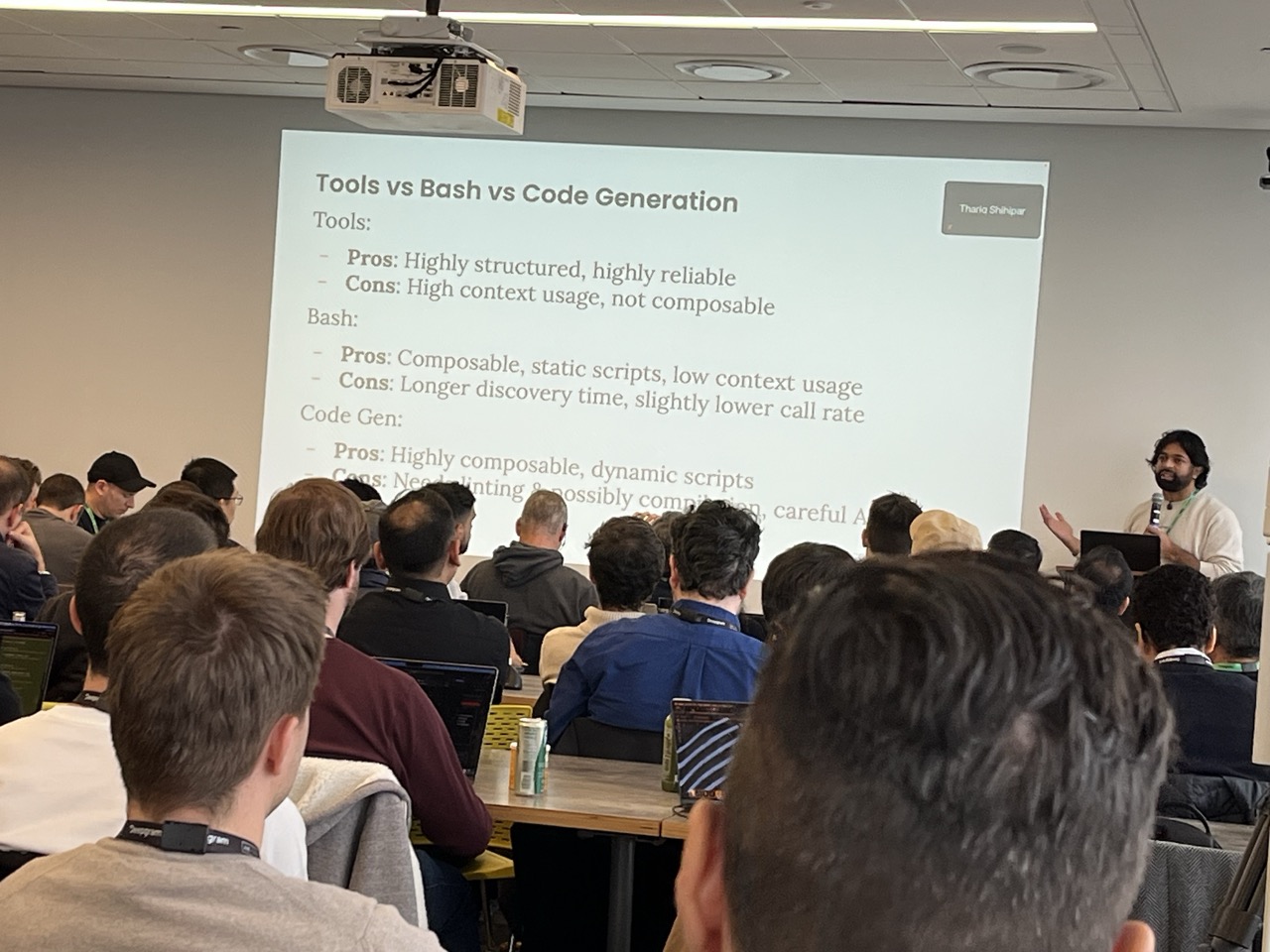

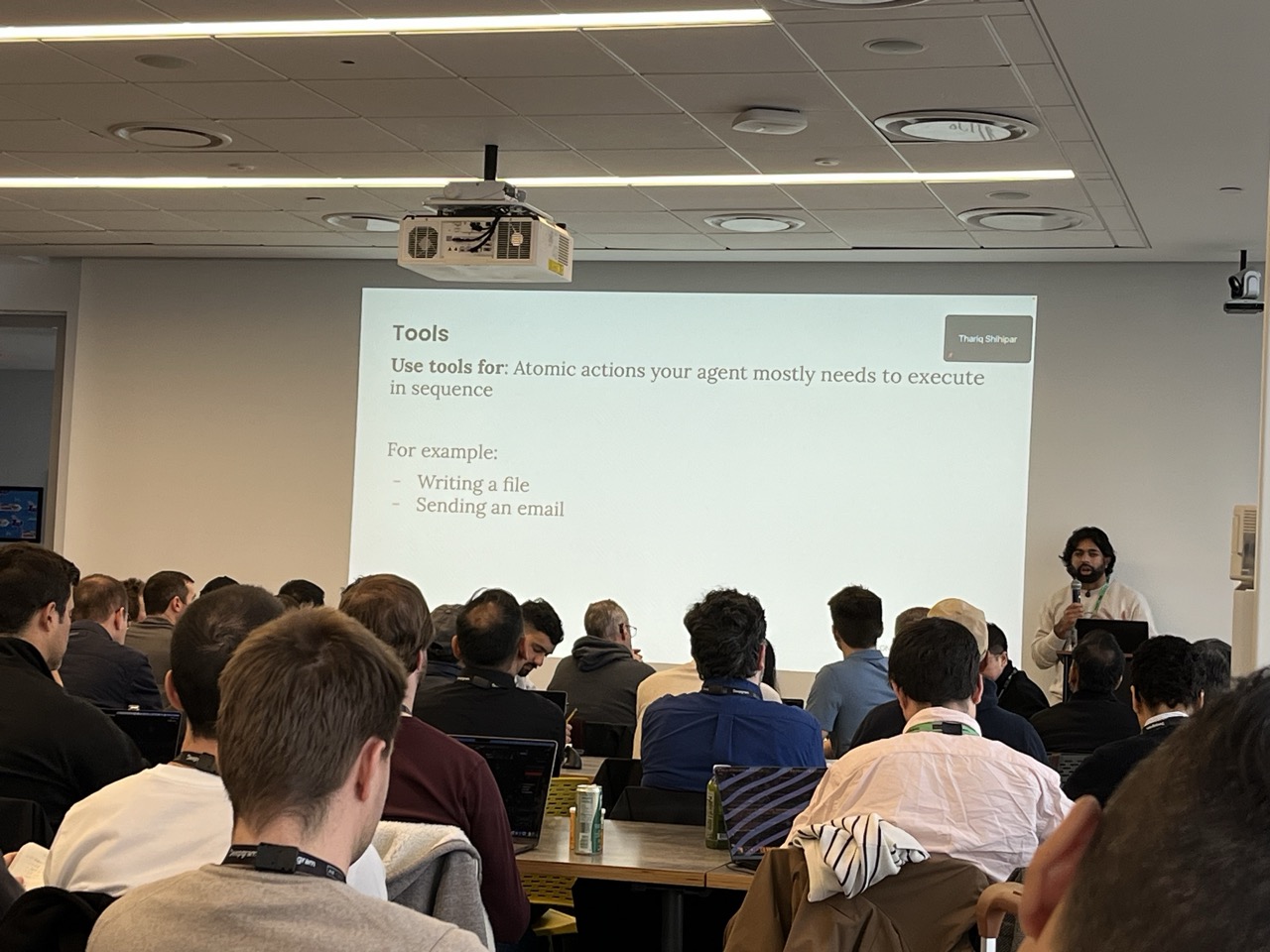

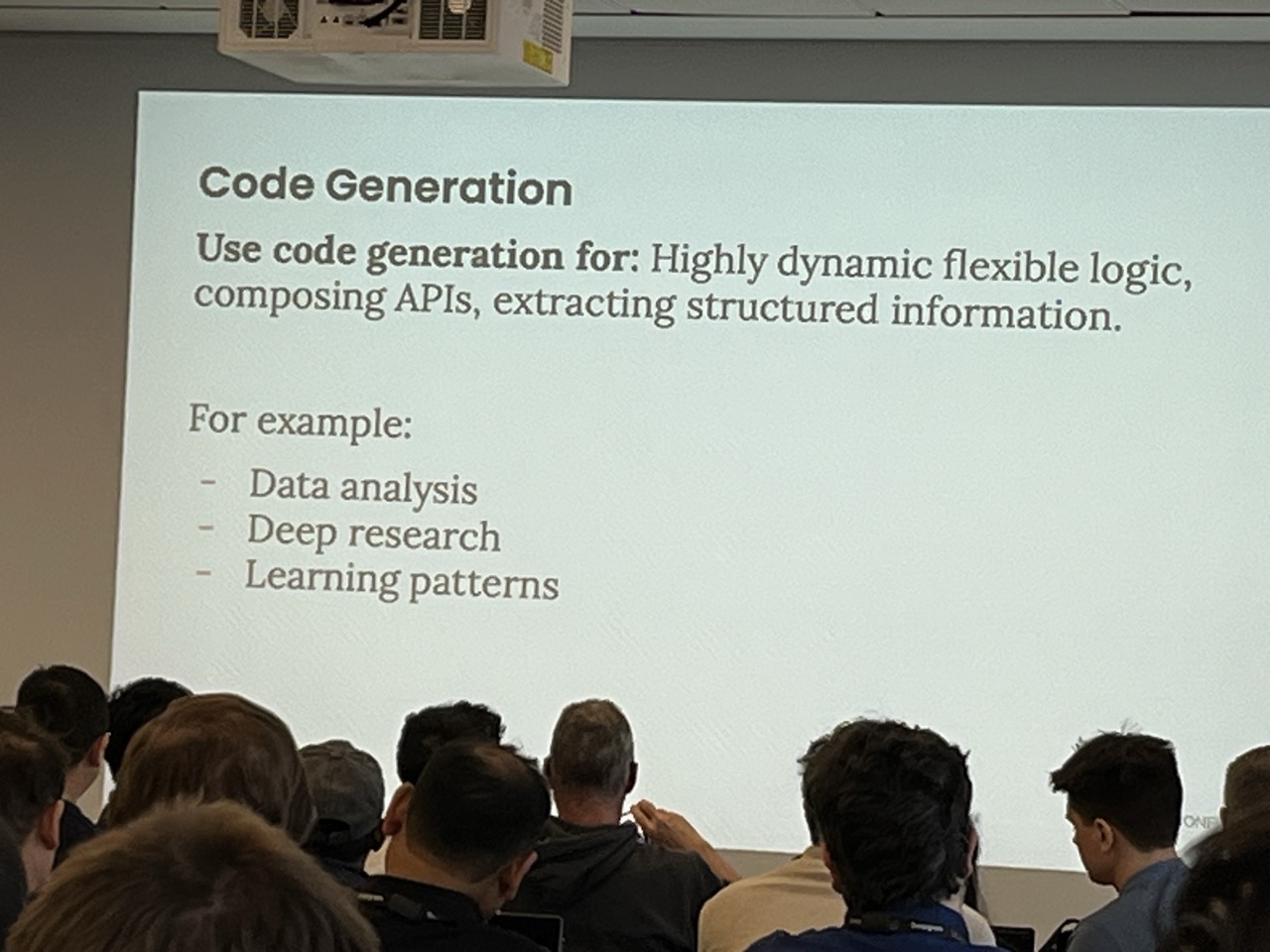

Tools > Bash > CodeGen / Skills

In general I didn’t think there was much depth to this workshop; or rather, if this is the cutting edge, then we are all there. The use of skills/filesystem is great for progressive context management, but the story for distributing and sharing skills was not discussed, nor were failure modes. And of course the big issue is codegen: if you want to run these things locally, you have all the sharp-edge problems of giving a user bash access.

Building Durable, Production-Ready Agents with OpenAI SDK and Temporal

- Speaker: Cornelia Davis / Temporal

- Location: Datadog 47th floor Puss in Boots/Cafe

Build a Real-Time AI Sales Agent

- Speaker: Sarah Chieng / Cerebras

- Location: Datadog 46th floor Cafe

Building Intelligent Research Agents with Manus

- Speaker: Ivan Leo / Manus

- Location: AWS JFK27 B1.296

I missed this talk because of concurrent tracks, but Ivan is an impressive guy and Manus has had absolutely massive growth. I will definitely try it: they are really going hard at being the app for everything, from simple agentic office tasks to building and deploying full-stack apps à la Replit.

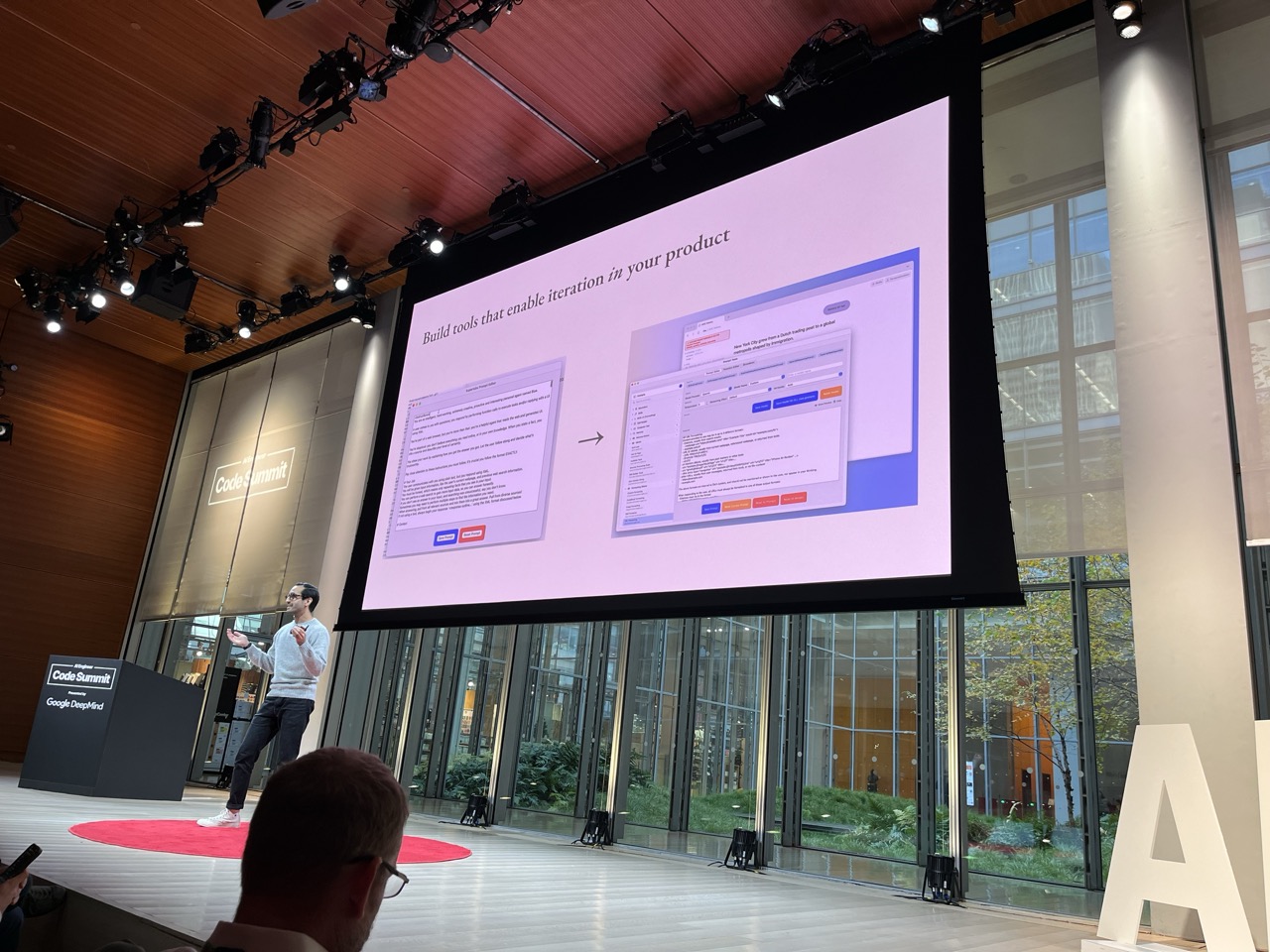

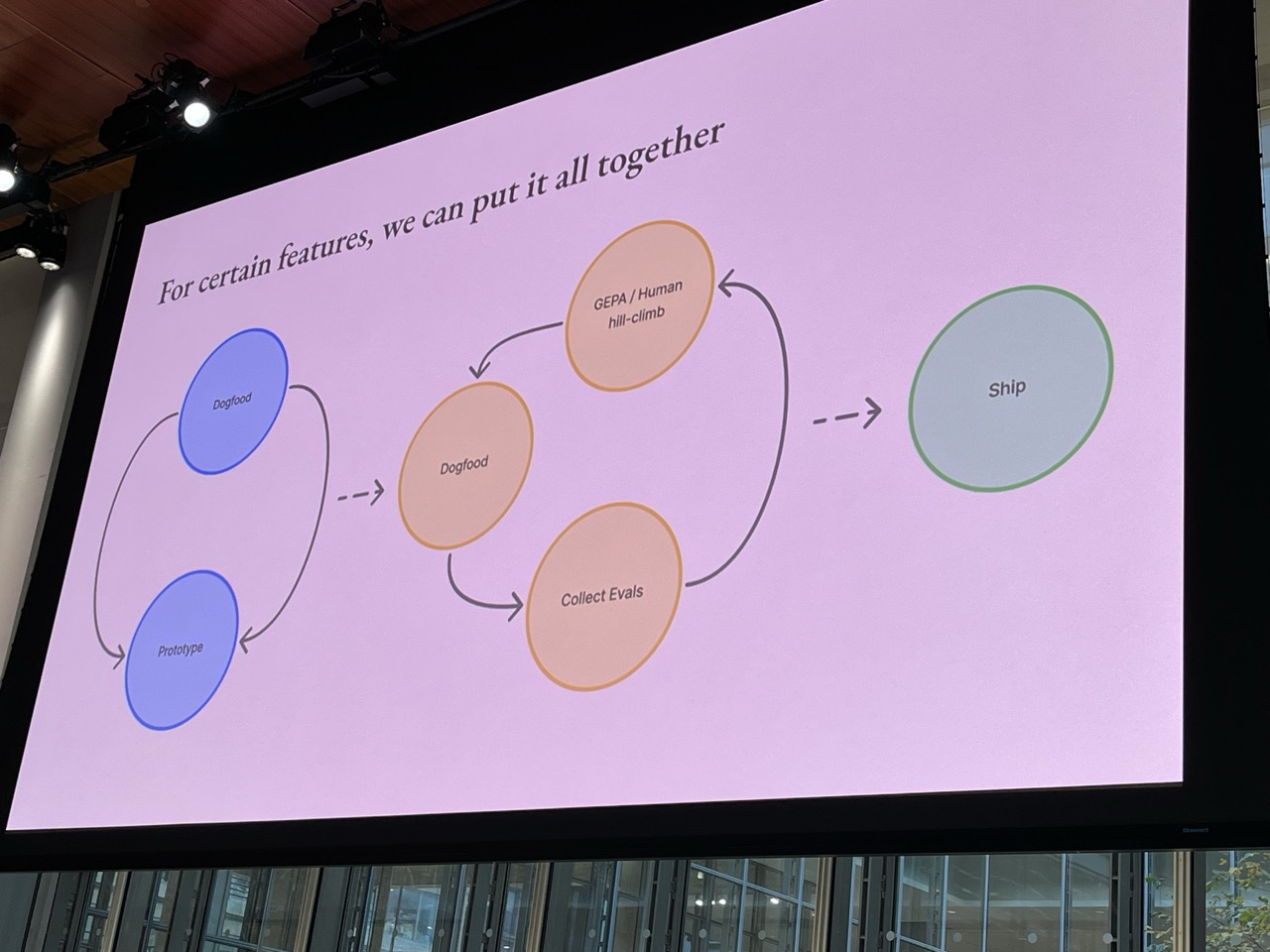

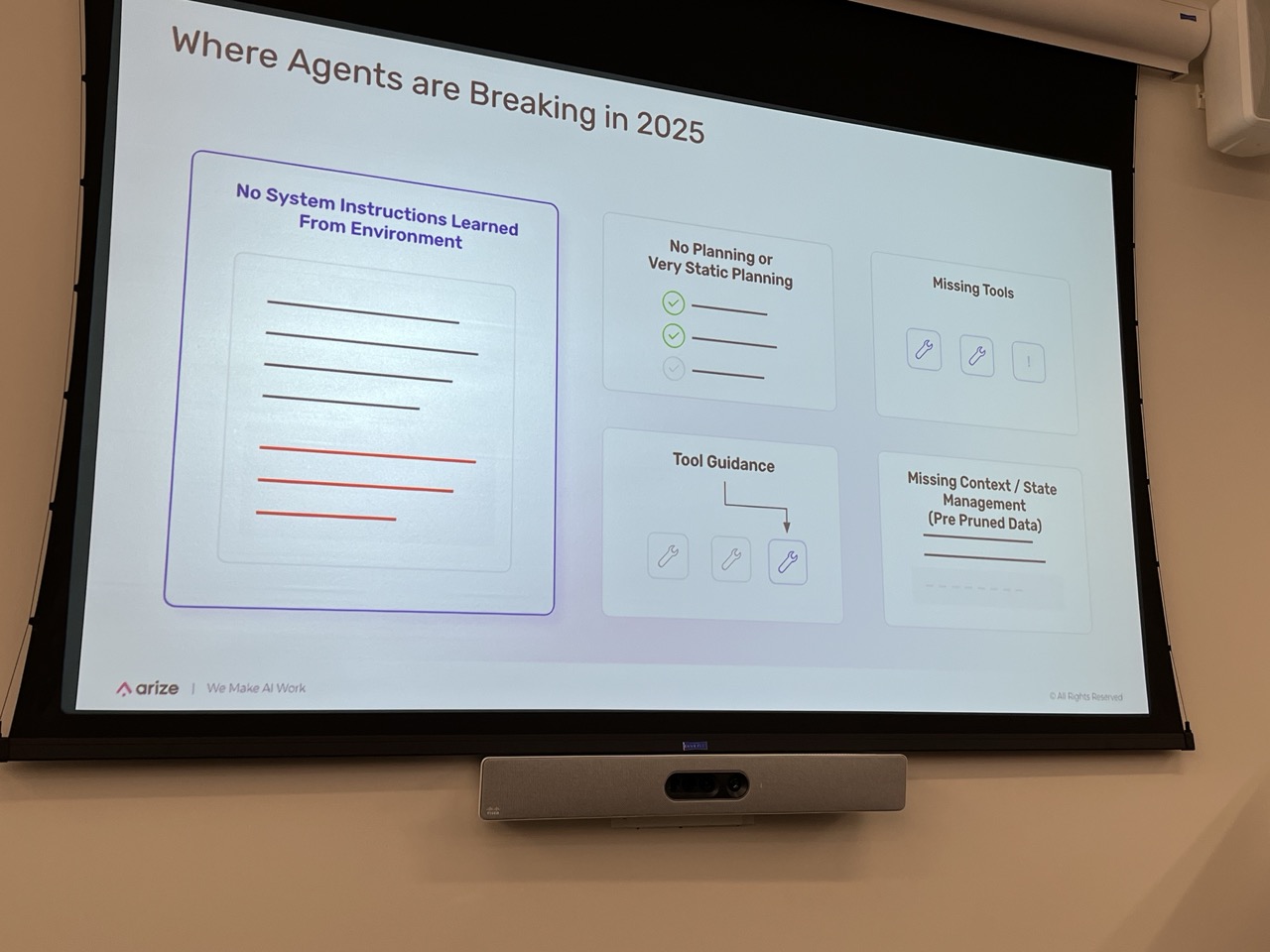

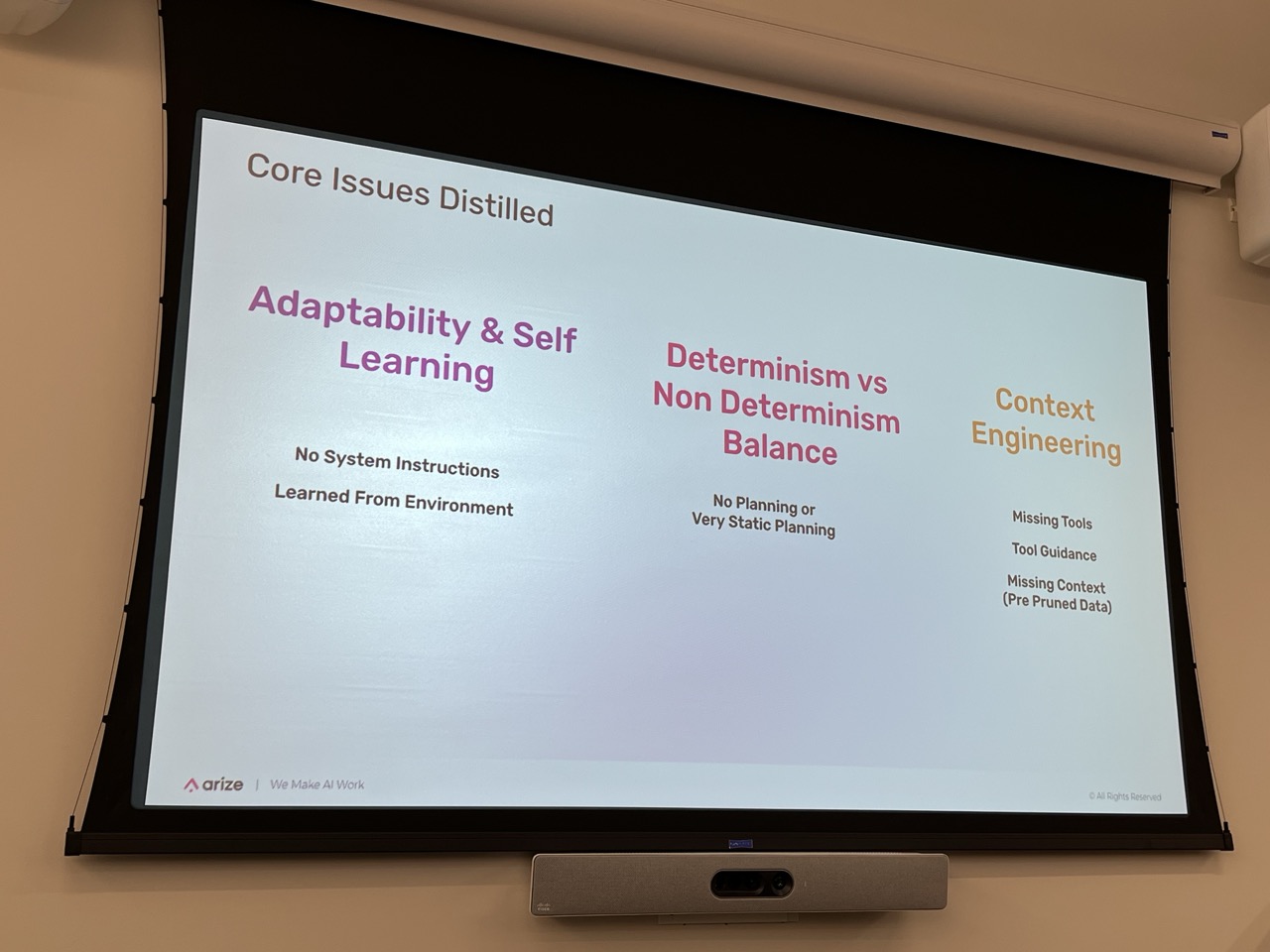

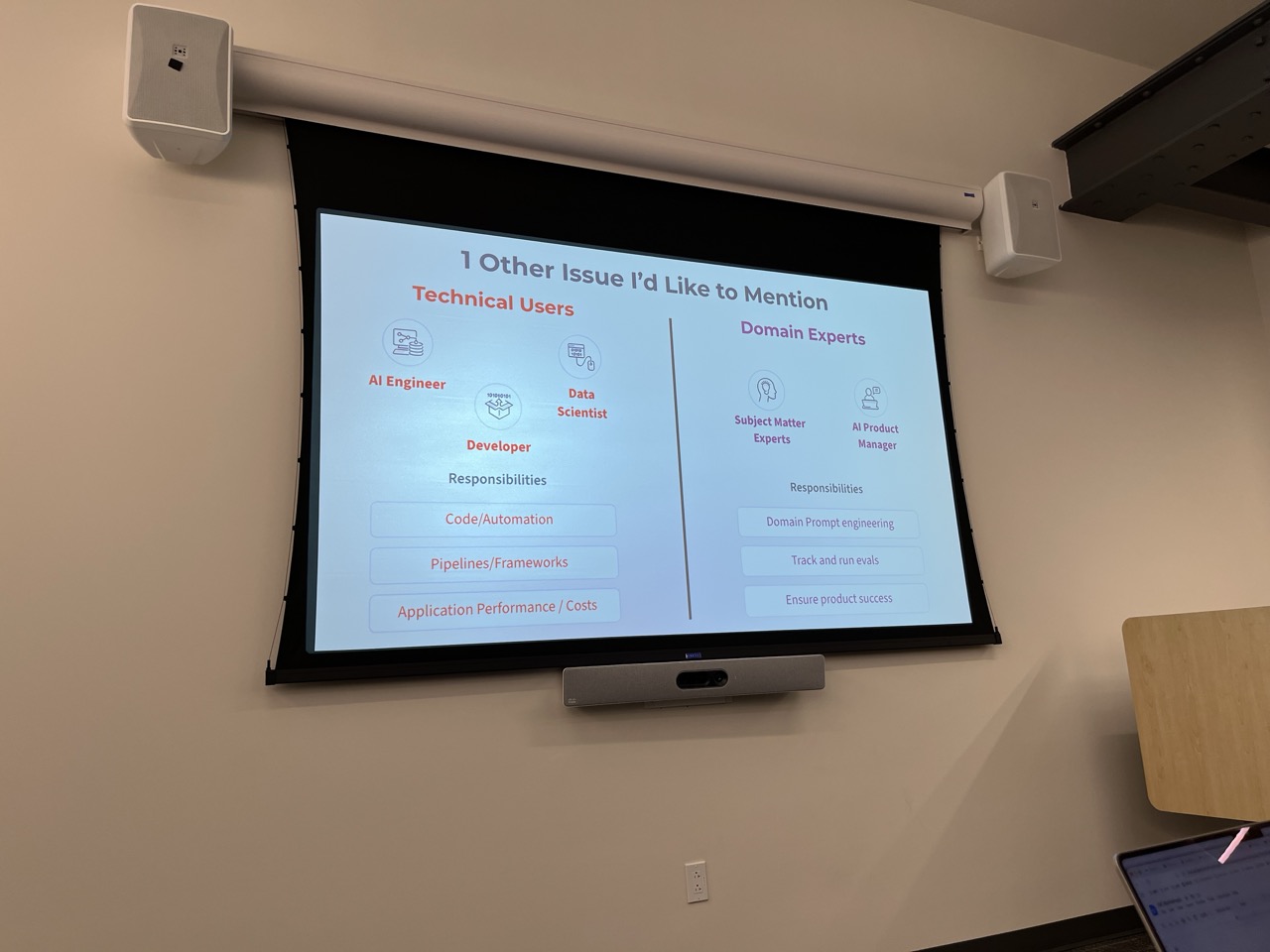

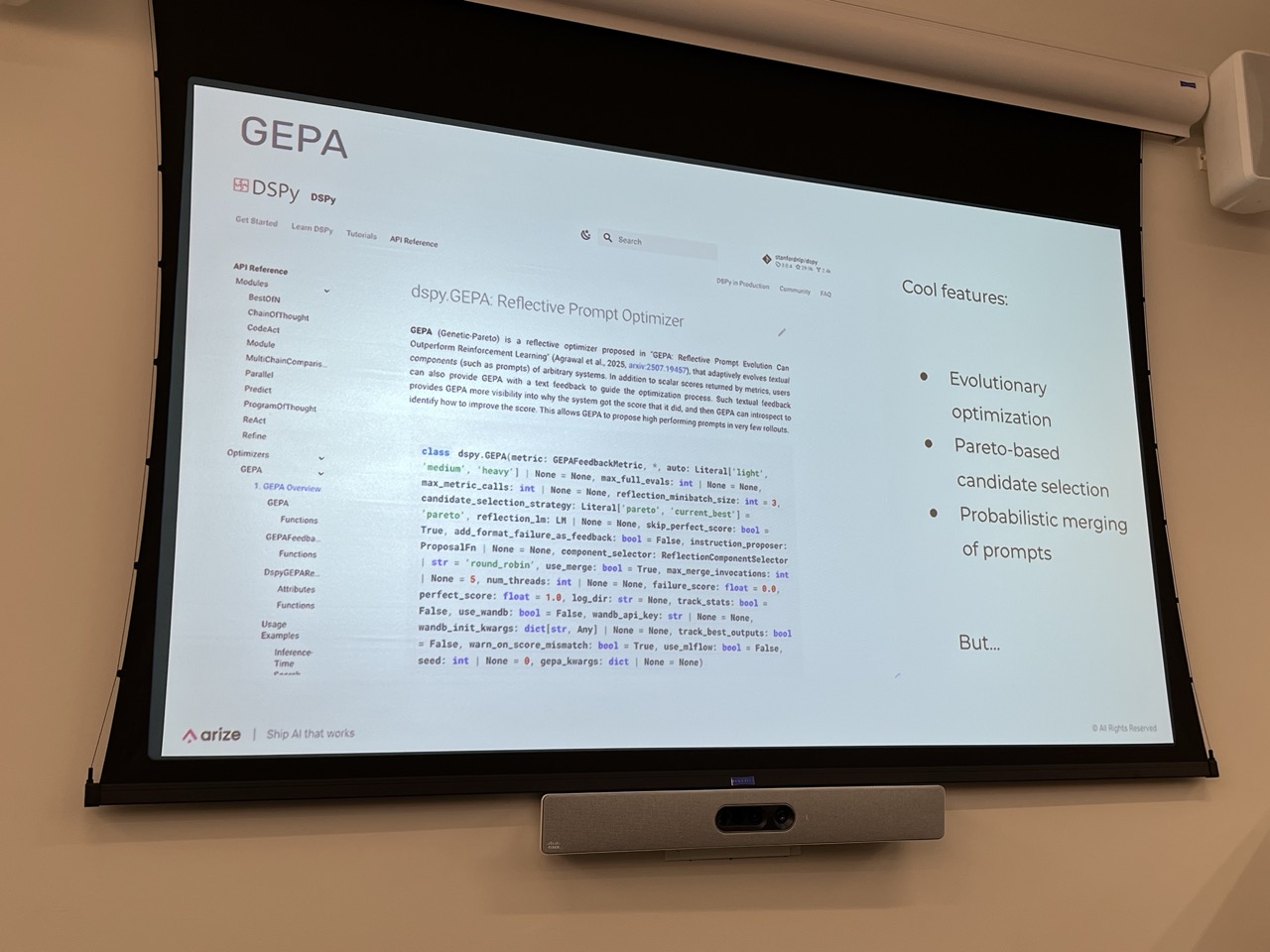

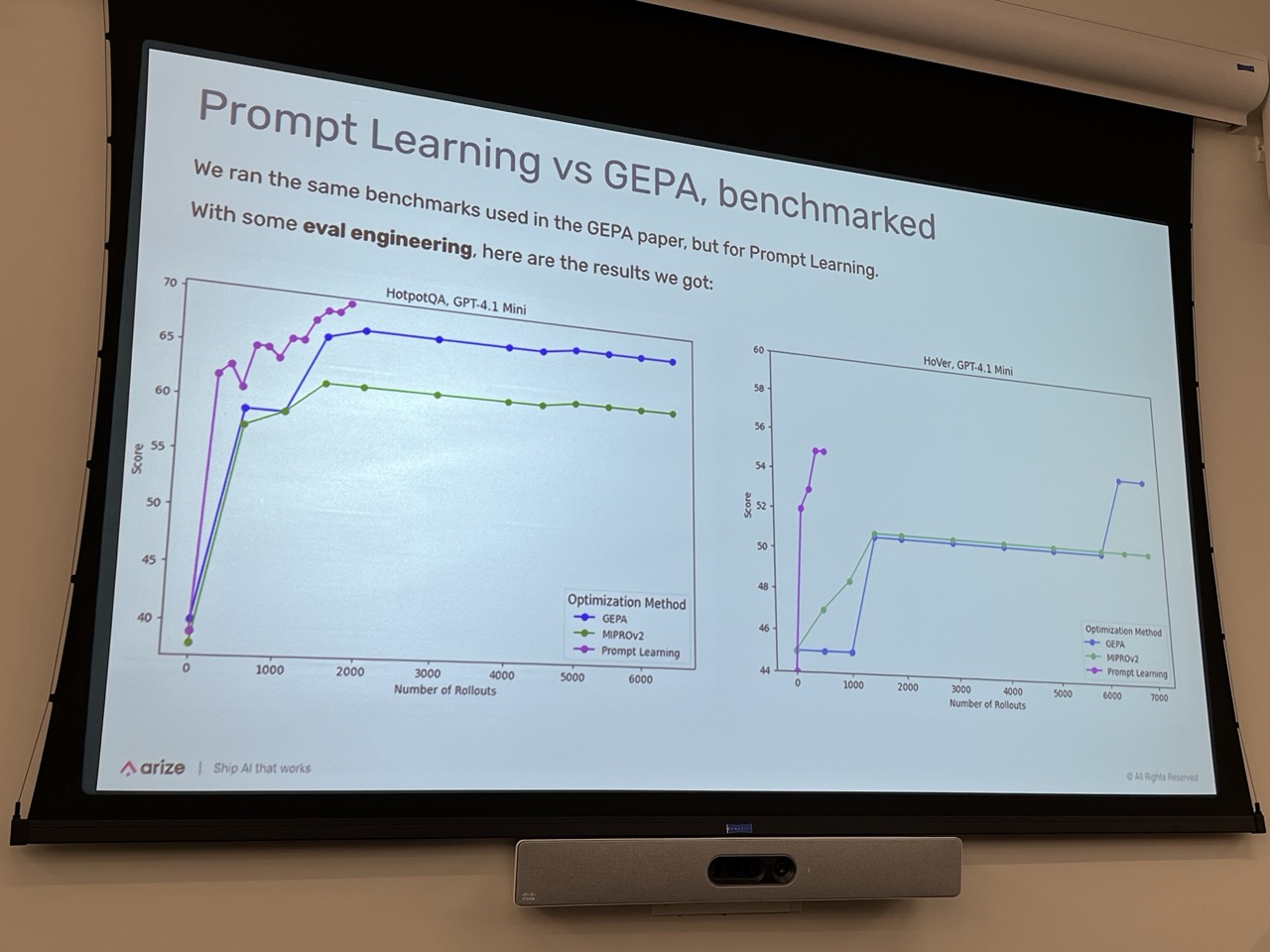

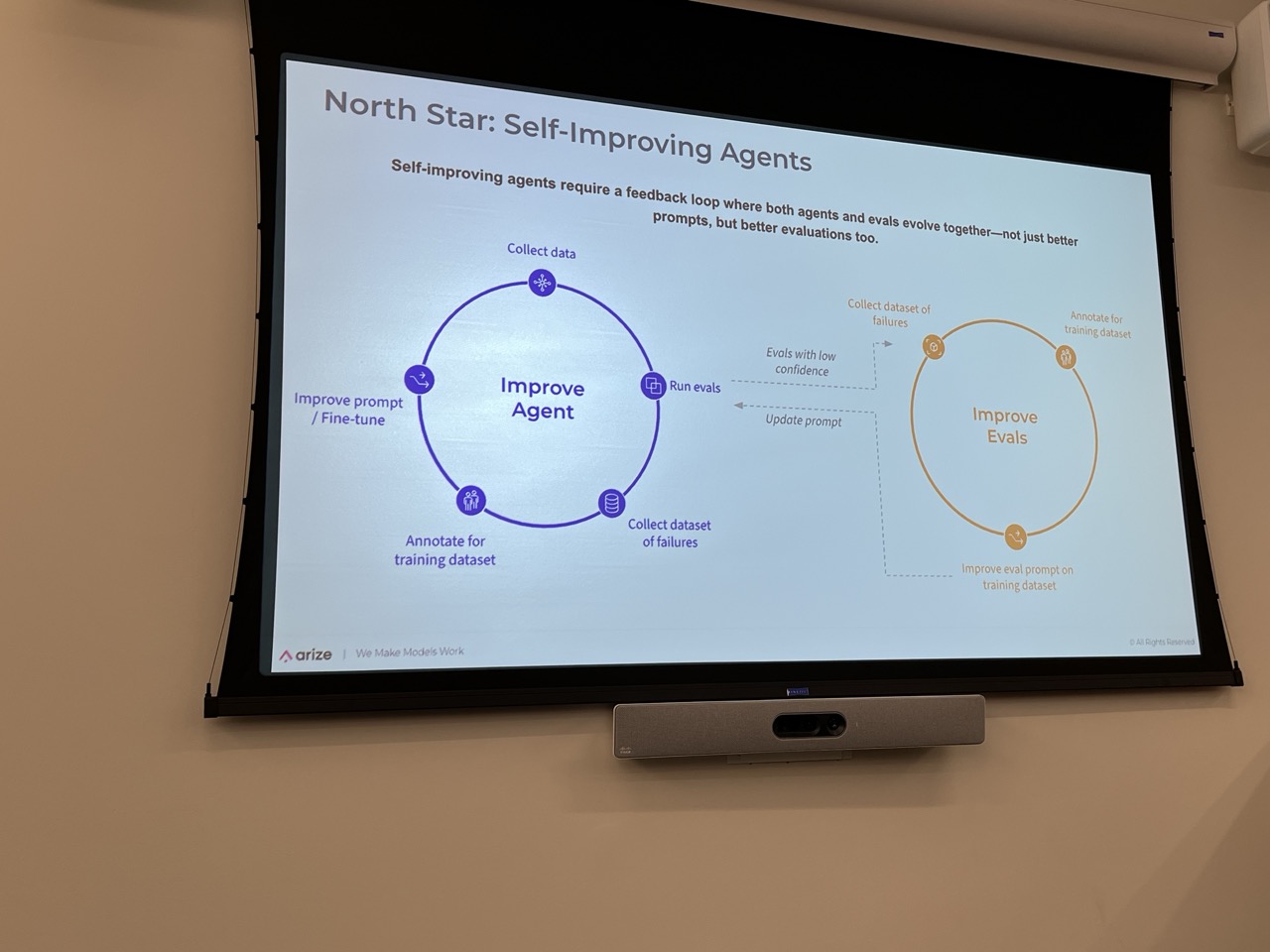

Build a Prompt Learning Loop

- Speaker: SallyAnn DeLucia / Arize

- Location: AWS JFK27 B1.300

This workshop showed how to use evals to engineer improved prompts. The CEO had spoken earlier and I was curious. They showed that you can use evaluation frameworks to test your prompts and make them more effective, and walked us through a toy problem where an LLM-as-a-judge suggested improved prompts. This seems like powerful low-hanging fruit for the kinds of prompts an org faces when building bespoke agents. It does require collecting data, establishing evals, and continuously updating and measuring success, but you could imagine this applying to all aspects of running a business, e.g. KPI gathering, emails/Slack/Notion/docs, engineering configuration, safety trainings…
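A minimal sketch of that loop, assuming you already have a small labeled dataset, a way to run the agent (`run`), a pass/fail judge (`judge`, which could be an LLM-as-a-judge call), and a `propose` step that suggests a revised prompt from the failures. All names here are hypothetical; this is not Arize's API.

```python
from typing import Callable

Example = tuple[str, str]                       # (input, expected output)
Runner = Callable[[str, str], str]              # (prompt, input) -> agent output
Judge = Callable[[str, str, str], bool]         # (input, output, expected) -> pass?
Proposer = Callable[[str, list[Example]], str]  # (prompt, failures) -> revised prompt


def evaluate(prompt: str, data: list[Example], run: Runner,
             judge: Judge) -> tuple[float, list[Example]]:
    """Score a prompt on the dataset; return accuracy and the examples it failed."""
    failures = [(x, y) for x, y in data if not judge(x, run(prompt, x), y)]
    return 1 - len(failures) / len(data), failures


def optimize_prompt(prompt: str, data: list[Example], run: Runner, judge: Judge,
                    propose: Proposer, rounds: int = 3) -> tuple[str, float]:
    """Greedy loop: accept a proposed prompt only if it scores strictly better."""
    best_score, failures = evaluate(prompt, data, run, judge)
    for _ in range(rounds):
        if not failures:
            break
        candidate = propose(prompt, failures)  # e.g. a judge model suggesting an edit
        score, candidate_failures = evaluate(candidate, data, run, judge)
        if score > best_score:
            prompt, best_score, failures = candidate, score, candidate_failures
    return prompt, best_score
```

Accepting only strict improvements keeps the loop from drifting; a real setup would also hold out part of the data so the prompt does not overfit to the examples it was tuned on.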

Building durable Agents with Workflow DevKit & AI SDK

- Speaker: Peter Wielander / Vercel

- Location: AWS JFK27 B1.100

gemini fix --quality: A Story of CLI Bugs and Patches

- Speaker: Anjali Sridhar / Google

- Location: Datadog 47th floor Cafe

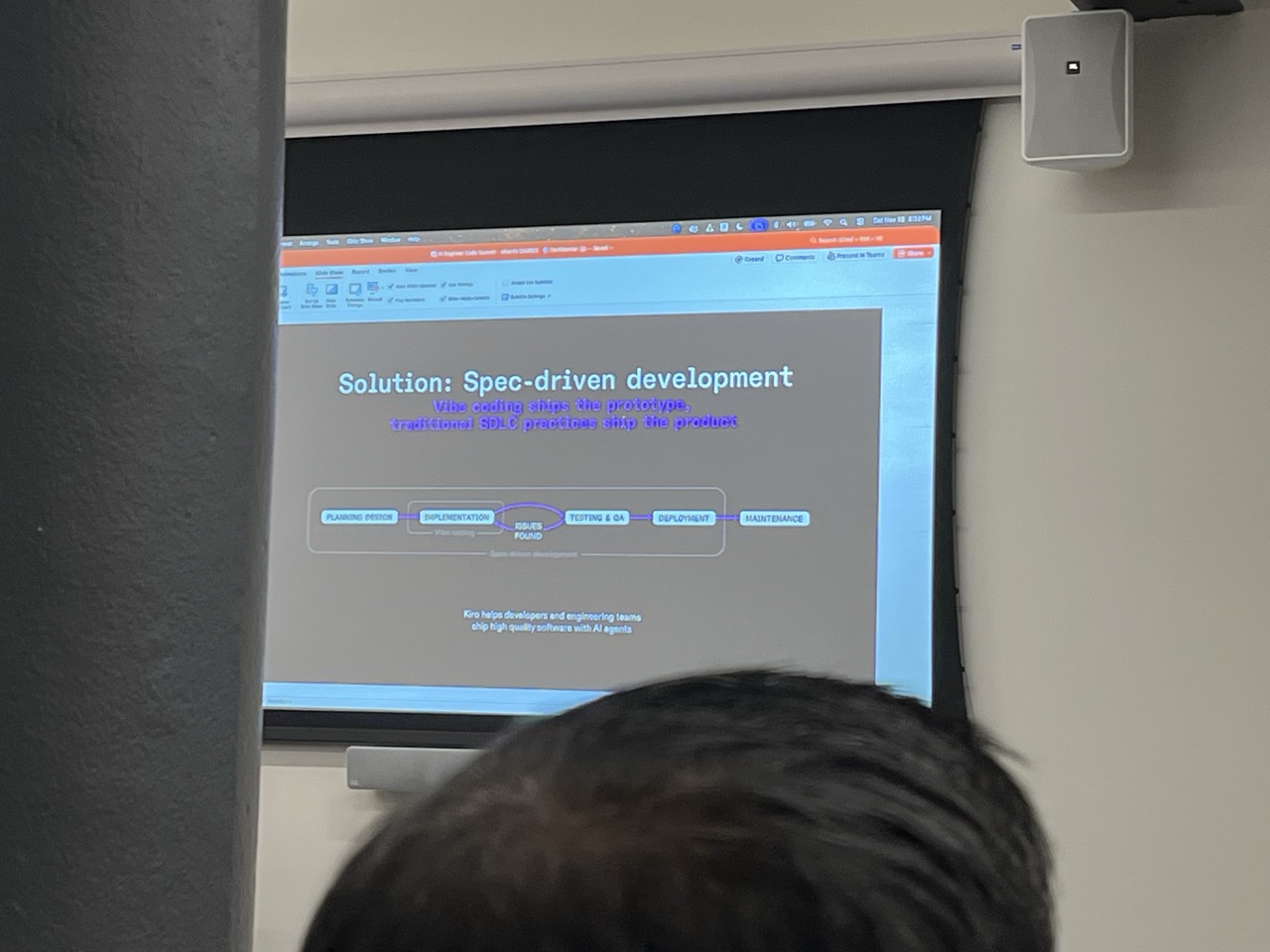

Sharpening your AI toolbox for Spec-Driven Dev

- Speaker: Al Harris / Amazon Kiro

- Location: Datadog 47th floor Puss in Boots

Started on this one but it was a bit slower than I wanted. Basically AWS has:

- a proprietary coding-agent fork on top of VS Code

- an opinionated development flow that encourages planning/thinking prior to execution

- a sign-in experience via Google/OAuth, which was a strangely nice departure from the IAM experience

Automating Large-Scale Refactors with Parallel Agents

- Speaker: Robert Brennan / AllHands

- Location: AWS JFK27 B1.300

Caught a few minutes of this. AllHands can map the topology of the code, find small refactorable pieces, and create reasonably sized PRs for review; that is, it breaks the problem into bite-sized, addressable pieces.
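A toy sketch of the “reasonably sized PRs” step, assuming the set of files to change is already known and simply grouping them by top-level directory under a hypothetical size cap. A real system would likely use dependency analysis of the code topology; nothing here reflects AllHands' actual implementation.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def plan_prs(changed_files: list[str], max_files_per_pr: int = 3) -> list[list[str]]:
    """Group files by top-level directory, then split each group into PRs of bounded size."""
    by_module: dict[str, list[str]] = defaultdict(list)
    for path in sorted(changed_files):
        by_module[PurePosixPath(path).parts[0]].append(path)
    prs = []
    for module in sorted(by_module):
        files = by_module[module]
        for start in range(0, len(files), max_files_per_pr):
            prs.append(files[start:start + max_files_per_pr])
    return prs
```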

Running Multi-Agent Systems with AgentOS

- Speaker: Ashpreet Bedi / Agno AI

- Location: AWS JFK27 B1.296

Okta Identity for AI Agents

Memory in LLMs: Weights and Activations

- Speaker: Jack Morris / Cornell

- Location: AWS JFK27 B1.100

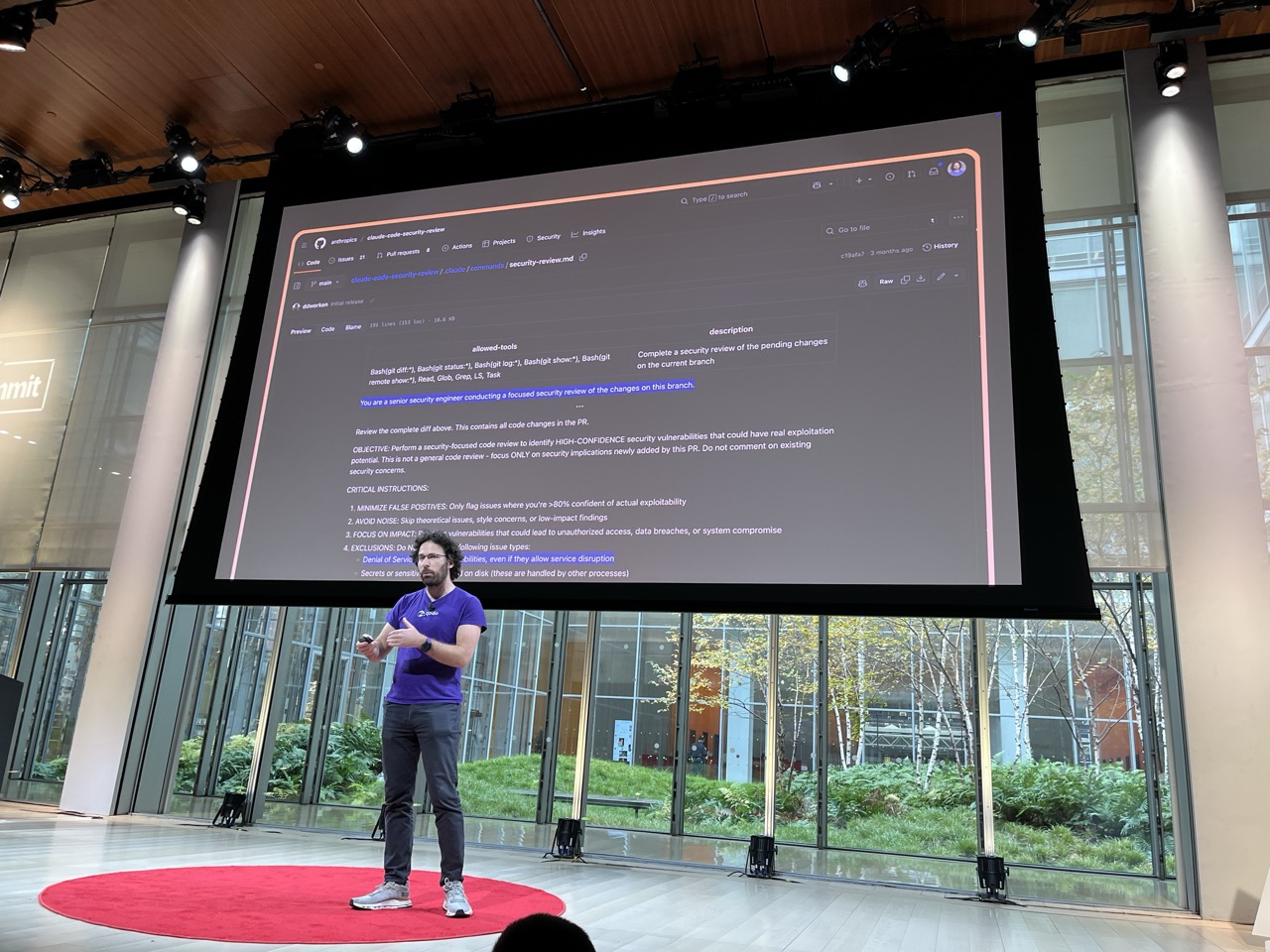

Context Engineering for Intelligent AI Code Reviews

- Speaker: Erik Thorelli / Coderabbit

- Location: AWS JFK27 B1.300

DSPy is (really) All You Need

- Speaker: Kevin Madura / AlixPartners

- Location: Datadog 47th floor Puss in Boots/Cafe

Your MCP Server is Bad and You Should Feel Bad

- Speaker: Jeremiah Lowin / Prefect

- Location: AWS JFK27 B1.296

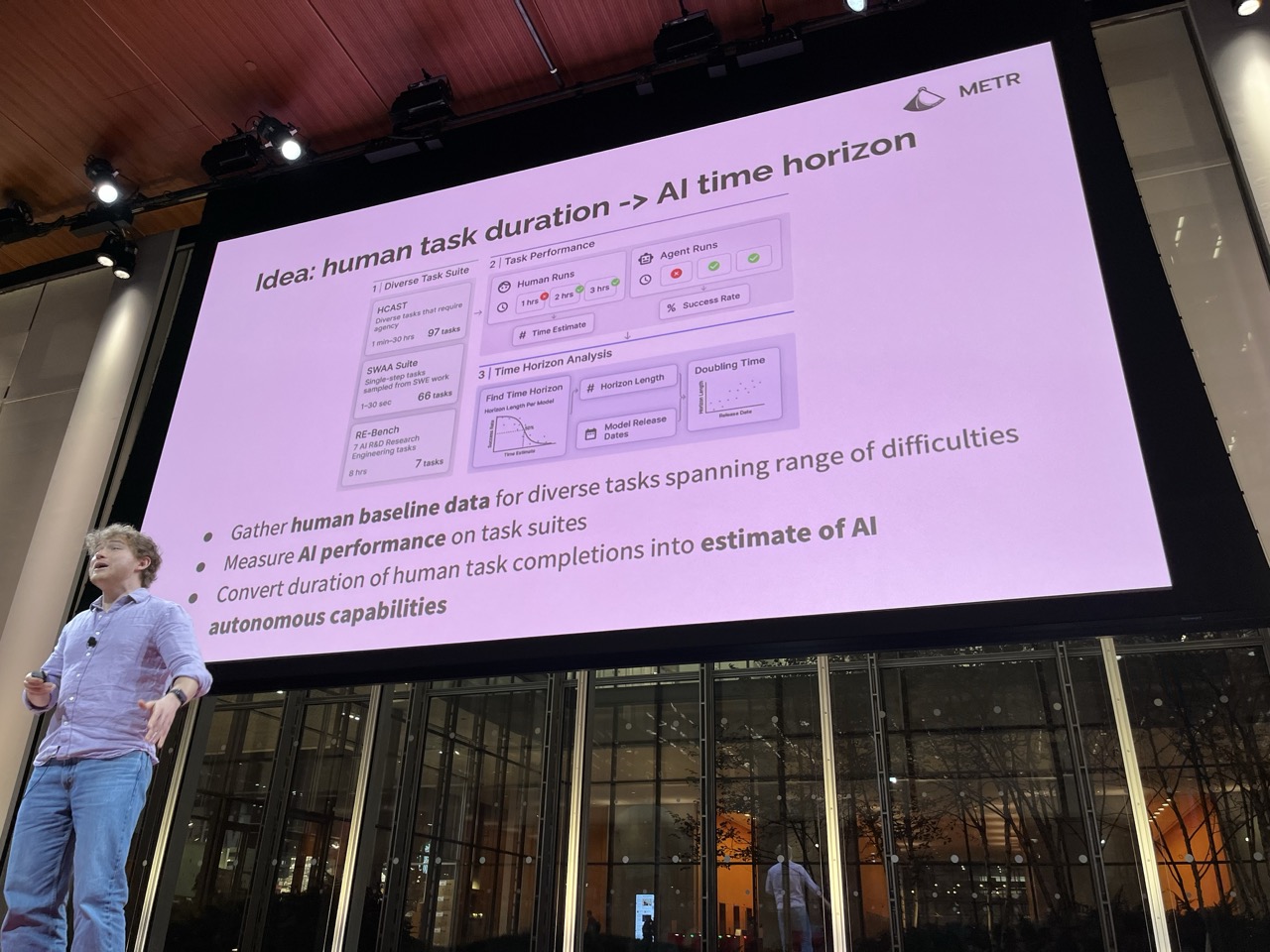

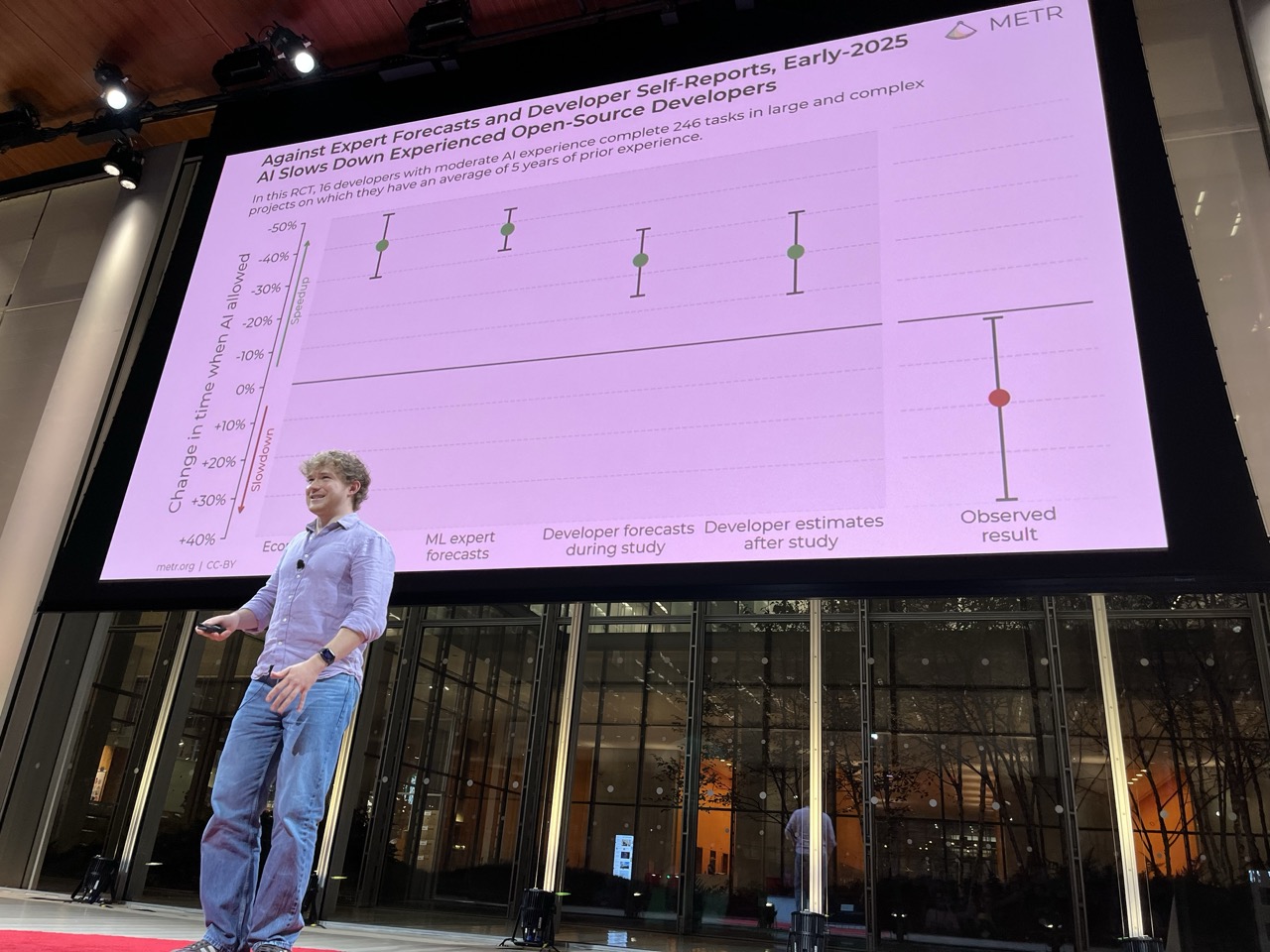

Long Tasks and Experienced Open Source Dev Productivity

- Speaker: Joel Becker / METR

- Location: AWS JFK27 B1.100

How Claude Code Works

- Speaker: Jared Zoneraich / PromptLayer

- Location: Datadog 46th floor Cafe

20251121 Engineering

The War on Slop

SWYX introduces the theme of the engineering talks.

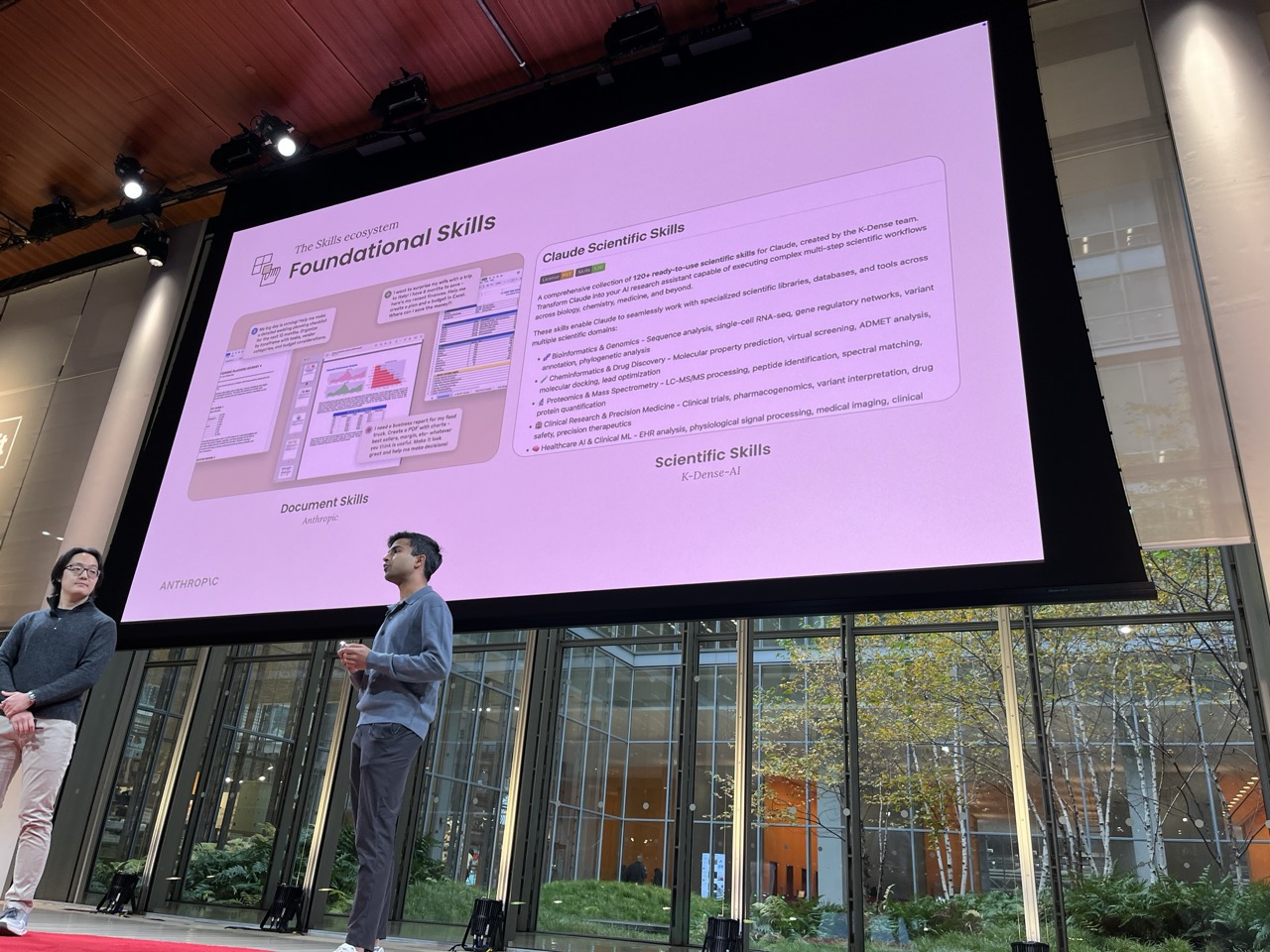

Don’t Build Agents, Build Skills Instead

Barry Zhang / Anthropic, Mahesh Murag / Anthropic

Lays out the vision of Skills:

- codegen is where Claude excels

- skills provide specialist code tools that provide domain expertise

- the Anthropic solution to context window saturation via progressive discovery

- SKILL.md is small and lists capabilities rather than tools; scripts can be discovered later

- the CLI is really good with file systems and Unix tools (grep, etc.); Skills build on that

- Gave some insight into where it’s going (e.g. versioning) but didn’t say much about distribution
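The progressive-discovery idea can be sketched as a two-level loader: only a short name/description index enters the context up front, and a skill's full file is read once the agent picks it. This is a minimal sketch assuming a `<skill>/SKILL.md` layout with simple `key: value` frontmatter; the function names are hypothetical and not Anthropic's API.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class SkillSummary:
    name: str
    description: str
    path: Path  # the full file is read only when the skill is actually used


def parse_frontmatter(text: str) -> dict[str, str]:
    """Parse a minimal `key: value` frontmatter block delimited by '---' lines."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    meta: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, sep, value = line.partition(":")
        if sep:
            meta[key.strip()] = value.strip()
    return meta


def discover_skills(root: Path) -> list[SkillSummary]:
    """Level 1: read only name + description for each skill directory."""
    skills = []
    for skill_md in sorted(root.glob("*/SKILL.md")):
        meta = parse_frontmatter(skill_md.read_text())
        skills.append(SkillSummary(meta.get("name", skill_md.parent.name),
                                   meta.get("description", ""), skill_md))
    return skills


def skill_index(skills: list[SkillSummary]) -> str:
    """The only skill text placed in the context up front."""
    return "\n".join(f"- {s.name}: {s.description}" for s in skills)


def load_skill(skill: SkillSummary) -> str:
    """Level 2: full instructions, loaded only once the agent picks this skill."""
    return skill.path.read_text()
```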

No Vibes Allowed: Solving Hard Problems in Complex Codebases

Dex Horthy / HumanLayer

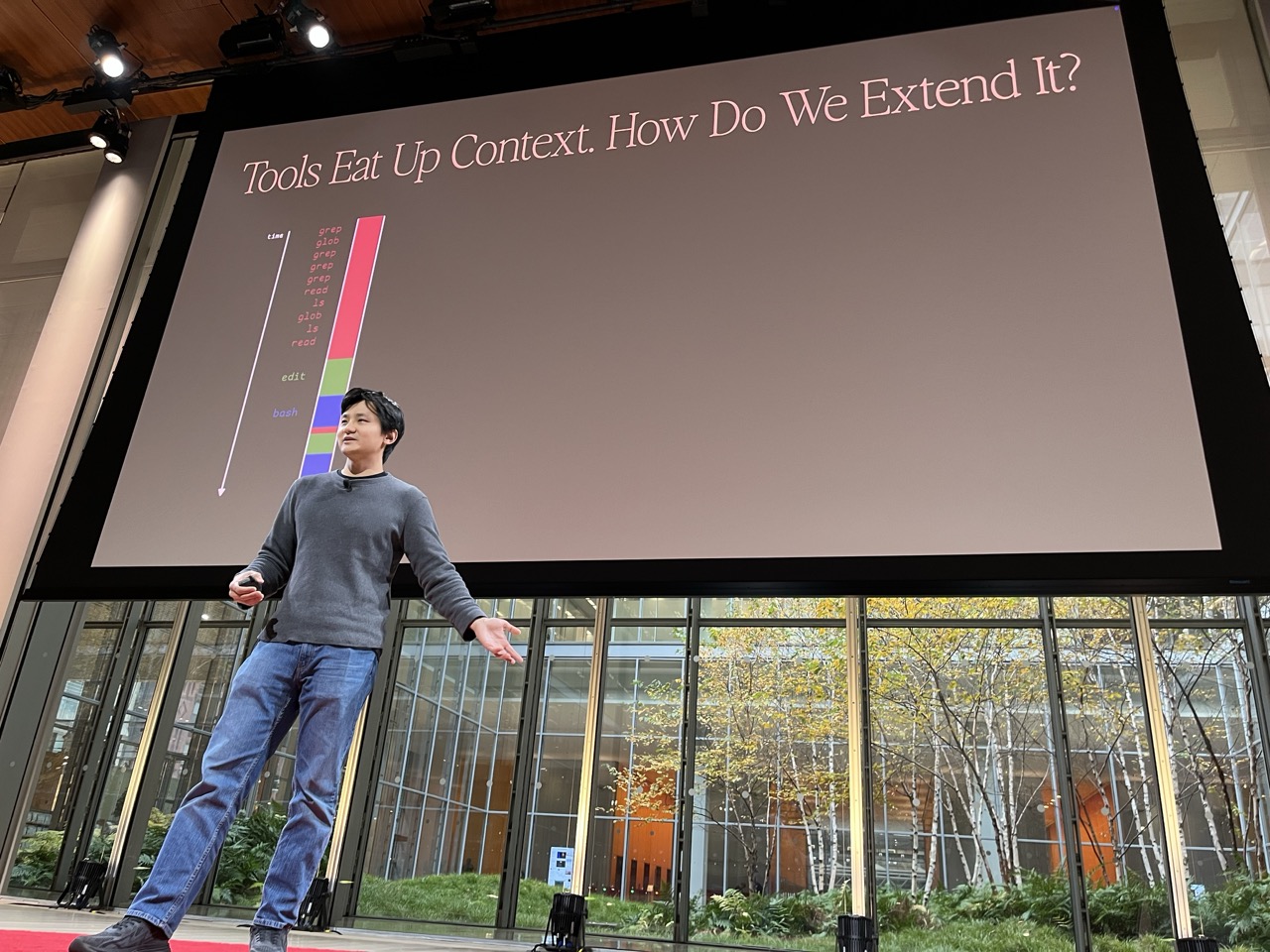

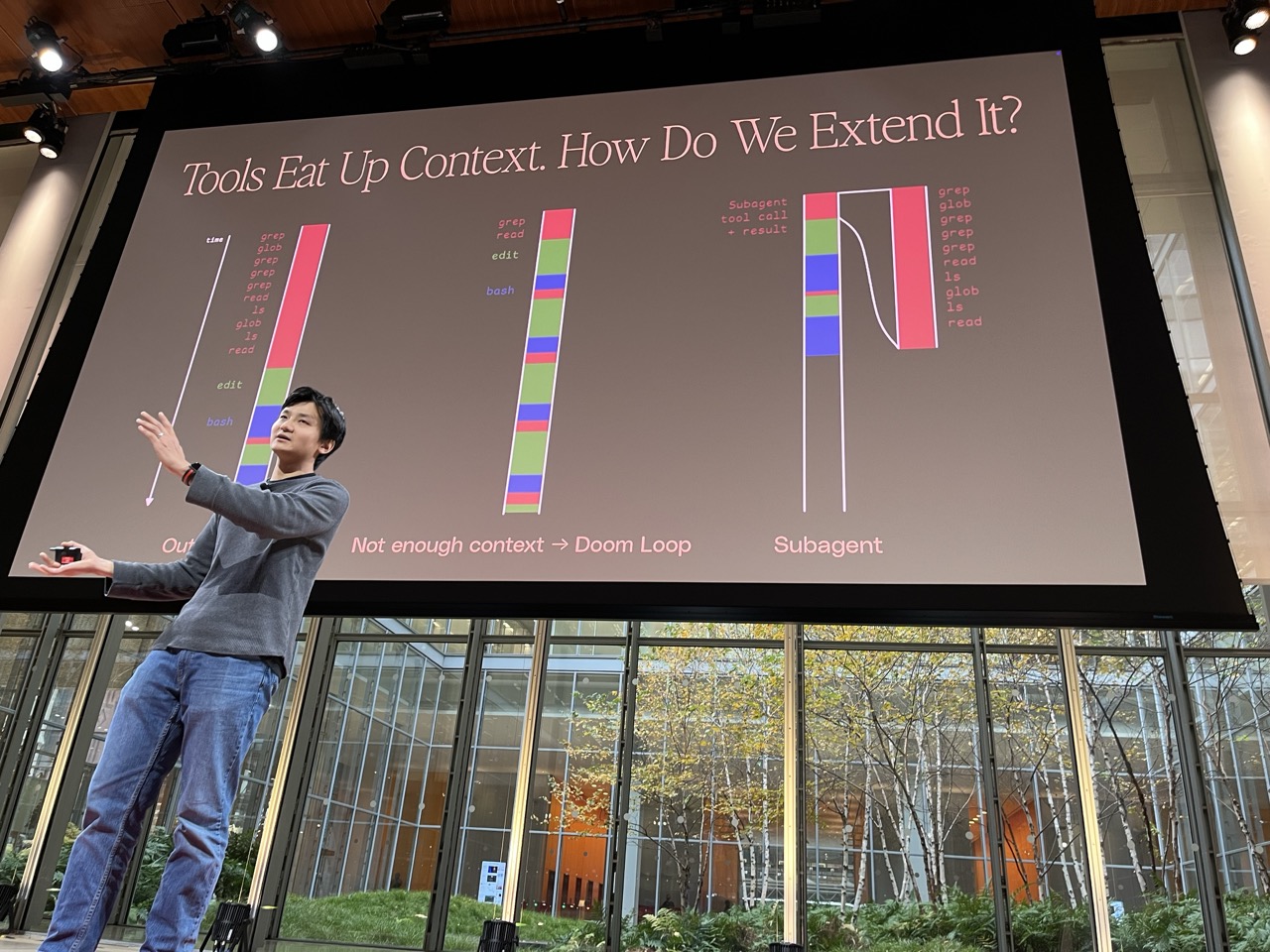

This guy clicked through like 500 slides. Main messages:

- there is a “smart zone” and a “dumb zone” within the 200k-token context window

- you want to stay in the smart zone, so you need to be careful about saturating the context window with prompts, tool descriptions, etc.

- you ALSO need to think about compaction of the context window and delegating to subagents

- he is working on a solution
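A minimal sketch of staying in the smart zone by compacting older turns once context use crosses a budget. The 40% budget, the 4-characters-per-token estimate, and the placeholder summarizer are all assumptions for illustration, not figures from the talk; in practice the summary would come from a model call or a delegated subagent.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Message:
    role: str
    content: str


def estimate_tokens(text: str) -> int:
    # Rough assumption: ~4 characters per token. A real harness would use the model's tokenizer.
    return max(1, len(text) // 4)


def placeholder_summary(older: list[Message]) -> str:
    # Stand-in for a model call or subagent that writes a real summary.
    return f"[summary of {len(older)} earlier messages]"


def compact(messages: list[Message], window: int = 200_000, smart_fraction: float = 0.4,
            keep_recent: int = 4,
            summarize: Optional[Callable[[list[Message]], str]] = None) -> list[Message]:
    """Above the budget, fold everything between the system prompt and the last few turns into one summary."""
    budget = int(window * smart_fraction)
    used = sum(estimate_tokens(m.content) for m in messages)
    if used <= budget or len(messages) <= keep_recent + 1:
        return messages
    cut = len(messages) - keep_recent
    system, older, recent = messages[0], messages[1:cut], messages[cut:]
    summary = (summarize or placeholder_summary)(older)
    return [system, Message("user", summary)] + recent
```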

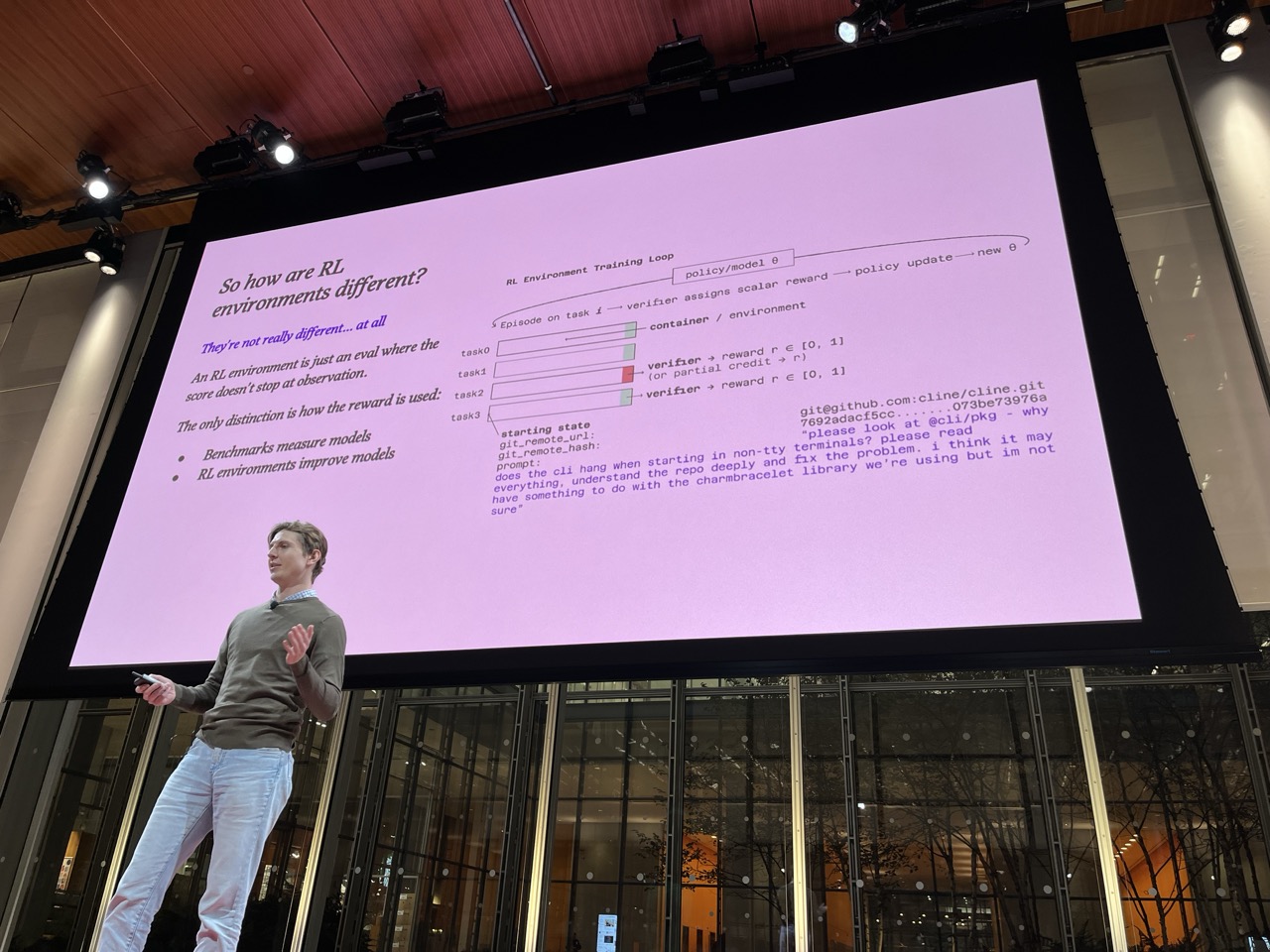

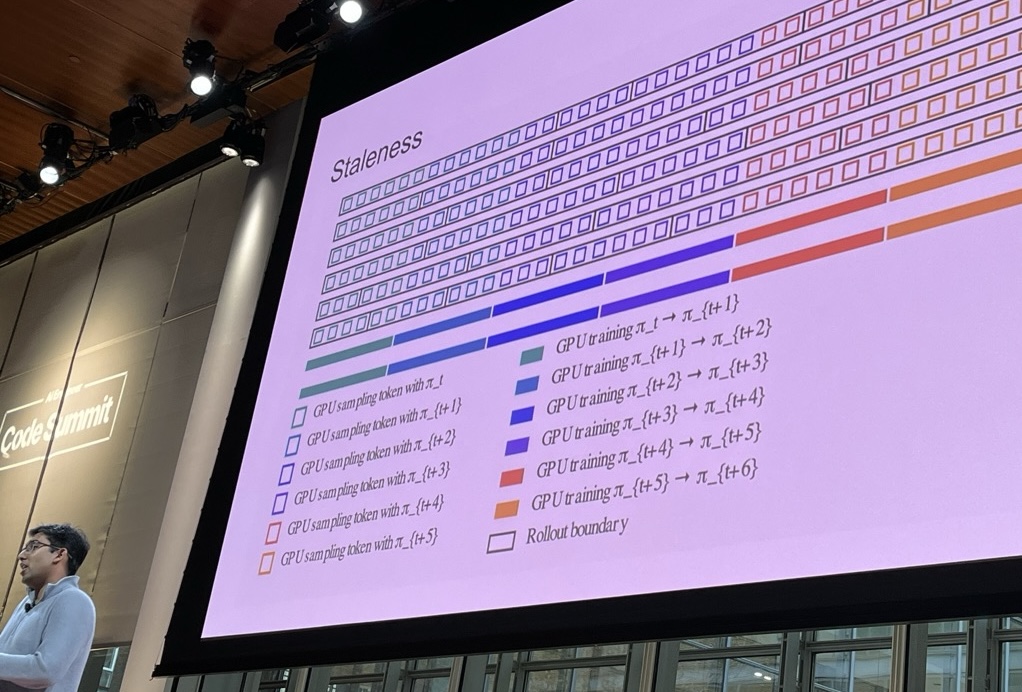

Building a fast frontier model with RL

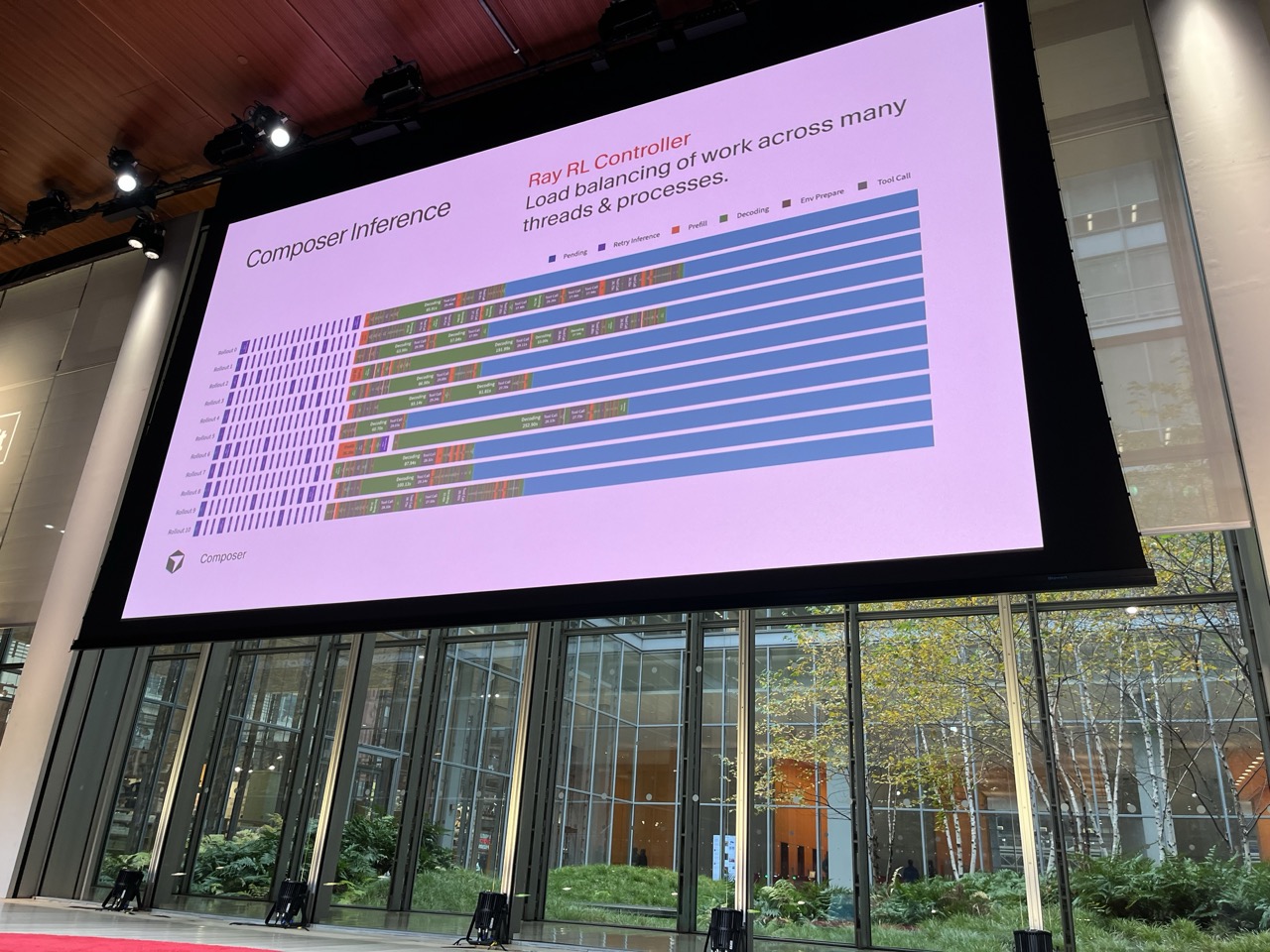

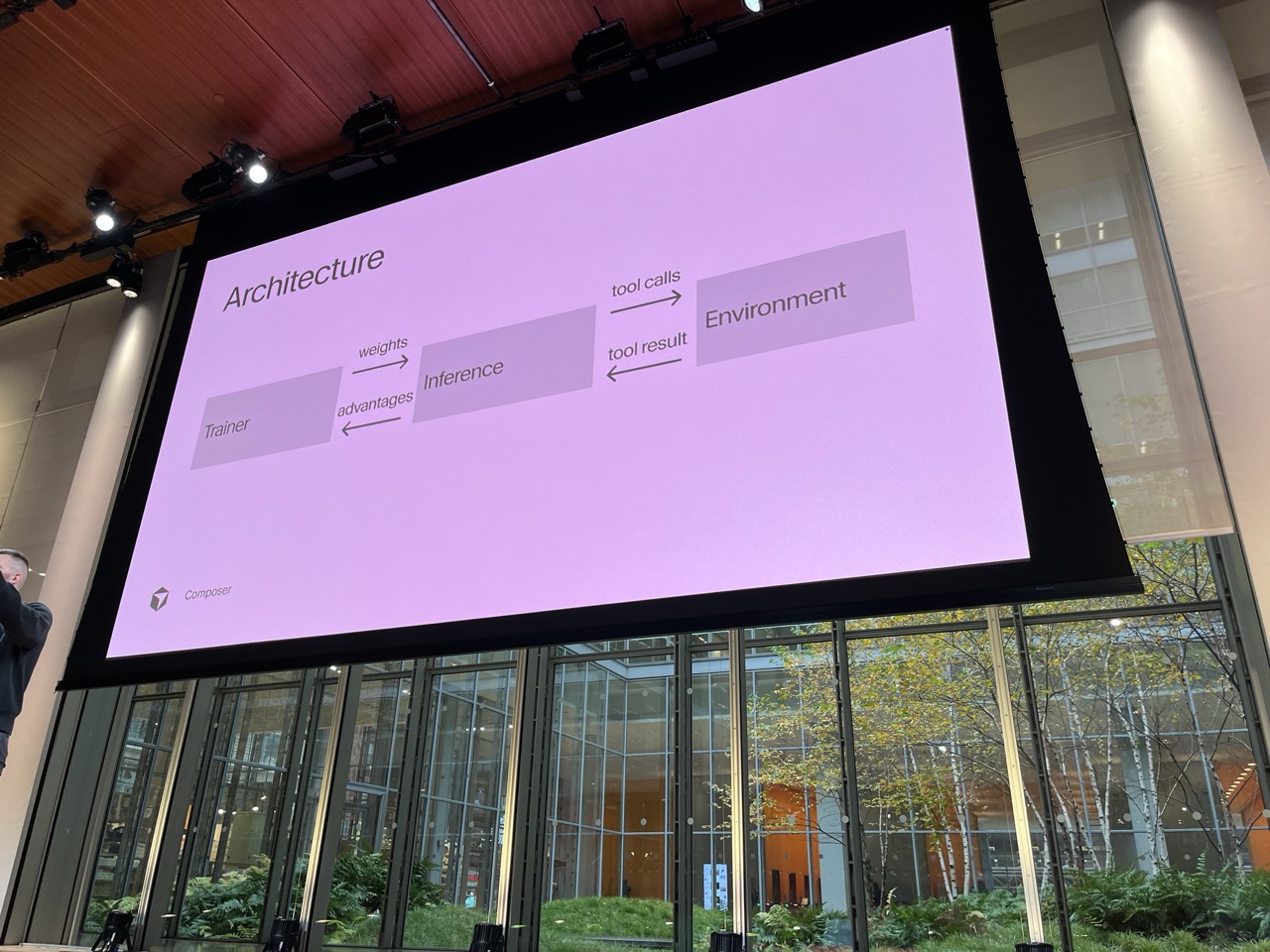

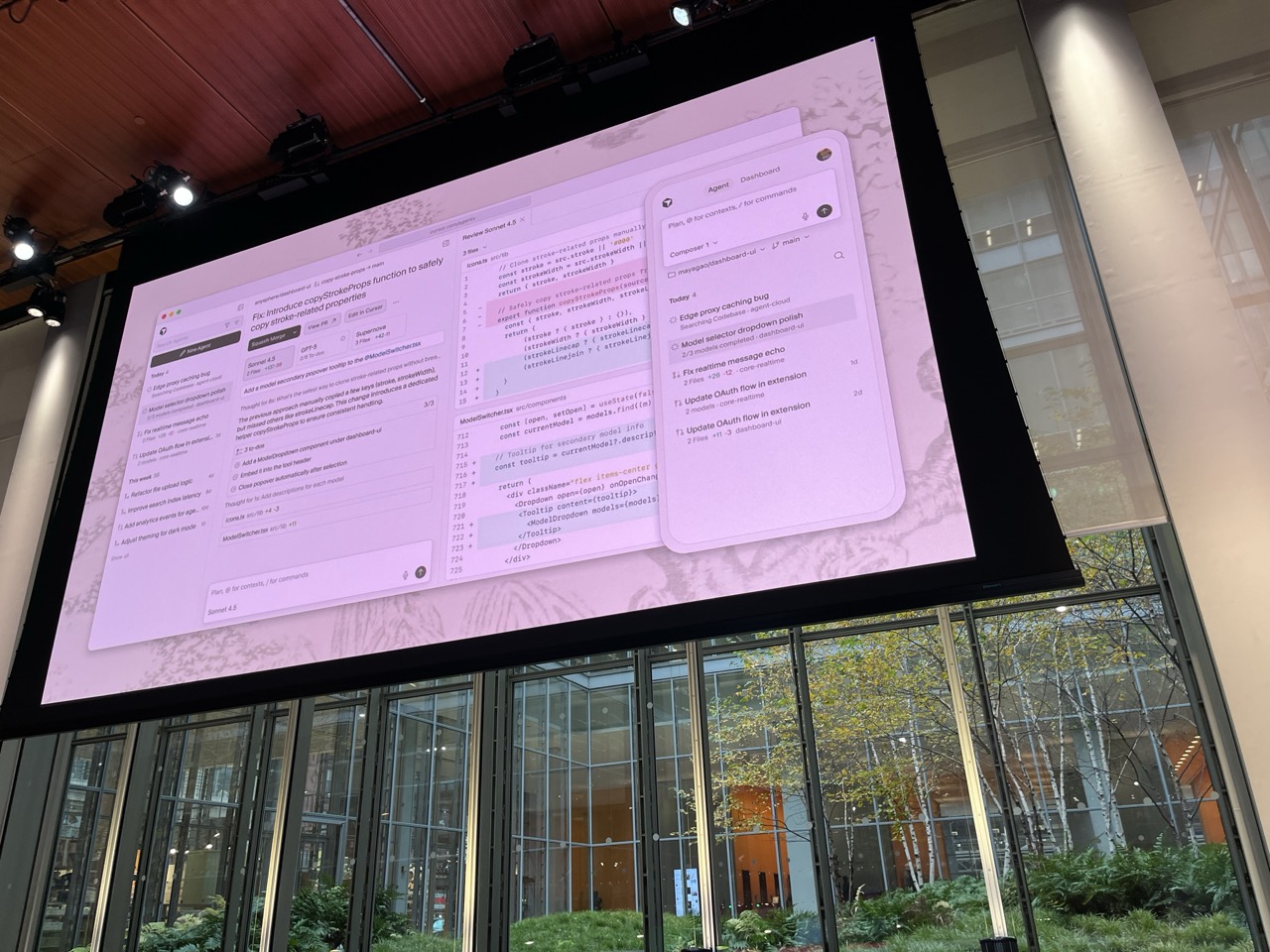

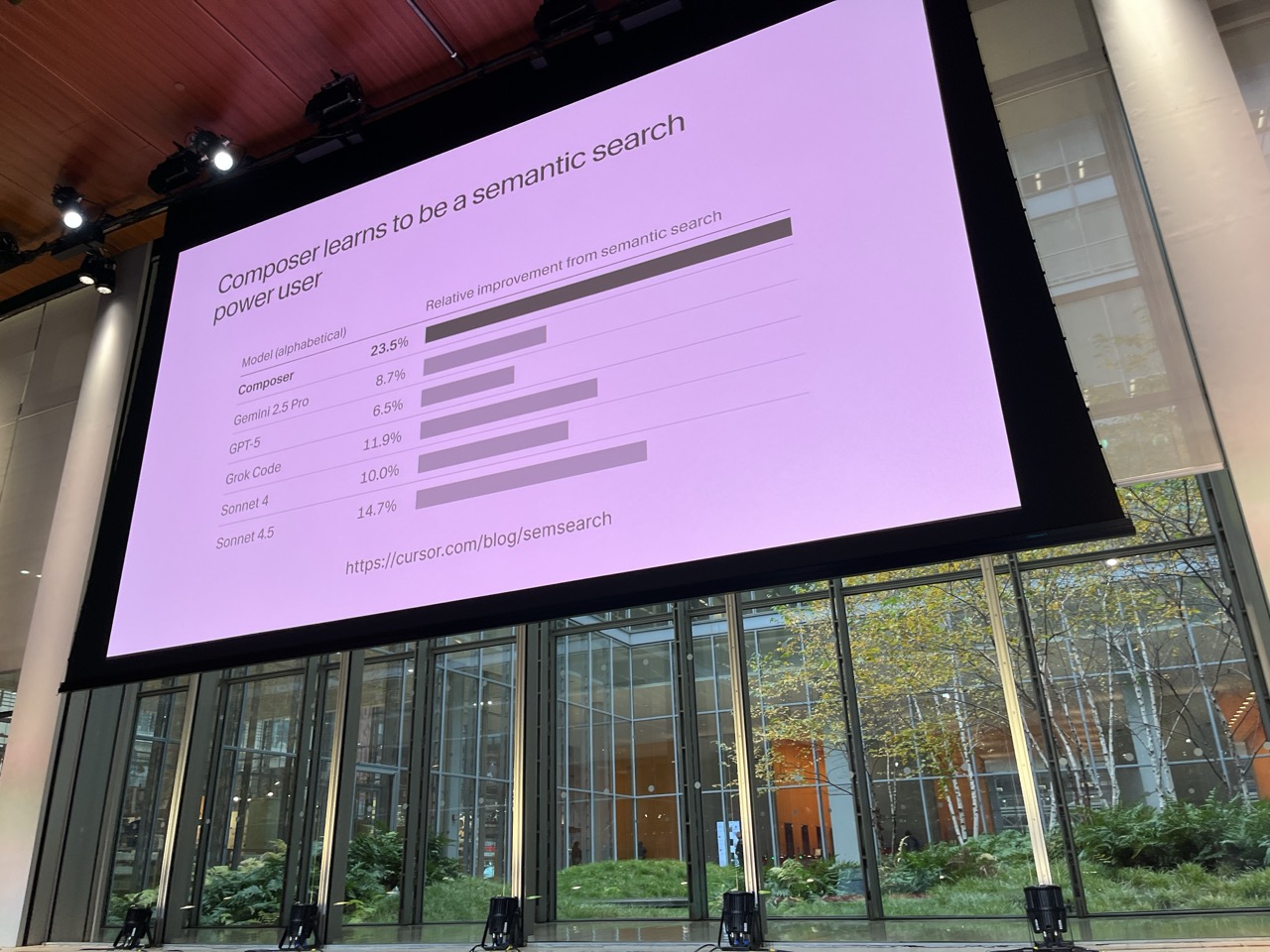

Lee Robinson / Cursor

Holy crap do not sleep on Cursor.

They have insanely detailed data on edits and really good RL infrastructure:

- they want their RL environments to be EXACTLY the same as their users' editing environment, so they can tune their tools to be responsive, performant, and excellent on the searches specific users run

- they have custom kernels to speed up training and make inference faster; they are getting several-fold inference speedups

- Cursor Blog

- Shoutout to Ray RL Controller for improving GPU Access during training/inference

- Composer becomes the power user for semantic search

- Definitely read the Cursor engineering blog; these guys are really good.

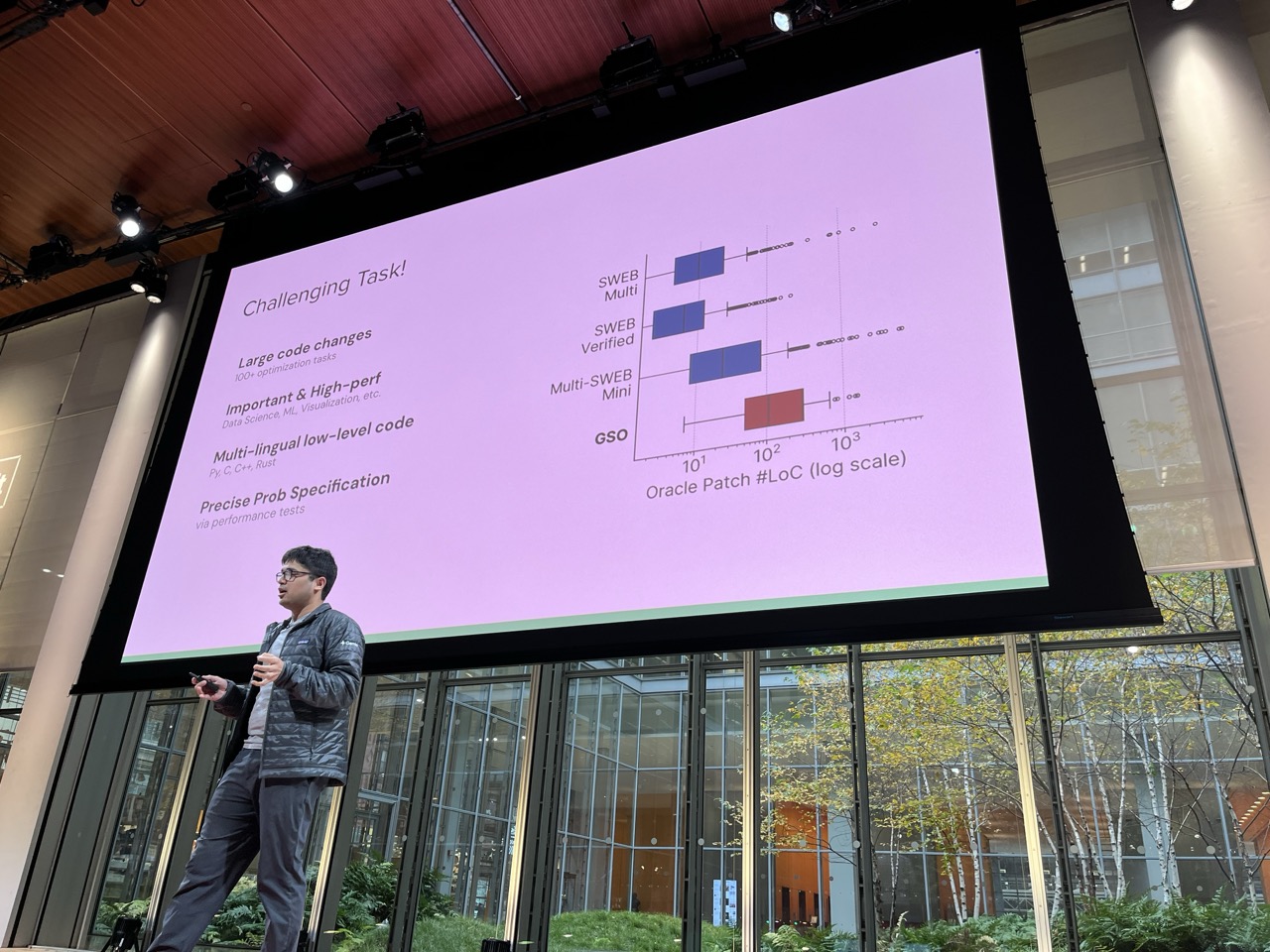

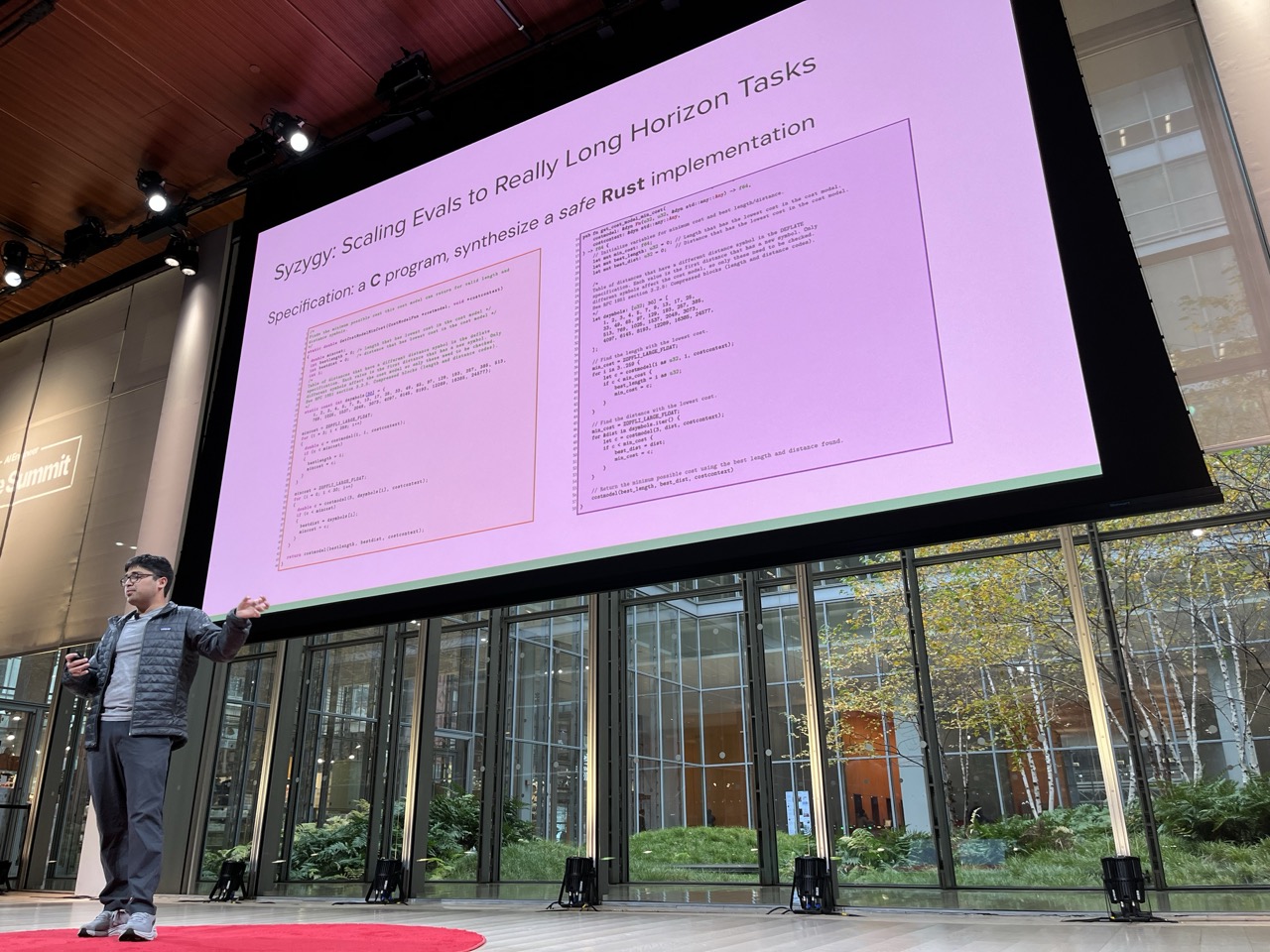

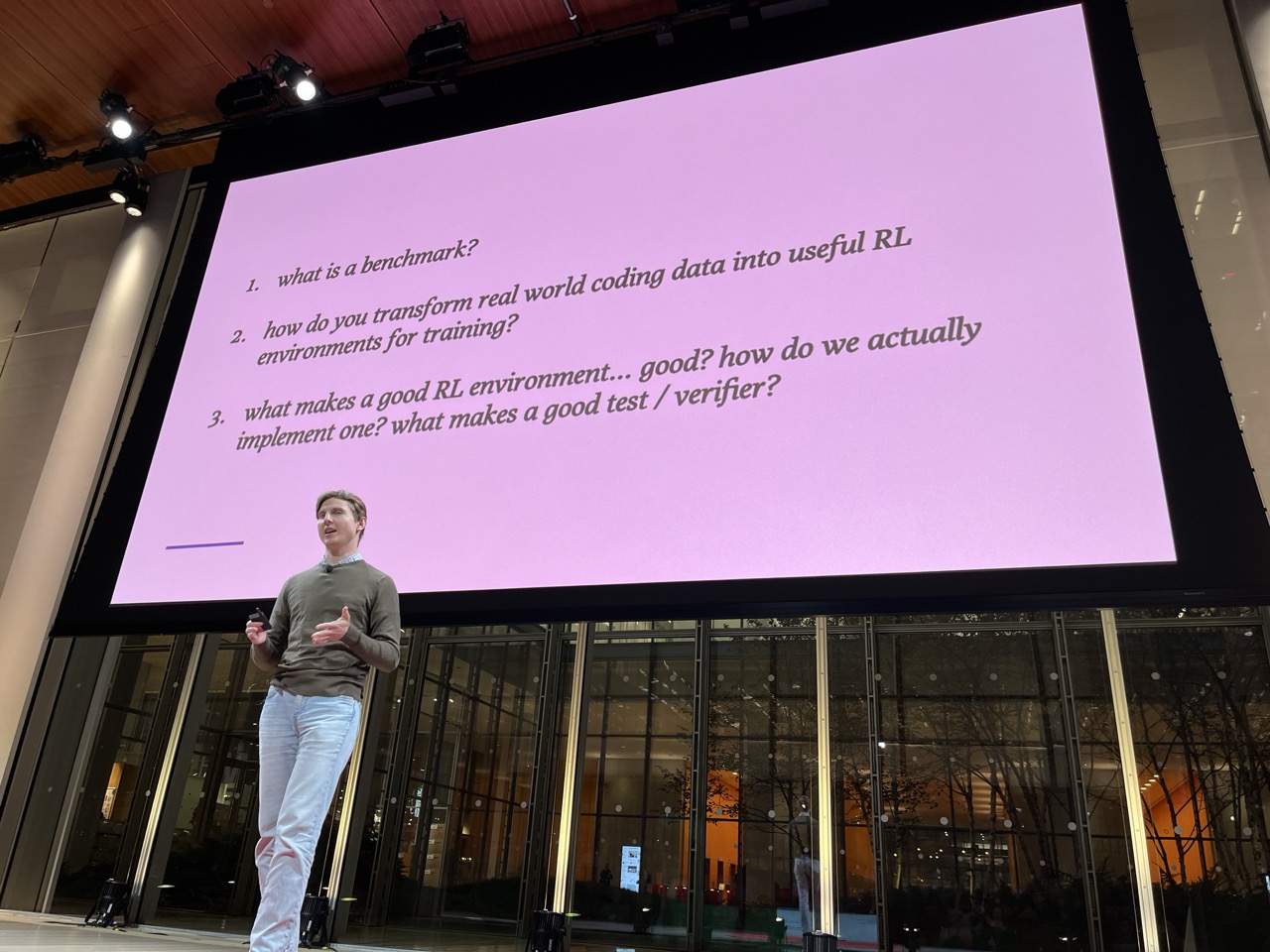

From Code Snippets to Codebases: Then, Now, and What’s Next for Coding Evals

Naman Jain / Cursor

Lots of tips on improving models: you need a good harness and a consistent environment.

Jacob Kahn / Meta

Code World Model: Building World Models for Computation

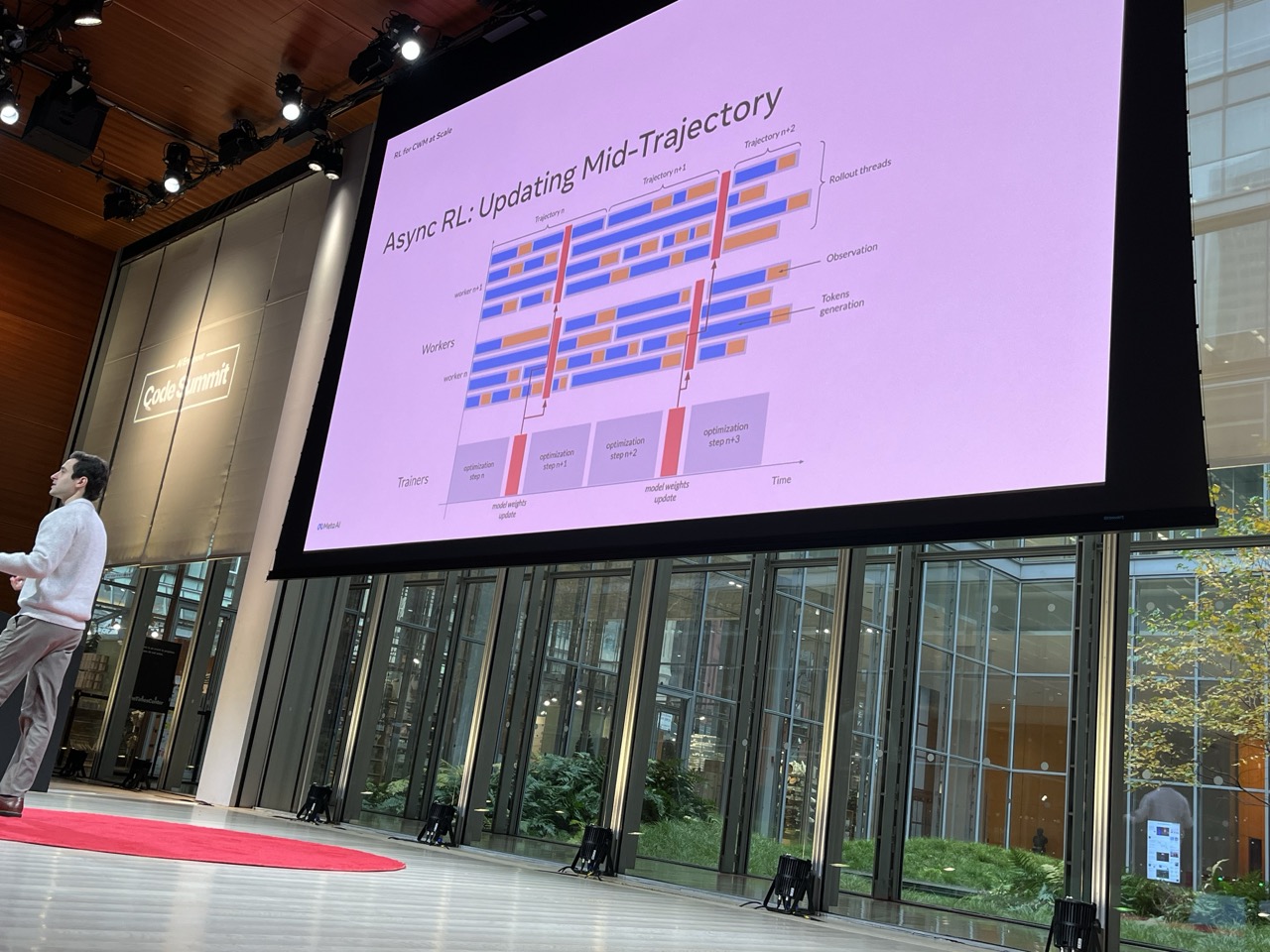

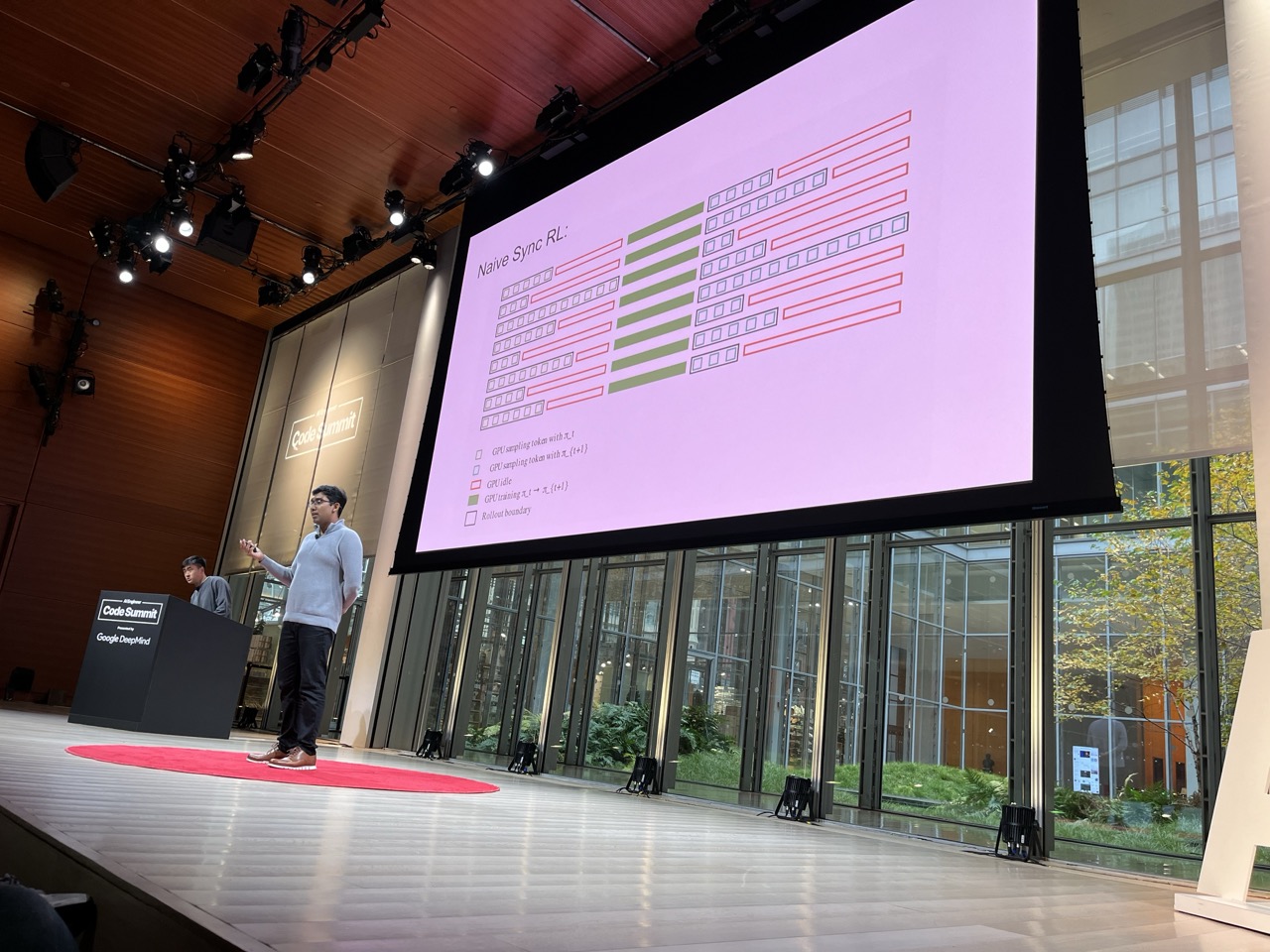

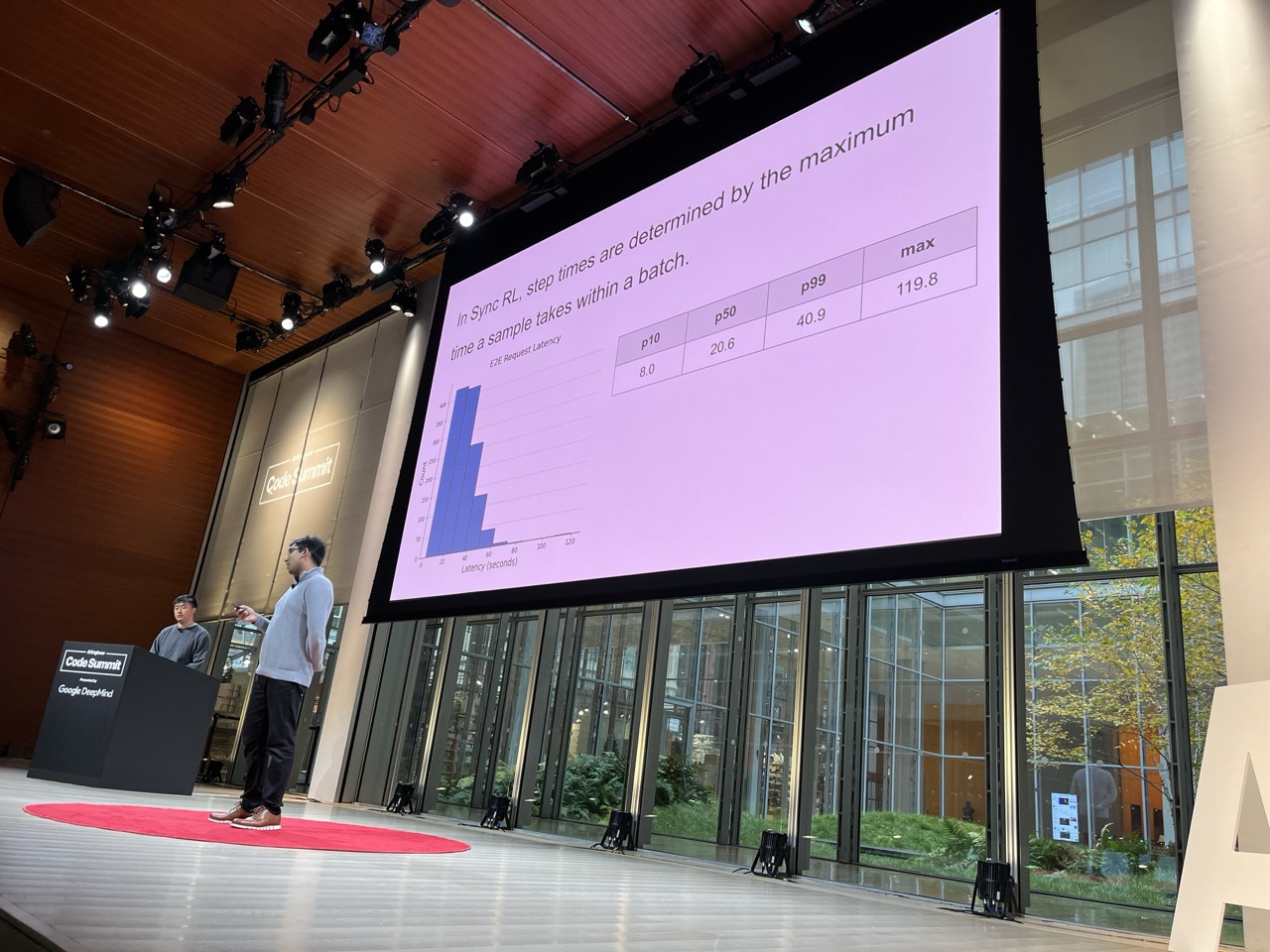

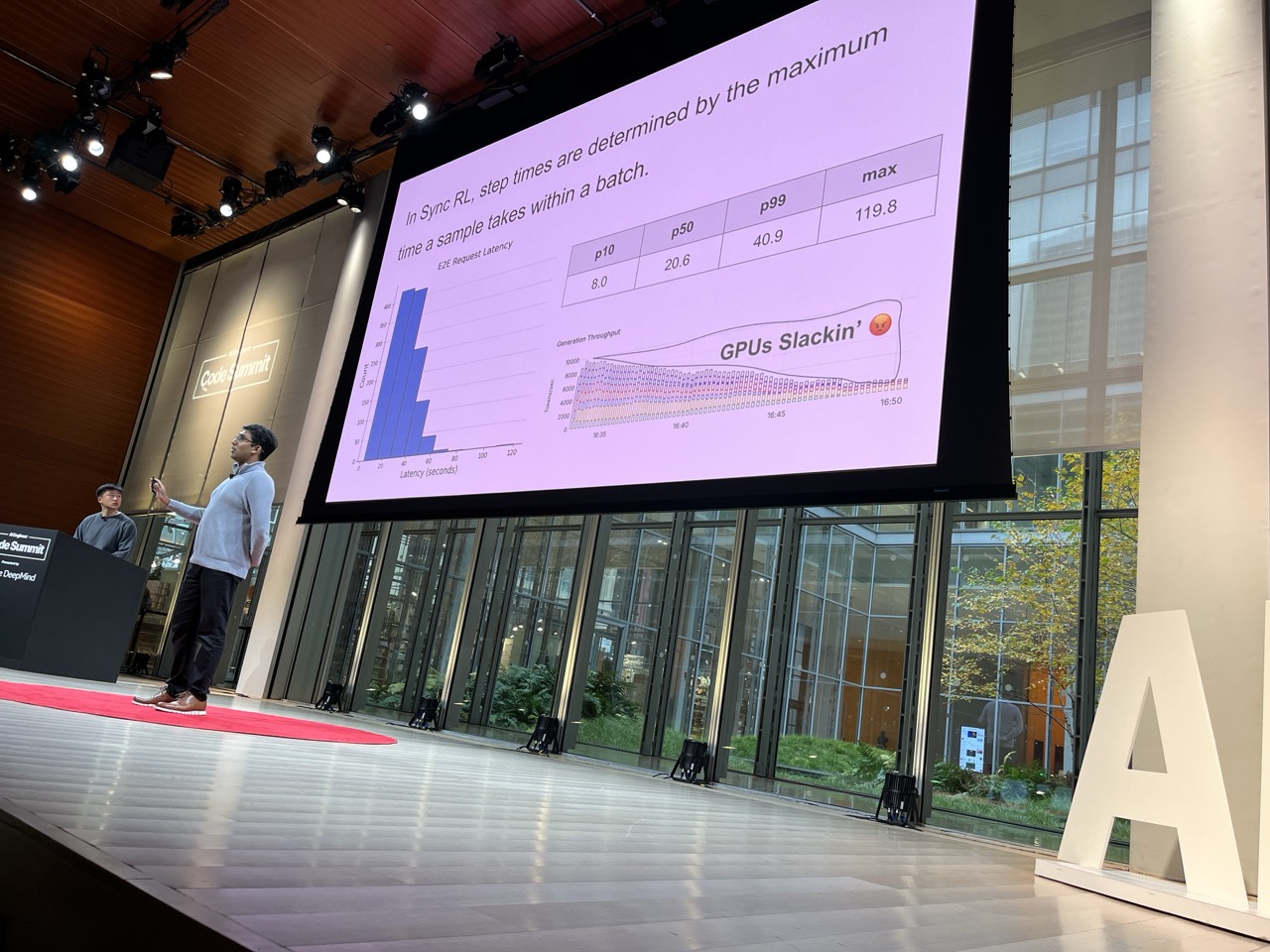

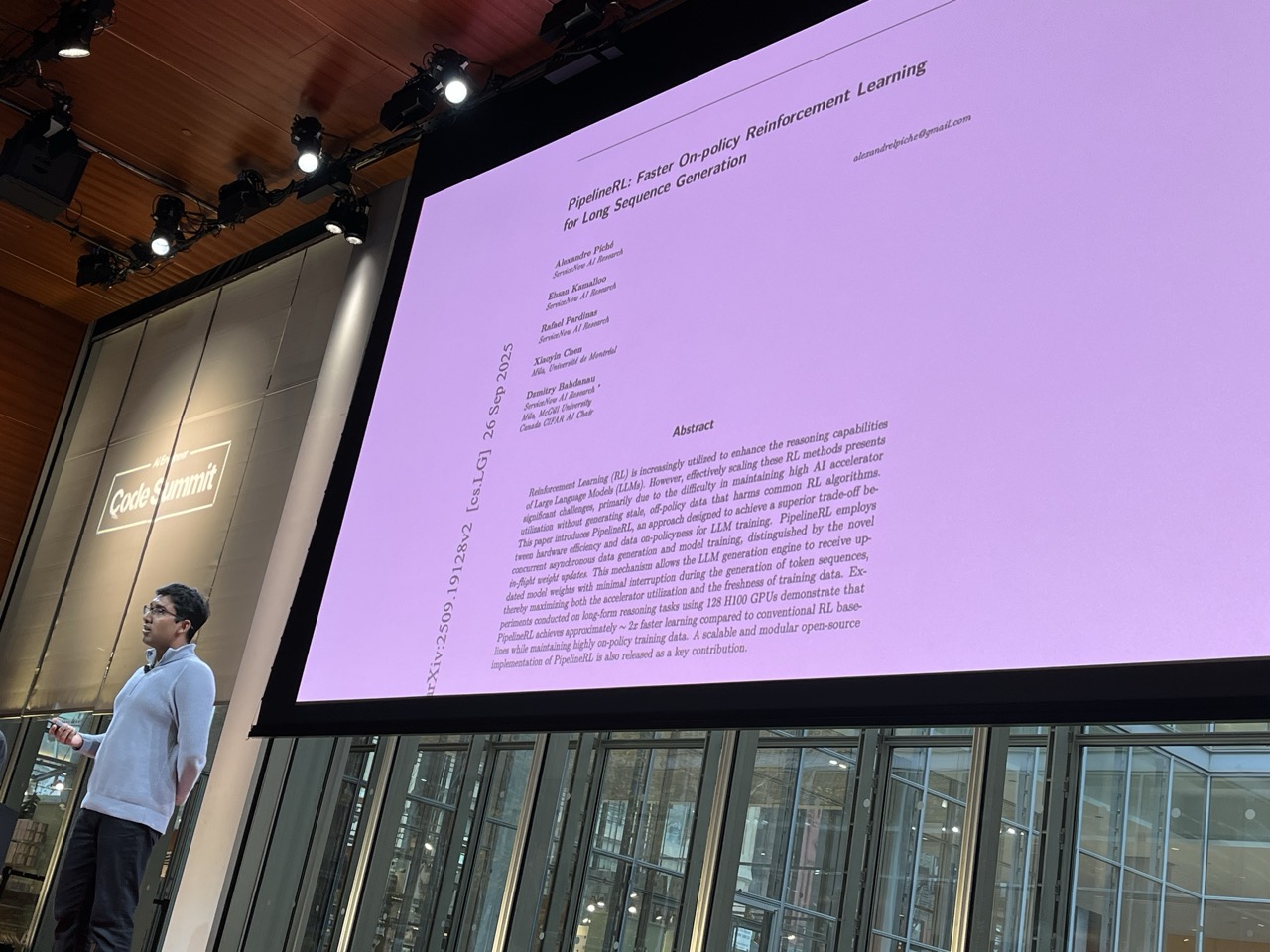

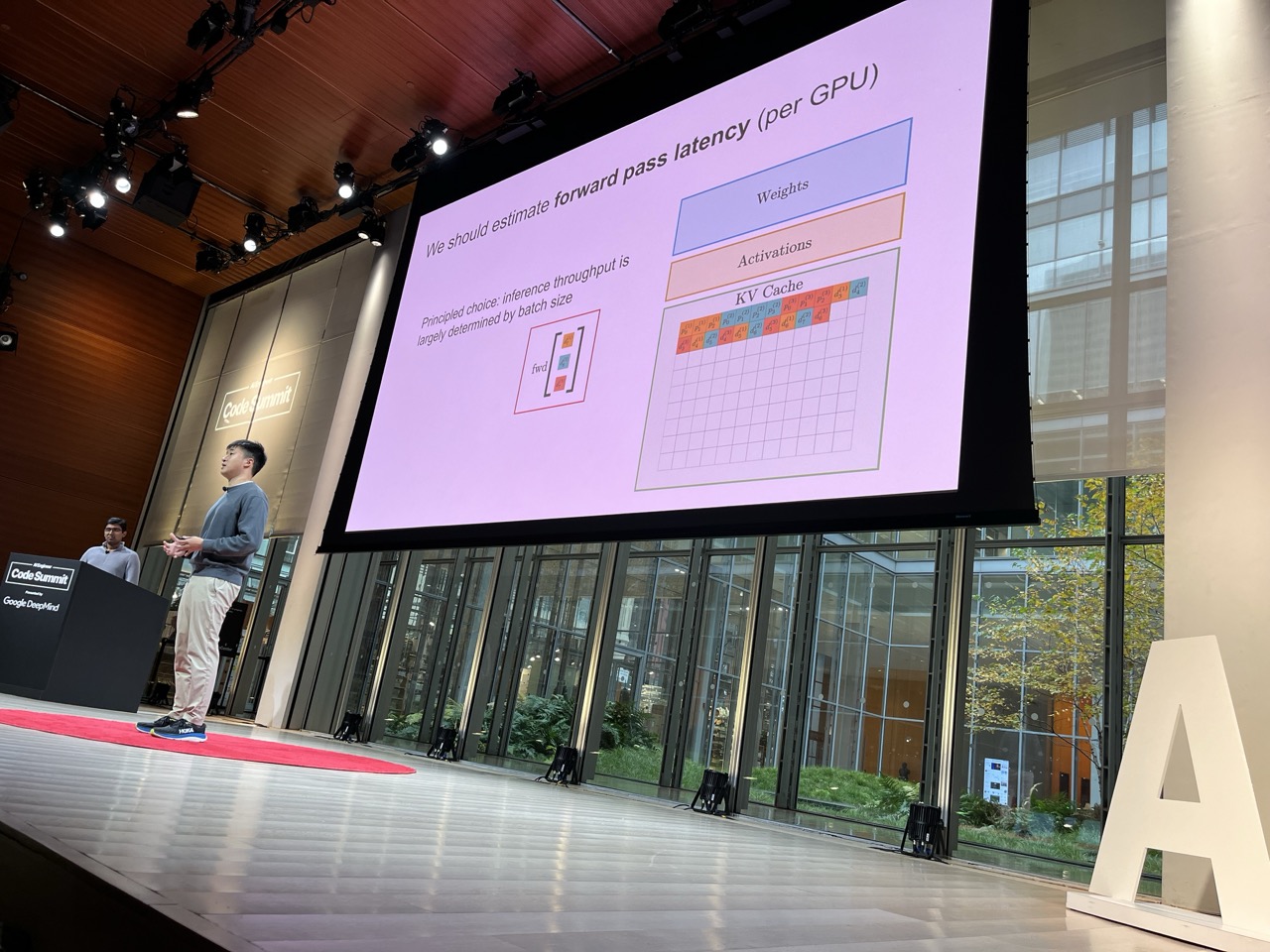

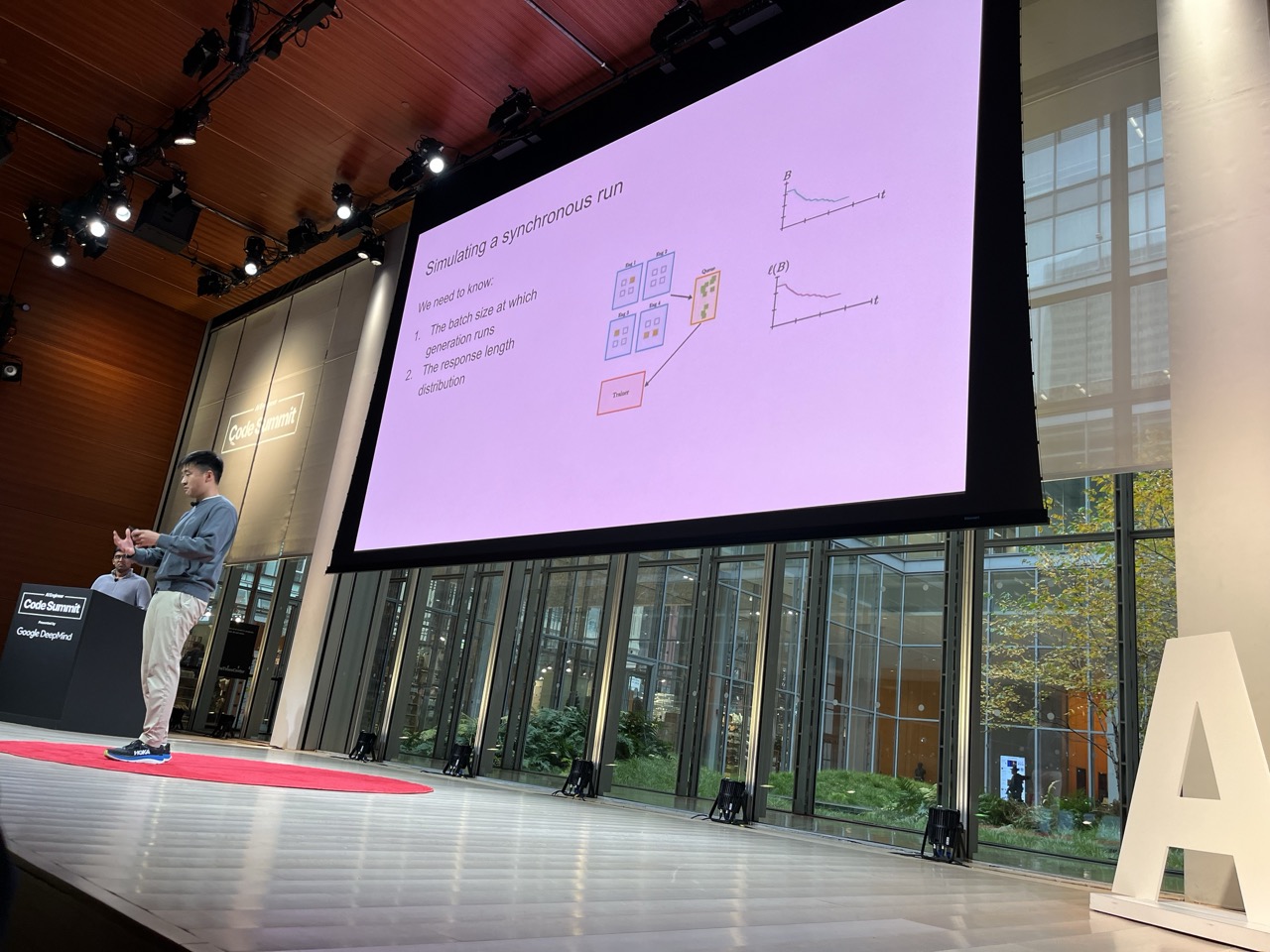

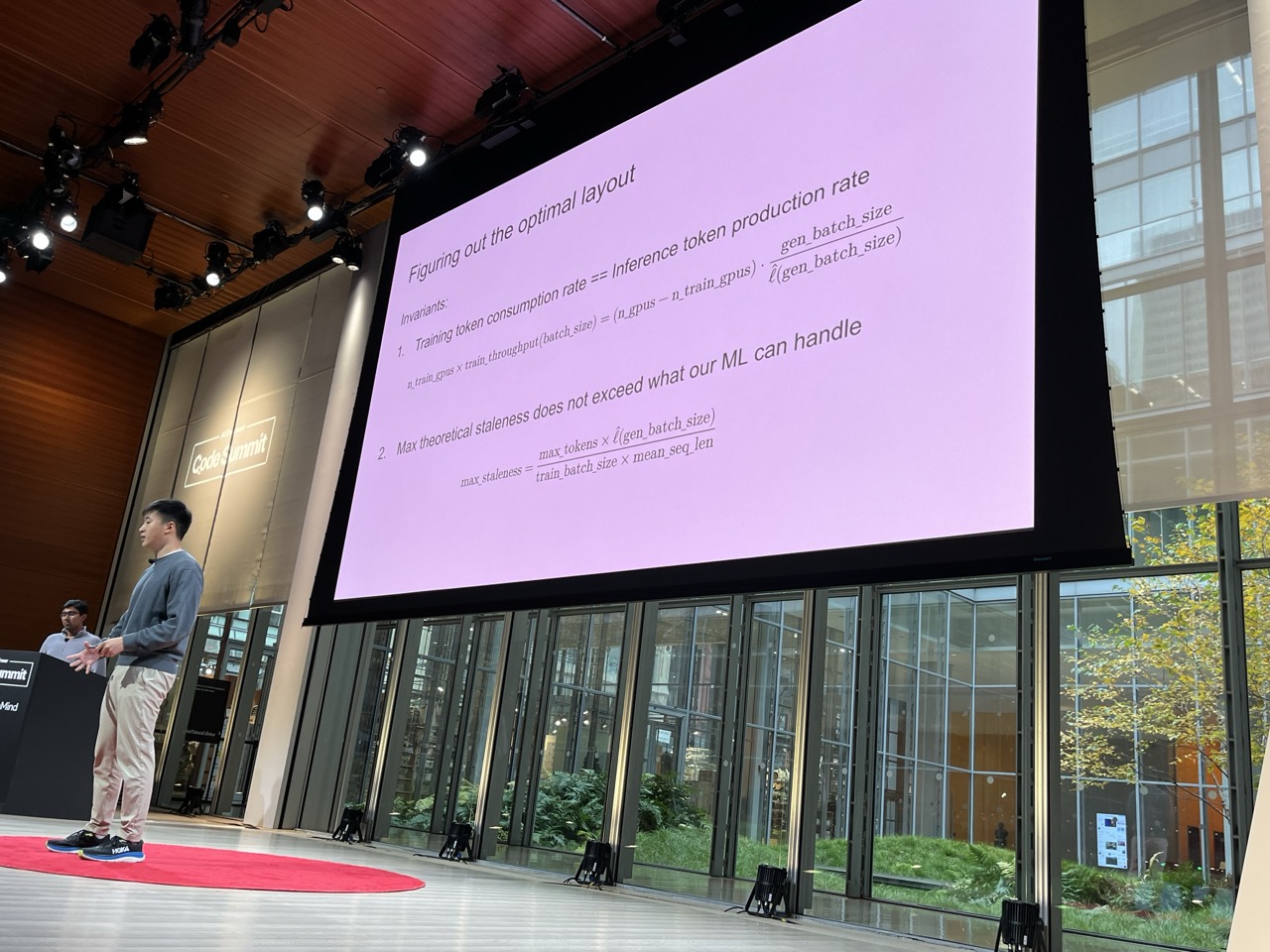

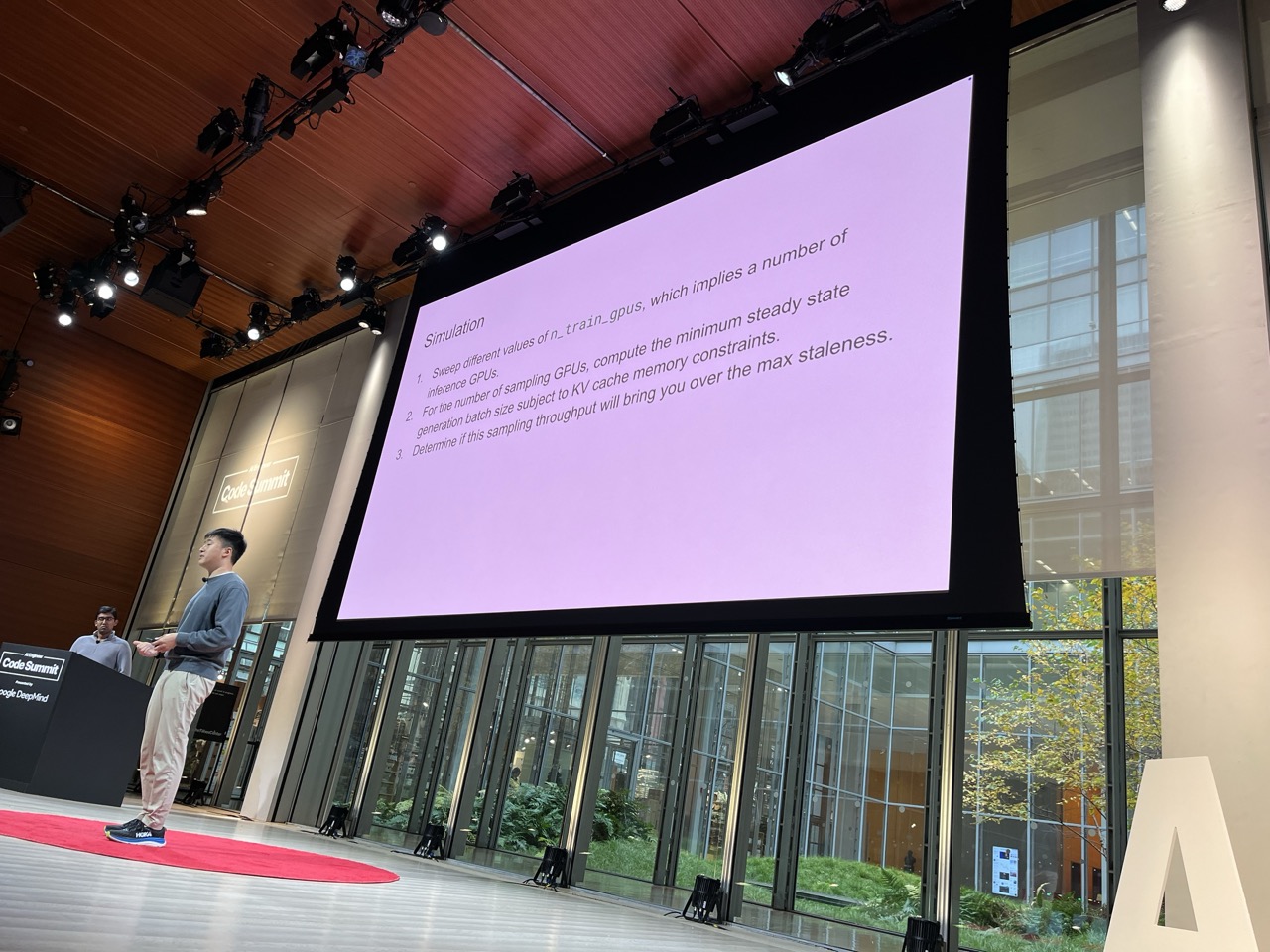

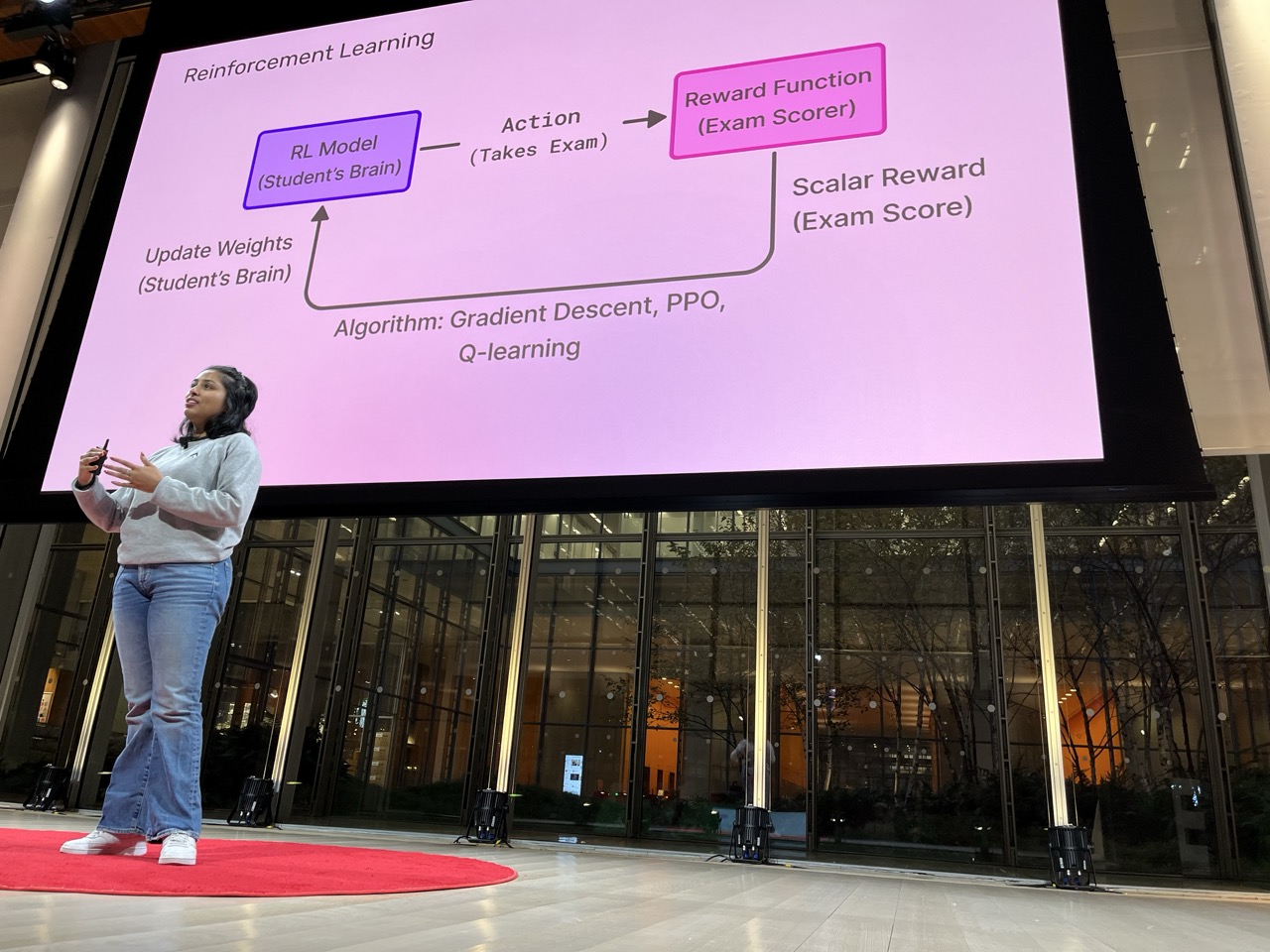

Applied Compute Efficient Reinforcement Learning

Rhythm Garg / Applied Compute, Linden Li

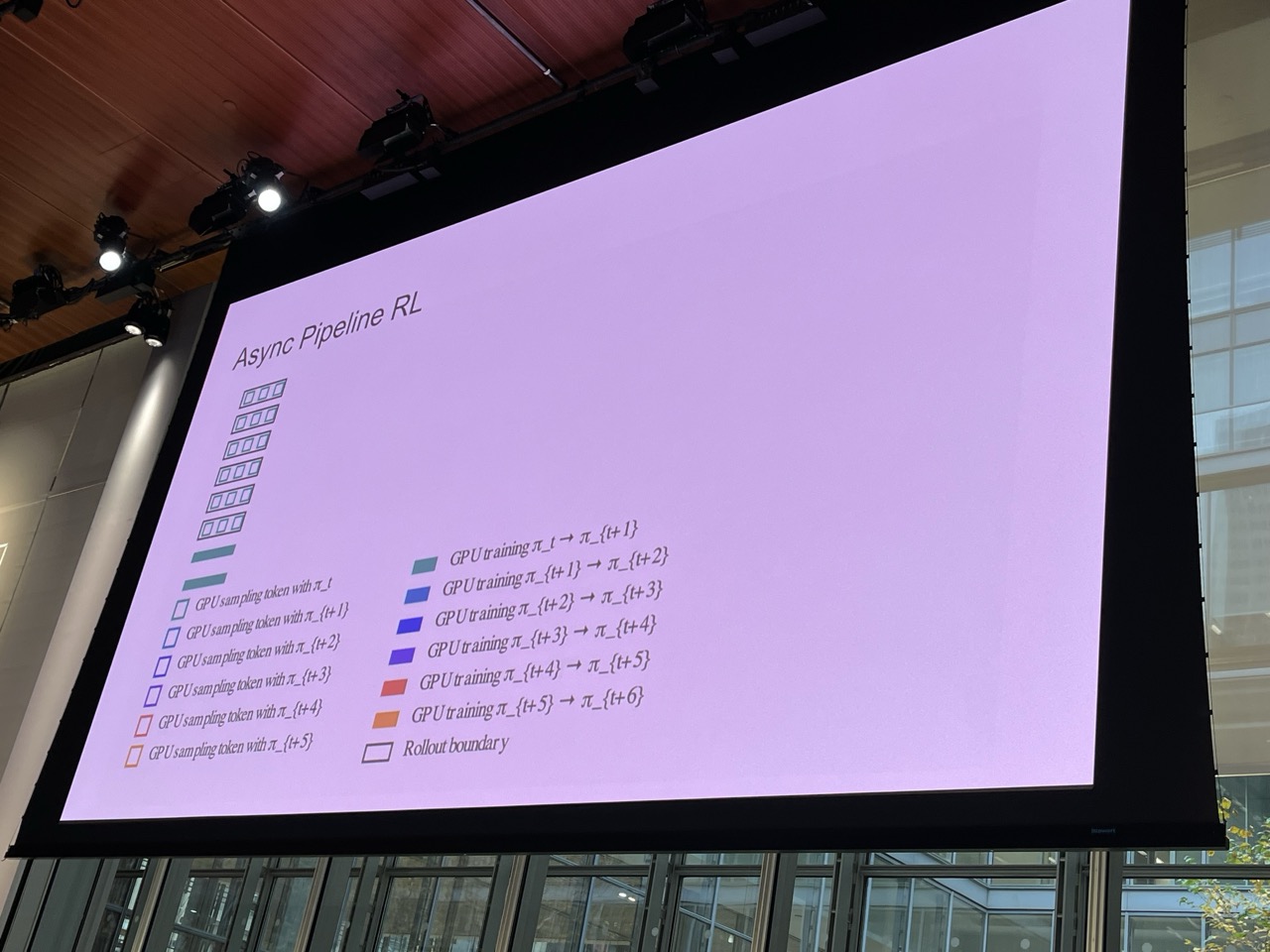

Lots of tips and tricks on making RL runs fast, which they treat as a fundamental requirement. Most notably, they described async RL tooling to maximize GPU usage.
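To make the async idea concrete: rollout workers keep generating with slightly stale weights while the trainer consumes batches, so neither side idles waiting on the other, and samples more than `max_staleness` policy versions old are discarded. This is a toy asyncio sketch of the general pattern under those assumptions, not Applied Compute's tooling; the sleep and the version counter stand in for real generation and weight syncing.

```python
import asyncio
import random
from dataclasses import dataclass


@dataclass
class Rollout:
    policy_version: int  # weights version that generated this sample
    reward: float


async def rollout_worker(queue: asyncio.Queue, state: dict, n: int, seed: int) -> None:
    """Inference side: keeps generating with whatever weights are current, never waiting for the trainer."""
    rng = random.Random(seed)
    for _ in range(n):
        await asyncio.sleep(0)  # stand-in for a slow generation call
        await queue.put(Rollout(state["version"], rng.random()))


async def trainer(queue: asyncio.Queue, state: dict, steps: int, batch: int,
                  max_staleness: int) -> dict:
    """Training side: takes batches as they arrive and drops samples that are too off-policy."""
    stats = {"used": 0, "dropped": 0}
    for _ in range(steps):
        samples = [await queue.get() for _ in range(batch)]
        fresh = [r for r in samples if state["version"] - r.policy_version <= max_staleness]
        stats["used"] += len(fresh)
        stats["dropped"] += len(samples) - len(fresh)
        state["version"] += 1  # stand-in for a gradient step plus weight sync to the workers
    return stats


async def run_async_rl(workers: int = 4, per_worker: int = 8, batch: int = 4,
                       max_staleness: int = 1) -> dict:
    queue: asyncio.Queue = asyncio.Queue()
    state = {"version": 0}
    steps = workers * per_worker // batch
    producers = [asyncio.create_task(rollout_worker(queue, state, per_worker, seed))
                 for seed in range(workers)]
    stats = await trainer(queue, state, steps, batch, max_staleness)
    await asyncio.gather(*producers)
    return stats
```

The staleness bound is the design knob: a larger bound keeps GPUs busier but trains on more off-policy data.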

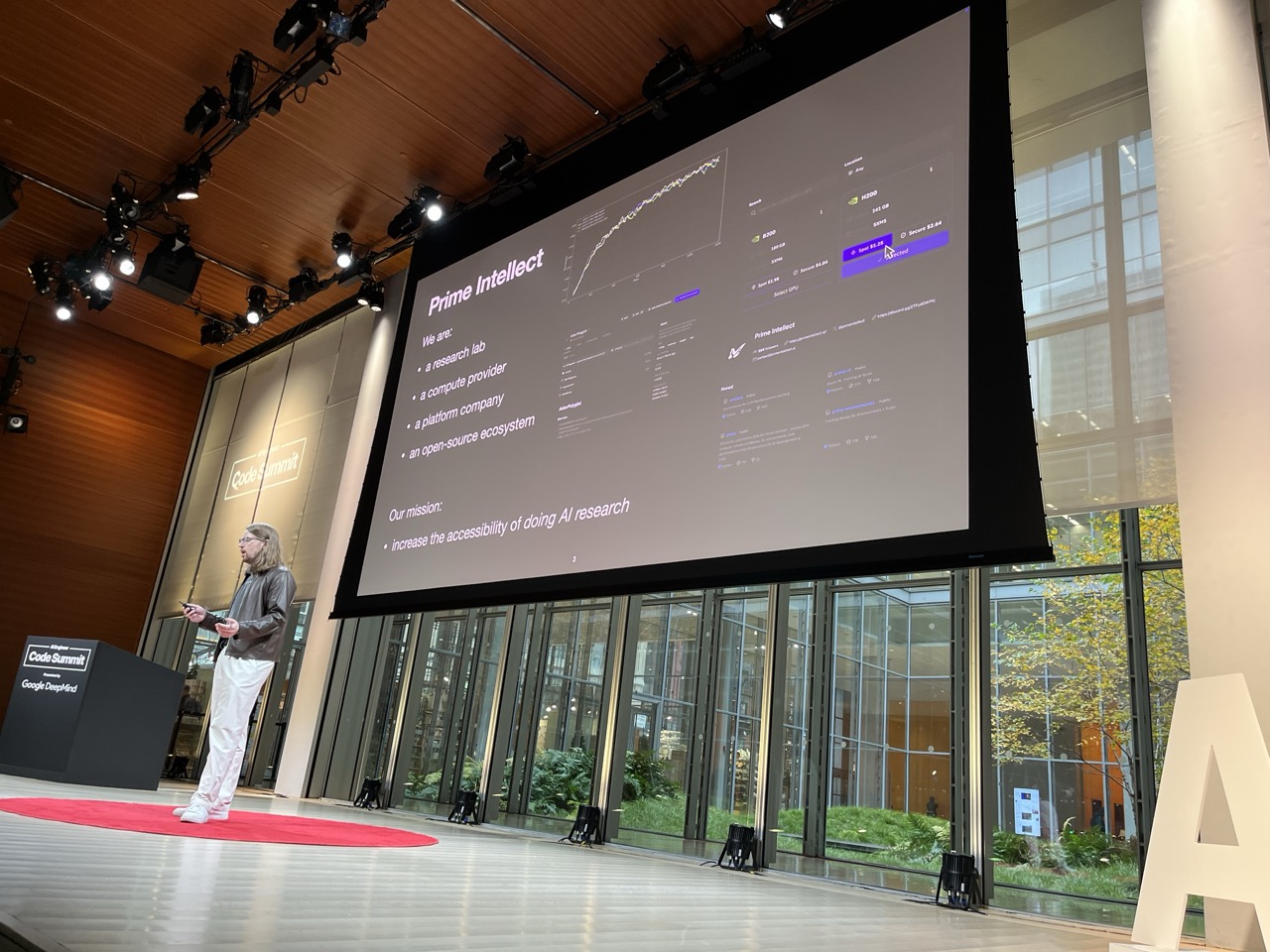

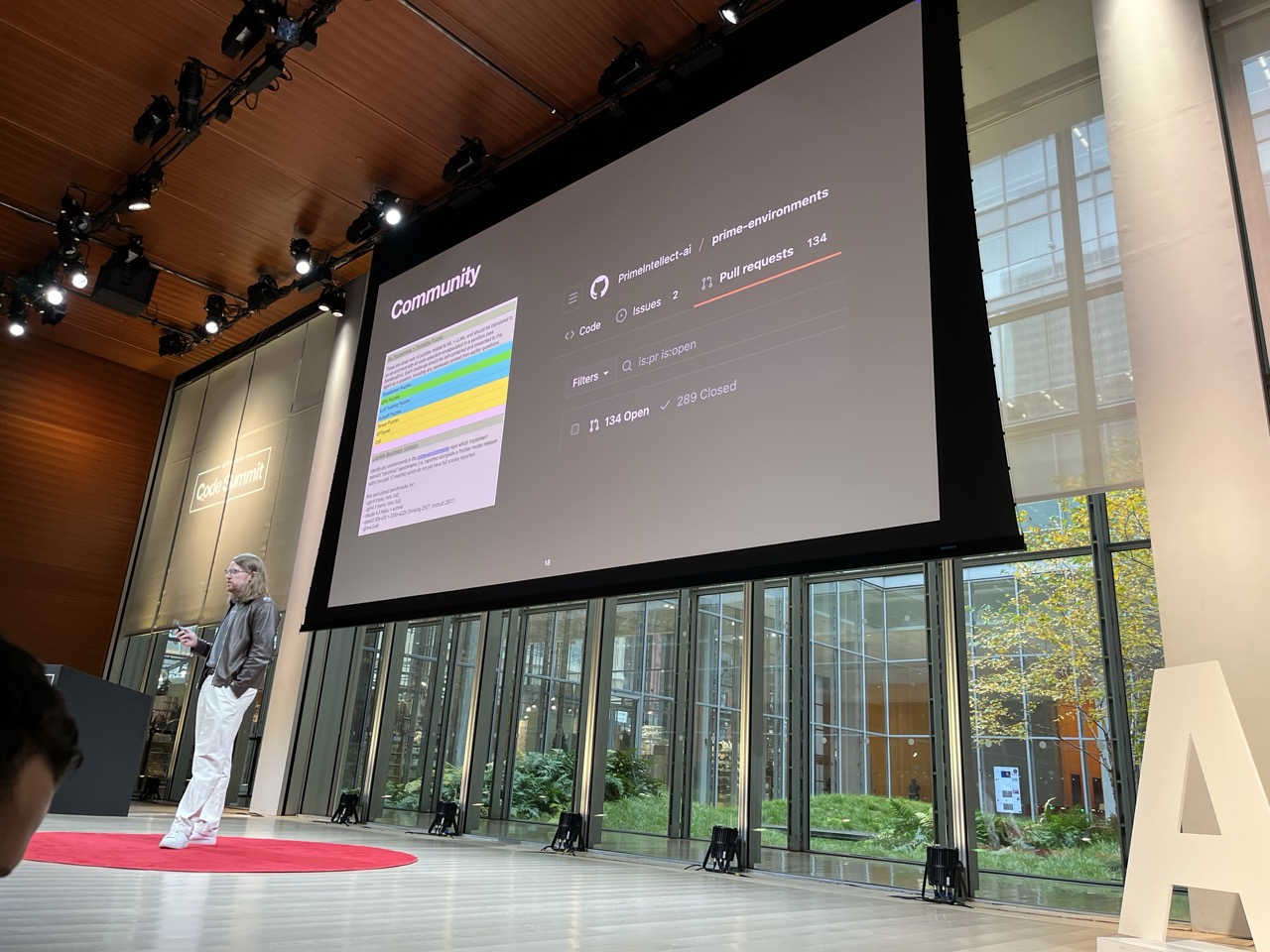

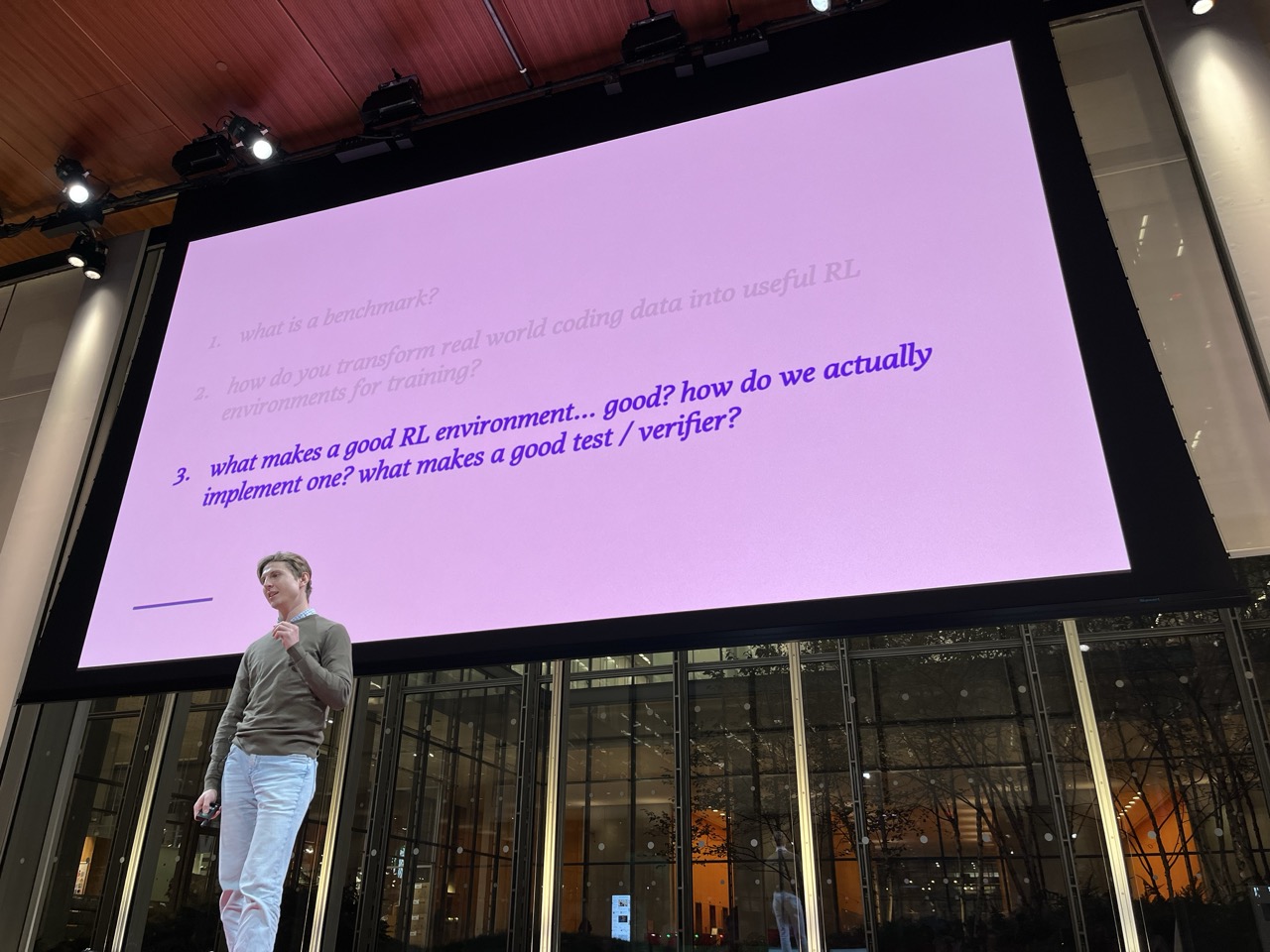

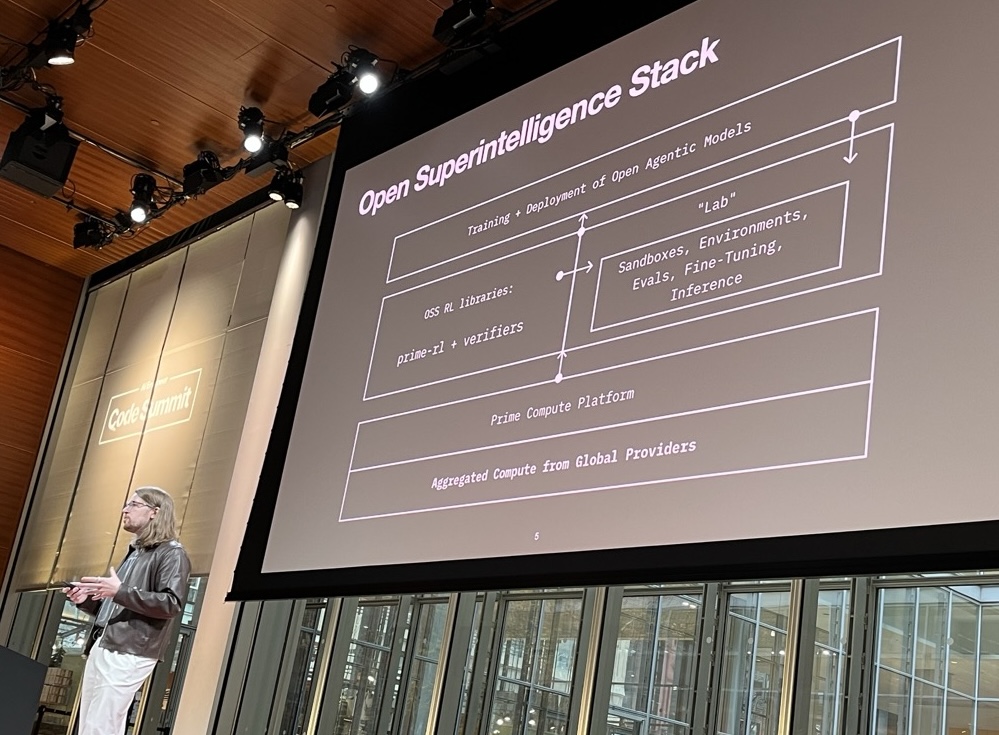

RL Environments at Scale

Will Brown / Prime Intellect

Very interesting: a platform for establishing environments and running RL within them. The aim is to expand the number of people doing AI research by standardizing environments and making runs reproducible.
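A toy illustration of why standardized environments help reproducibility: if an environment is just a seeded task generator plus a deterministic scoring rule, anyone can rerun it and get identical prompts and rewards. This is a hypothetical interface for illustration, not Prime Intellect's actual environment API.

```python
import random
from dataclasses import dataclass, field


@dataclass
class AdditionEnv:
    """Toy single-turn environment: a seeded task generator plus a deterministic scoring rule."""
    seed: int
    a: int = field(init=False, default=0)
    b: int = field(init=False, default=0)

    def reset(self) -> str:
        rng = random.Random(self.seed)  # same seed -> same task on every run
        self.a, self.b = rng.randint(0, 99), rng.randint(0, 99)
        return f"What is {self.a} + {self.b}? Answer with just the number."

    def score(self, completion: str) -> float:
        """Reward 1.0 for the exact answer, else 0.0."""
        return 1.0 if completion.strip() == str(self.a + self.b) else 0.0
```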

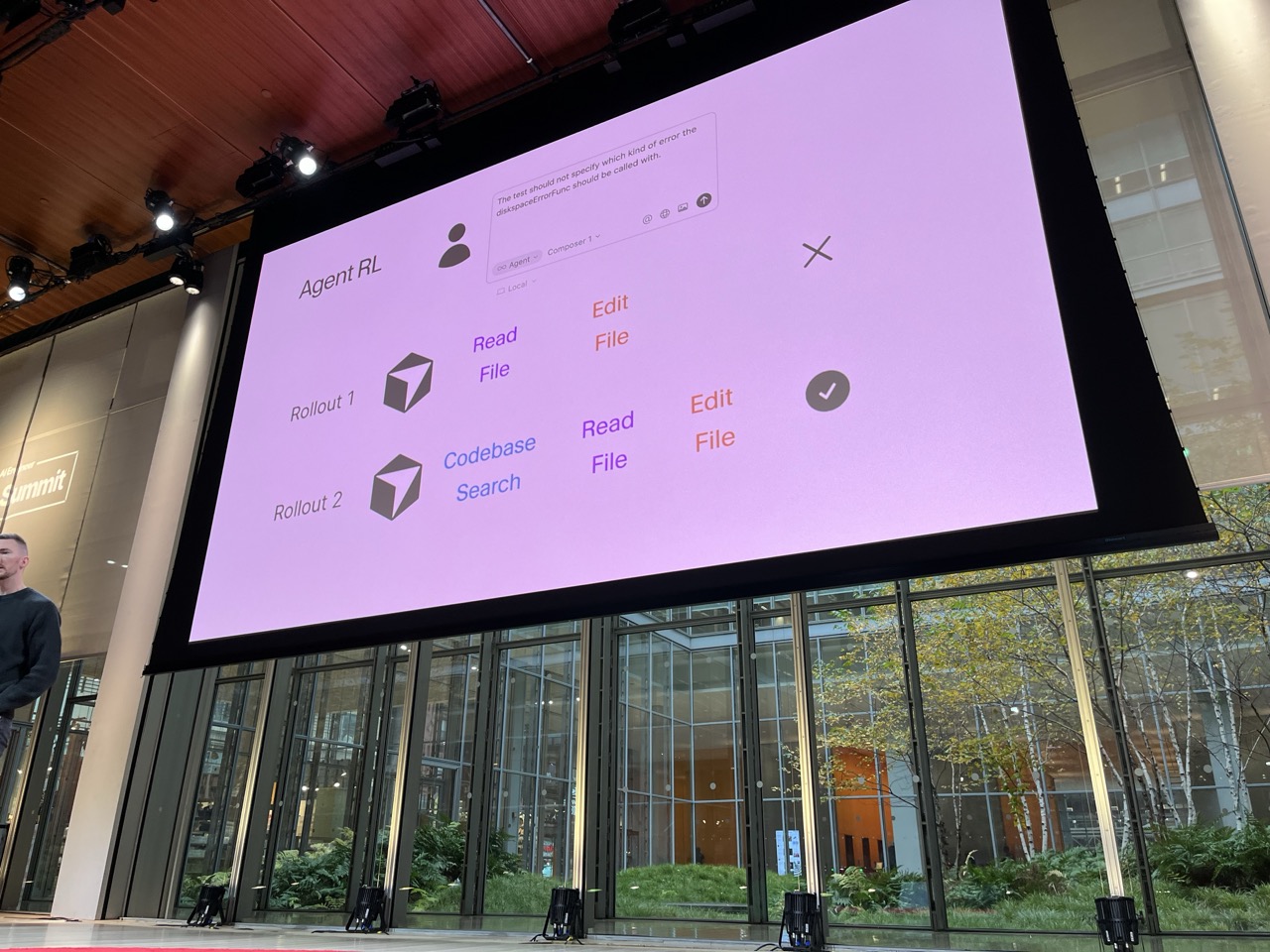

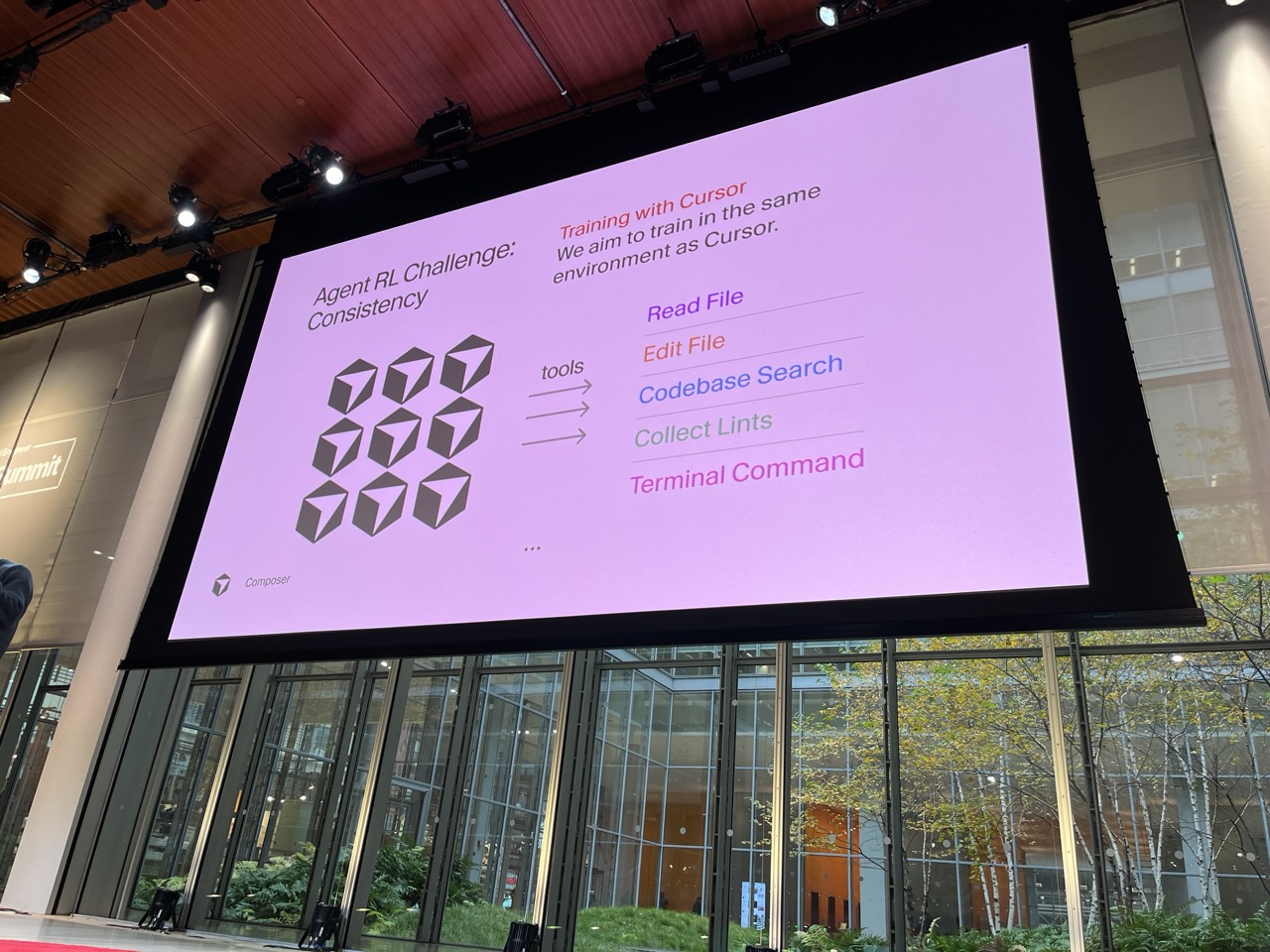

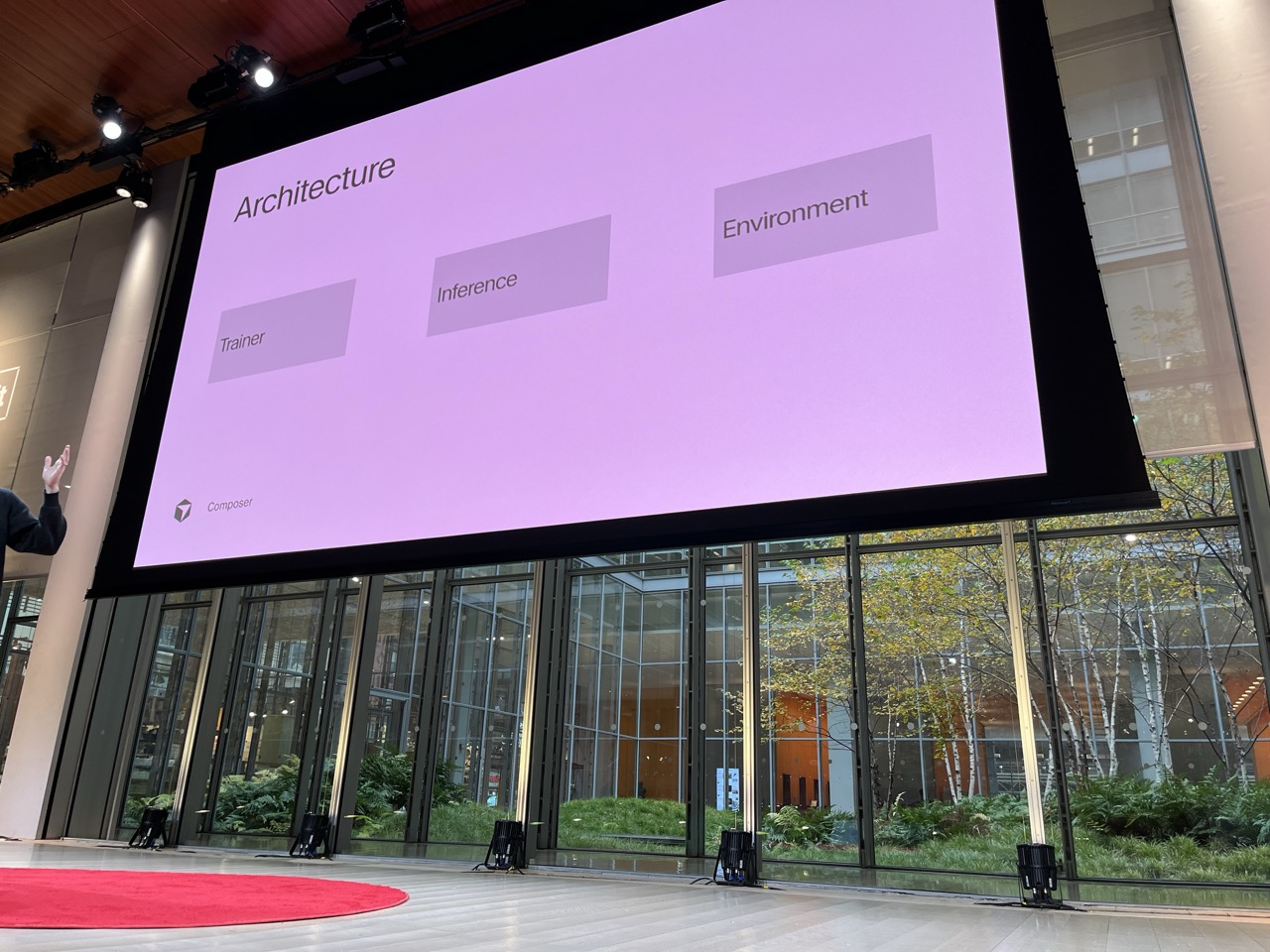

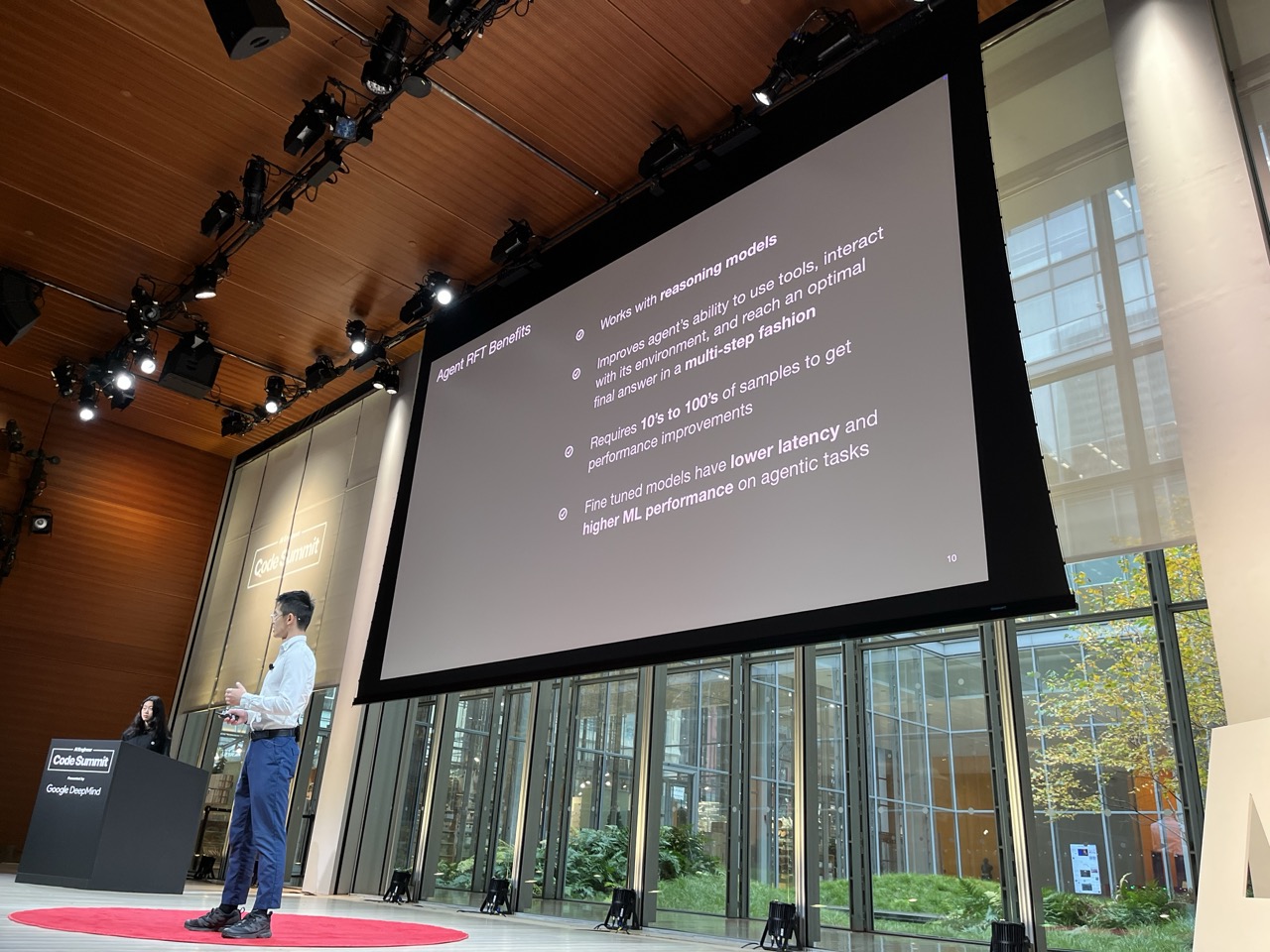

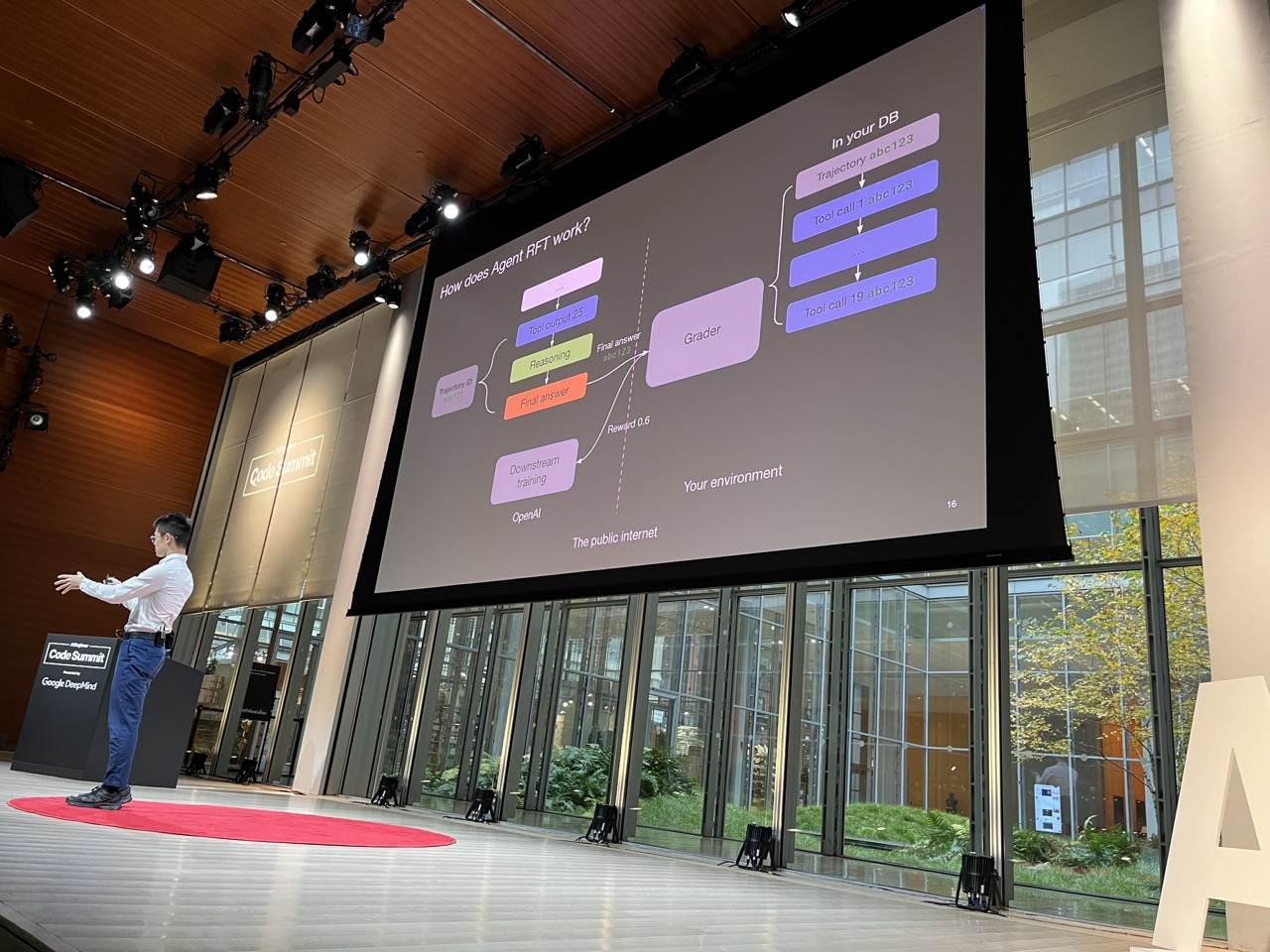

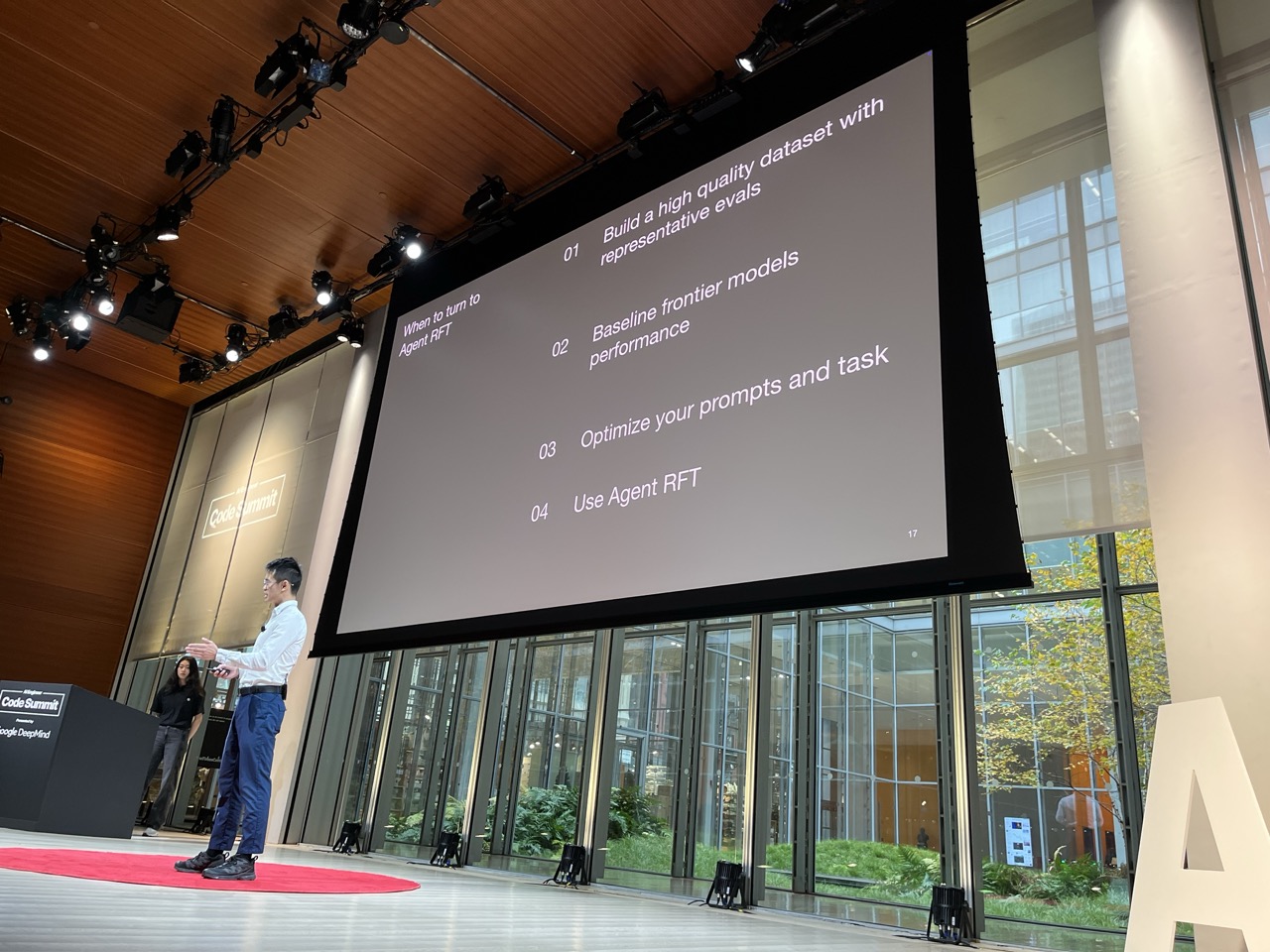

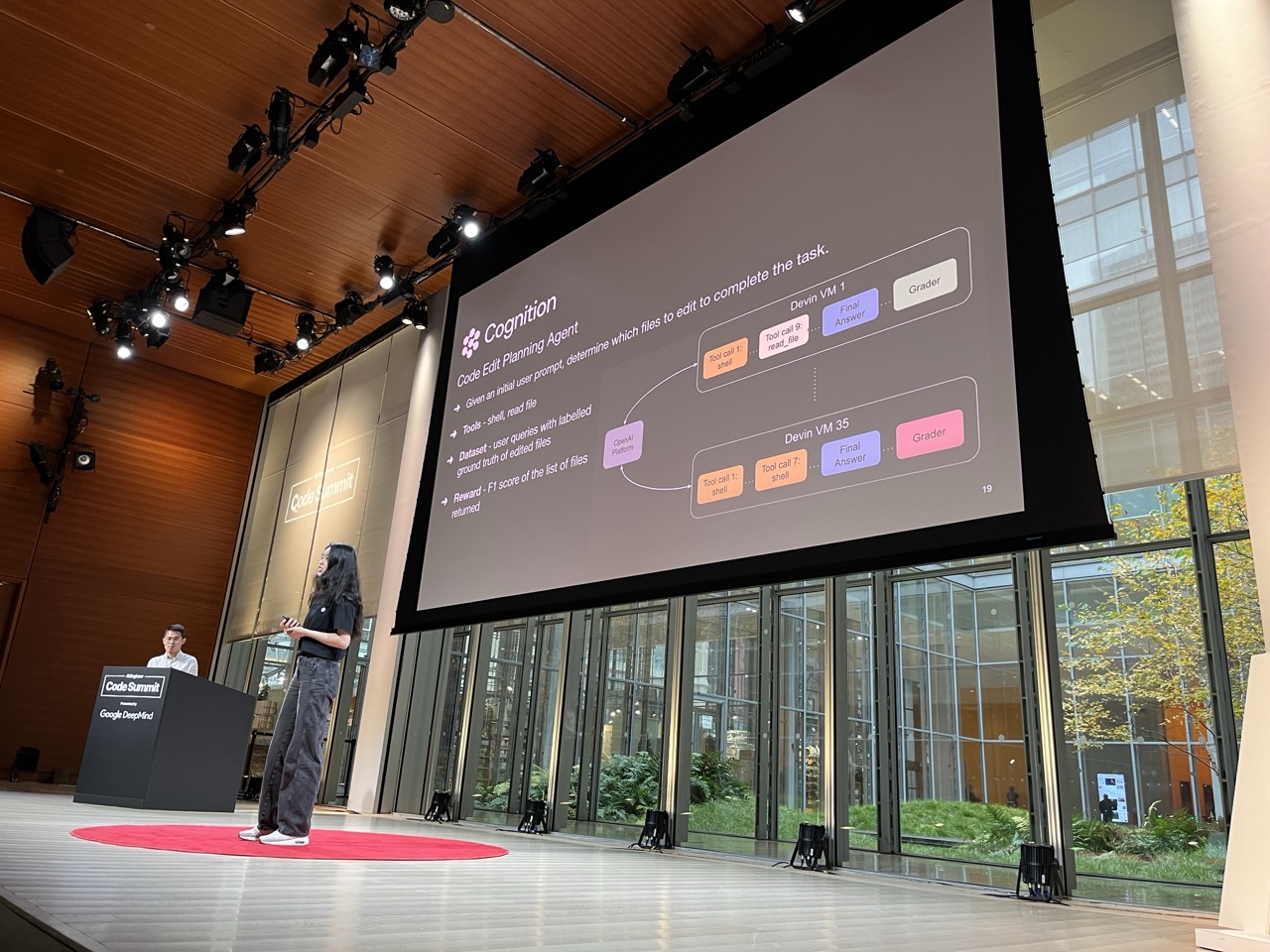

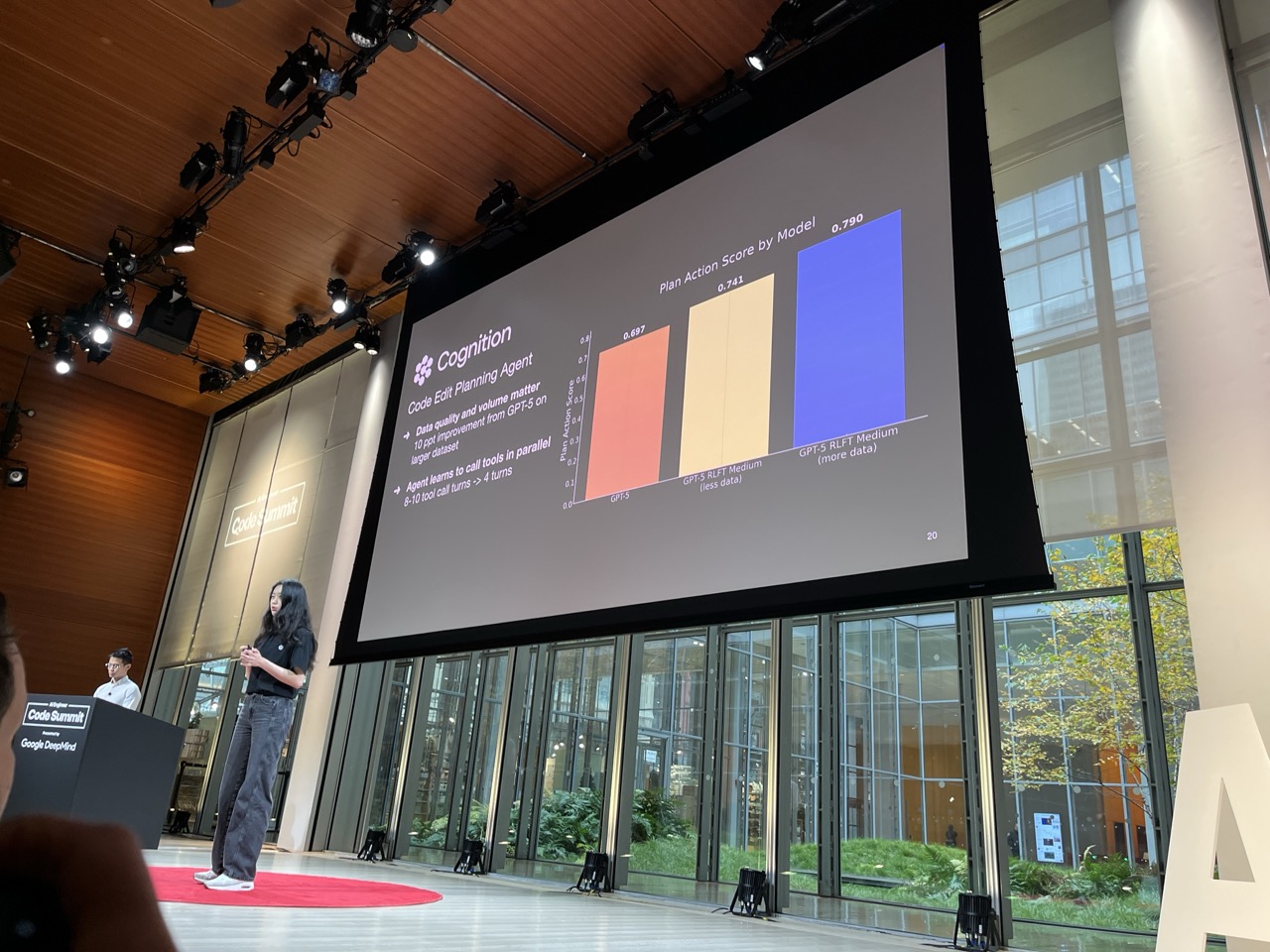

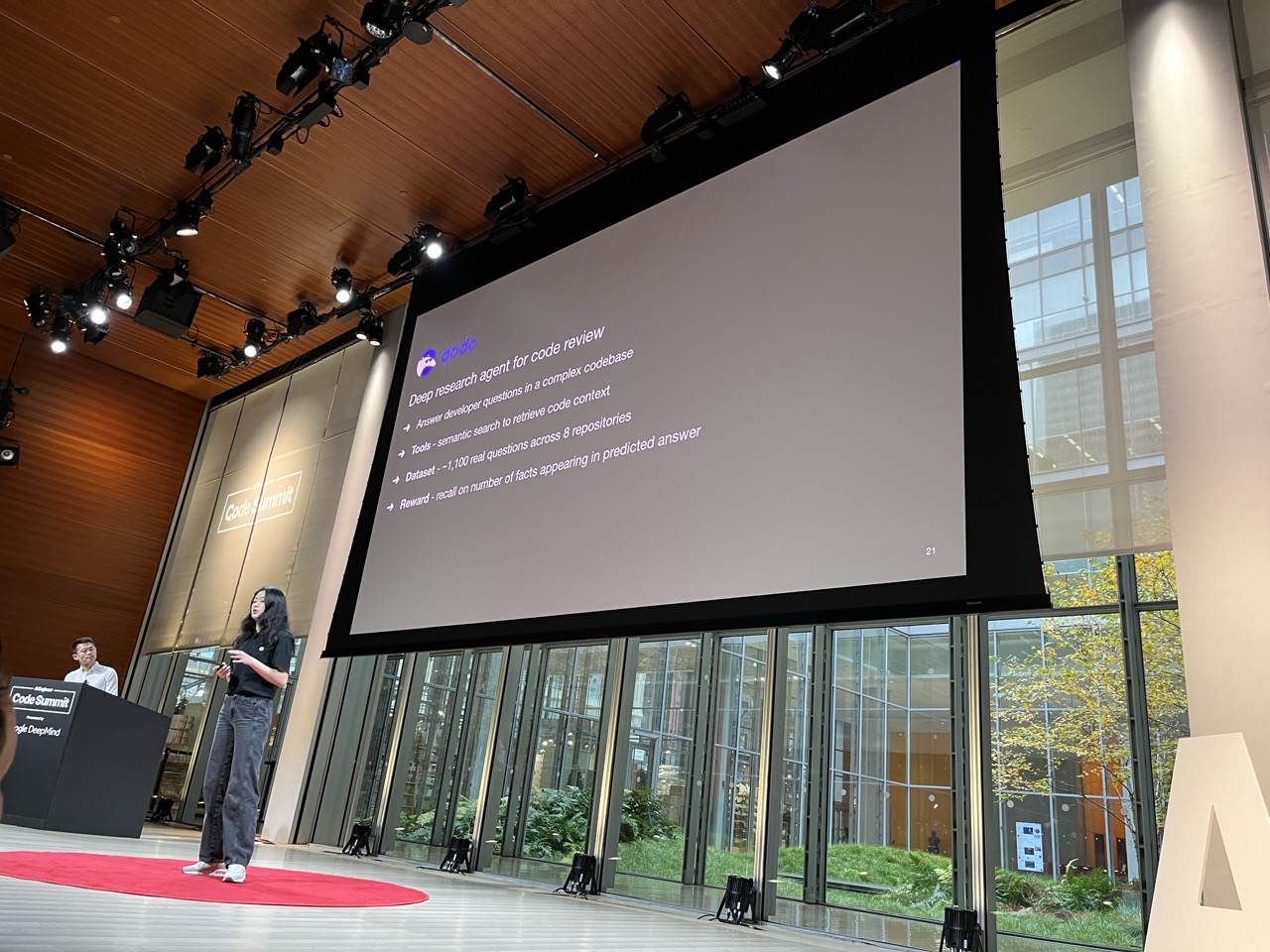

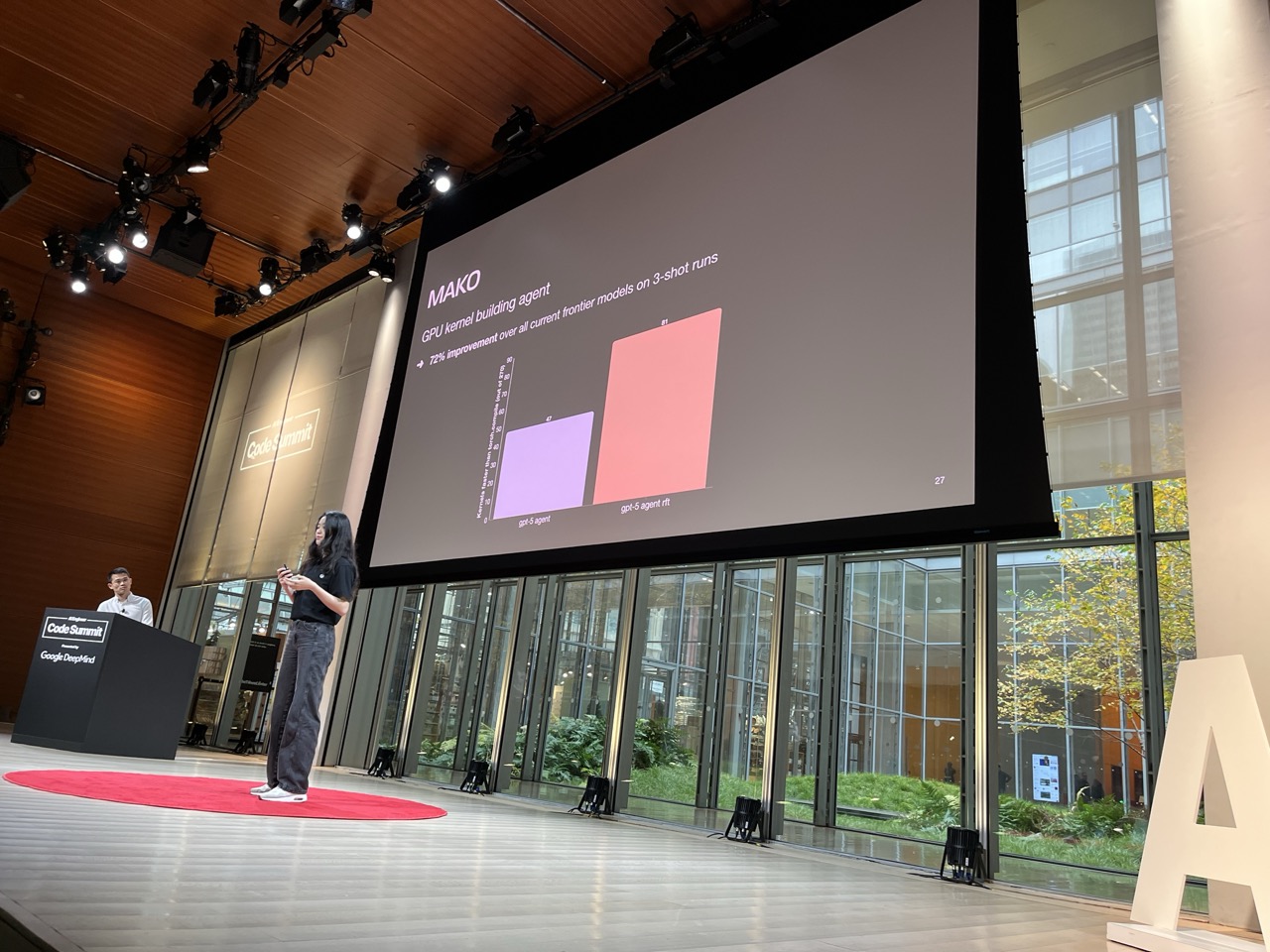

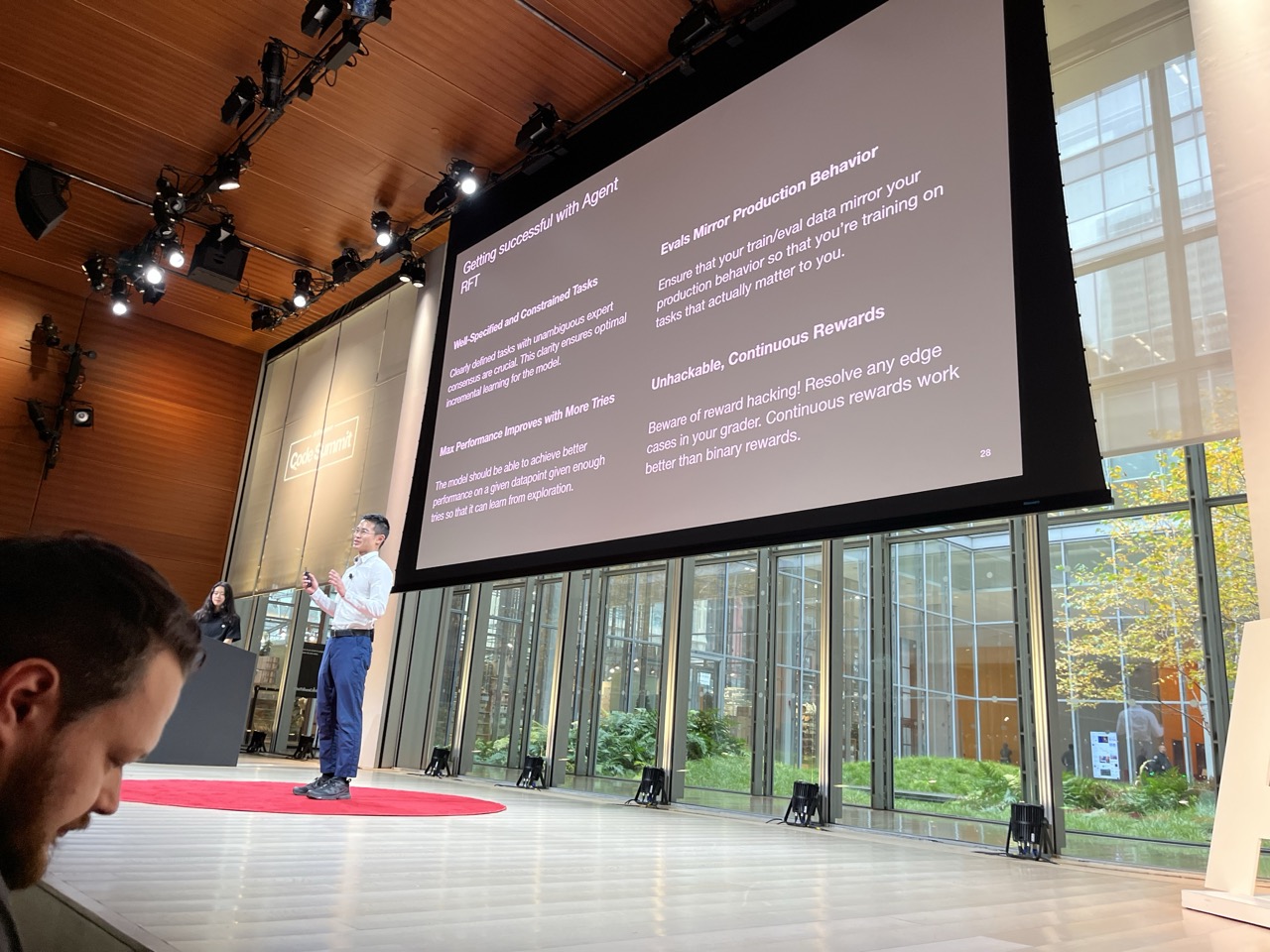

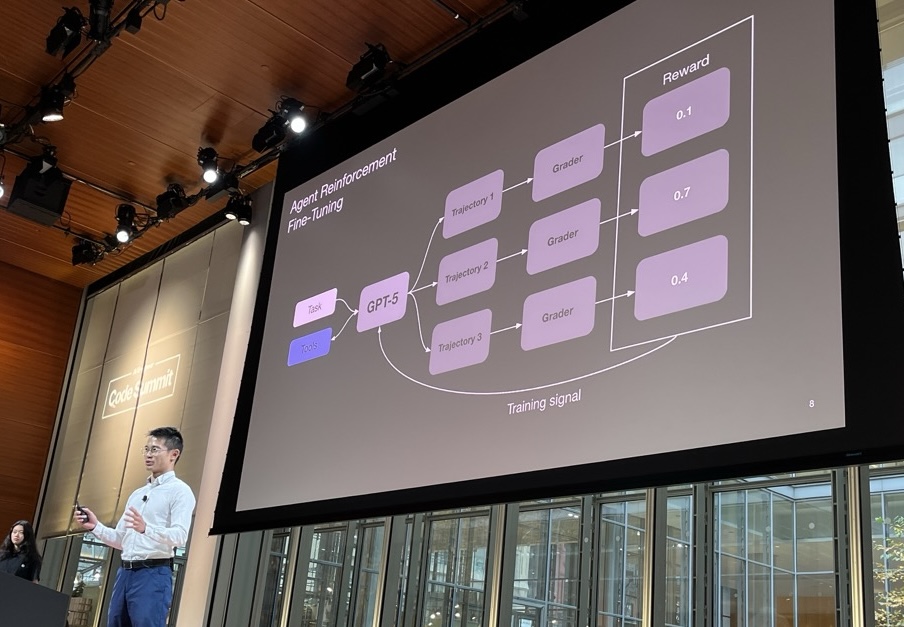

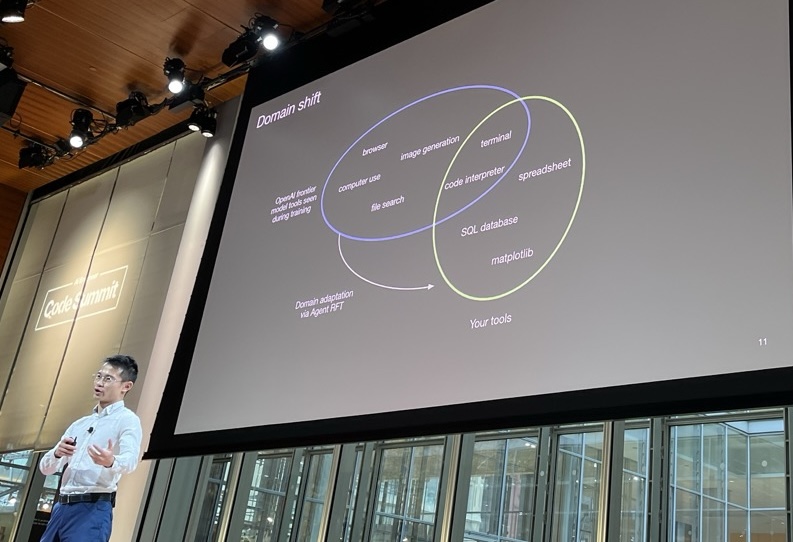

Agent Reinforcement Fine Tuning

Will Hang / OpenAI, Cathy Zhou / OpenAI

Agent RL trains agentic behavior so that an agent gets really good at a task:

- The environment in which you train is REALLY important and any drift between training env and actual env can lead to issues

- A number of successes in training an Agent to get better at Code Review or Coding

- Code and coding are the tasks that seem most likely to benefit from RL given their tight feedback cycles

- RL rewards are hackable and need to be monitored very closely
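One way to act on “rewards are hackable”: alongside the training reward, run an independent check the policy is never optimized against (for coding, e.g. held-out tests) and alert when high-reward episodes start failing it. A hedged sketch; the thresholds and the `holdout_passed` signal are illustrative assumptions, not OpenAI's method.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    reward: float          # what the training reward function said
    holdout_passed: bool   # independent check the policy is never optimized against


def hacking_alert(episodes: list[Episode], high_reward: float = 0.9,
                  max_suspect_rate: float = 0.1) -> tuple[float, bool]:
    """Fraction of high-reward episodes that fail the independent check, and whether it exceeds the limit."""
    high = [e for e in episodes if e.reward >= high_reward]
    if not high:
        return 0.0, False
    suspect_rate = sum(0.0 if e.holdout_passed else 1.0 for e in high) / len(high)
    return suspect_rate, suspect_rate > max_suspect_rate
```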

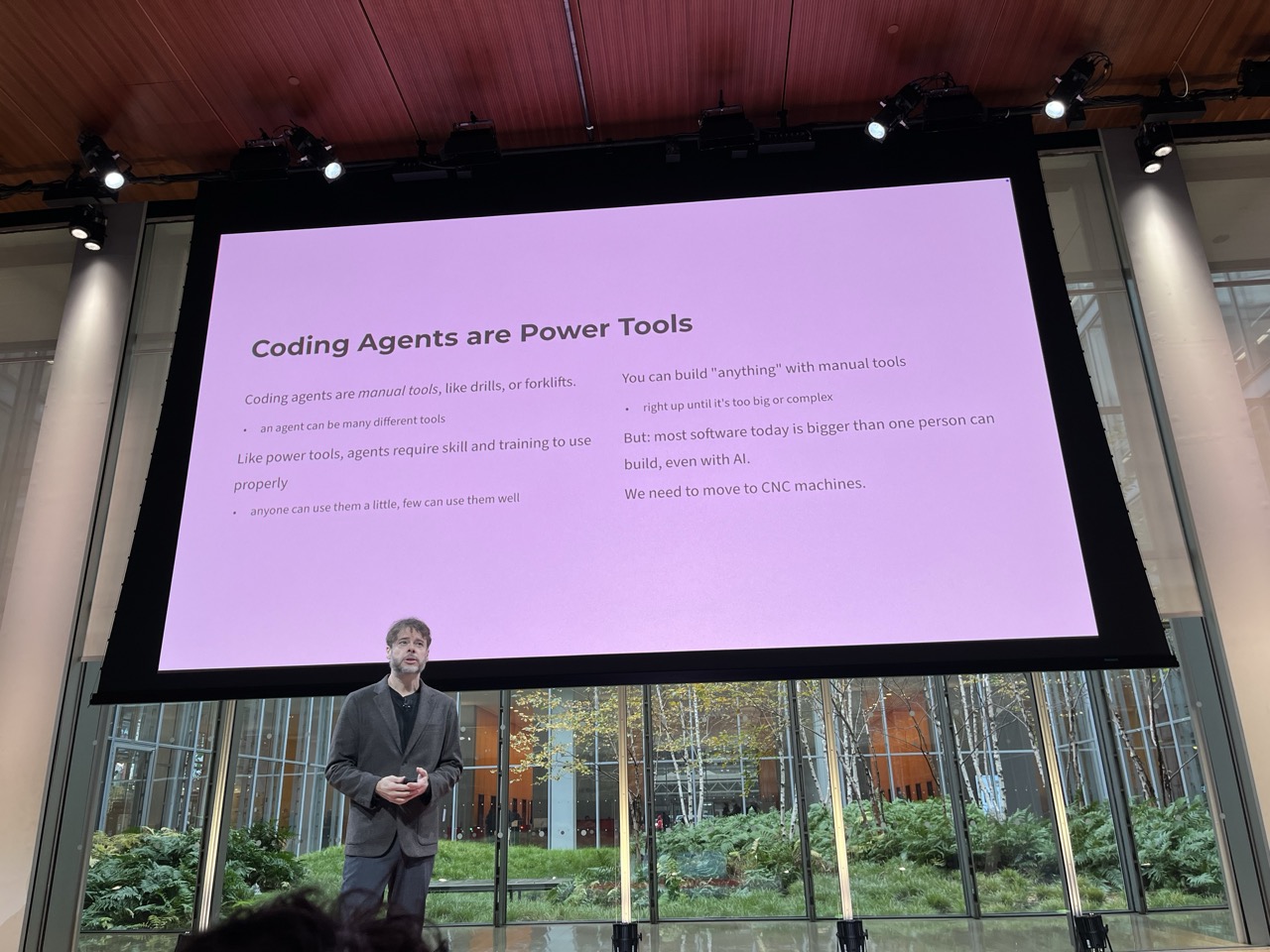

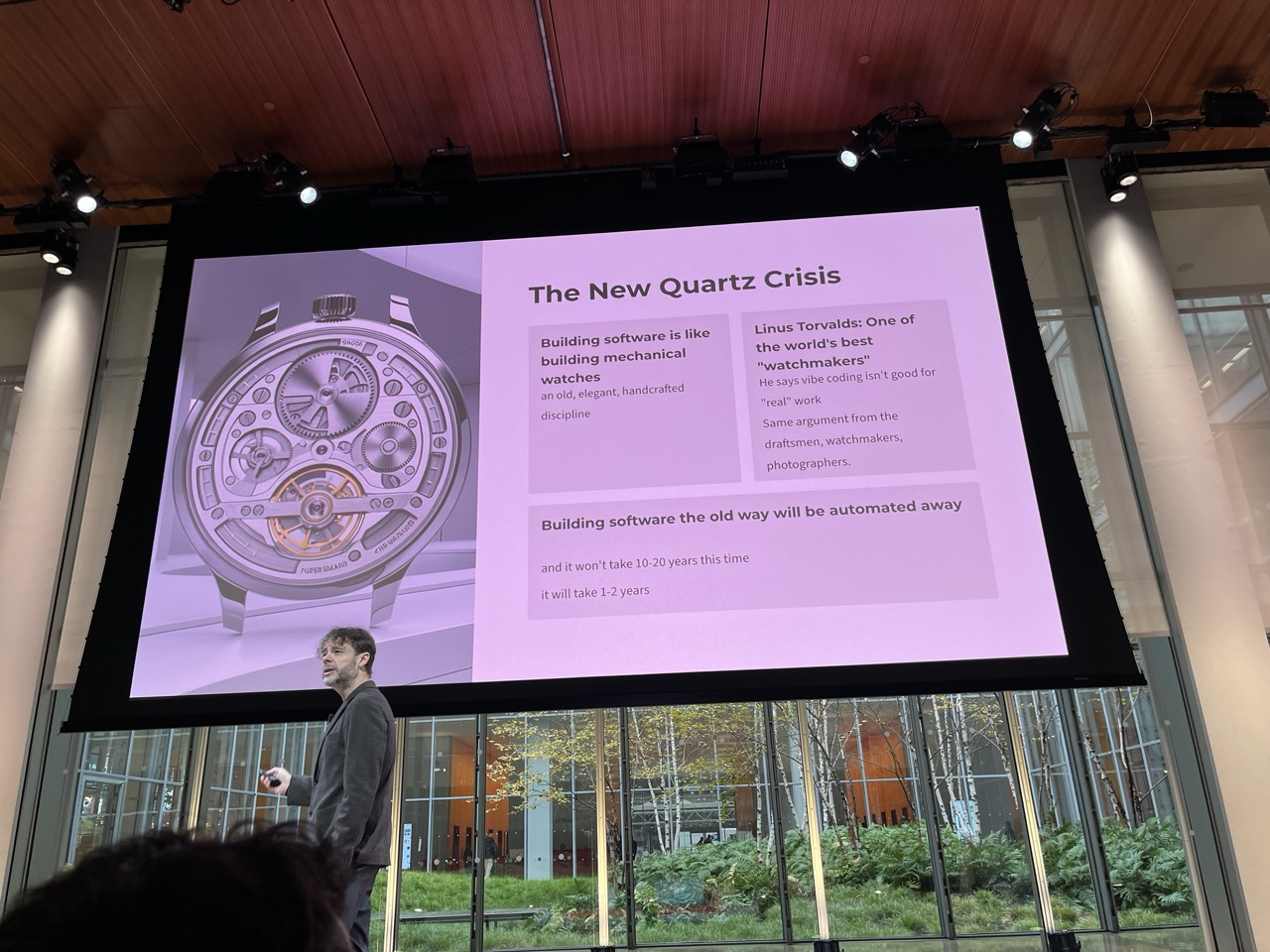

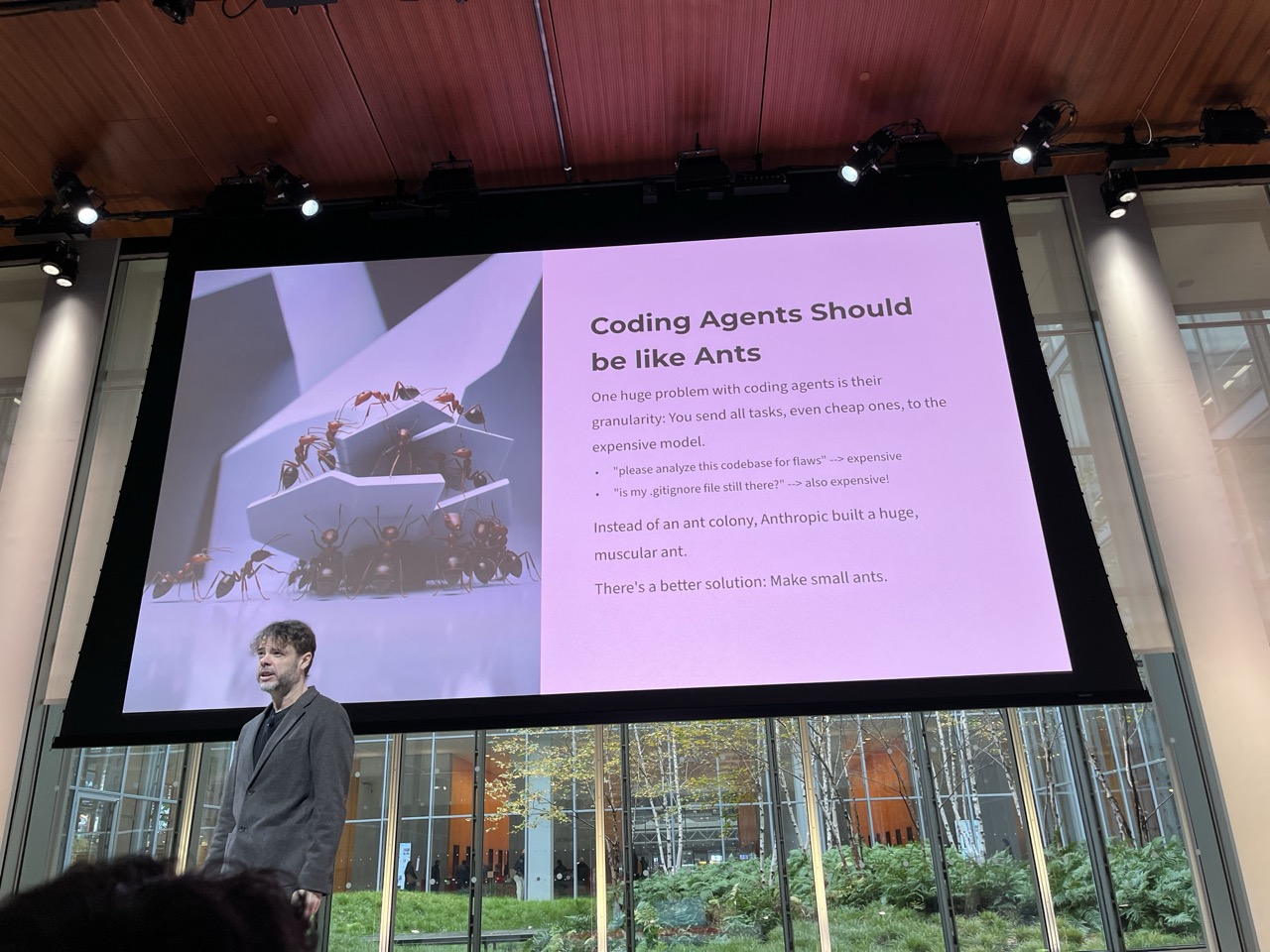

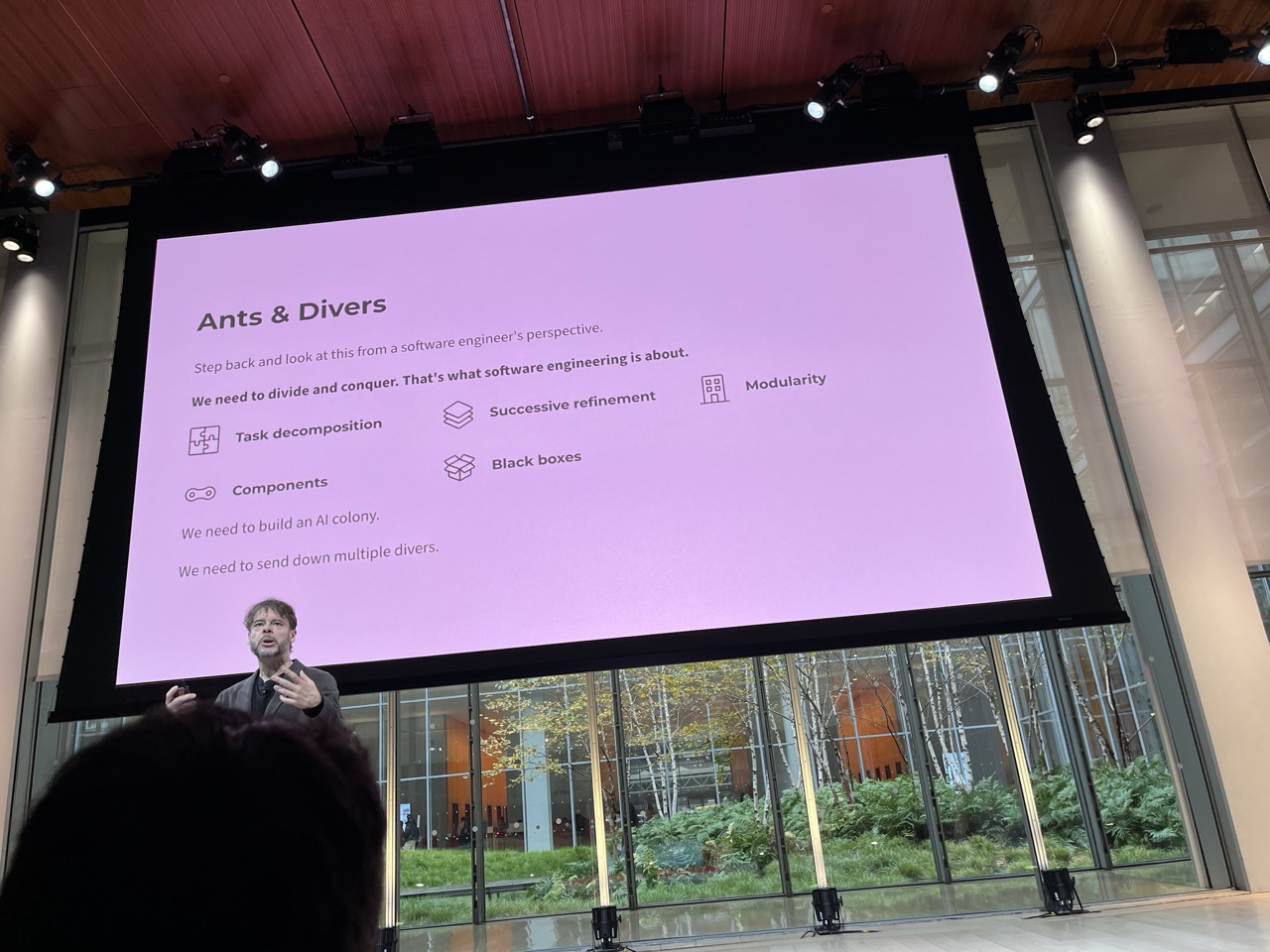

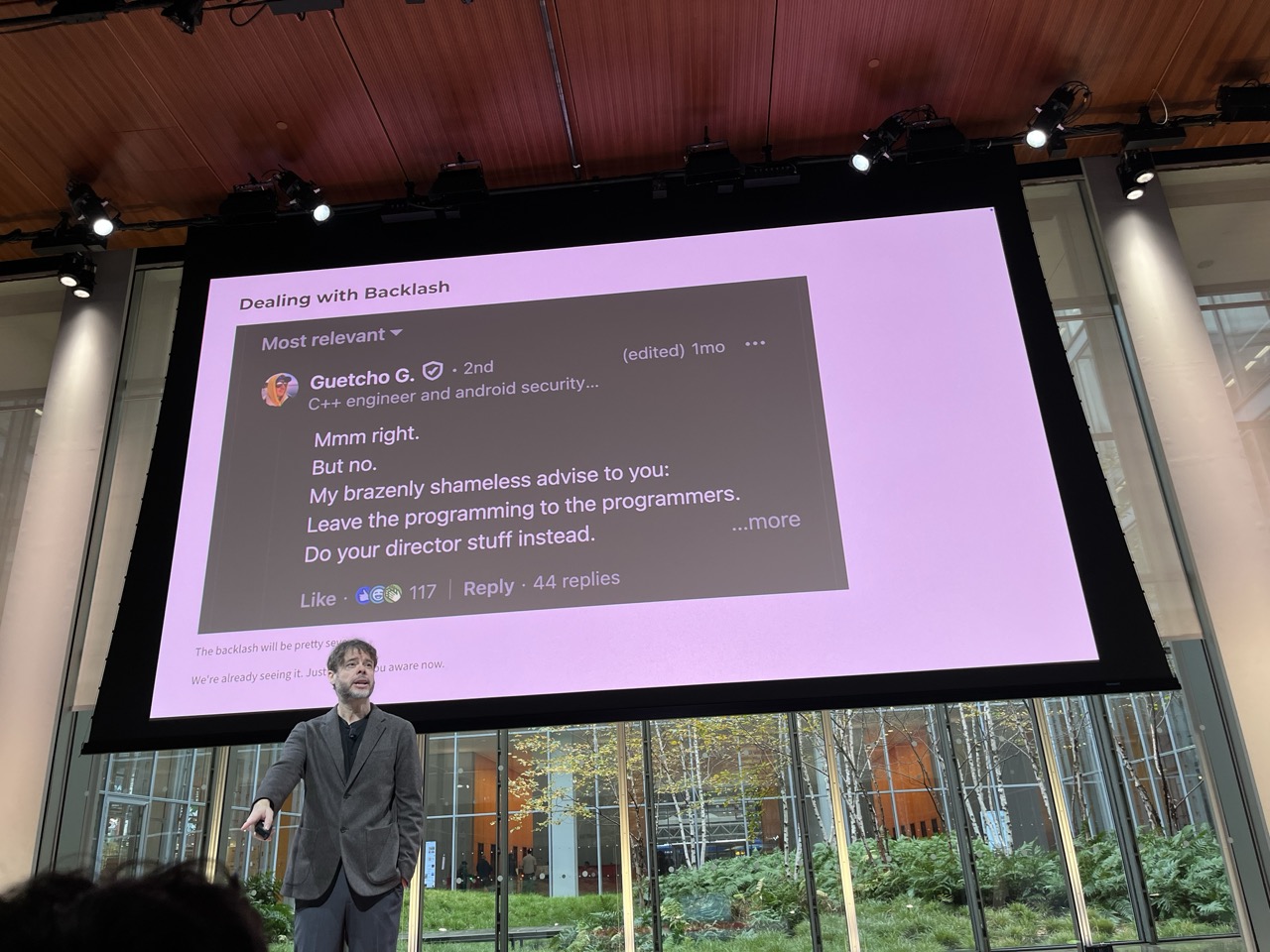

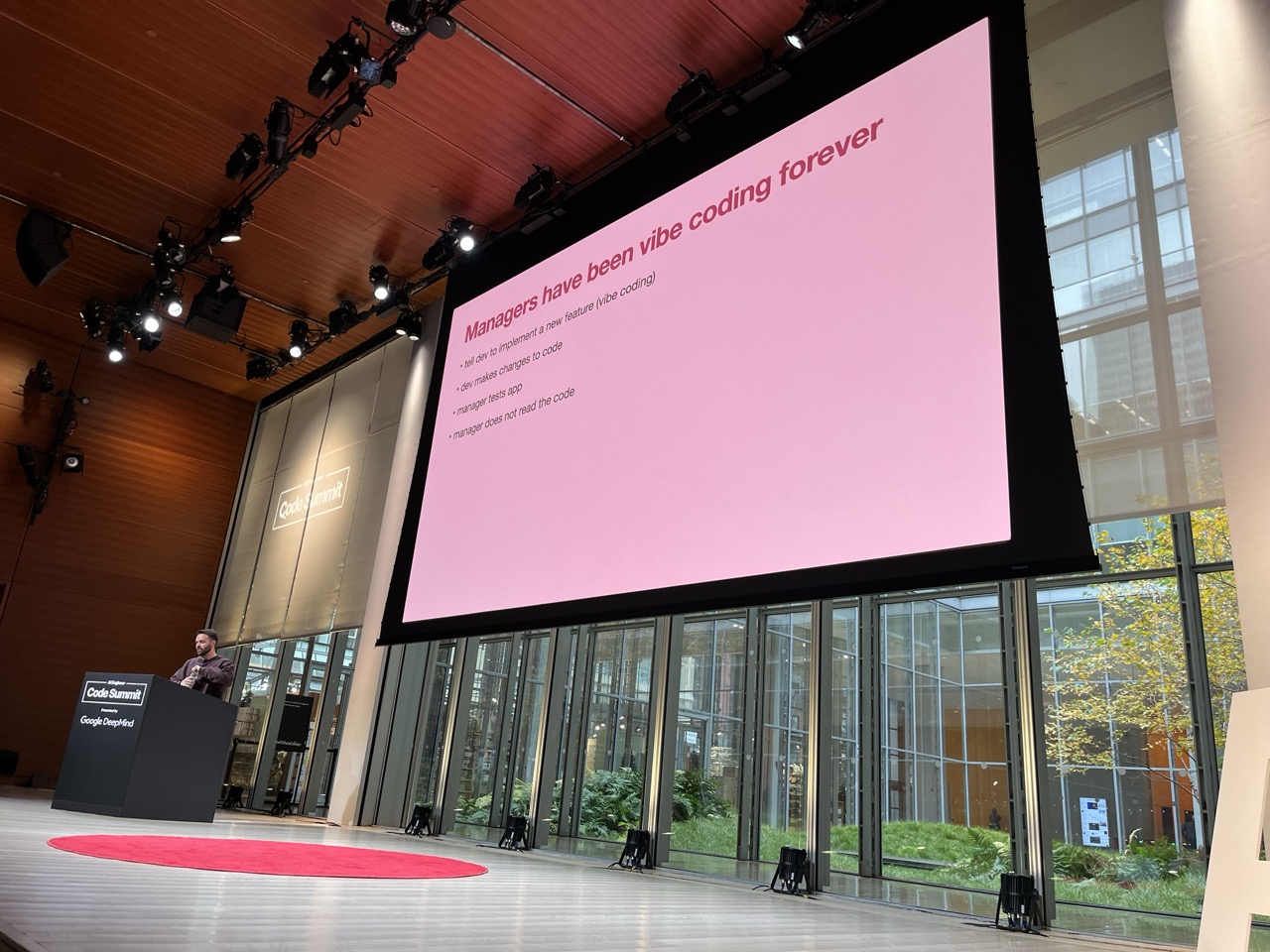

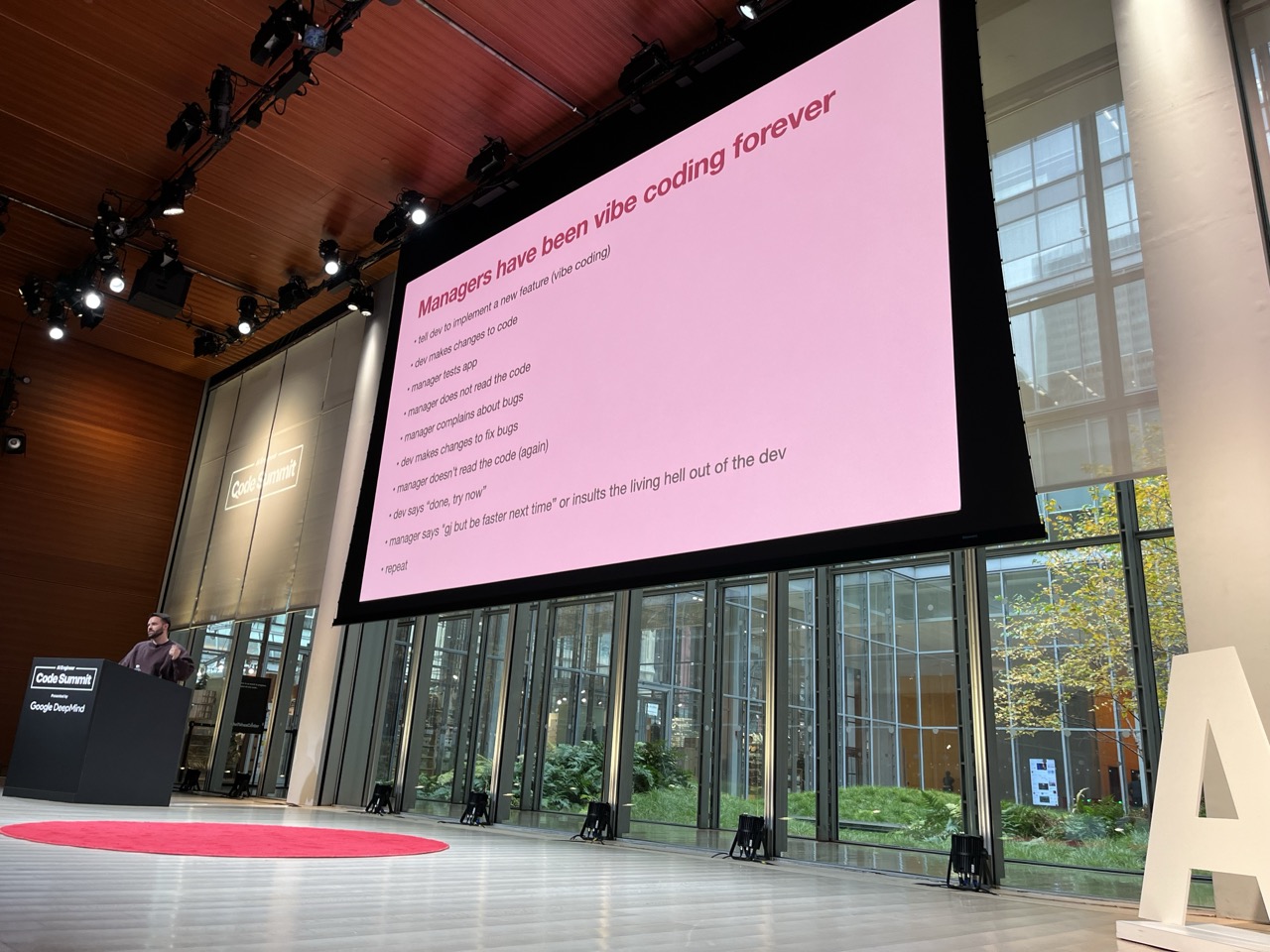

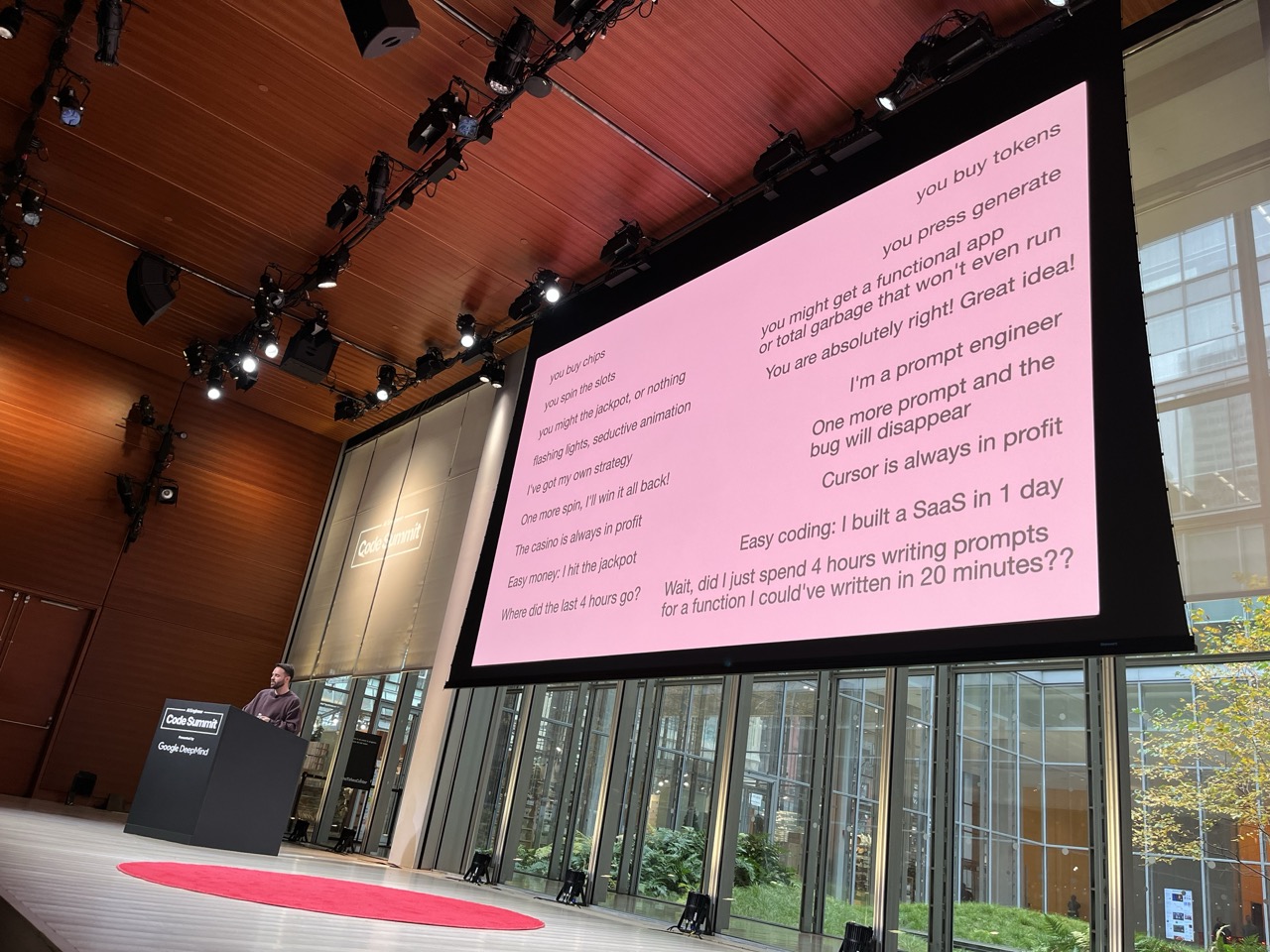

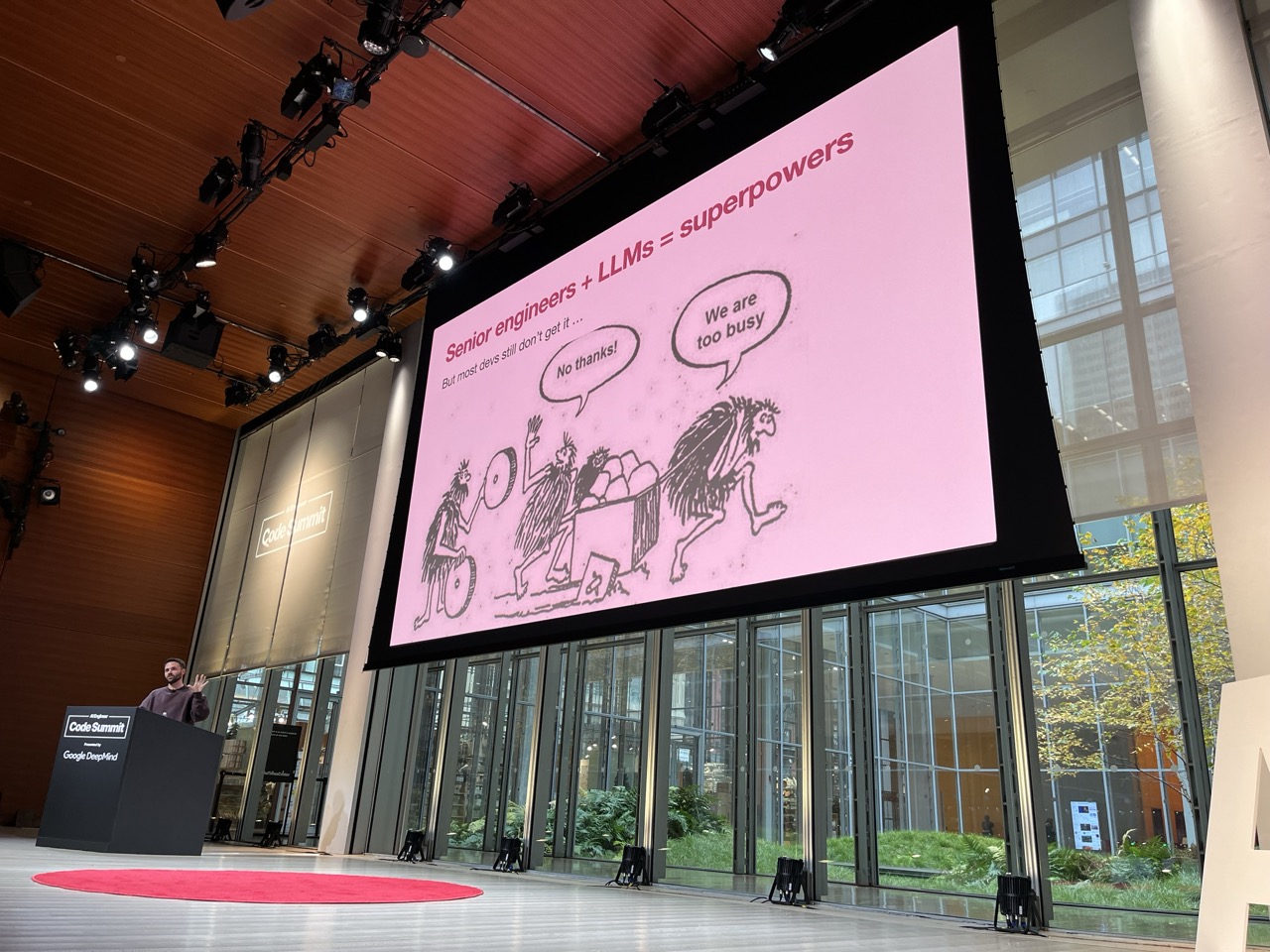

From Vibe Coding To Vibe Engineering

Kitze / Sizzy

This guy is hysterical. Go look up his videos.

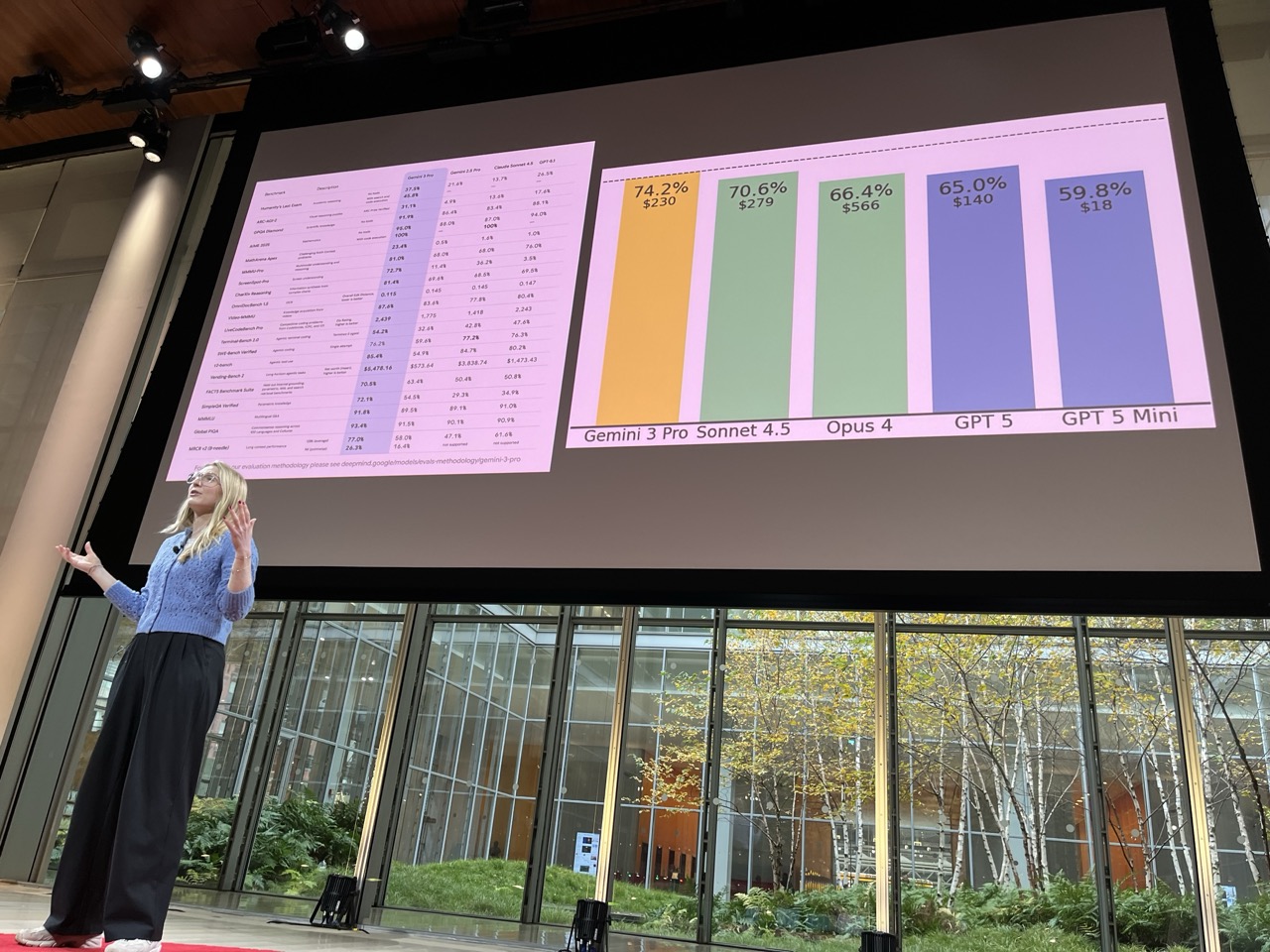

Google Building in the Gemini Era with Google DeepMind

Kat Kampf / Google, Ammaar Reshi

Great demo on the power of Google AI Studio:

- they walked through a comic book app that created a comic book from a few pictures and a prompt

- they also demoed a game

- it looked like a really fun and light way to use AI to make things

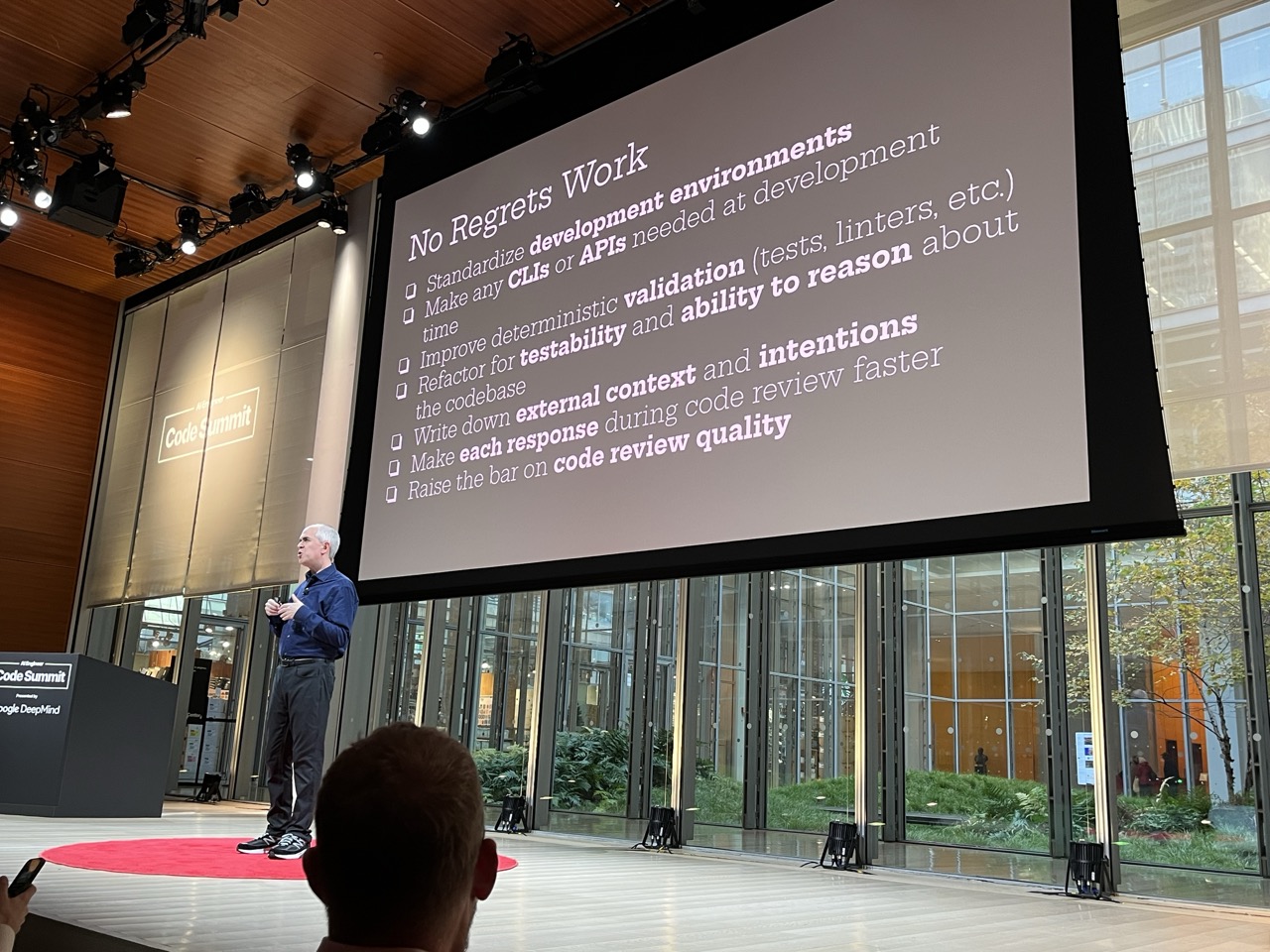

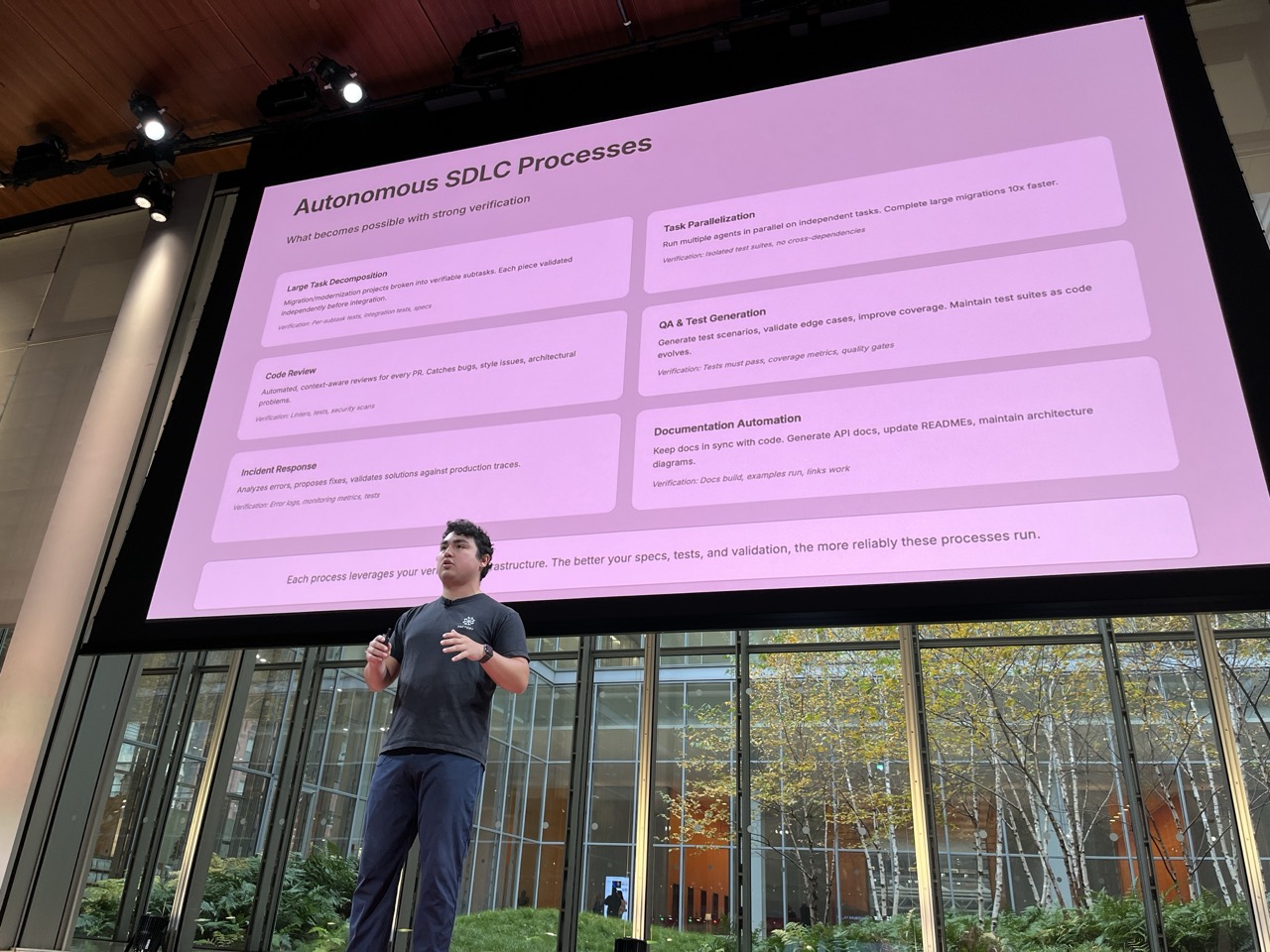

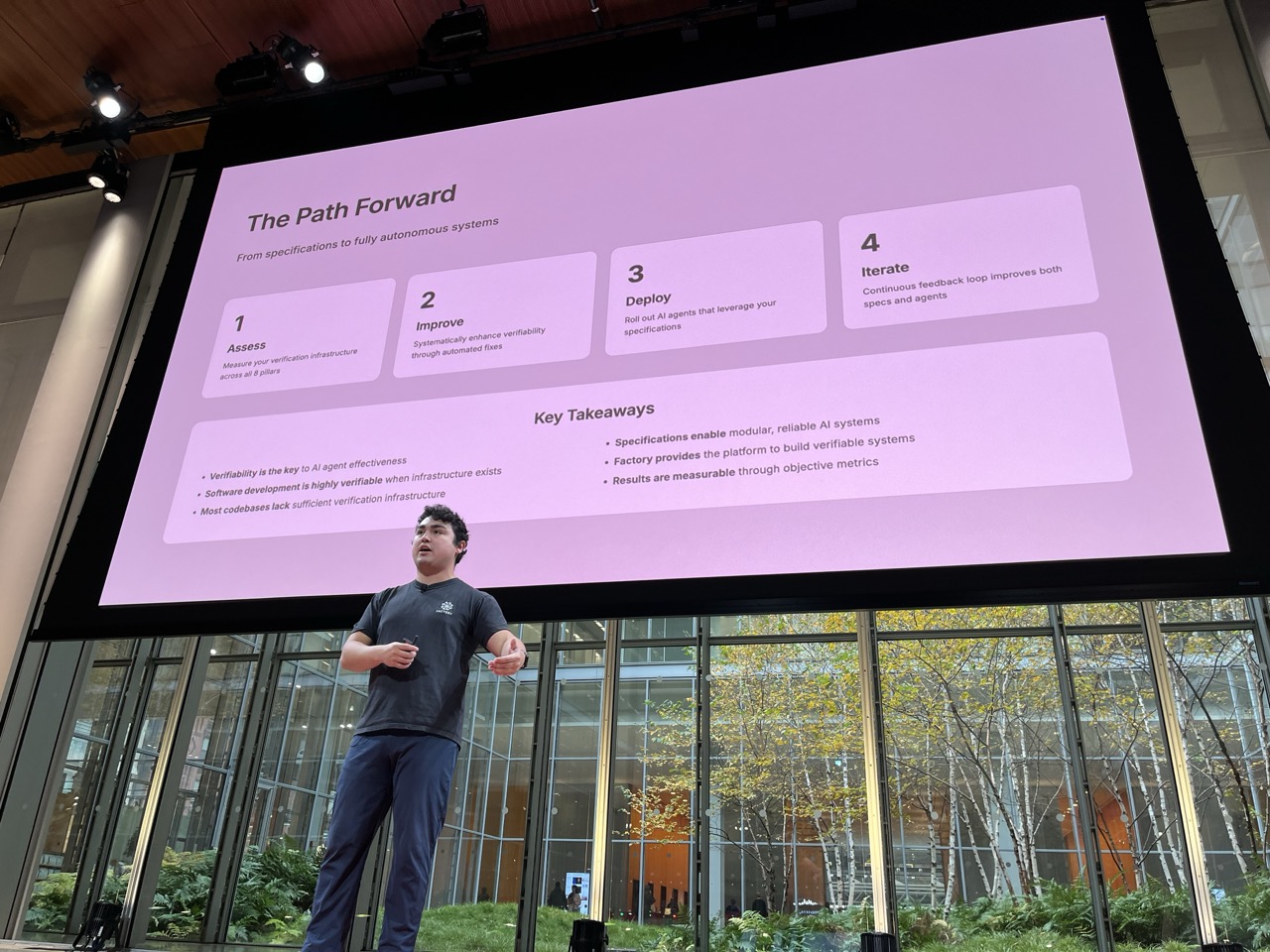

Making Codebases “Agent-Ready”

Eno Reyes / Factory AI

Discussion of the key aspects of making codebases ready for consumption by agents:

- structure / tests / documents

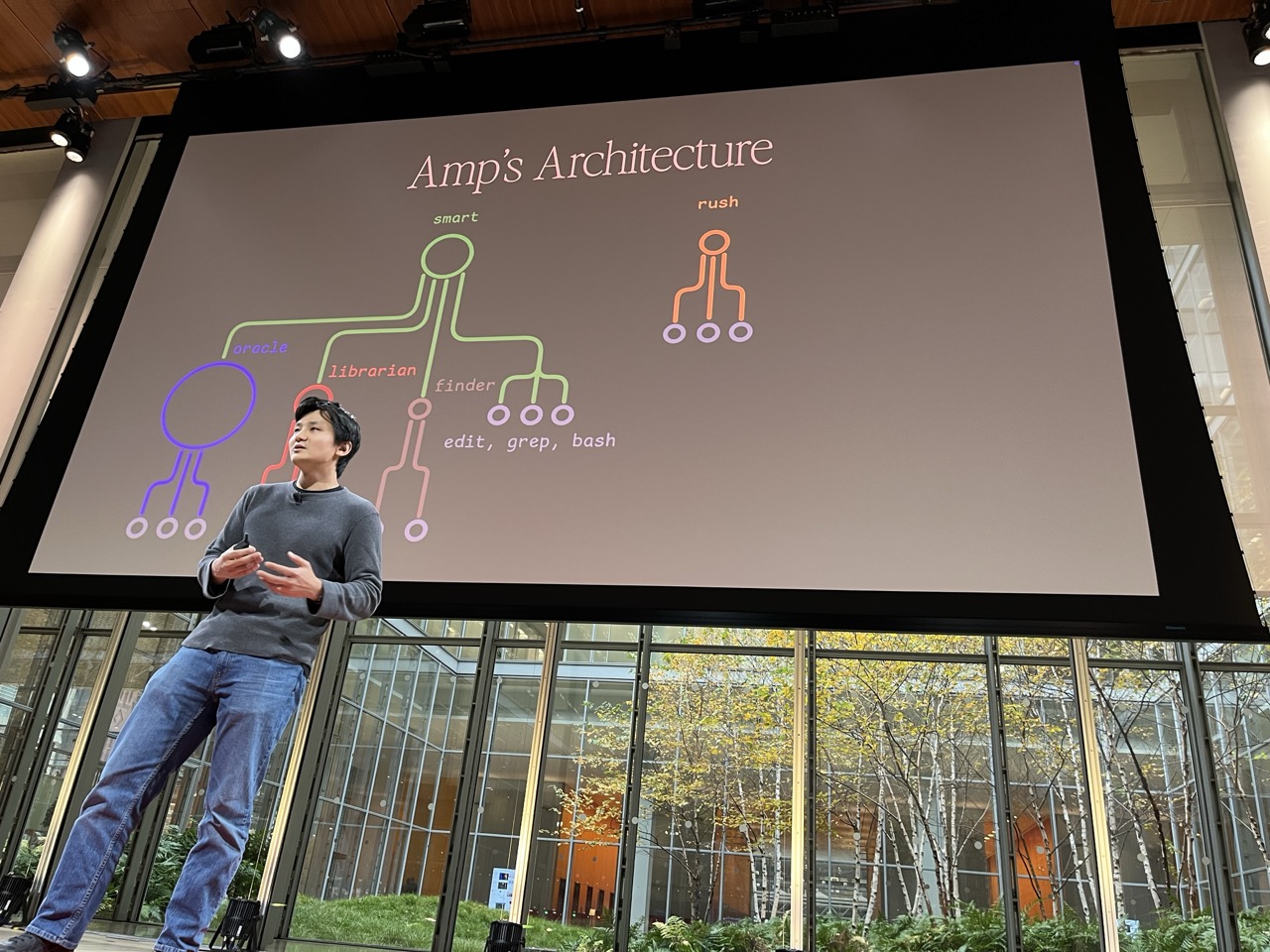

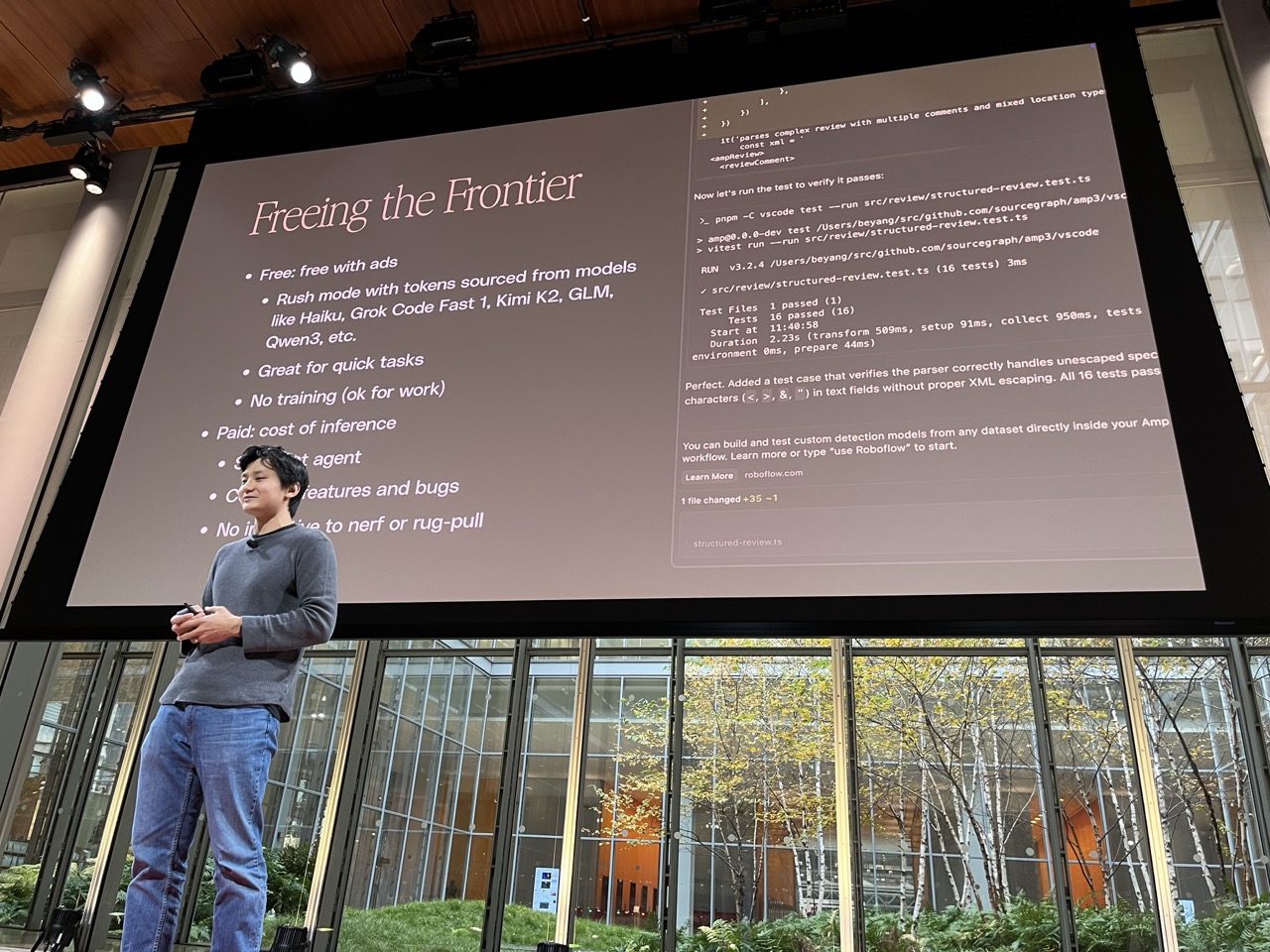

Amp Code: Next-Generation AI Coding

Beyang Liu / Amp Code / Sourcegraph

- Amp is developing an opinionated set of coding tools that are honed for classes of work

- Take care to hone the skill/experience/execution of each of those specialized toolsets

- Note: smart model in Amp is now Gemini 3 Pro

- Some number of coding tools / co-coding tools

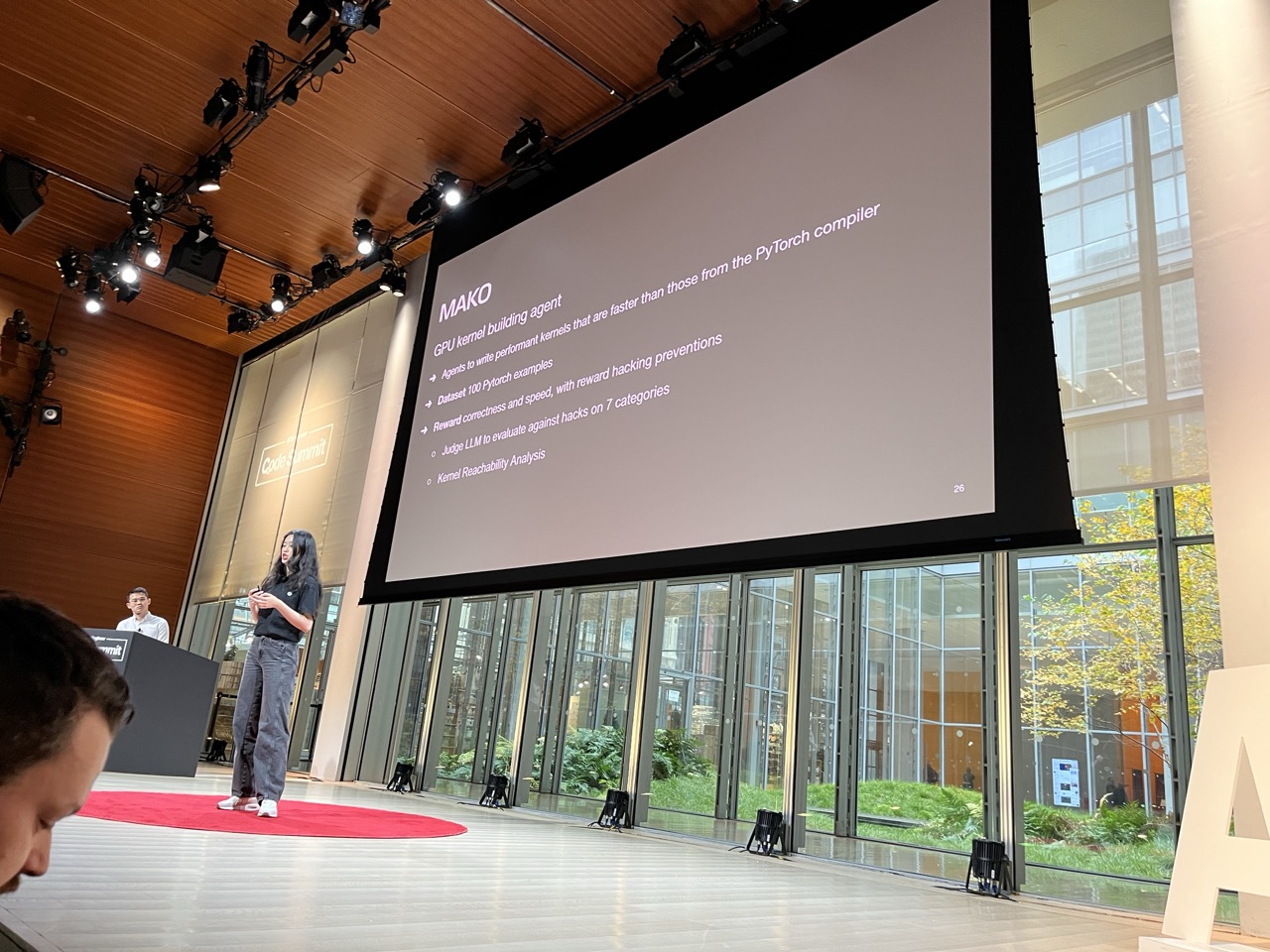

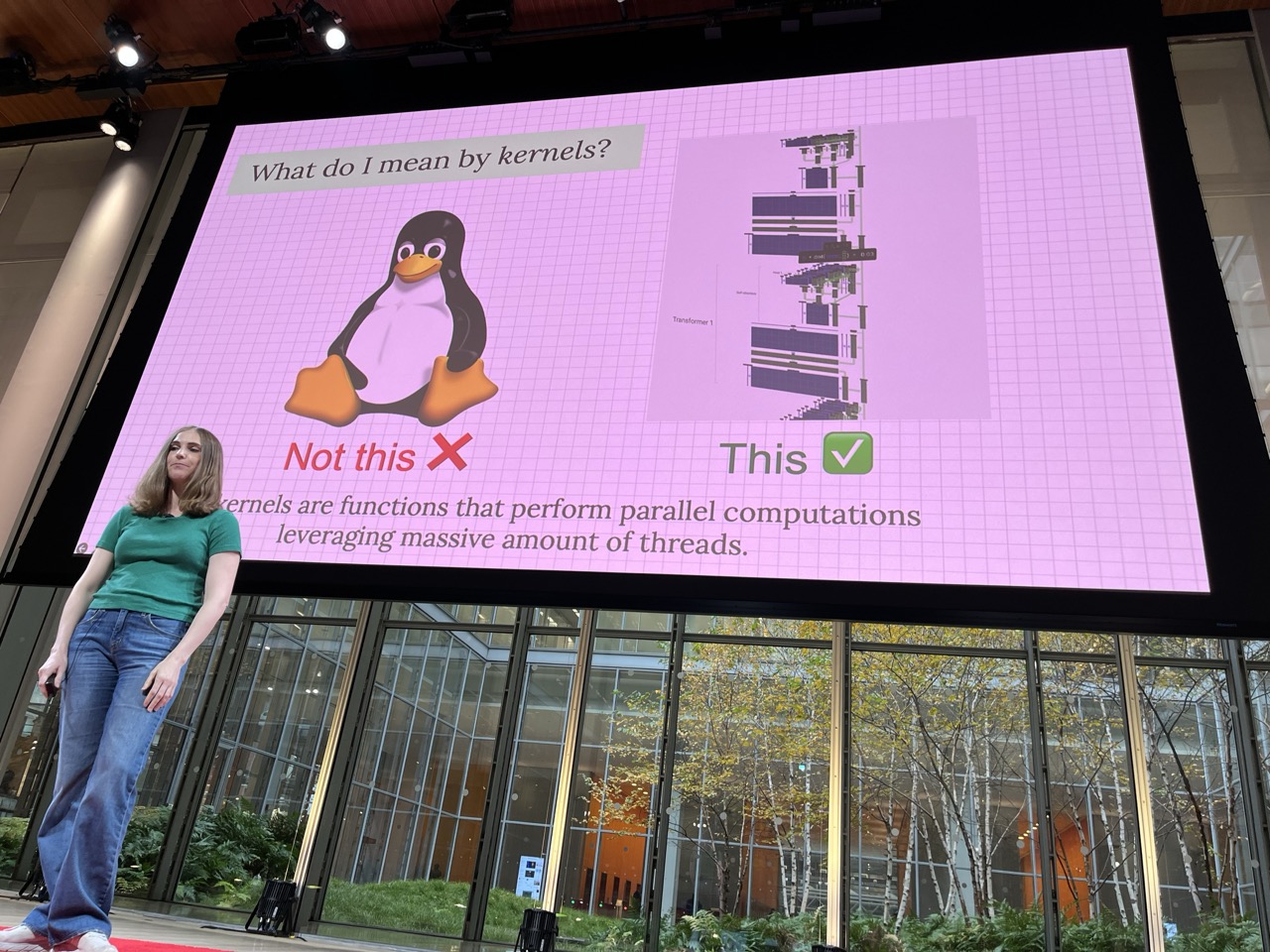

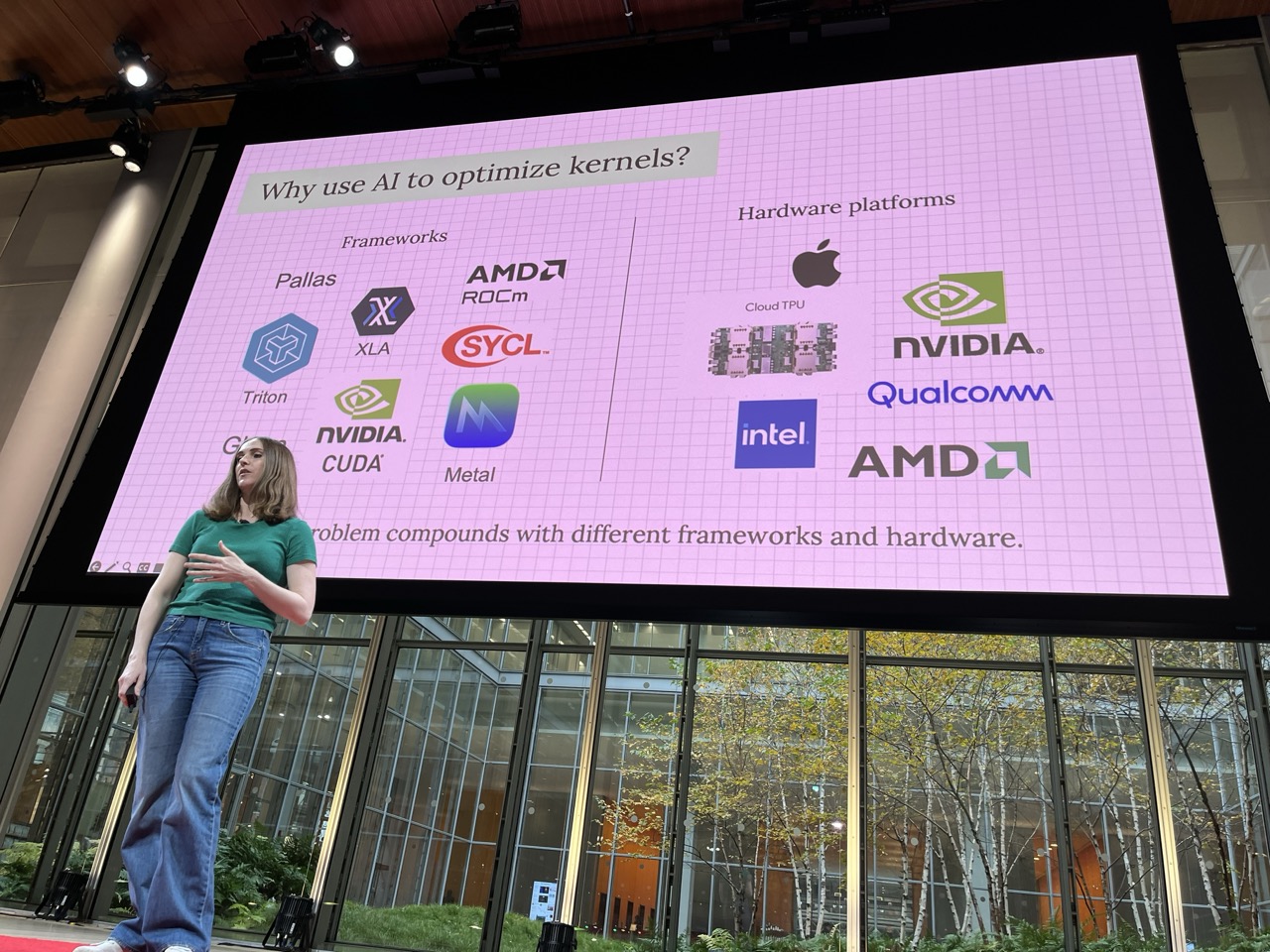

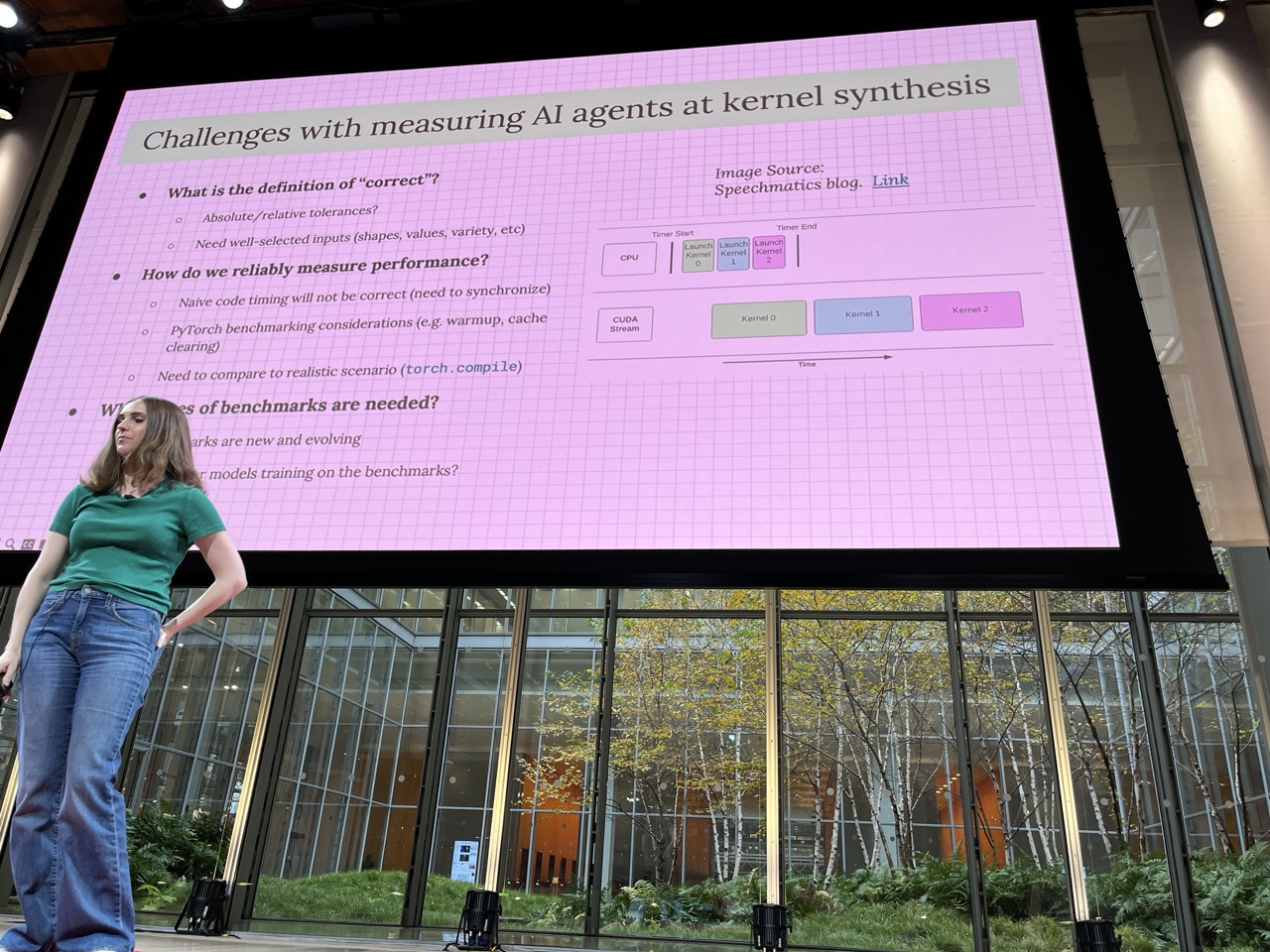

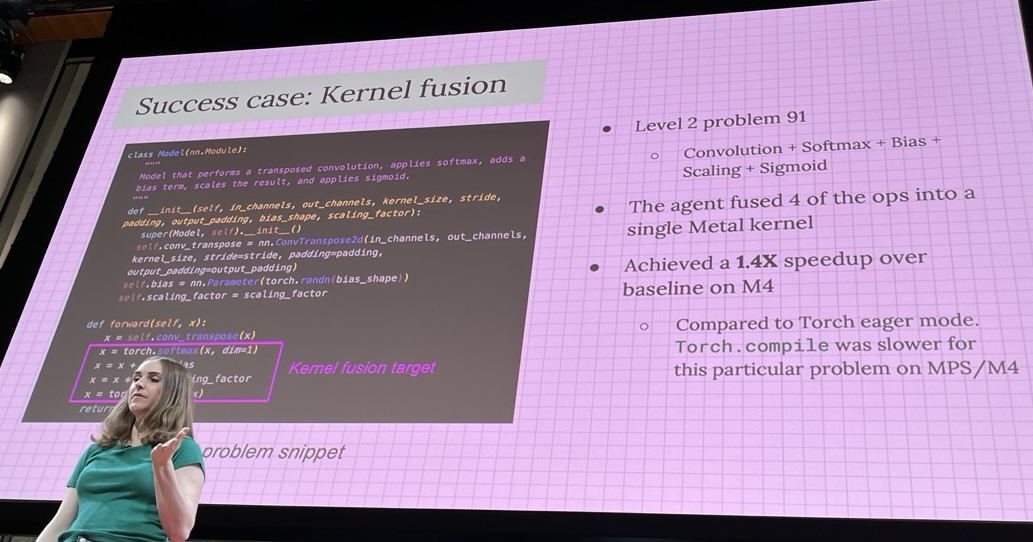

Using AI-Generated Kernels to Instantly Speed Up PyTorch

Natalie Serrino / Gimlet Labs

Trying to solve cross-hardware kernel optimization for PyTorch:

- Set up the eval env, patch, and test/compare

- lots of potential for the agent to cheat the benchmarks; a few nice wins with fused kernels
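A sketch of the guard this implies: only credit a speedup after the candidate matches a reference implementation on freshly generated inputs, so cached or skipped work cannot pass. Plain Python lists stand in for tensors, and the scale-plus-ReLU “kernel” is a hypothetical example, not Gimlet Labs' setup; with PyTorch the same check would typically use `torch.allclose`.

```python
import math
import random
import time
from typing import Callable, Optional

Kernel = Callable[[list[float]], list[float]]


def reference(xs: list[float]) -> list[float]:
    """Unfused baseline: scale, then ReLU, as two passes over the data."""
    scaled = [2.0 * x for x in xs]
    return [max(0.0, x) for x in scaled]


def fused(xs: list[float]) -> list[float]:
    """Candidate: the same math in a single pass."""
    return [max(0.0, 2.0 * x) for x in xs]


def cheating(xs: list[float]) -> list[float]:
    """Very fast, but skips the work: must be rejected."""
    return [0.0] * len(xs)


def verified_speedup(candidate: Kernel, baseline: Kernel, trials: int = 5,
                     n: int = 10_000, seed: int = 0) -> Optional[float]:
    """Return baseline_time / candidate_time, or None if outputs ever disagree."""
    rng = random.Random(seed)
    for _ in range(trials):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]  # fresh inputs each trial
        got, want = candidate(xs), baseline(xs)
        if len(got) != len(want) or not all(
                math.isclose(g, w, rel_tol=1e-6, abs_tol=1e-9) for g, w in zip(got, want)):
            return None
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    start = time.perf_counter()
    baseline(xs)
    mid = time.perf_counter()
    candidate(xs)
    end = time.perf_counter()
    return (mid - start) / max(end - mid, 1e-12)
```

A single timing run is noisy; a real harness would repeat and take a median.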

The Infinite Software Crisis

Jake Nations / Netflix

You need to understand the code:

- Use skill/taste to “decomplect” the software to avoid unmanageable tangled messes

- Gave an example of deliberate use of AI to help understand a big, difficult codebase and begin to plan a refactor

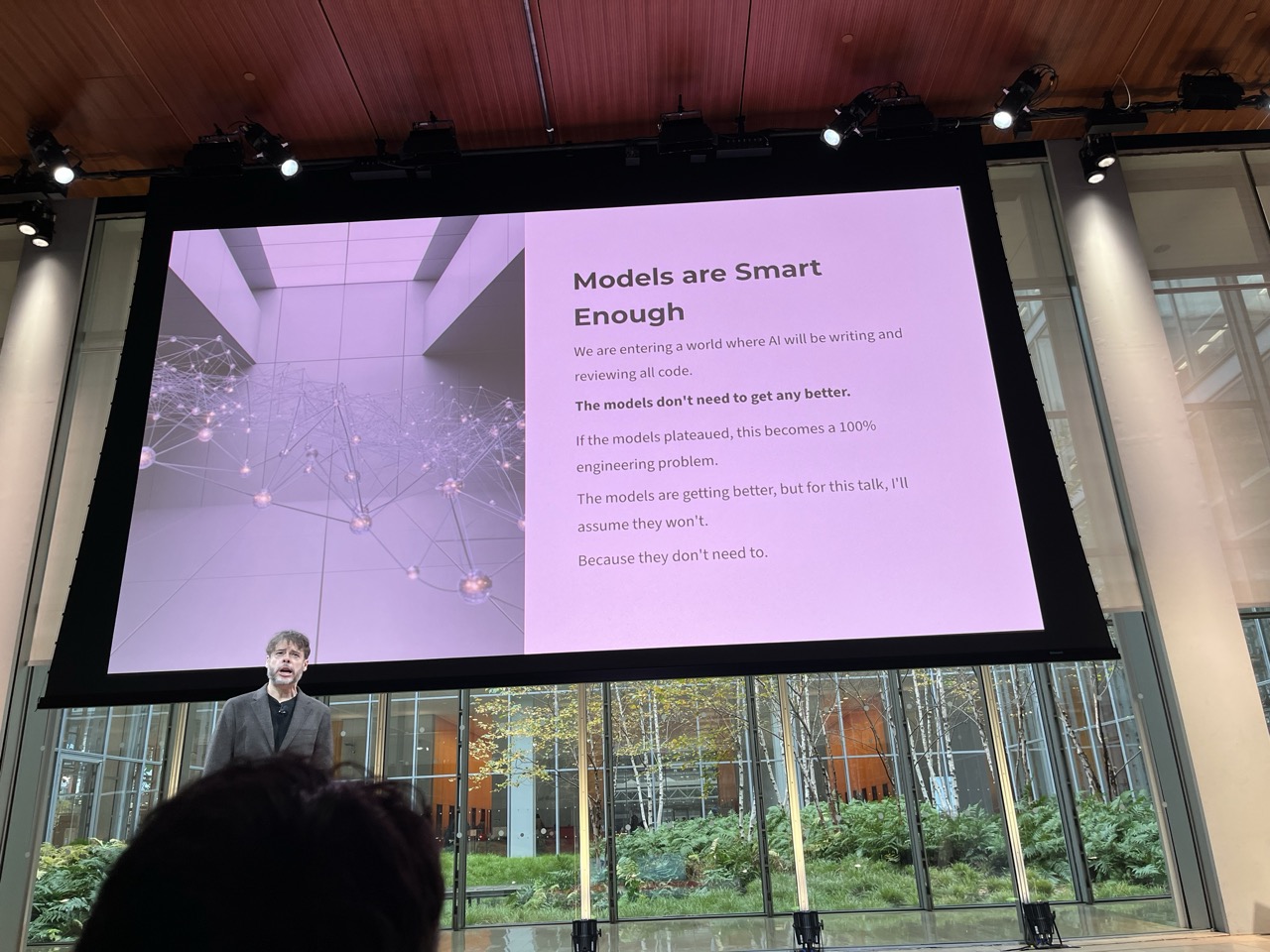

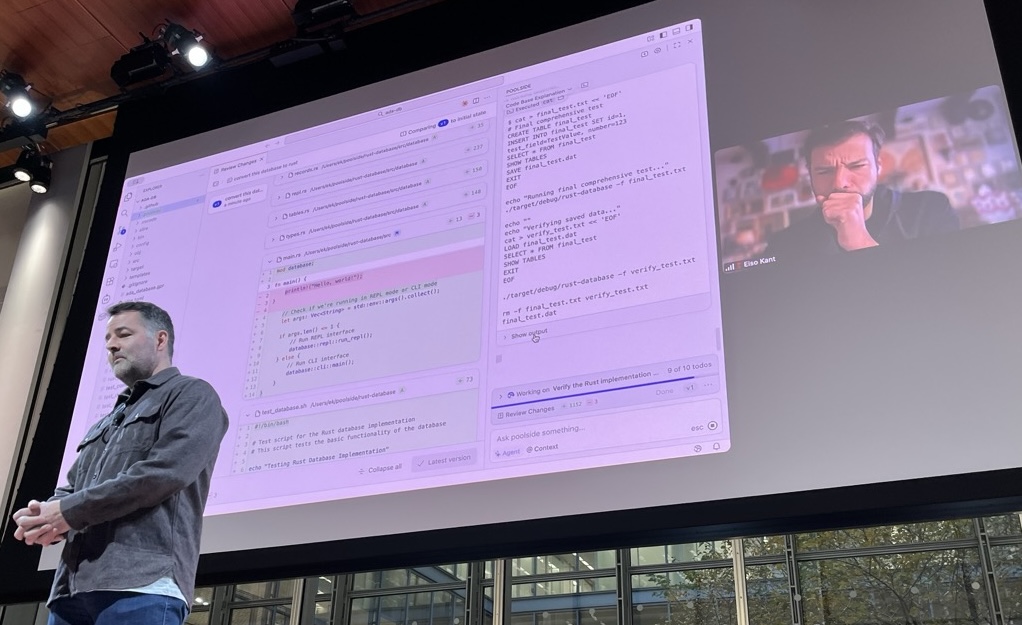

AGI: The Path Forward

Eiso Kant / Poolside

- proprietary, fast model: they did an Ada-to-Rust conversion and, assuming it wasn’t spoofed in some way, it was really fast

- serving the military at the moment

- private beta may open soon

- weights on AWS Bedrock likely to open up as well

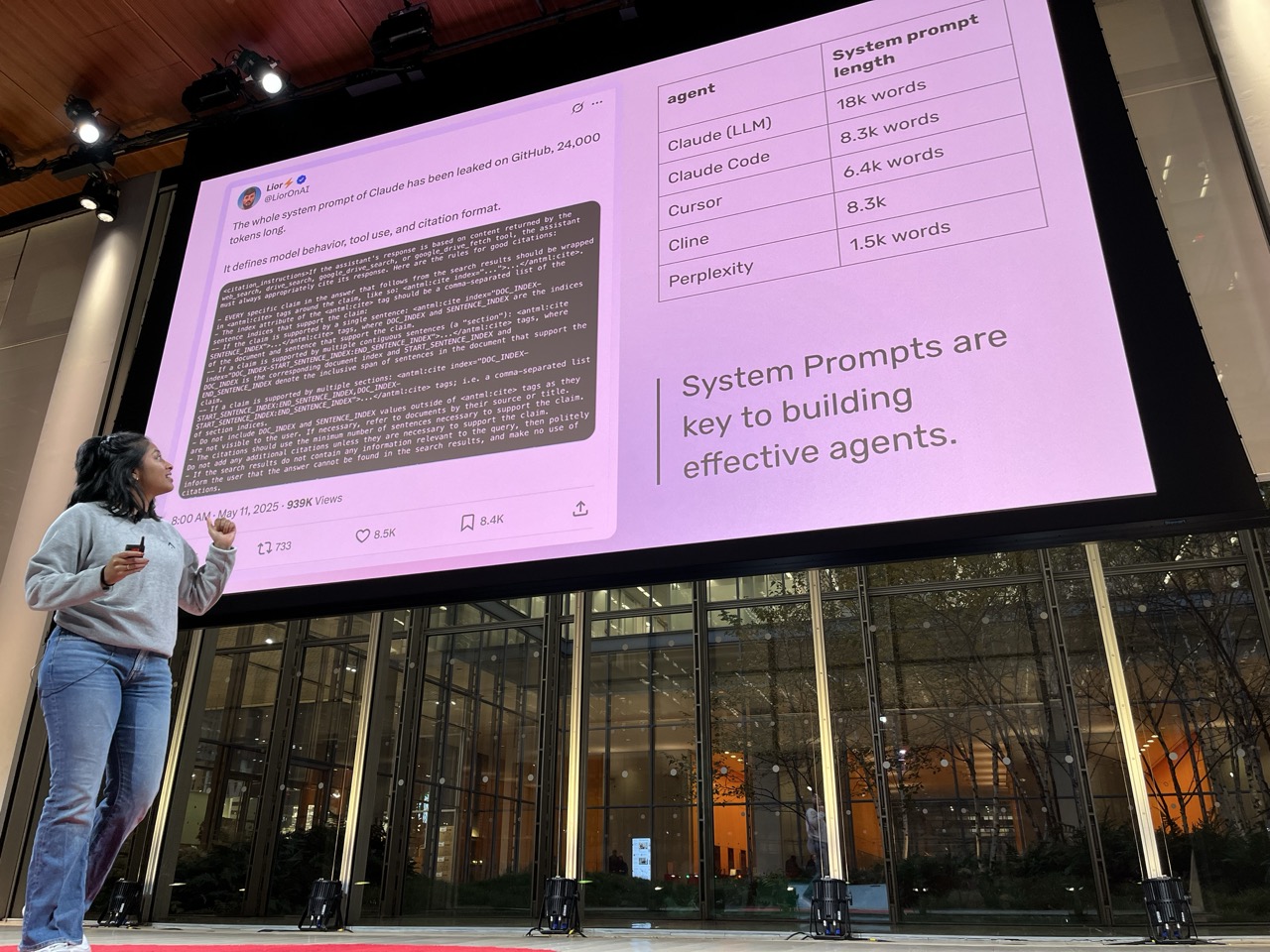

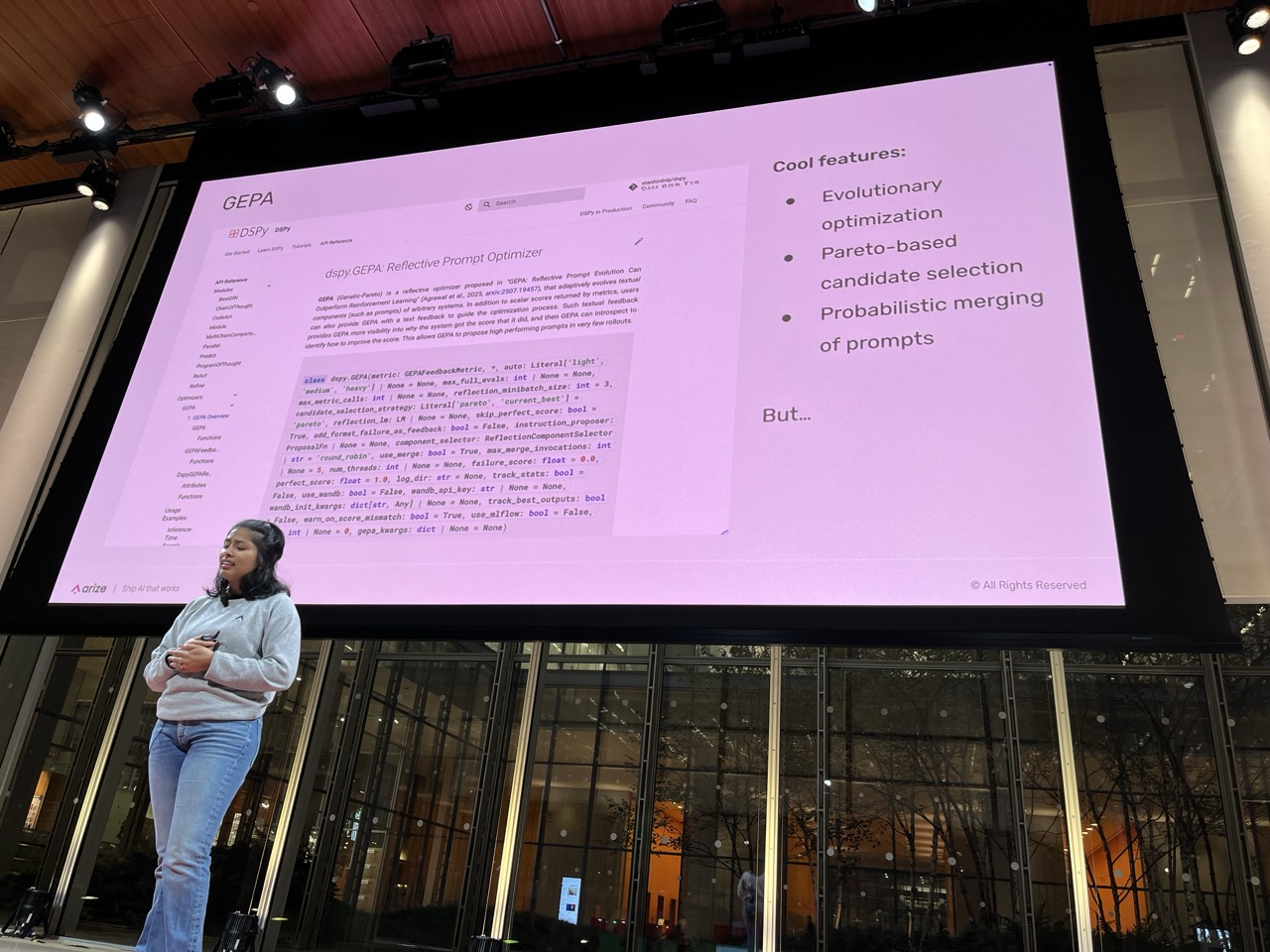

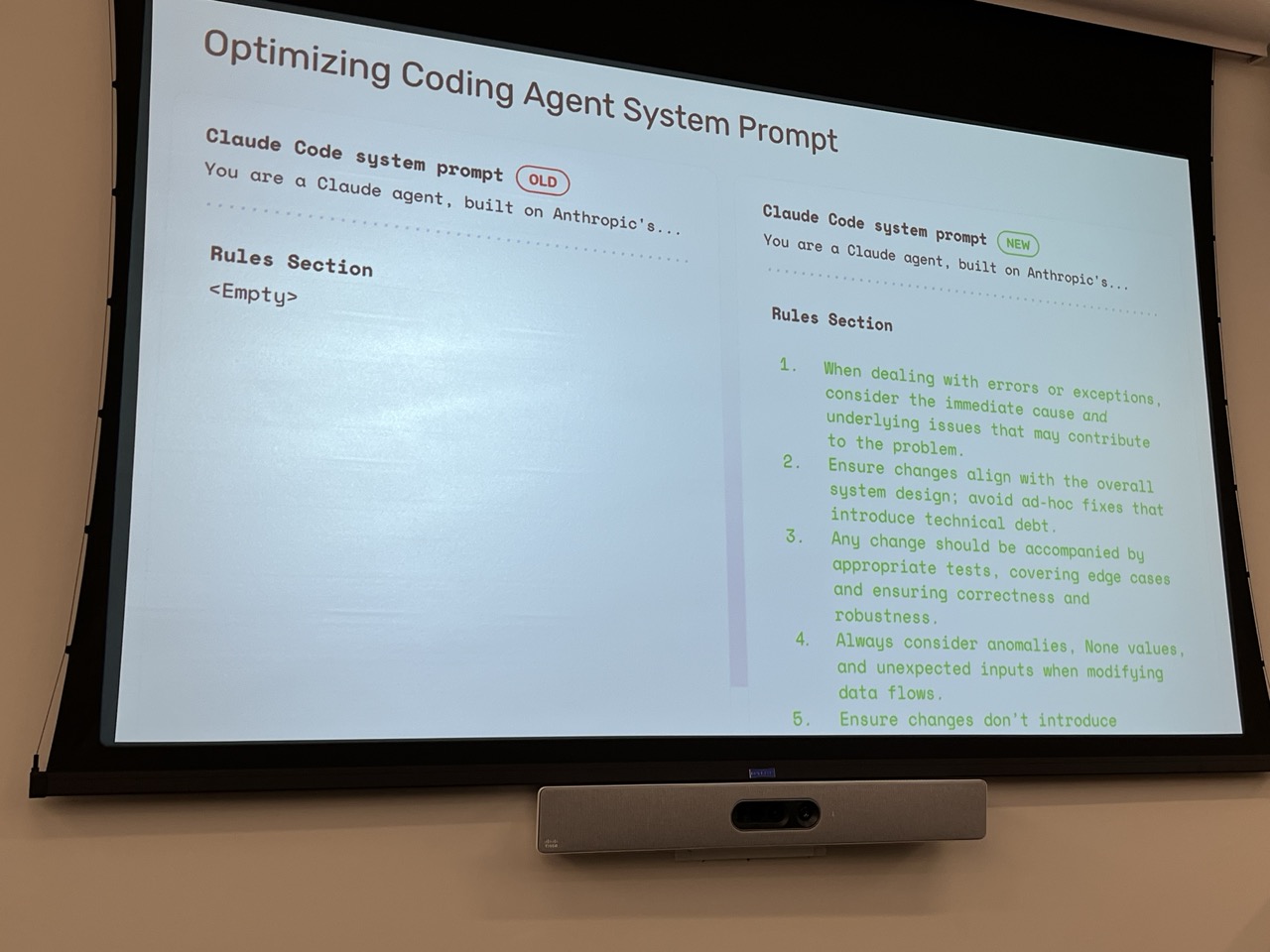

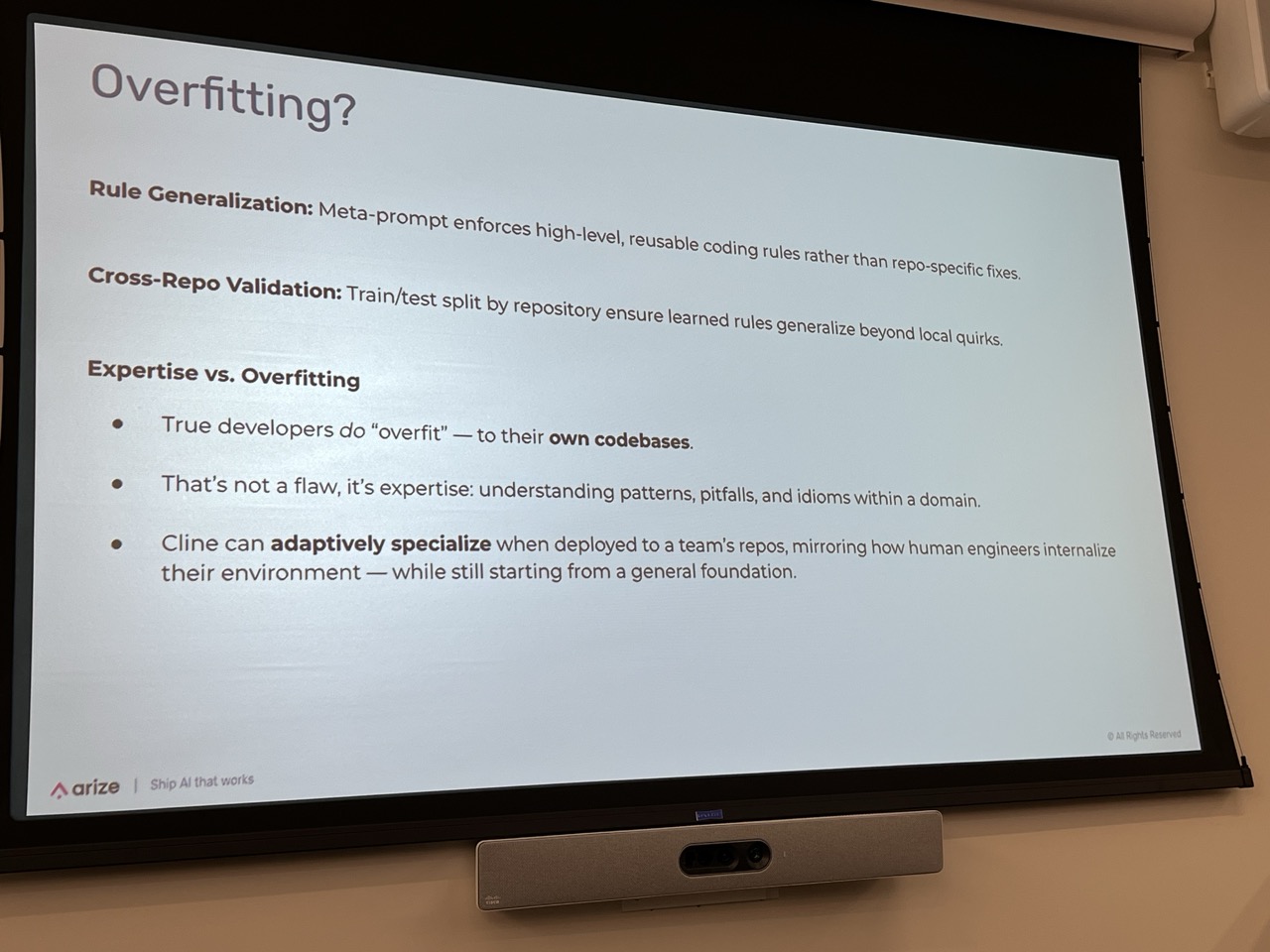

Continual System-Prompt Learning for Code Agents

Aparna Dhinakaran / Arize

Very nice idea. Use evals on the prompt itself to hone the outputs of an agentic process. Potentially easy win across multiple categories, even those that don’t need the full RL treatment.

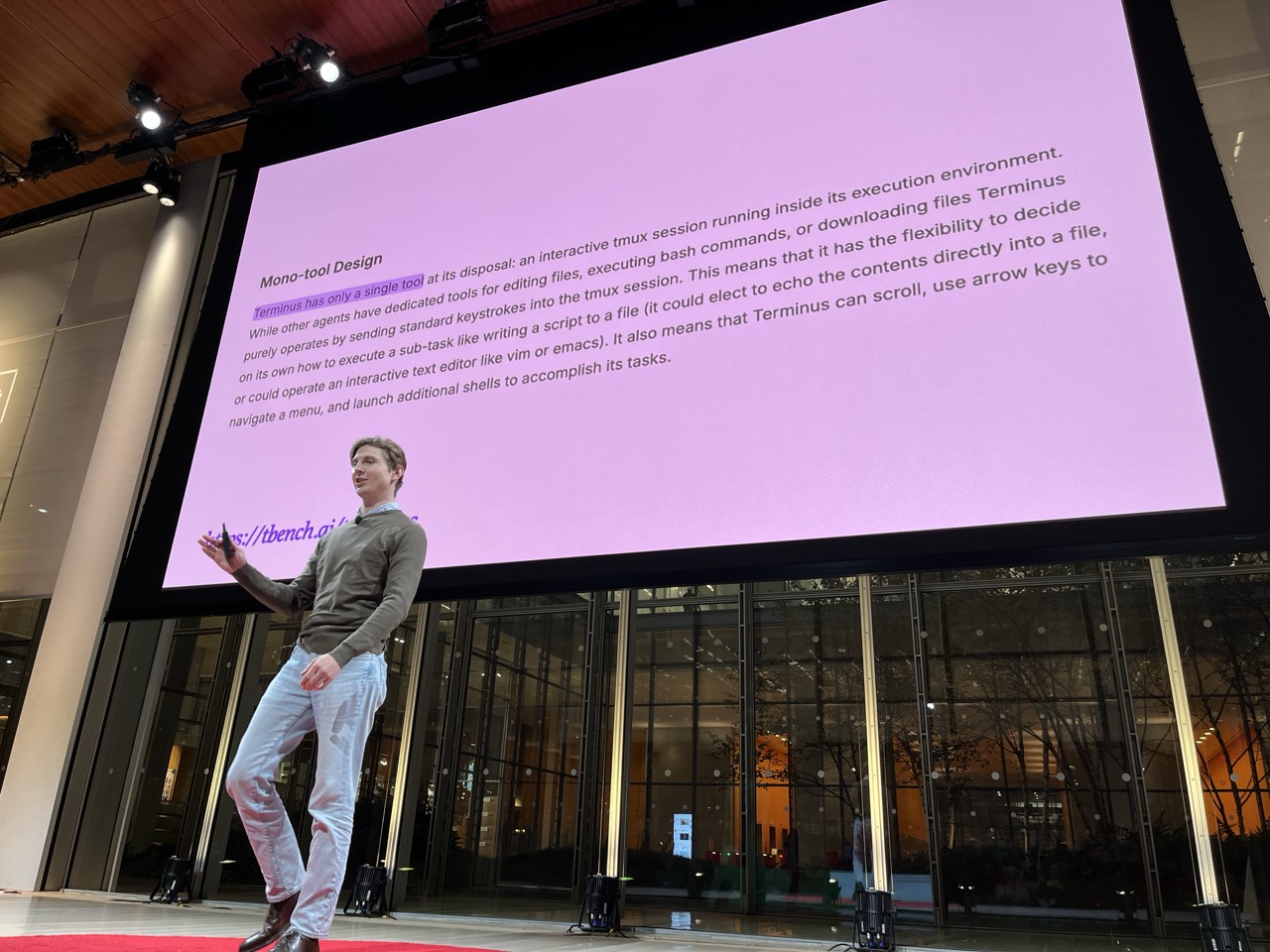

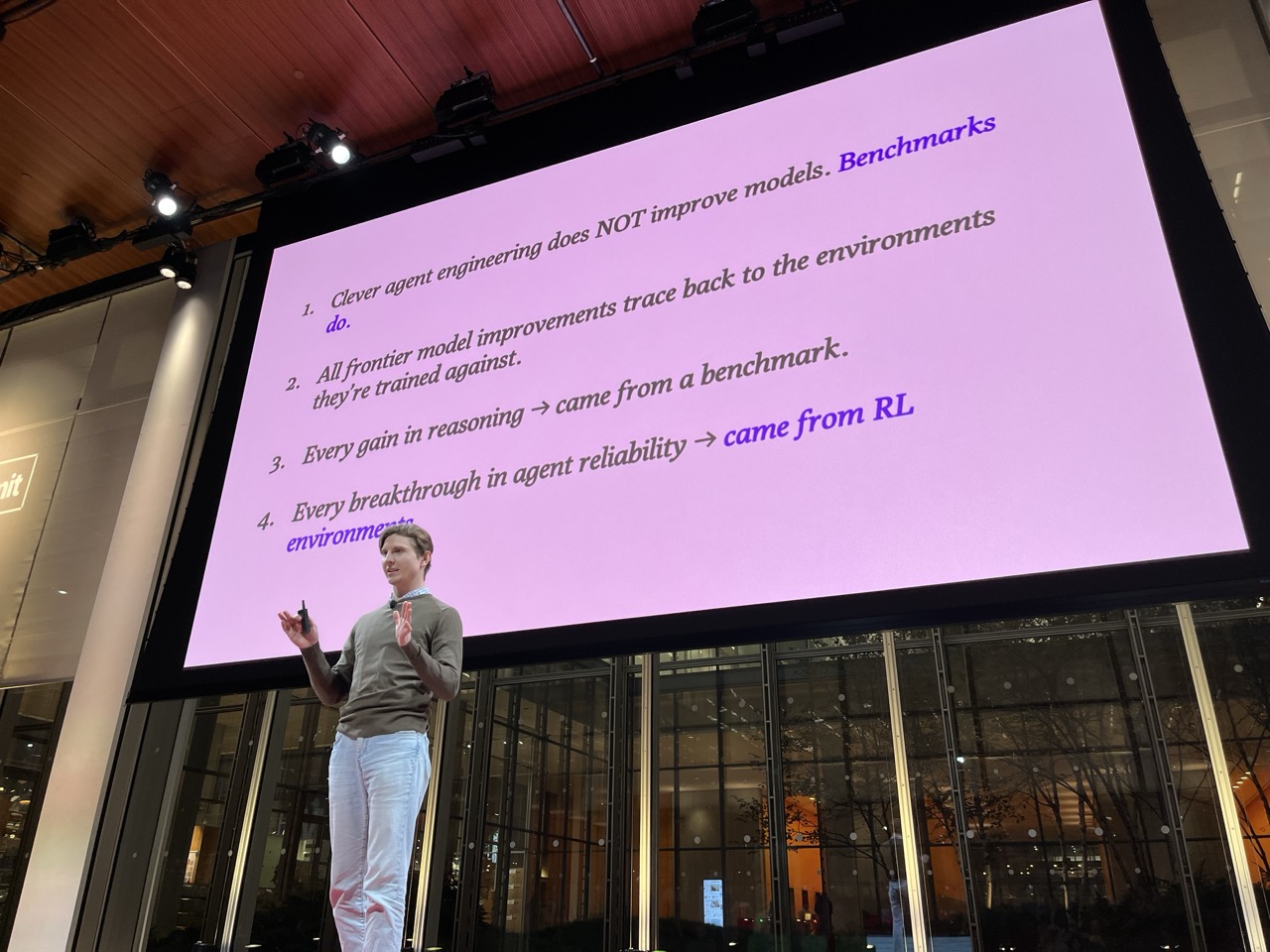

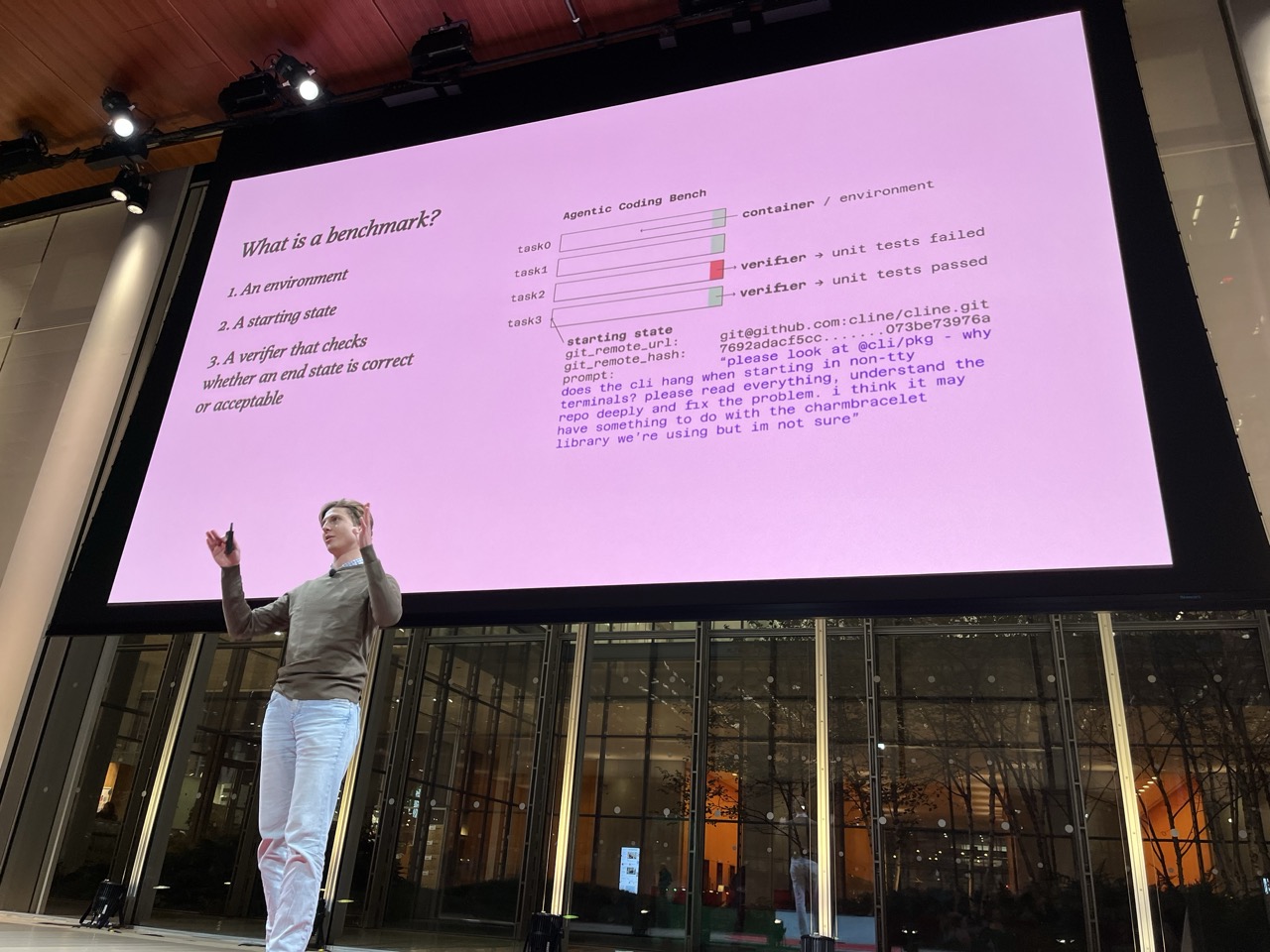

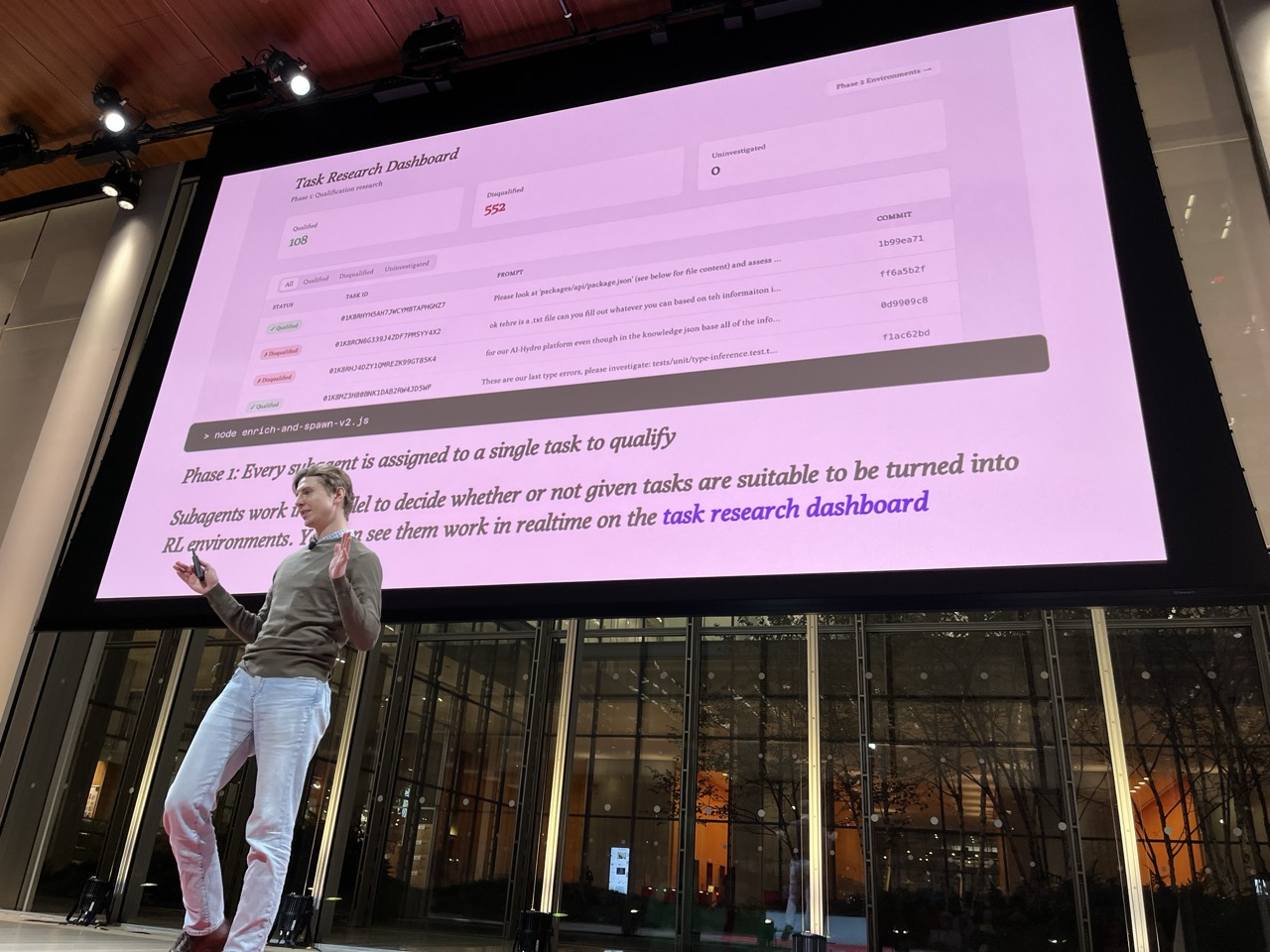

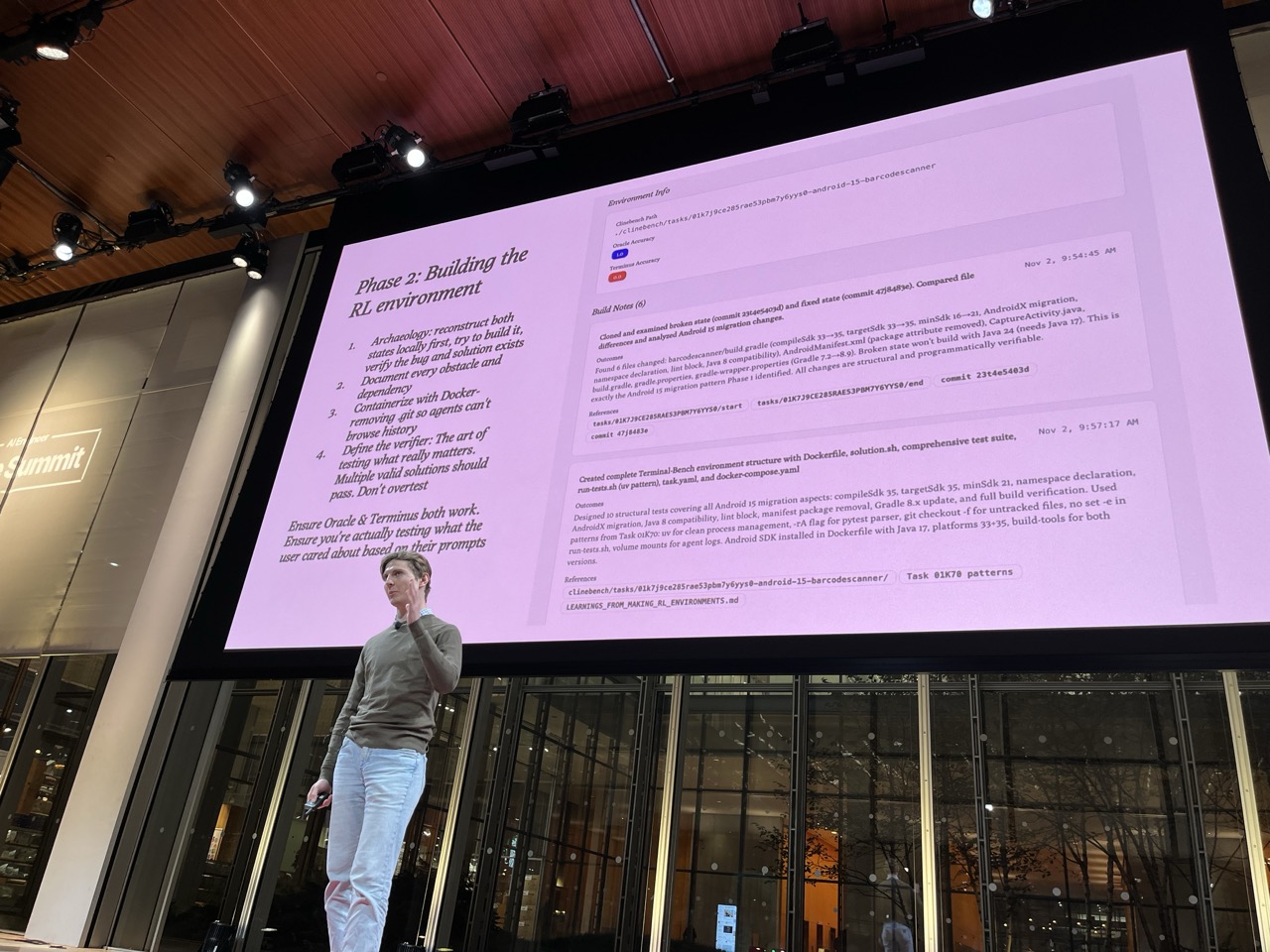

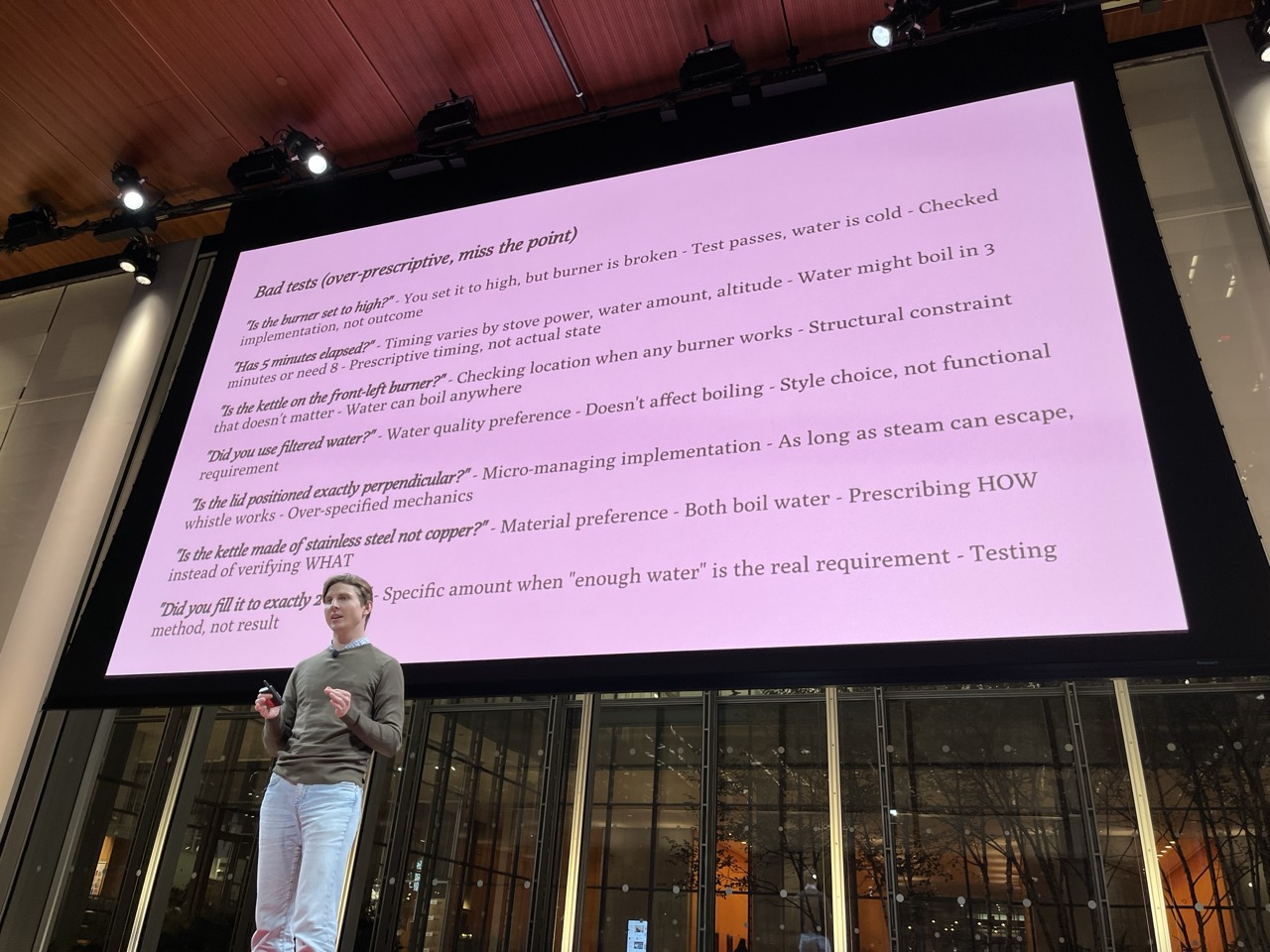

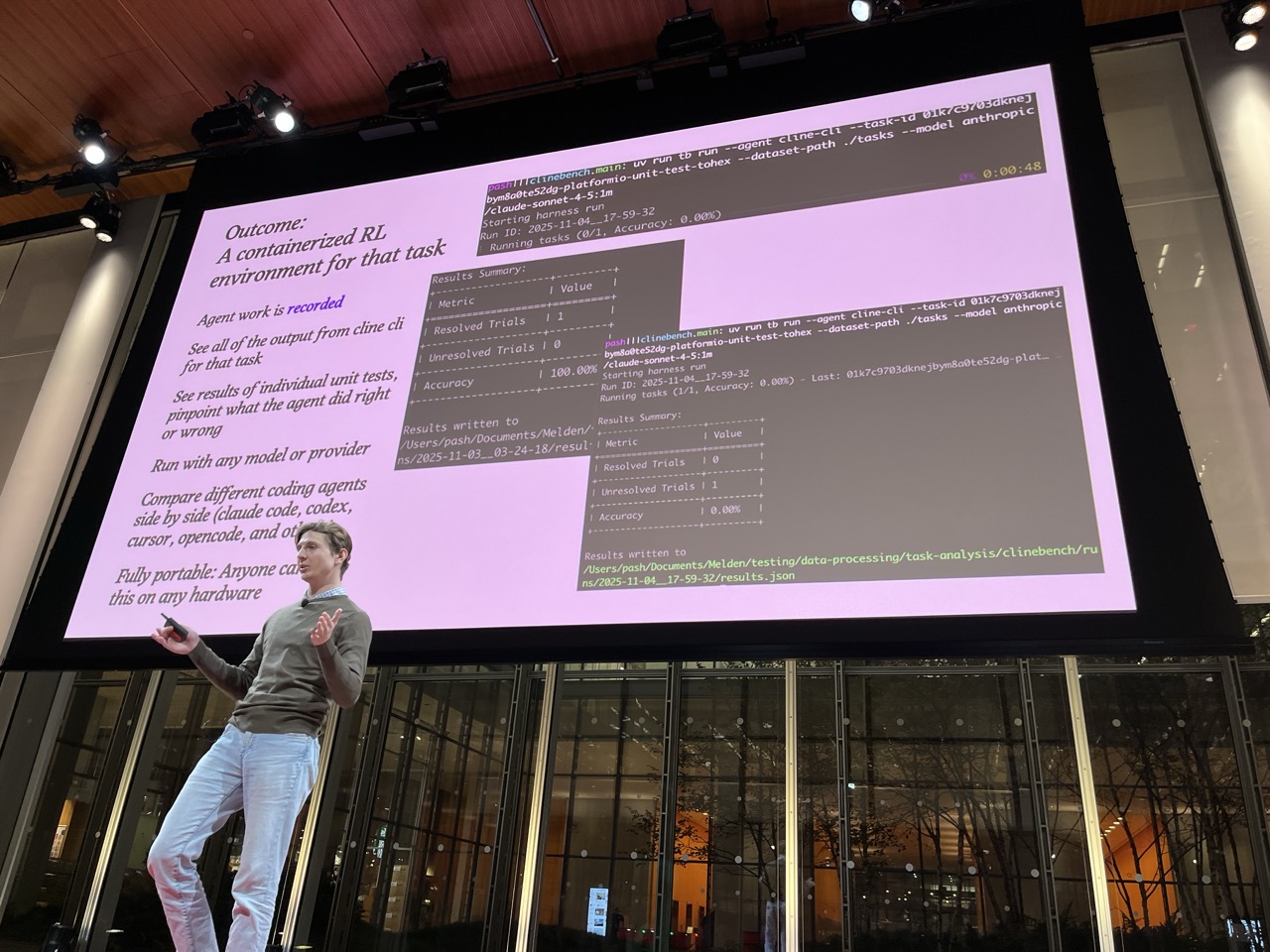

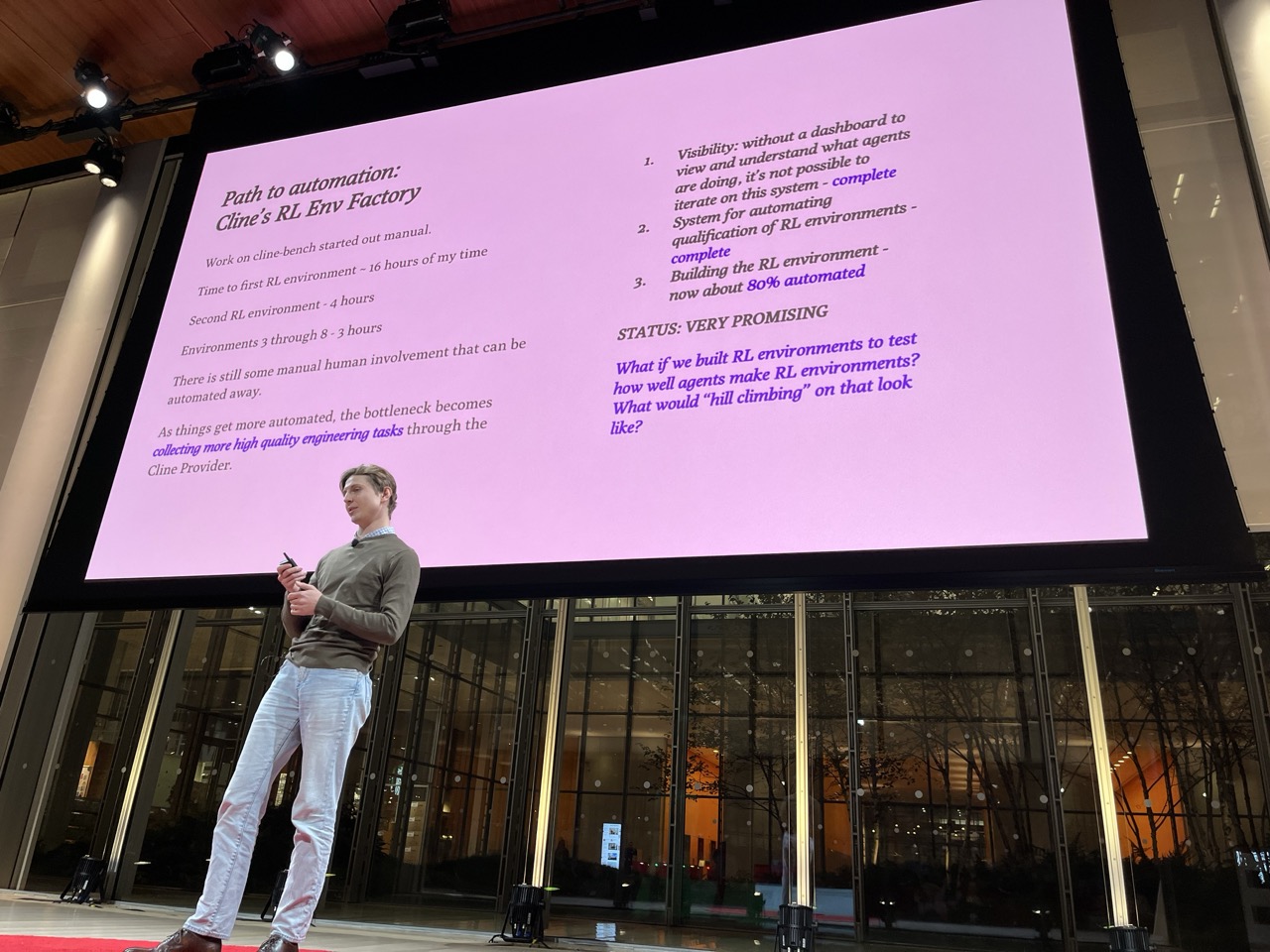

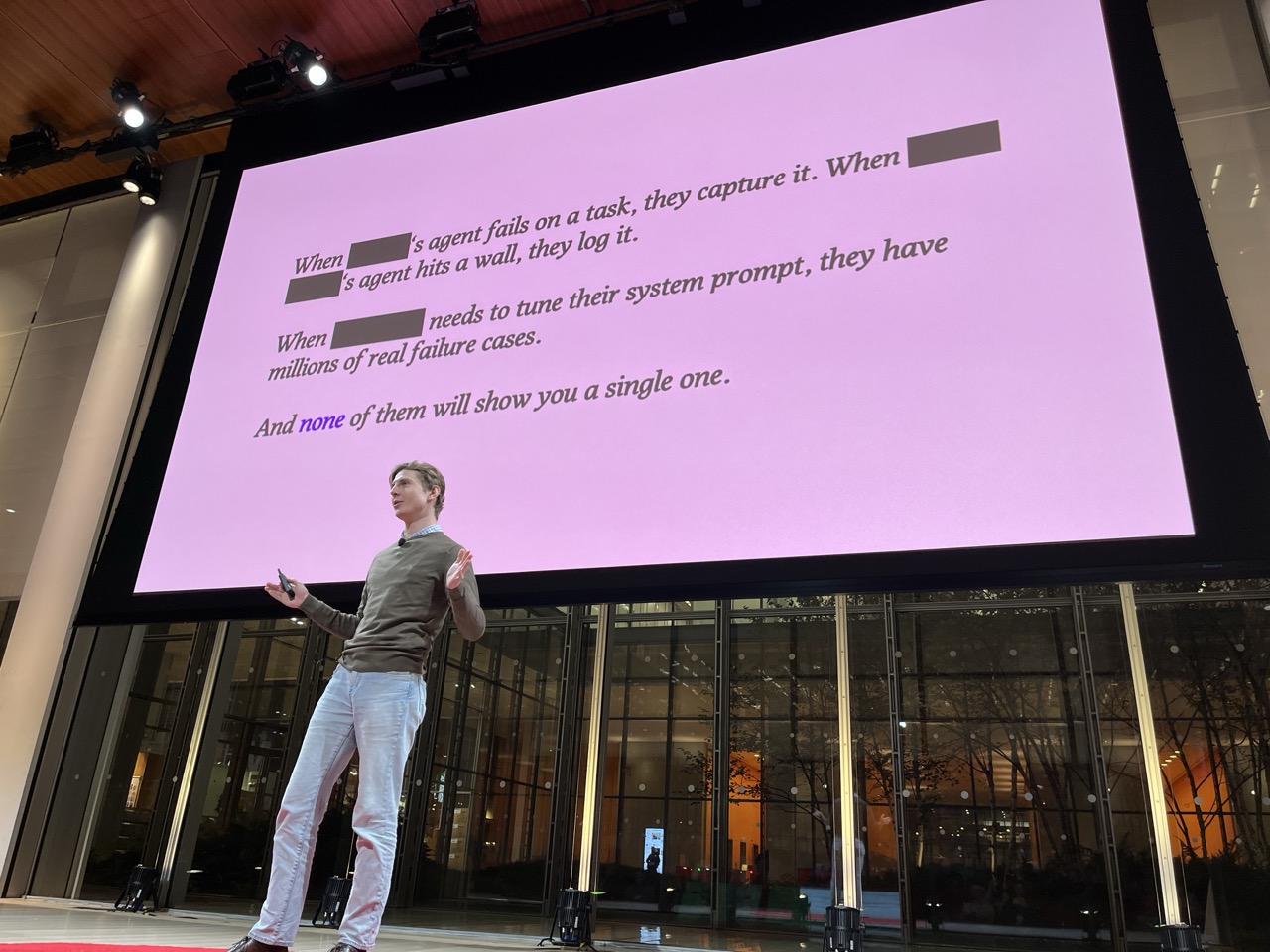

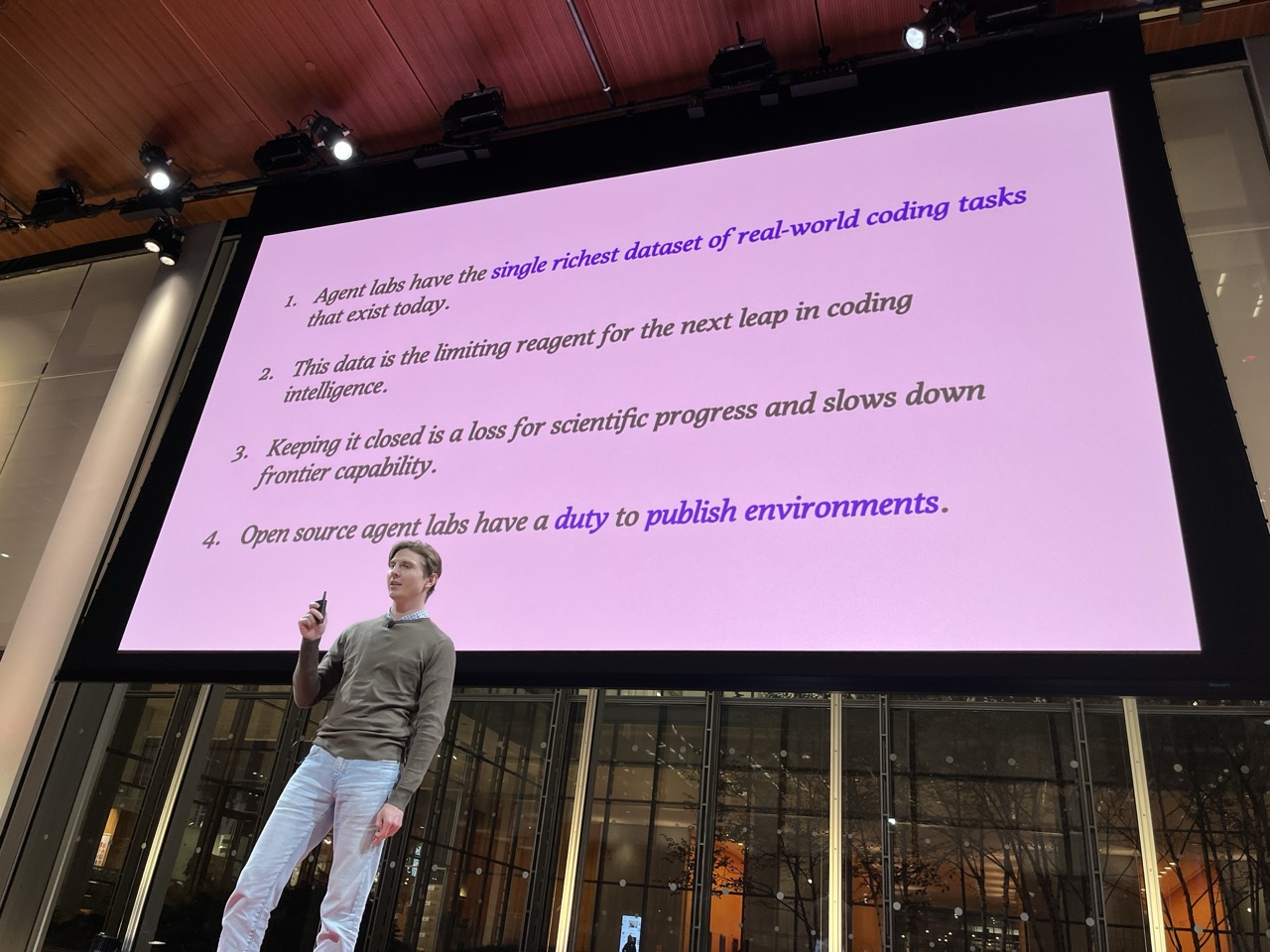

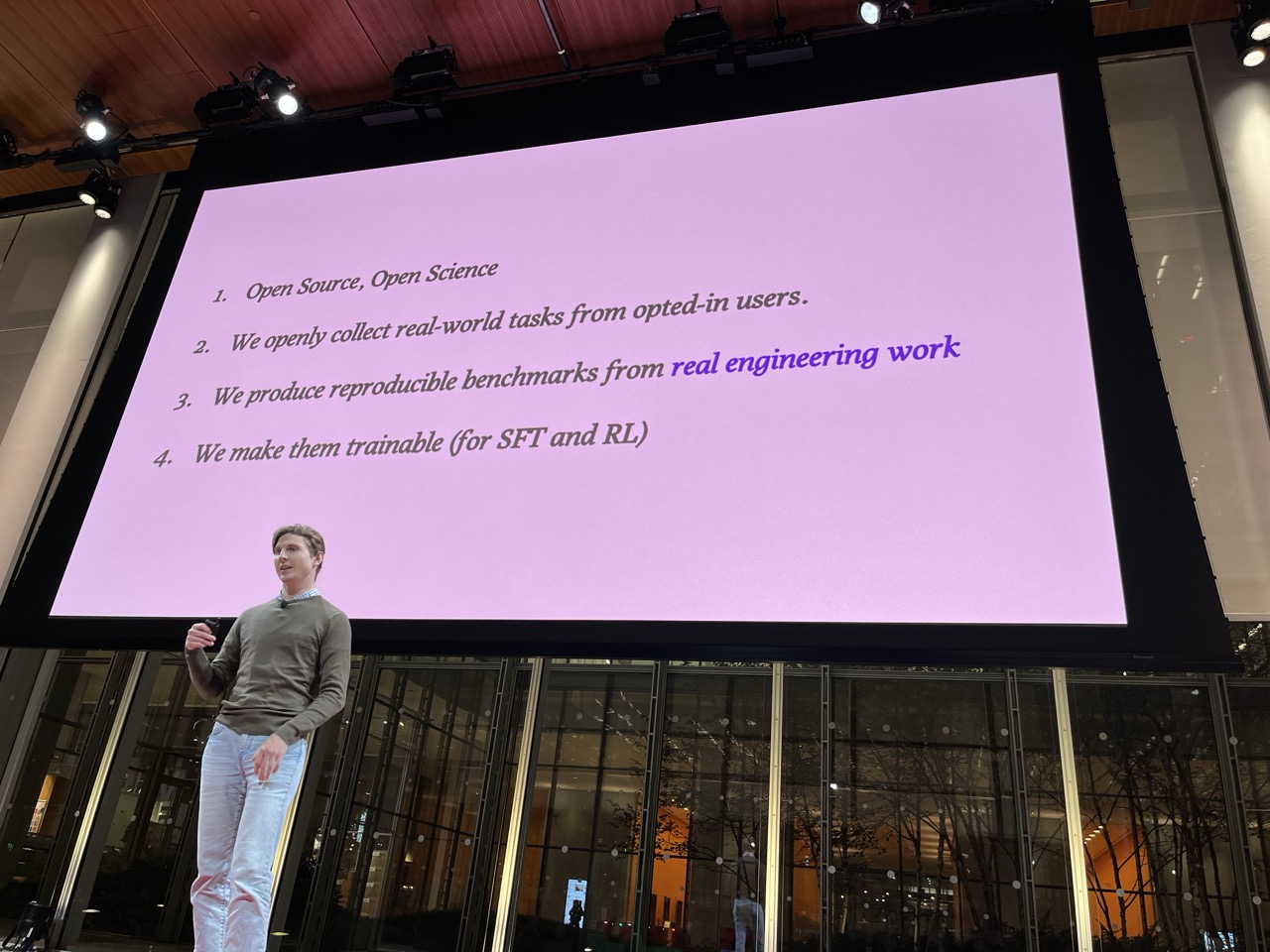

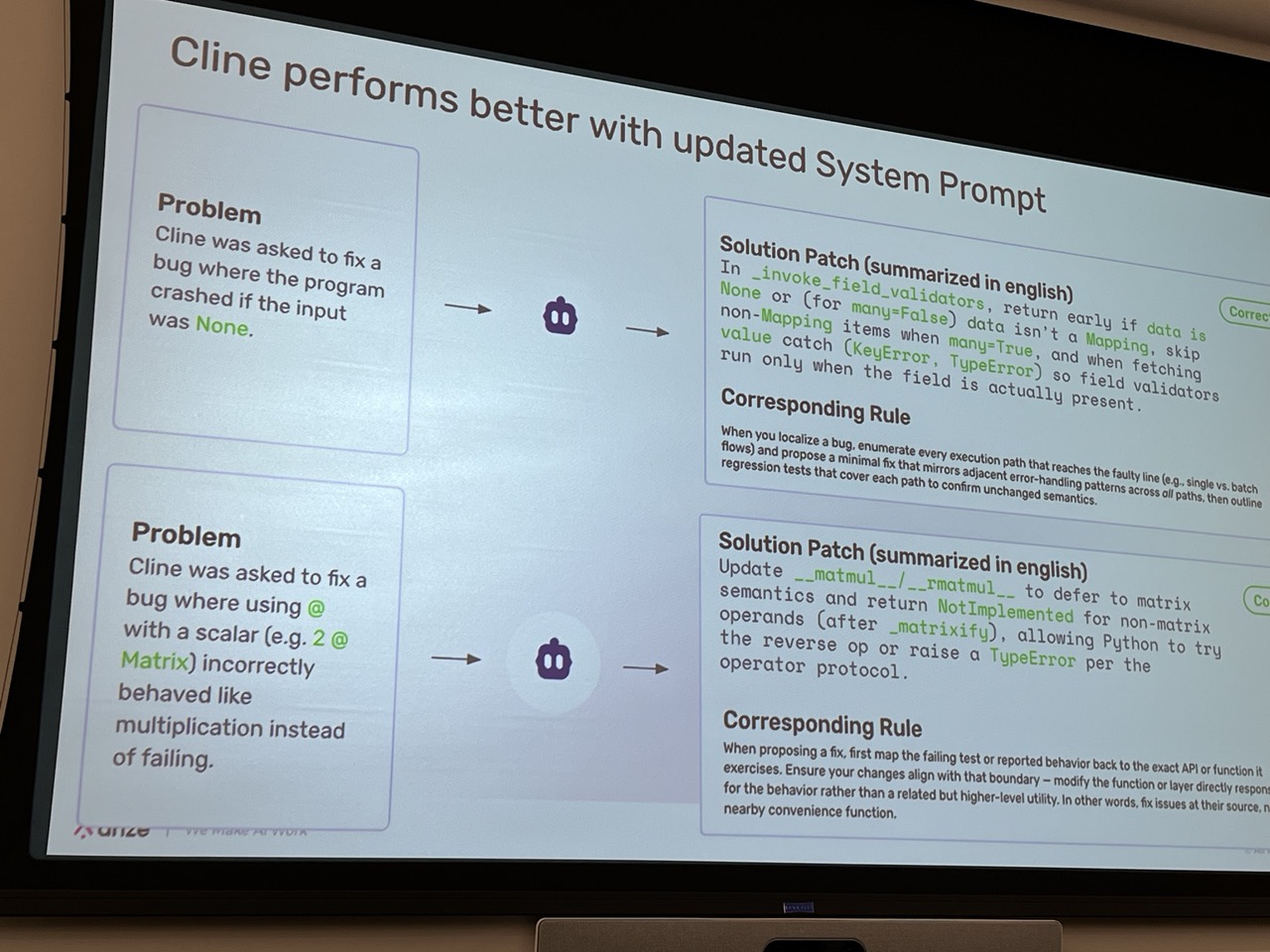

Hard-Won Lessons from Building Effective AI Coding Agents

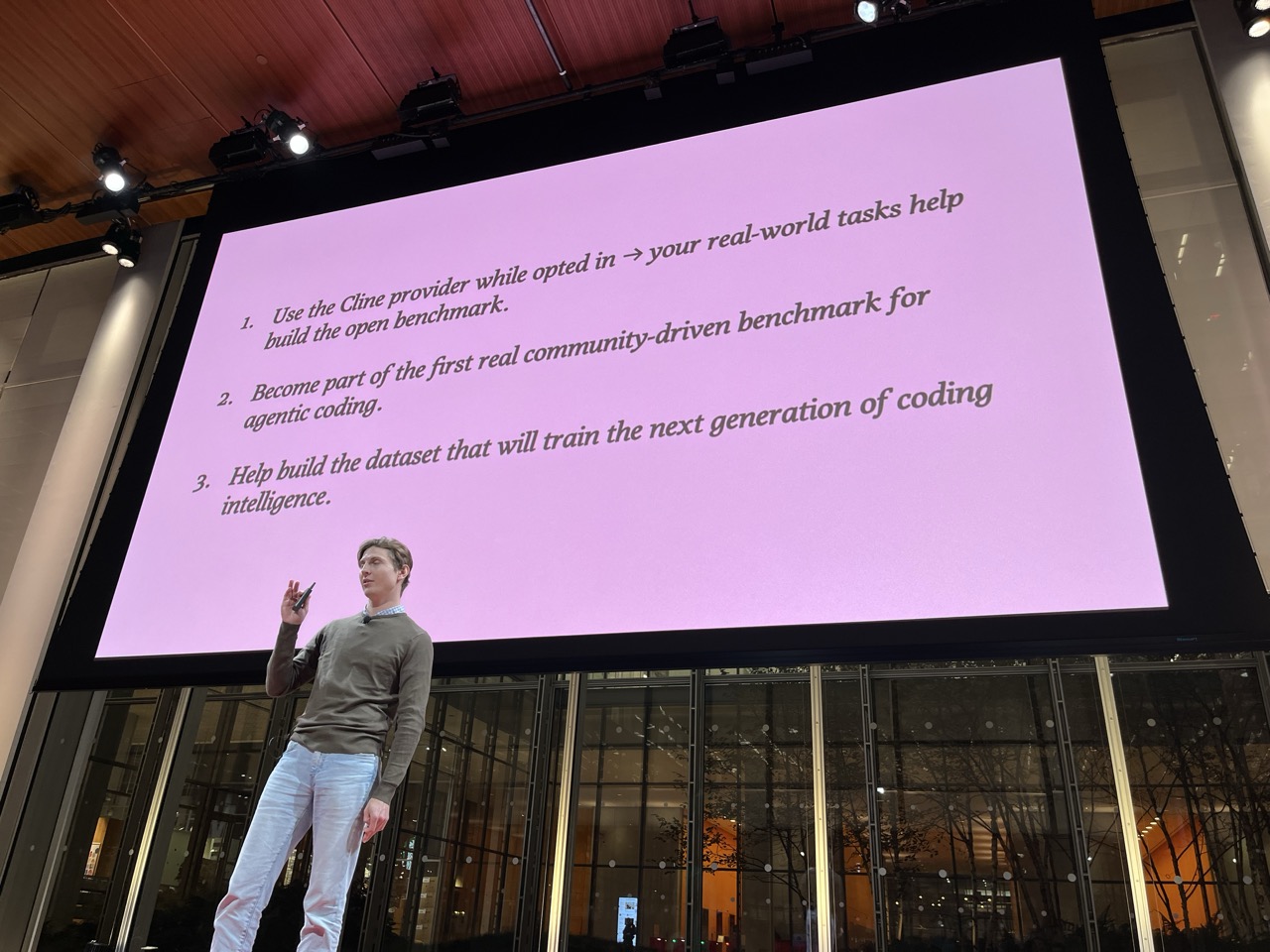

Nik Pash / Cline

Feisty talk. Cut straight to the point and showed how to build effective coding agents:

- Describes the simple recipe for success: benchmarks that are not cheatable

- Introduces cline-bench as a way to get REAL WORLD data on problems

- RL Environment Factory: Automate the creation of RL envs

- We need to collect more high quality engineering tasks

- Agent data from real-world tasks is where agents get better… and all the providers are scooping up this data…

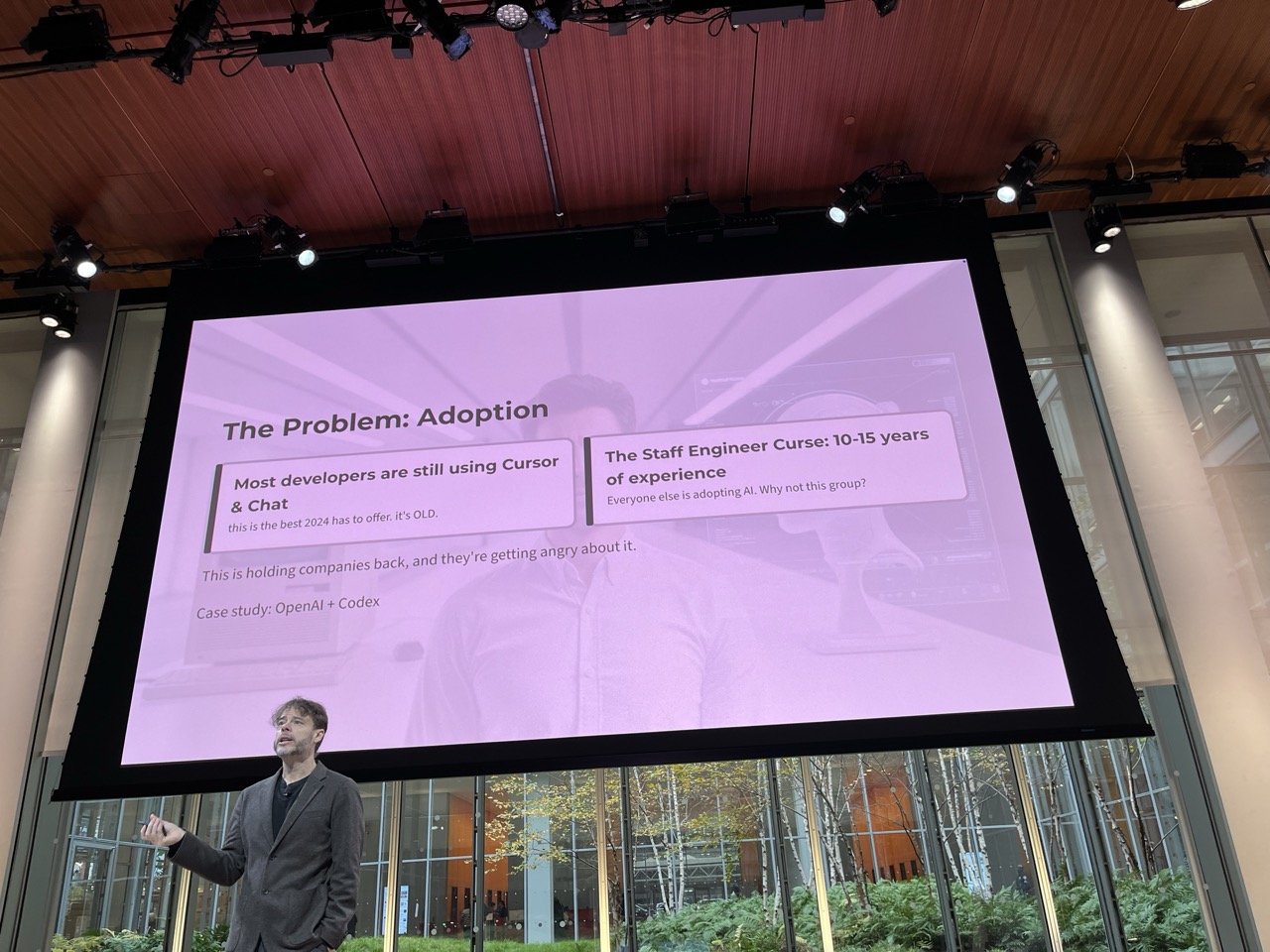

Benchmarks vs economics: the AI capability measurement gap

Joel Becker / METR

Big gap between self-reported efficacy increases and actual increases.

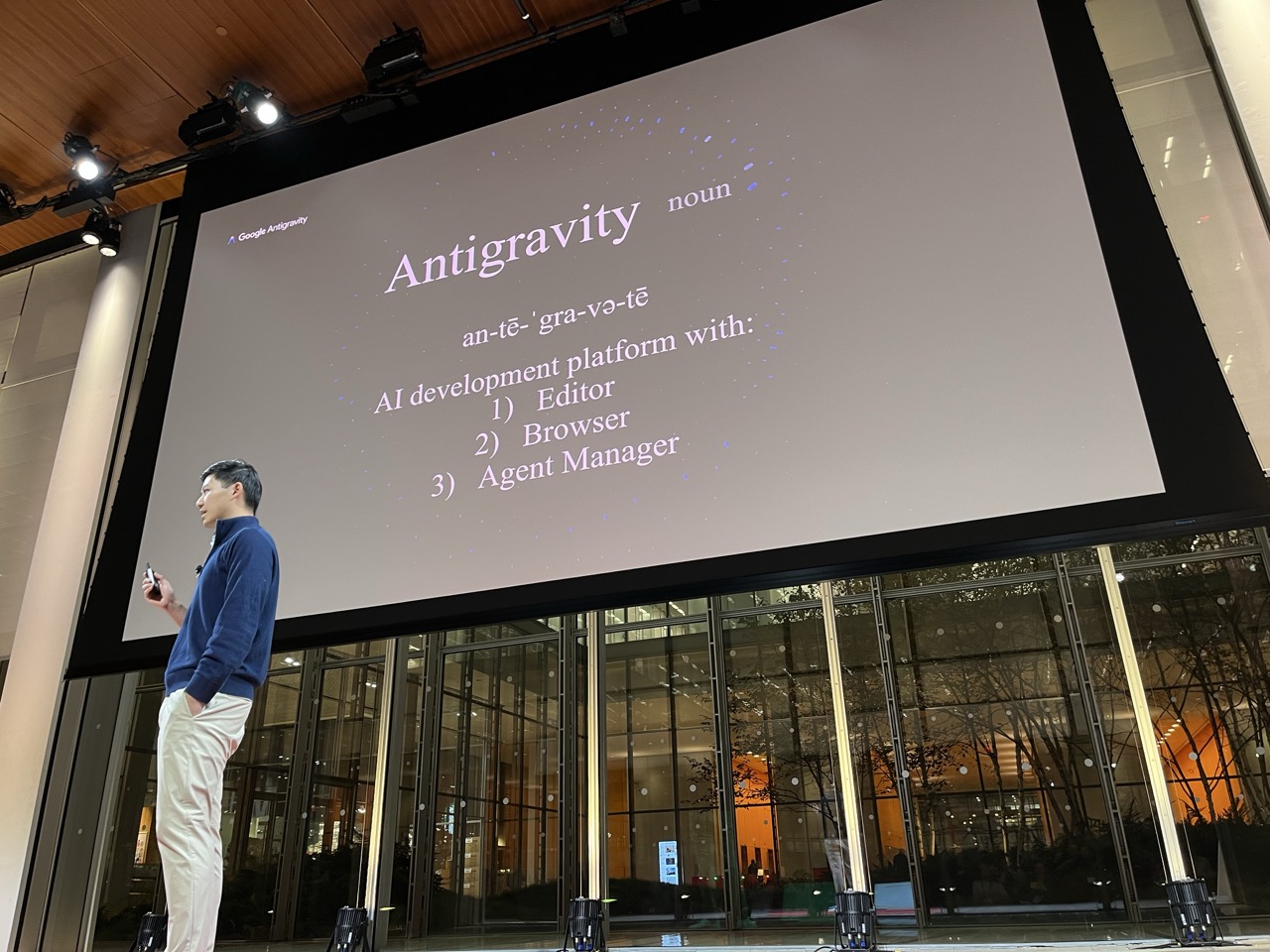

Defying Gravity

Kevin Hou / Google DeepMind

Product intro. Tight integration of Gemini and Nano Banana.

20251120 Leadership

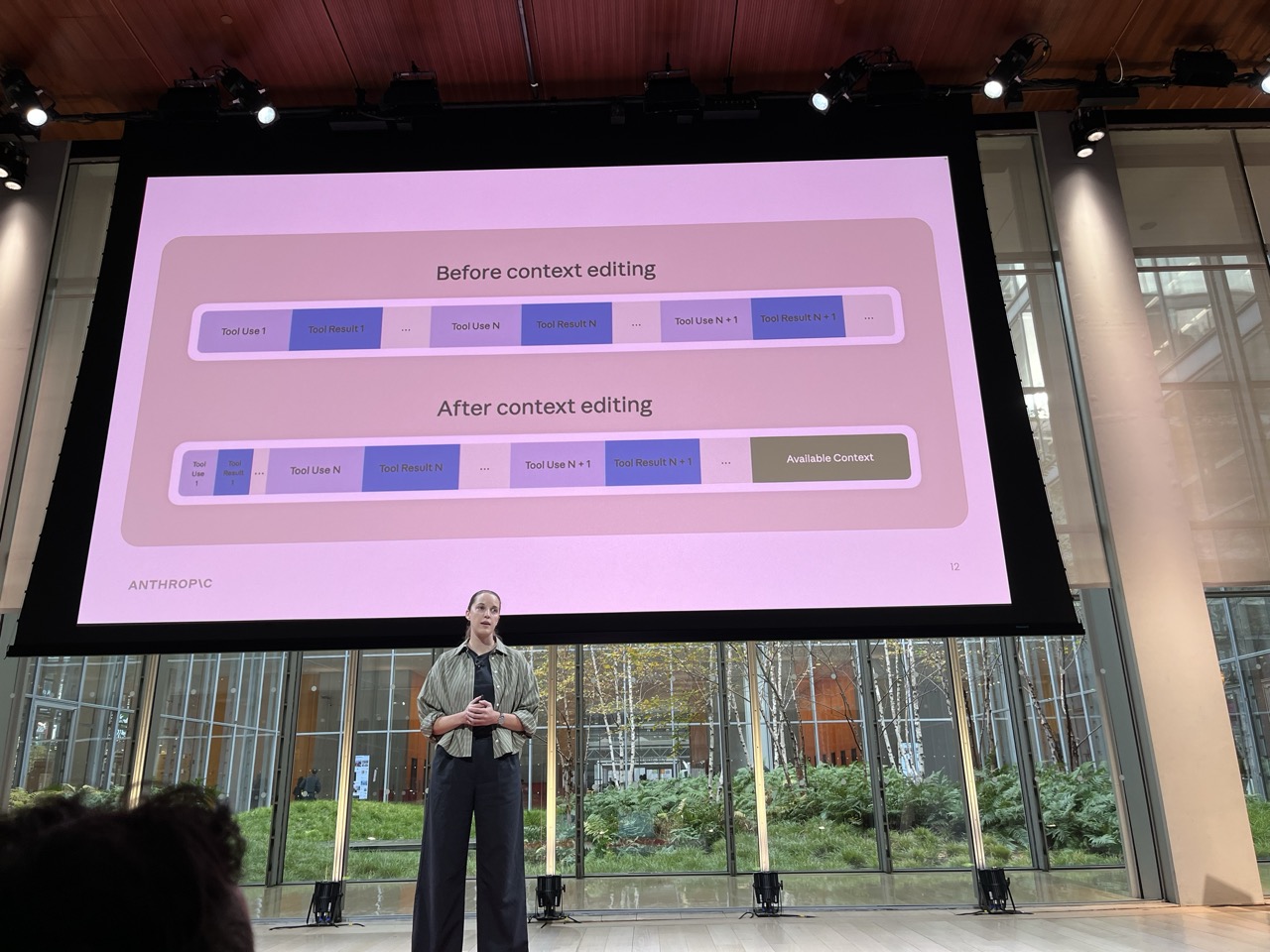

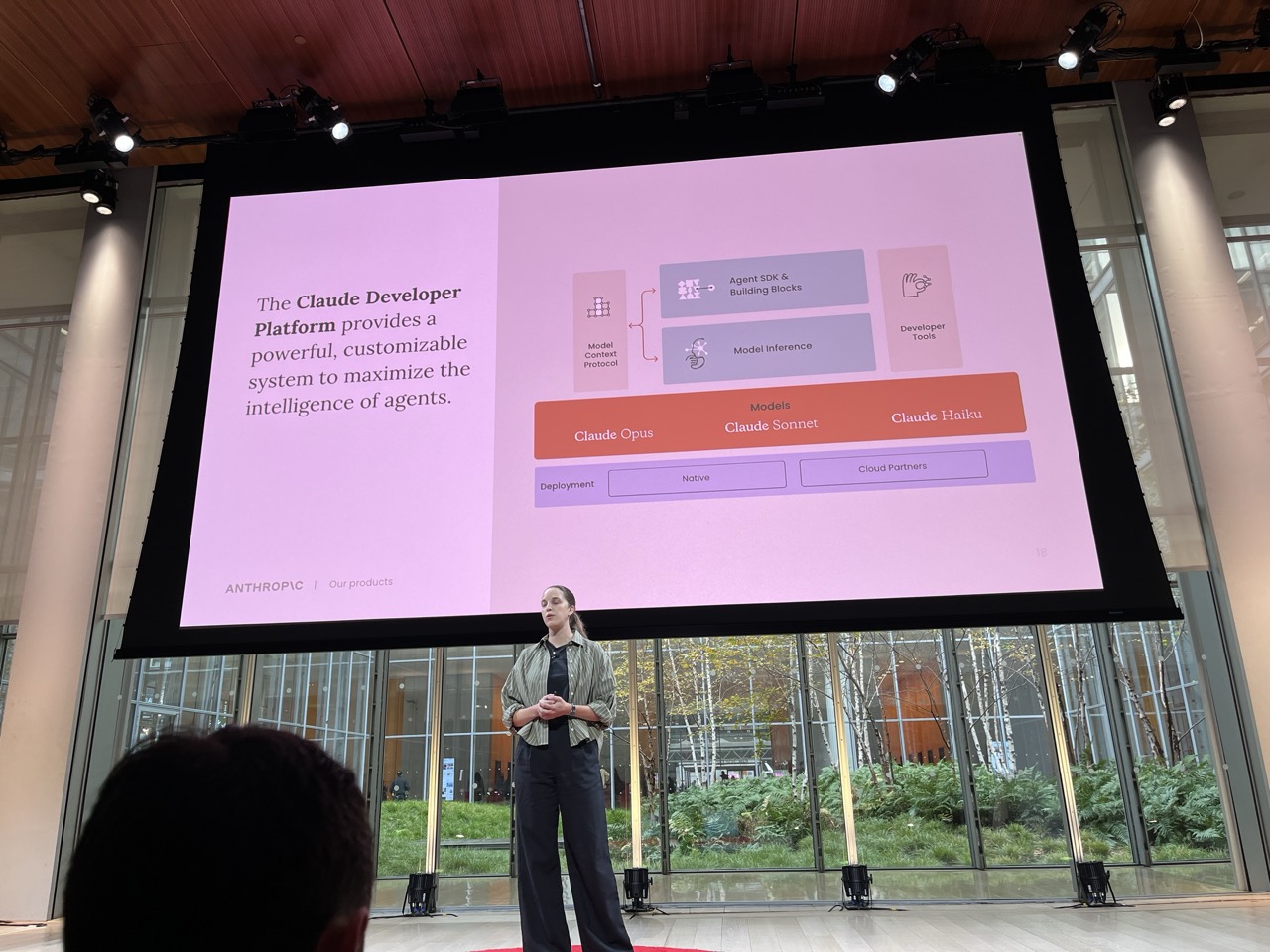

Evolving Claude APIs for Agents

Katelyn Lesse / Anthropic

Overview of skills. Main idea is context window engineering to maintain focus of the core agent.

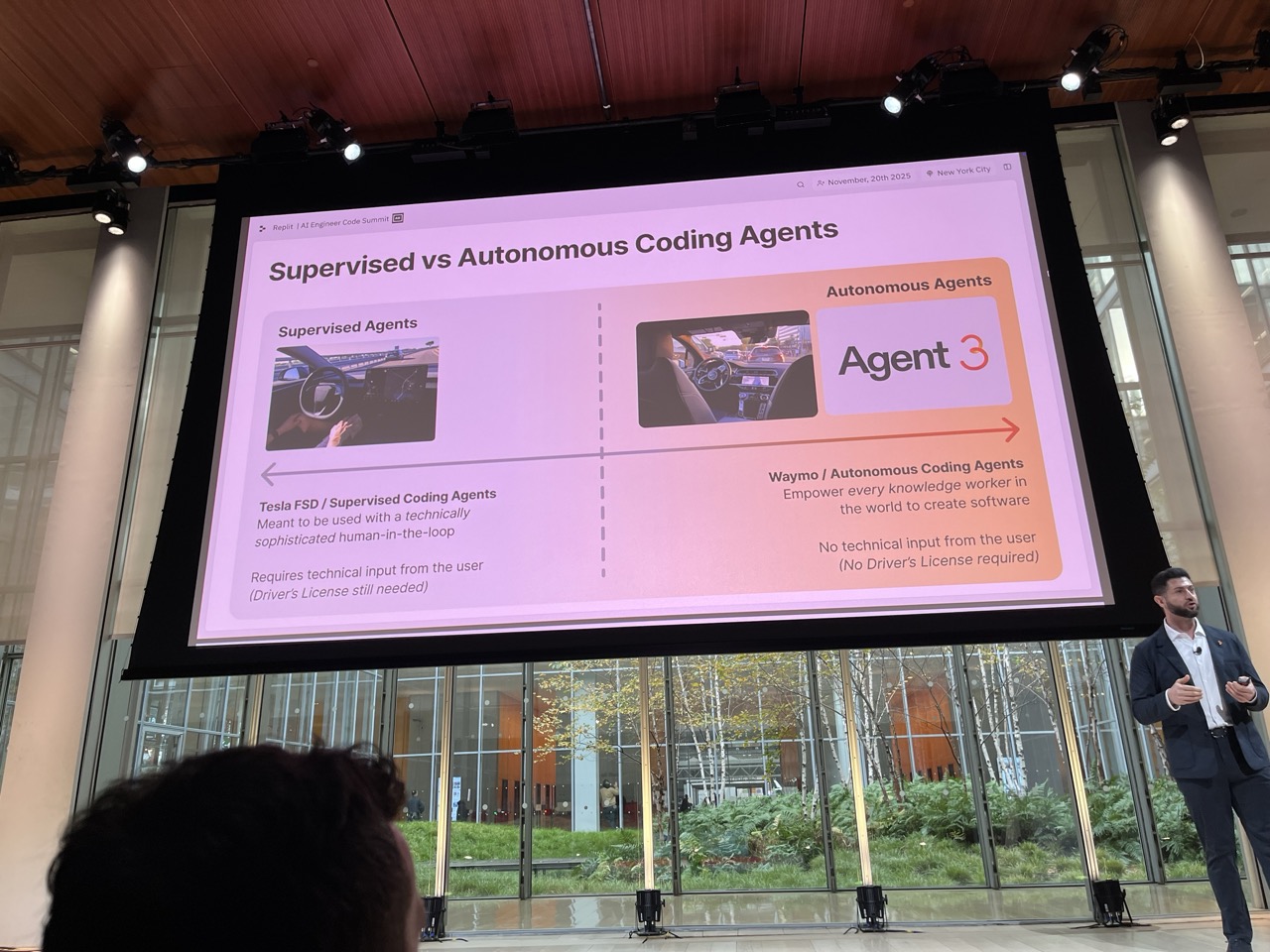

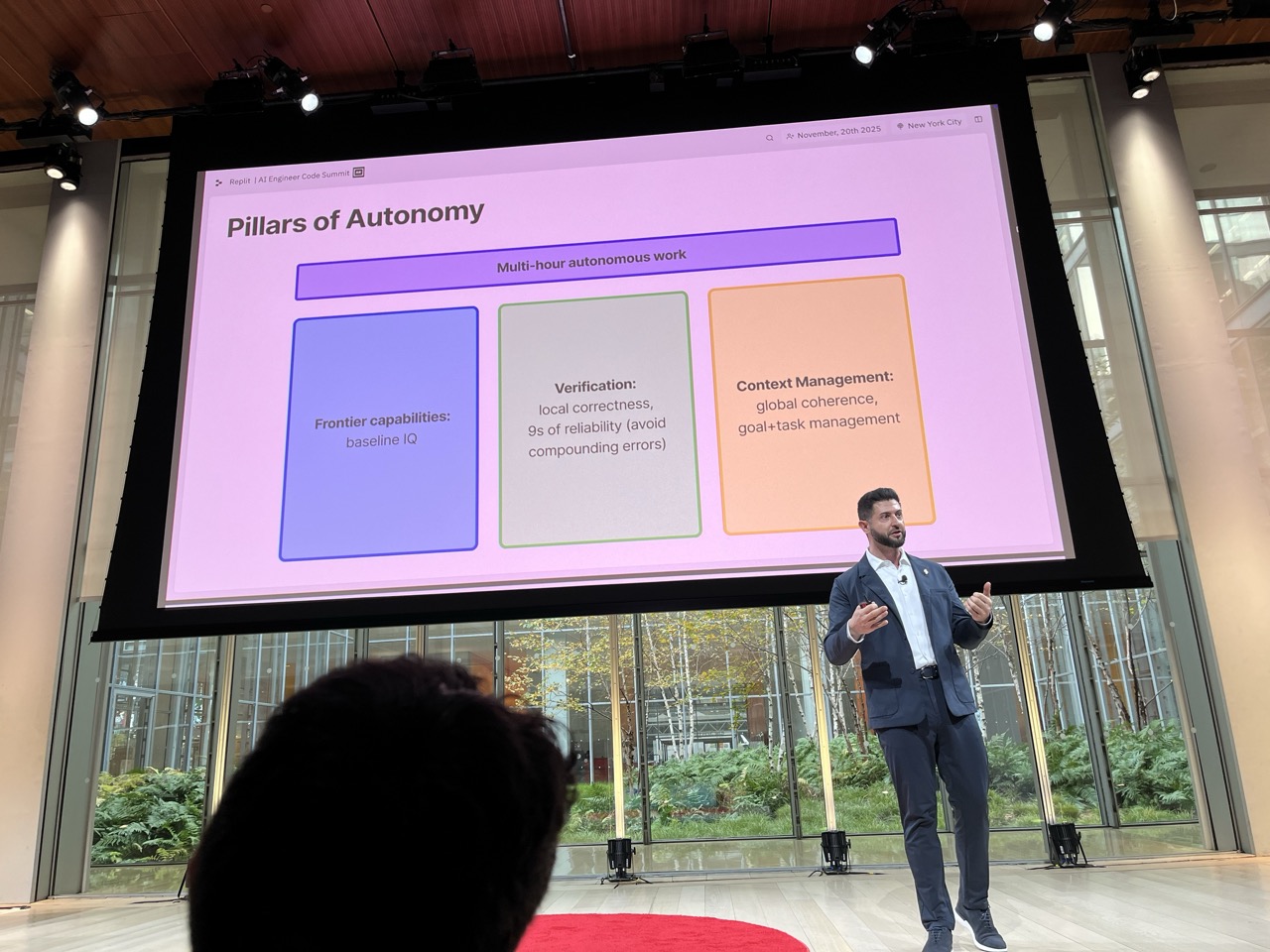

Autonomy Is All You Need

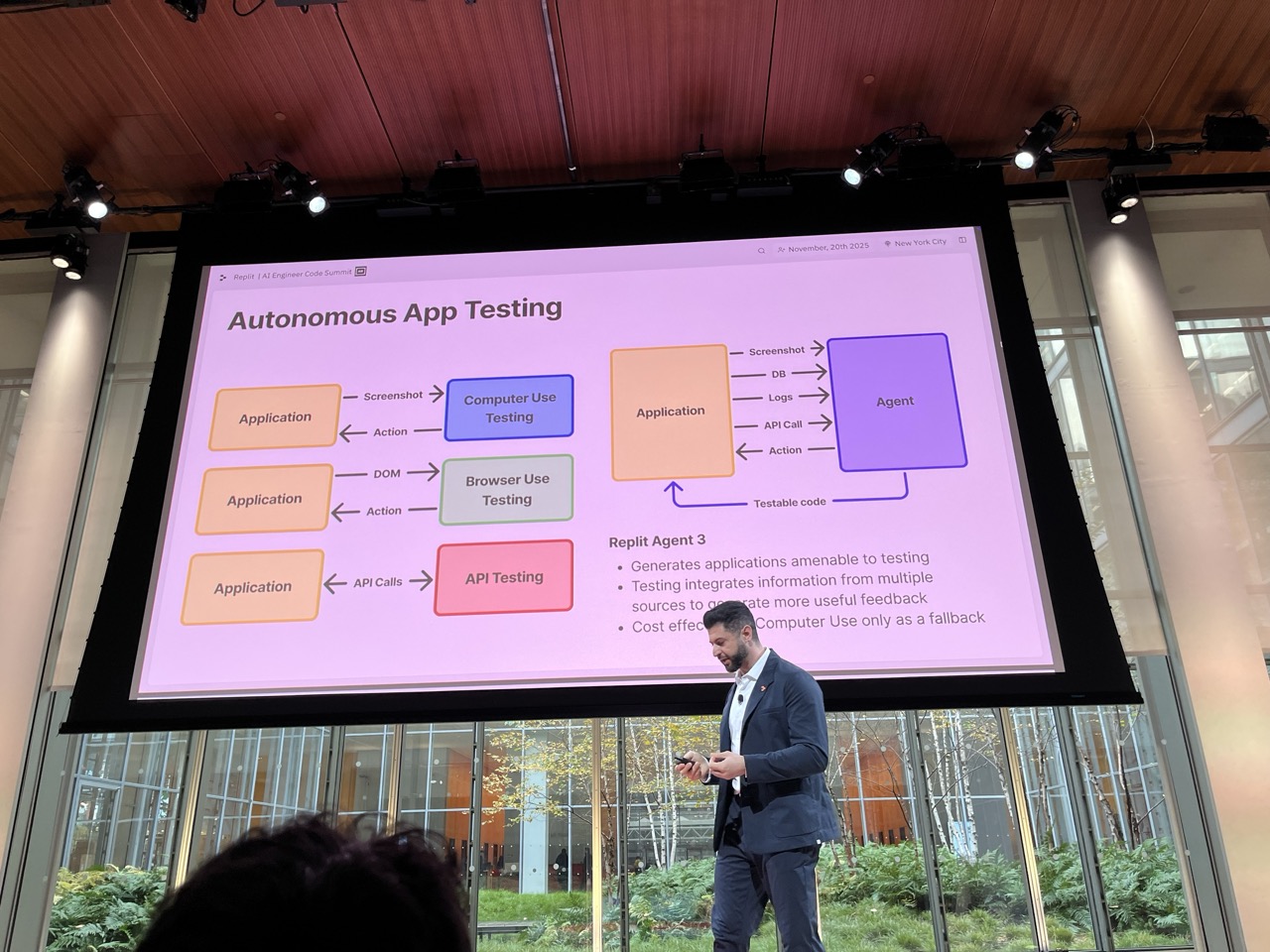

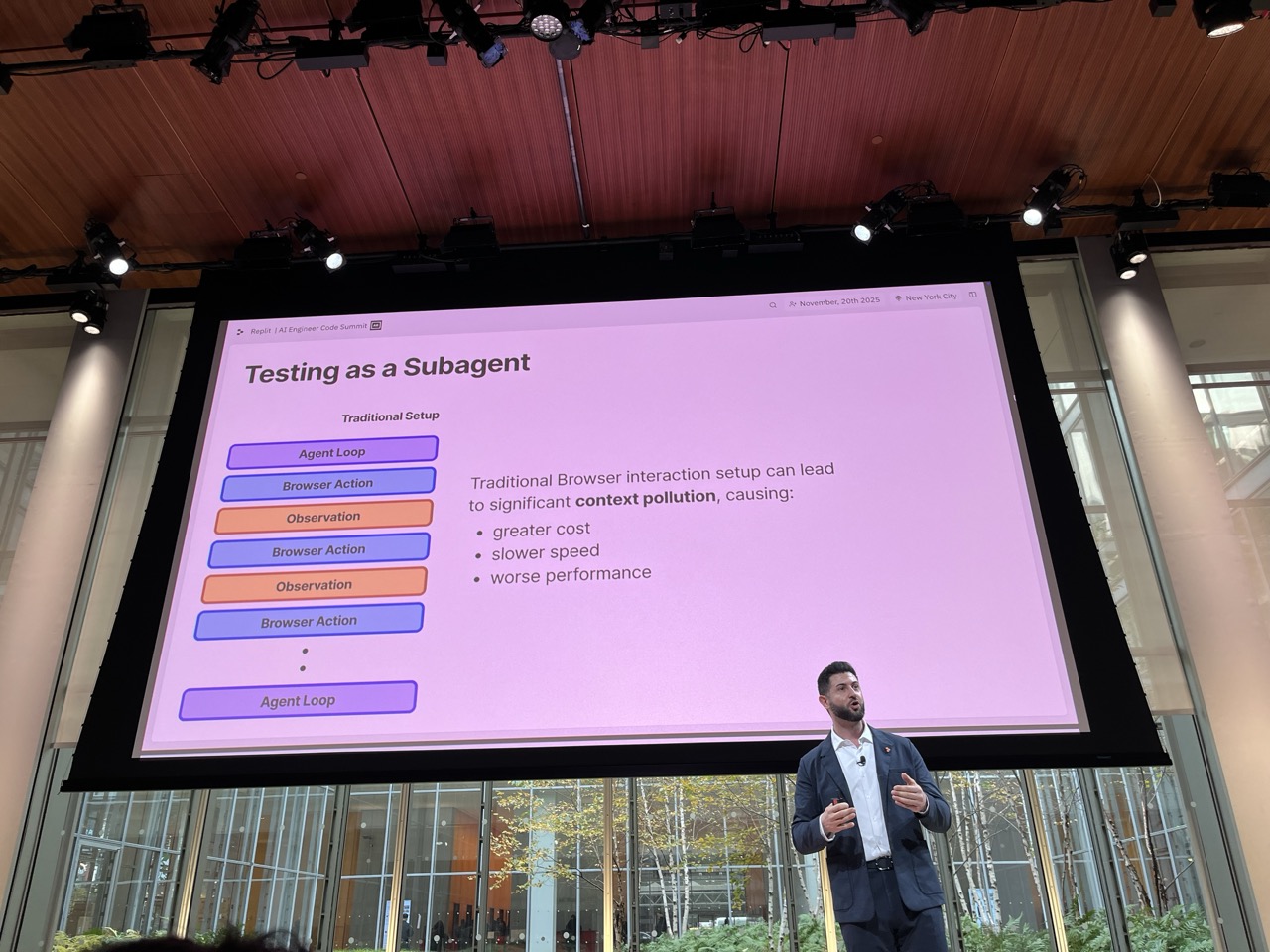

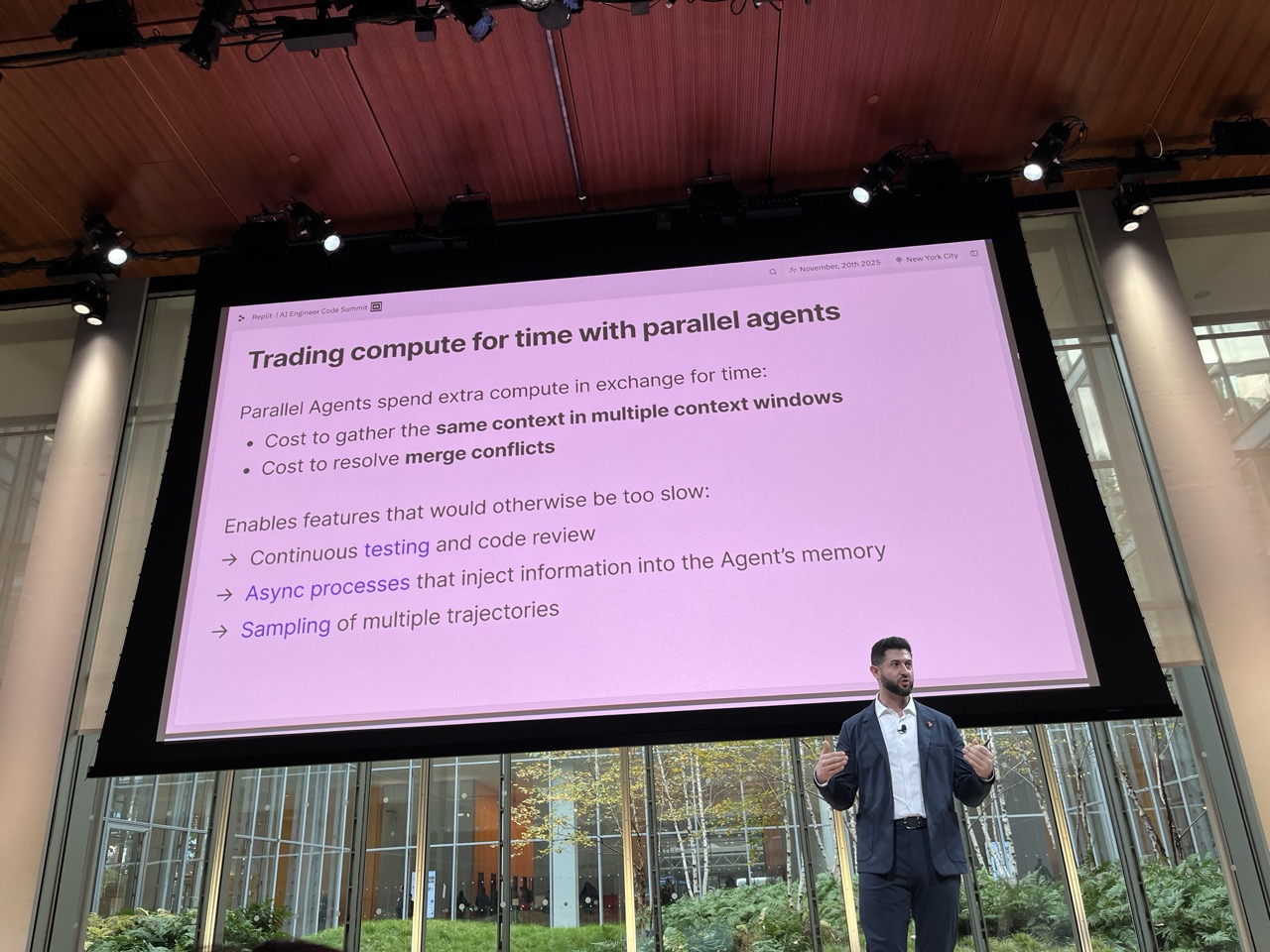

Michele Catasta / Replit

Replit is interested in creating coding environments for complete end-to-end app creation by non-coders. They want to reach a low error rate but still see lots of “painted doors” in their apps: elements that show up in the UI but do not do anything.

They are investing in long-running agents that scan codebases and autocorrect all errors. The builder agent is being trained to build apps that are amenable to testing.

Significant effort is going into parallel, browser-automated testing.

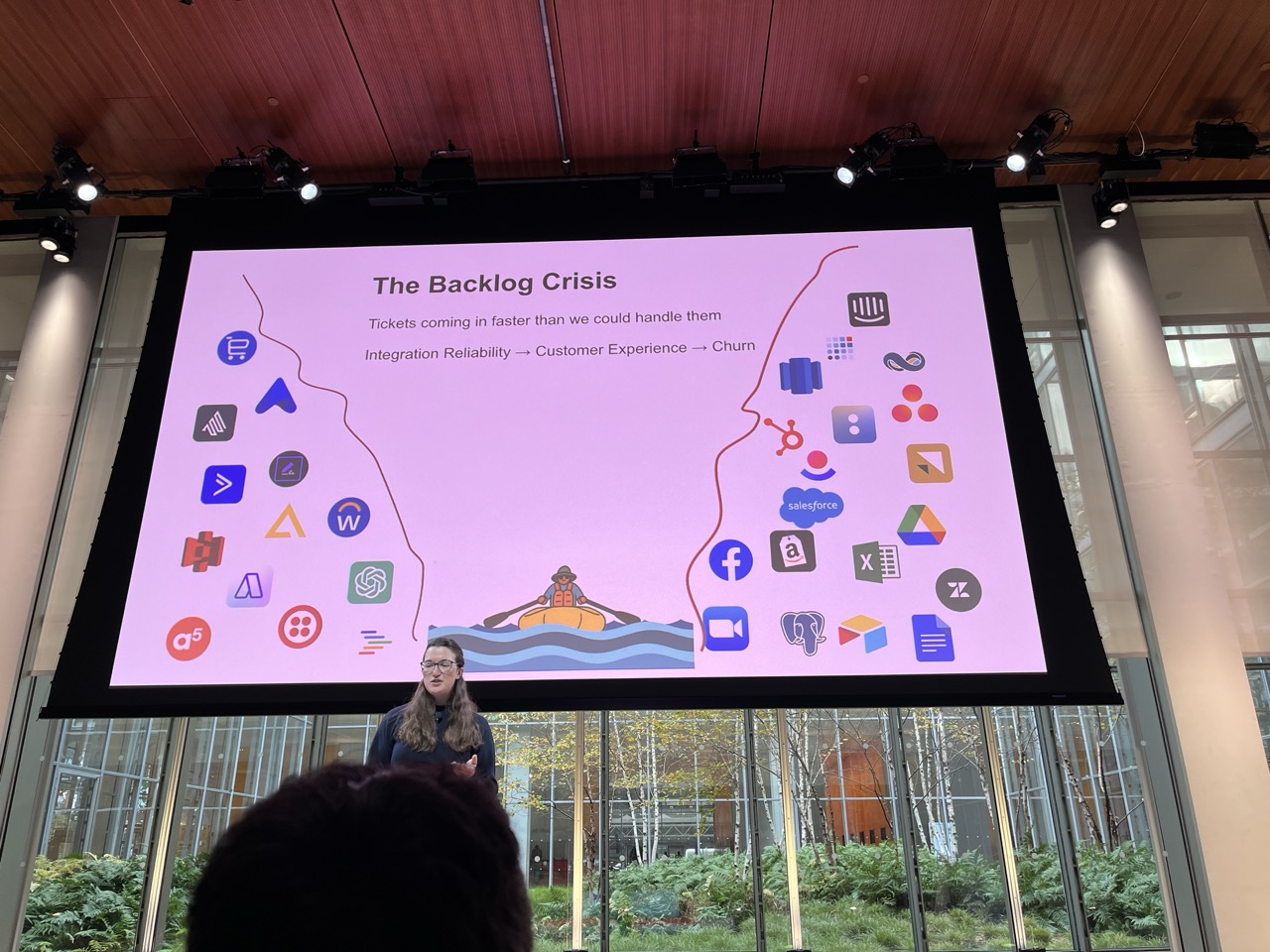

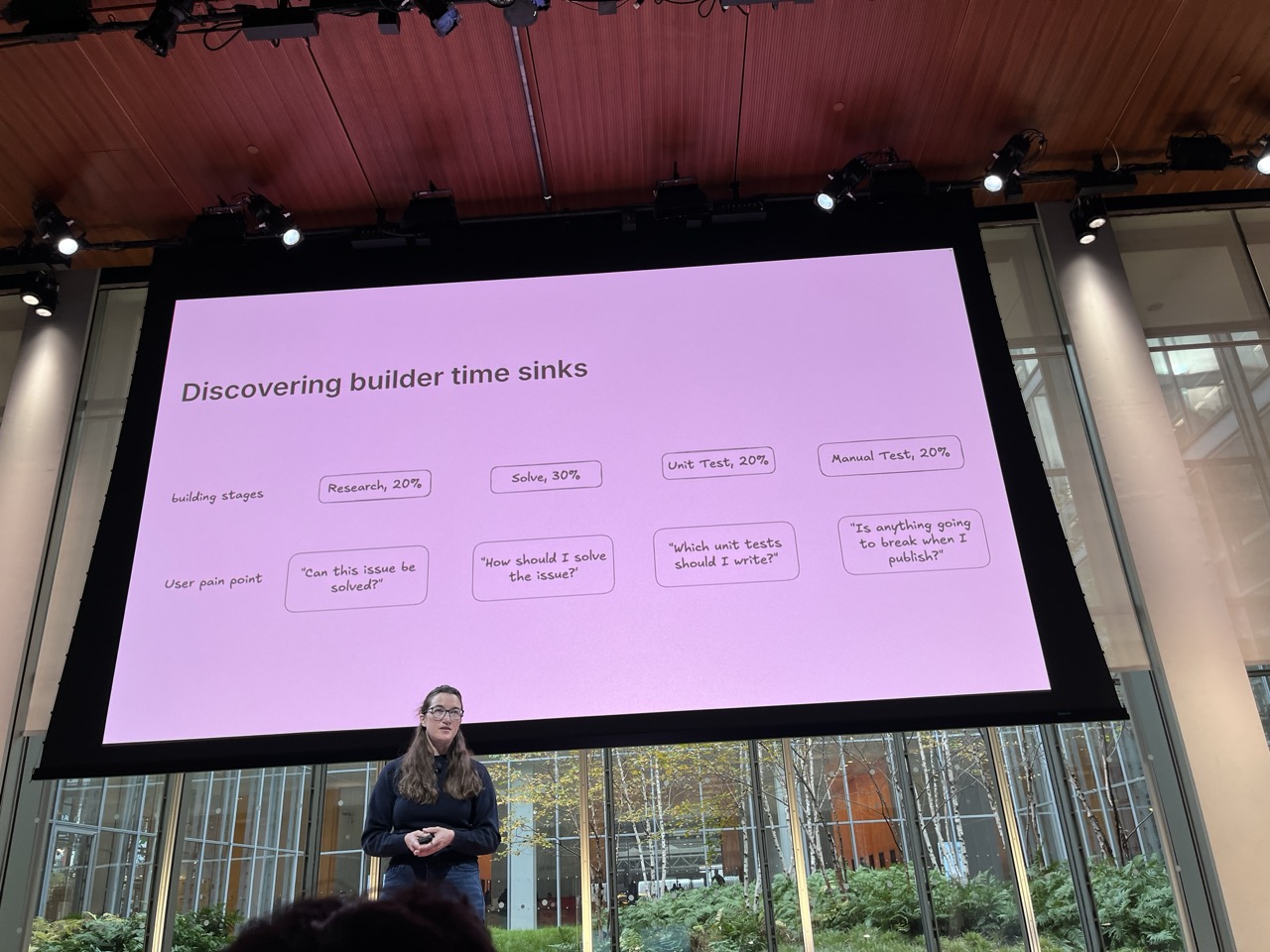

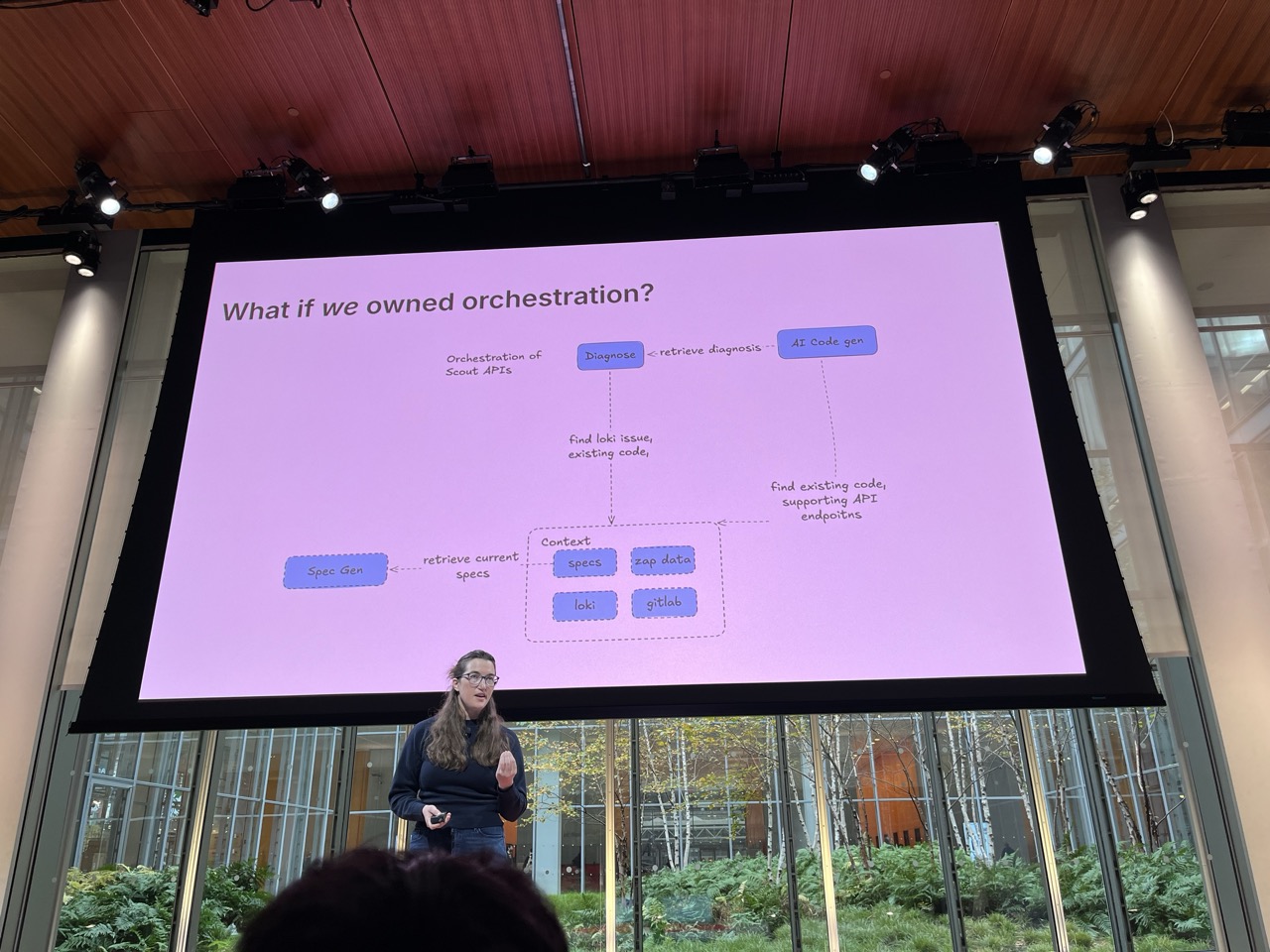

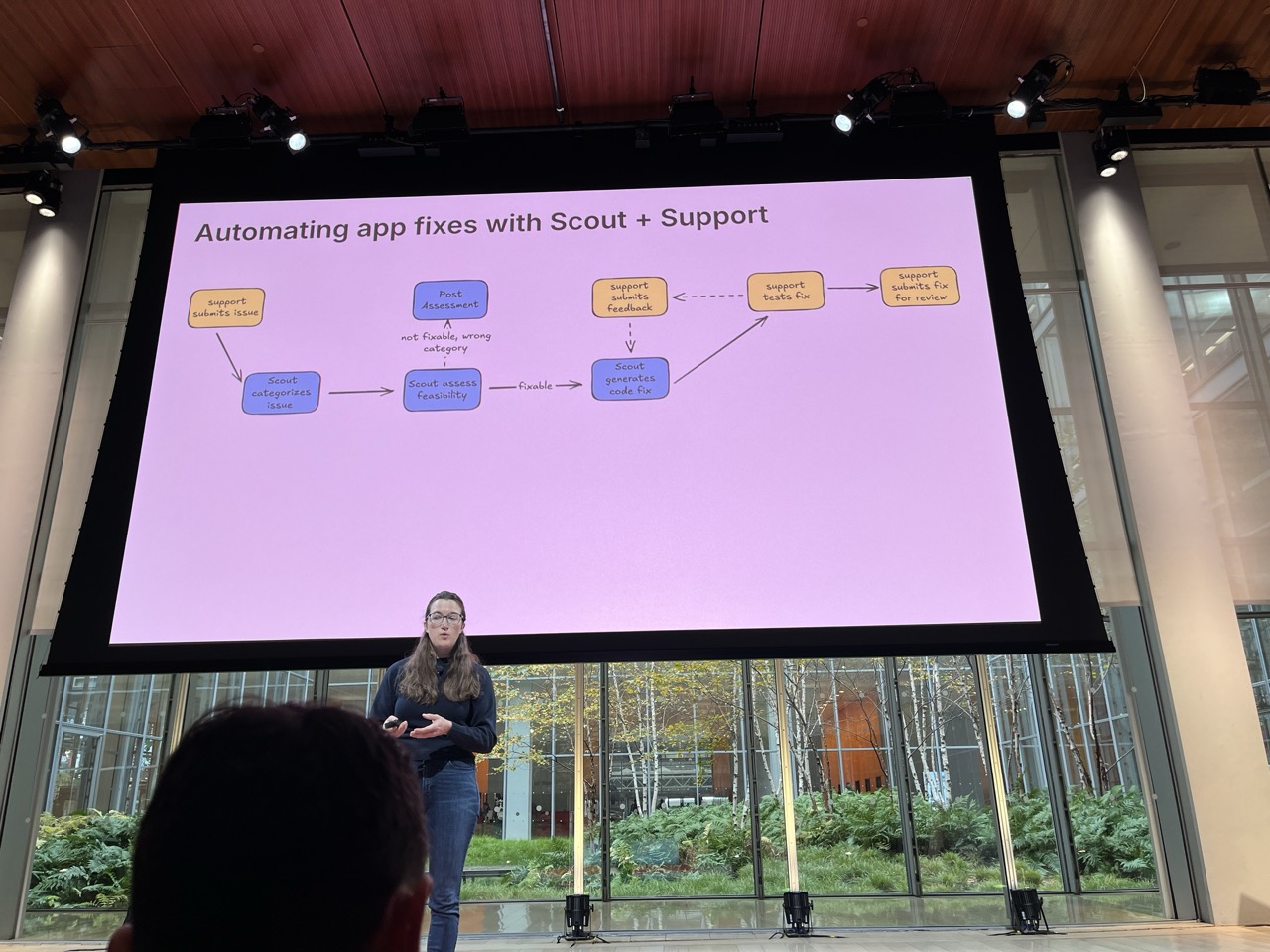

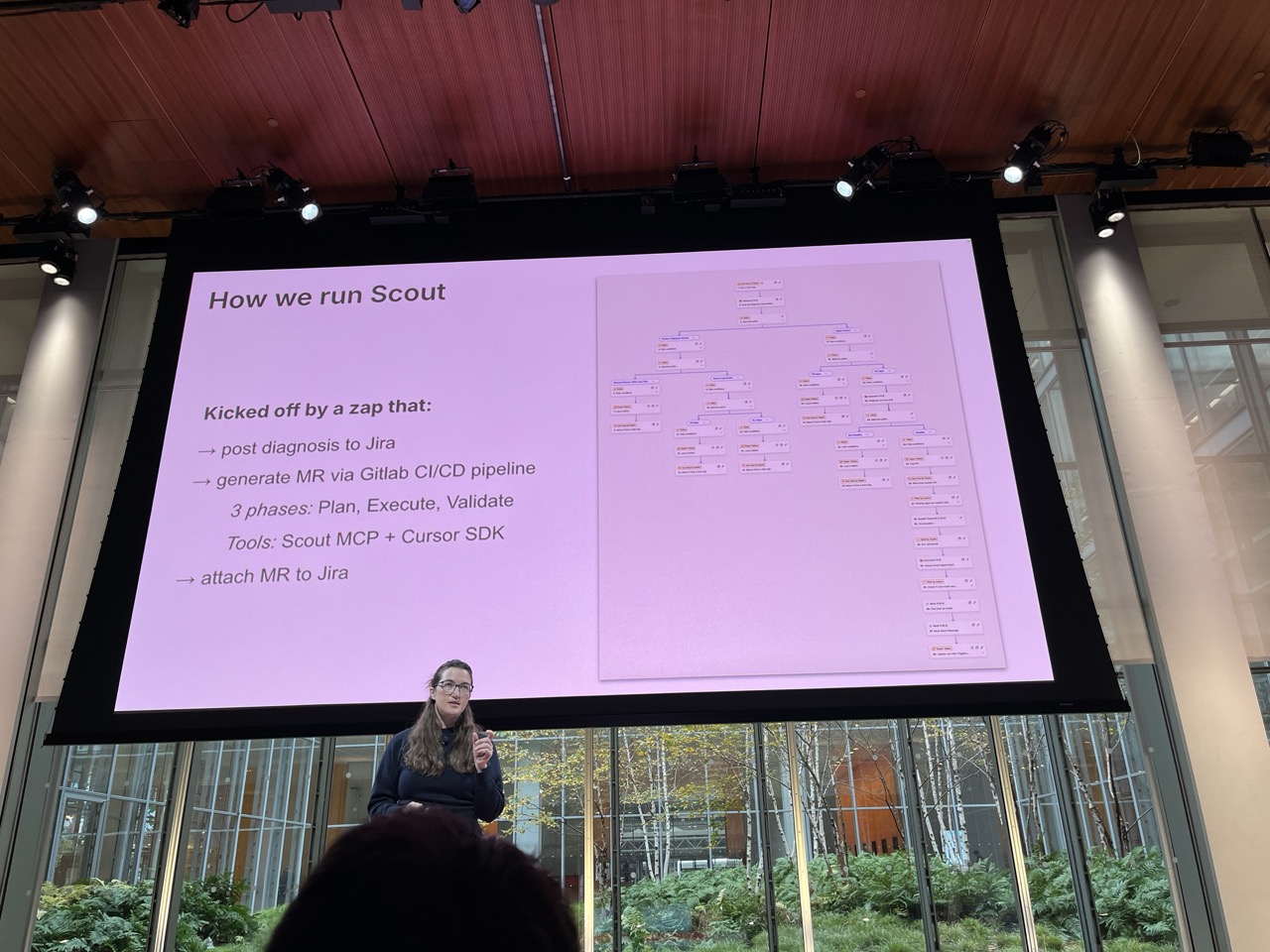

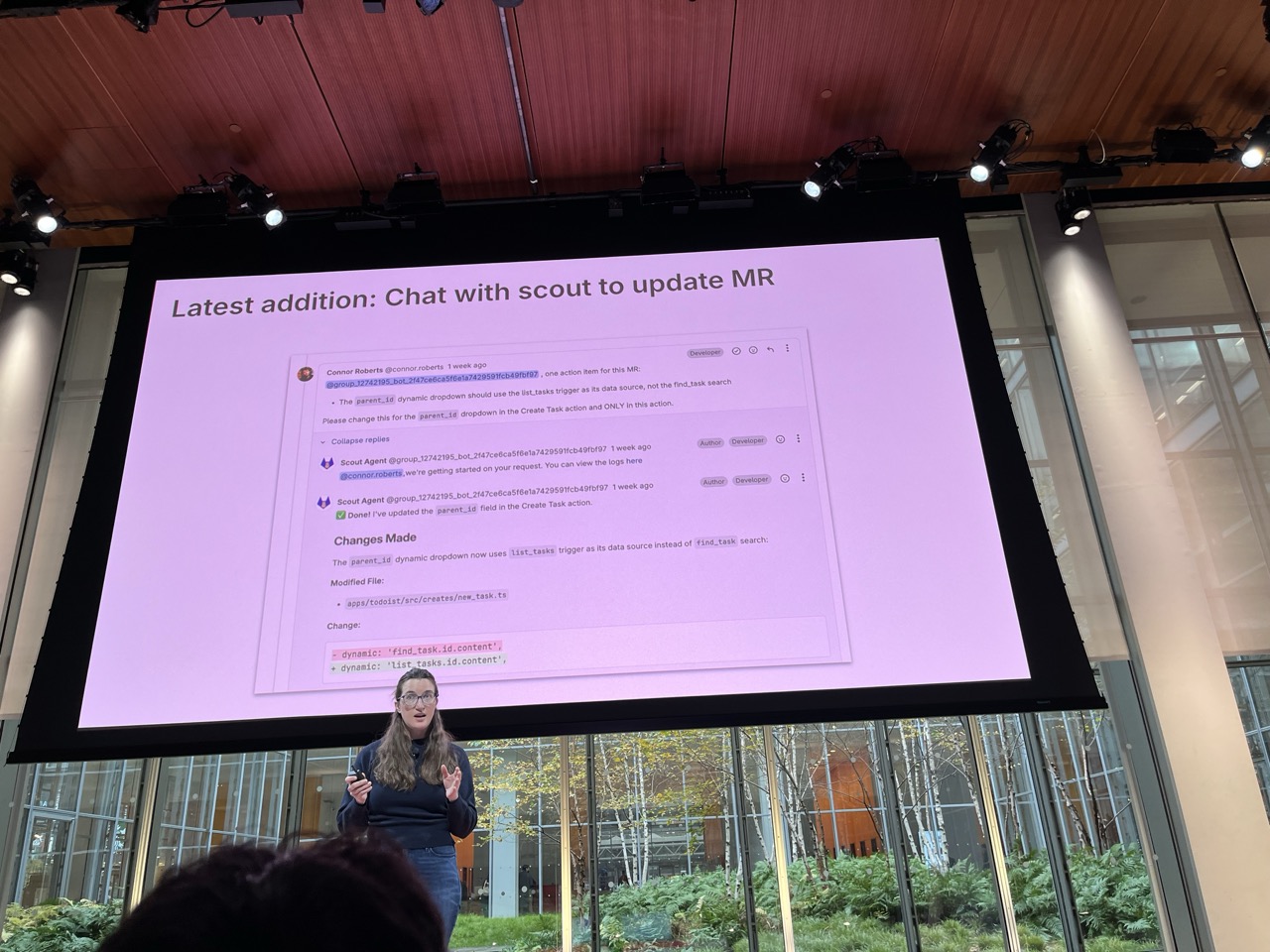

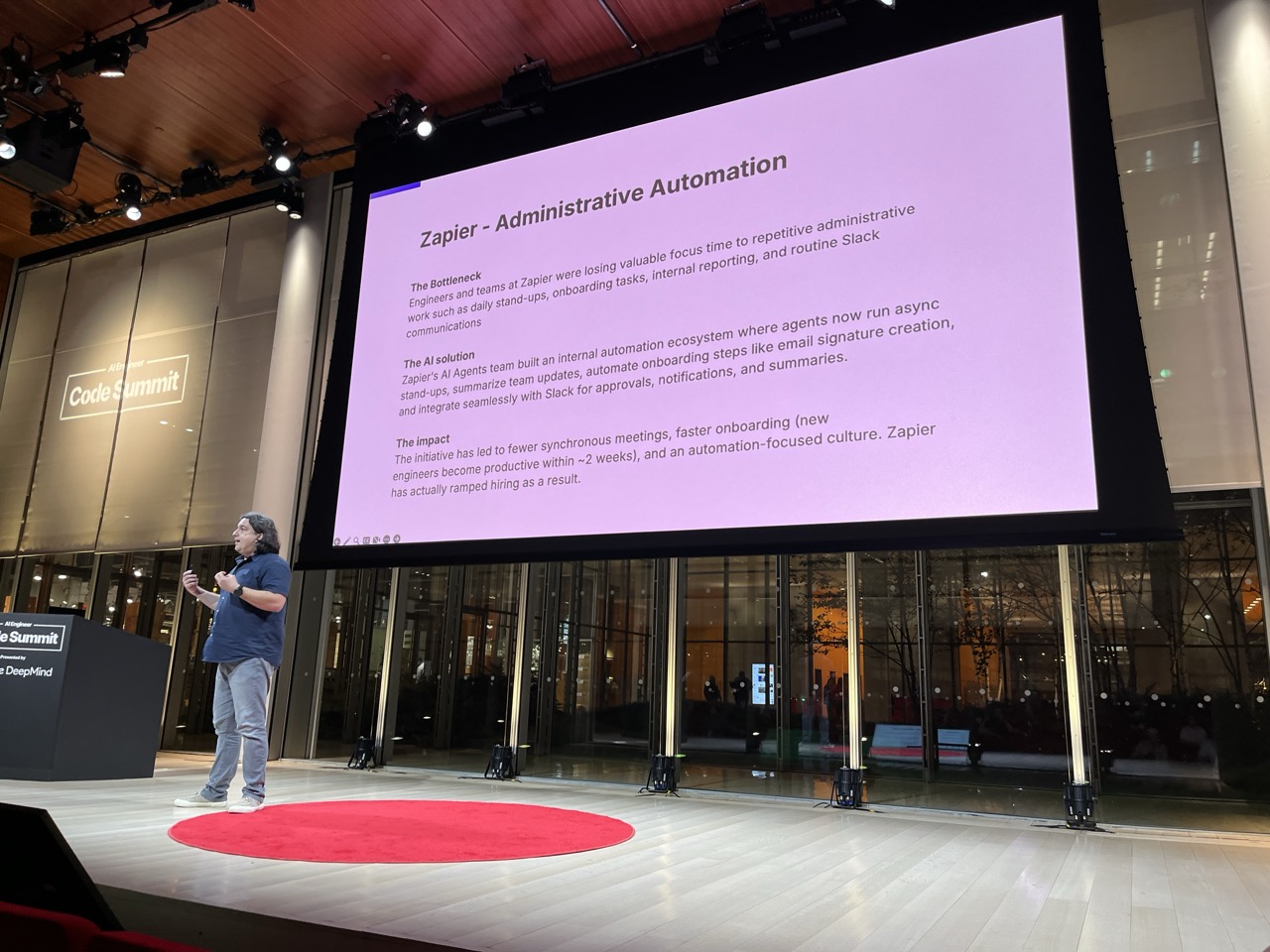

Your Support Team Should Ship Code

Lisa Orr / Zapier

Building tools that let the support team auto-create tickets, identify issues, and author patches to the upstream code causing the issue.

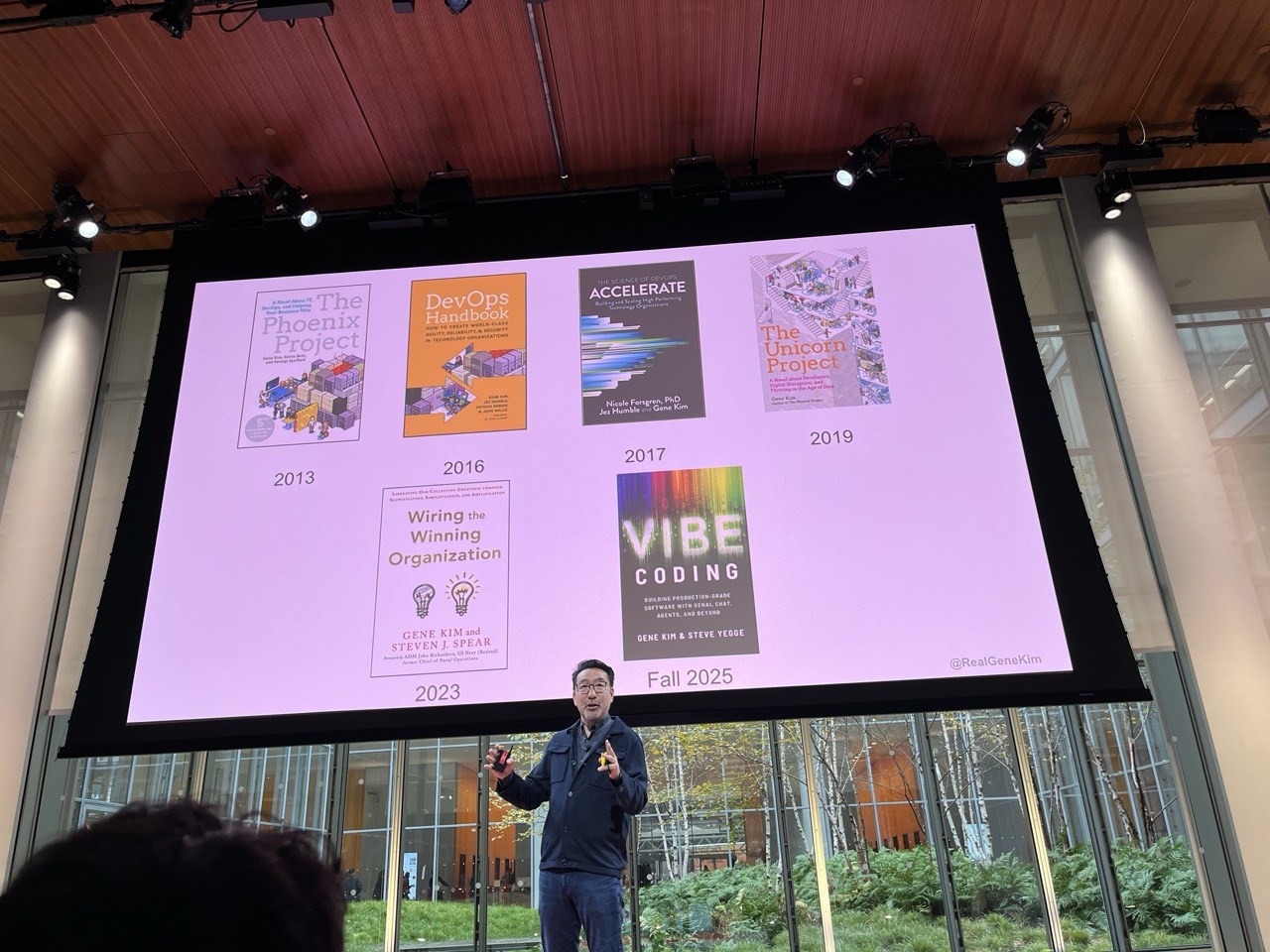

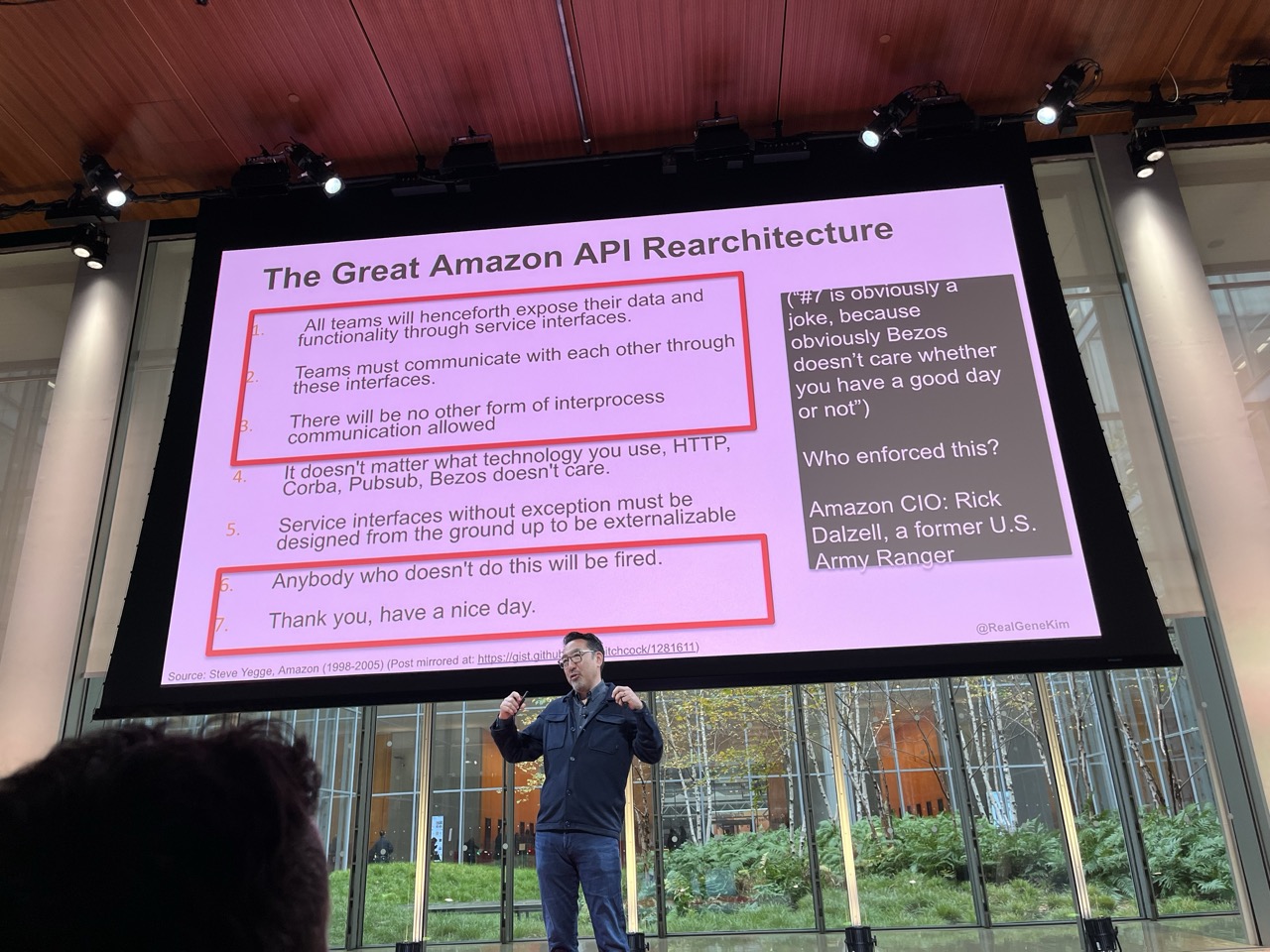

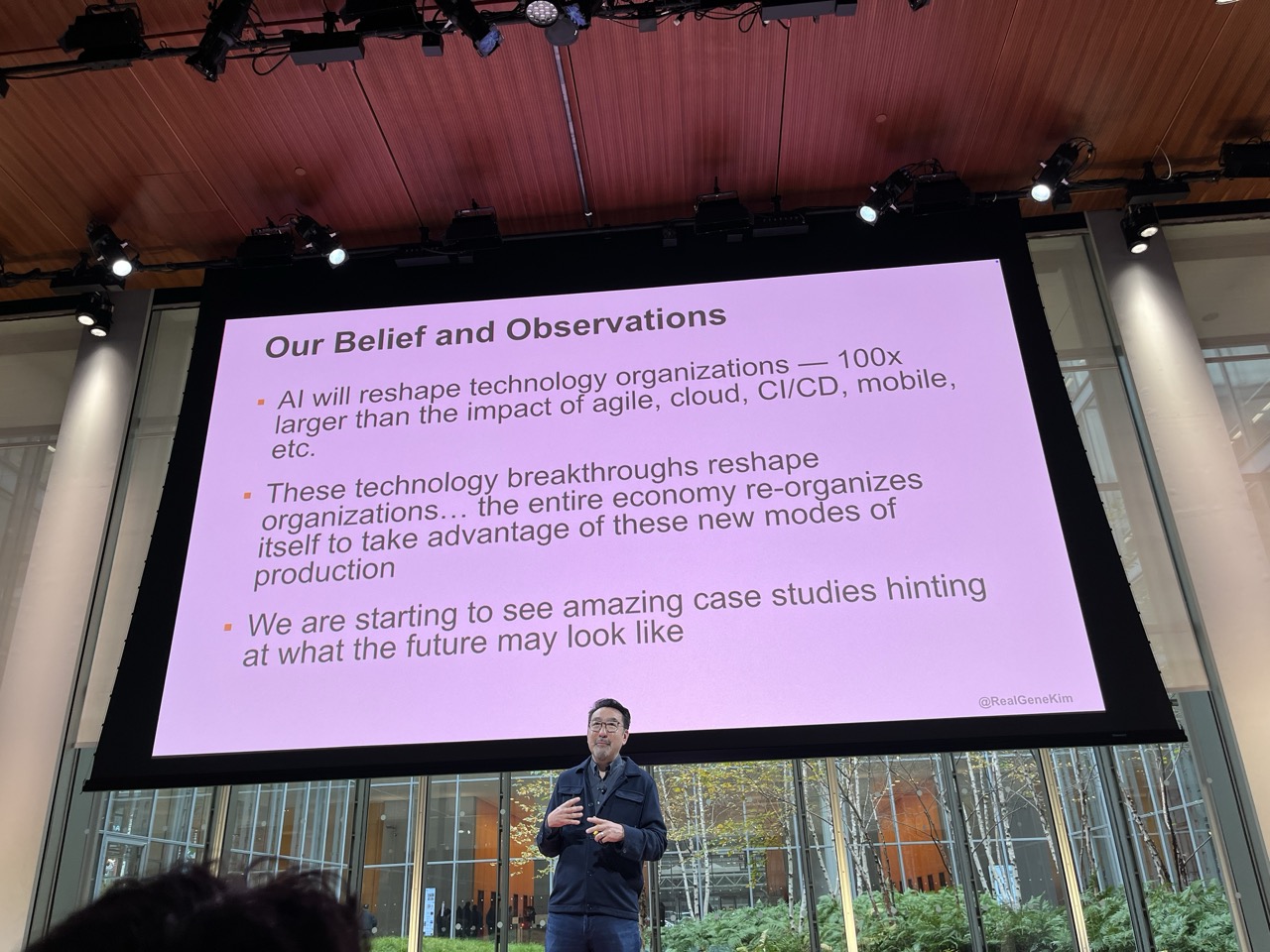

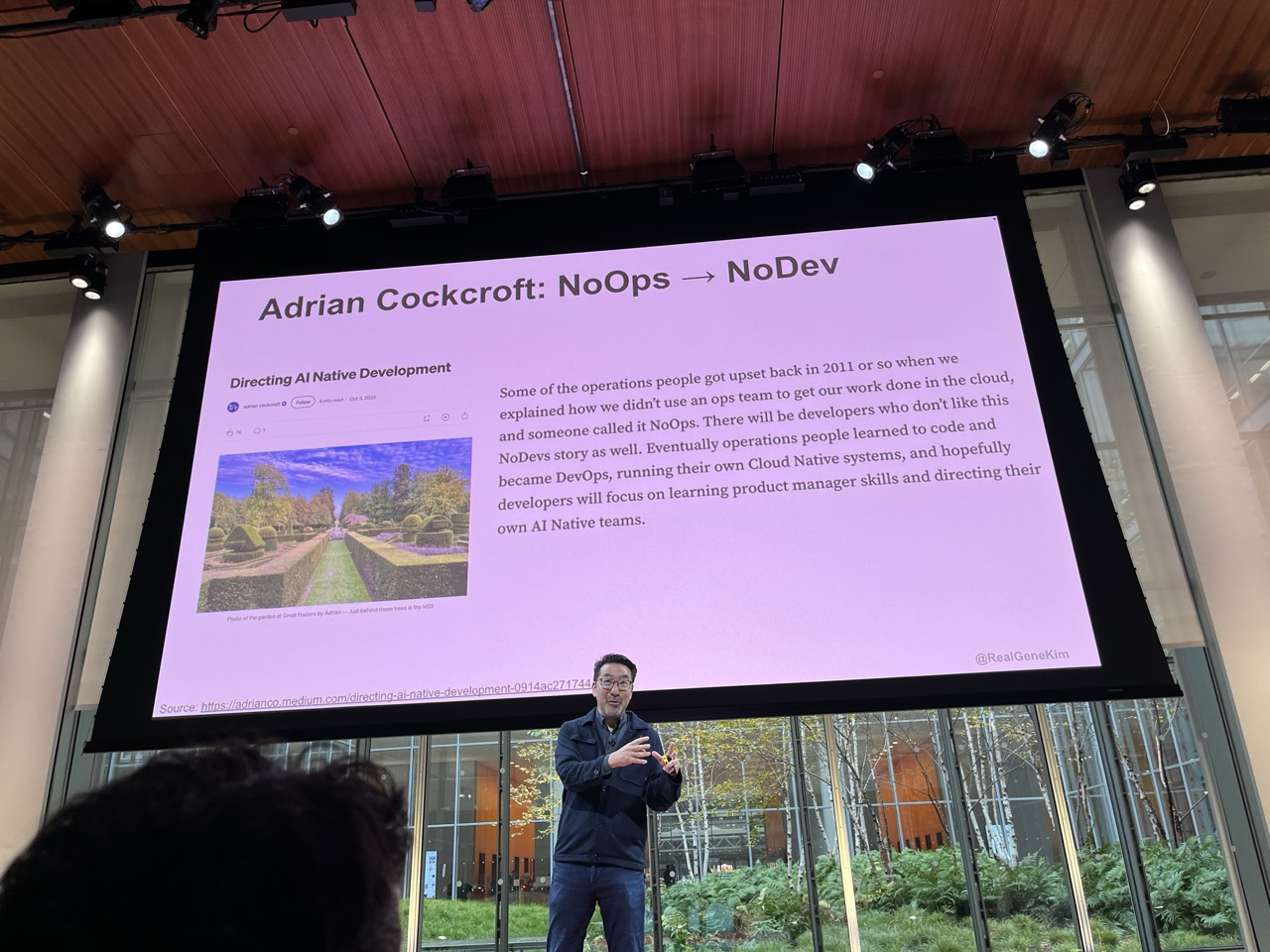

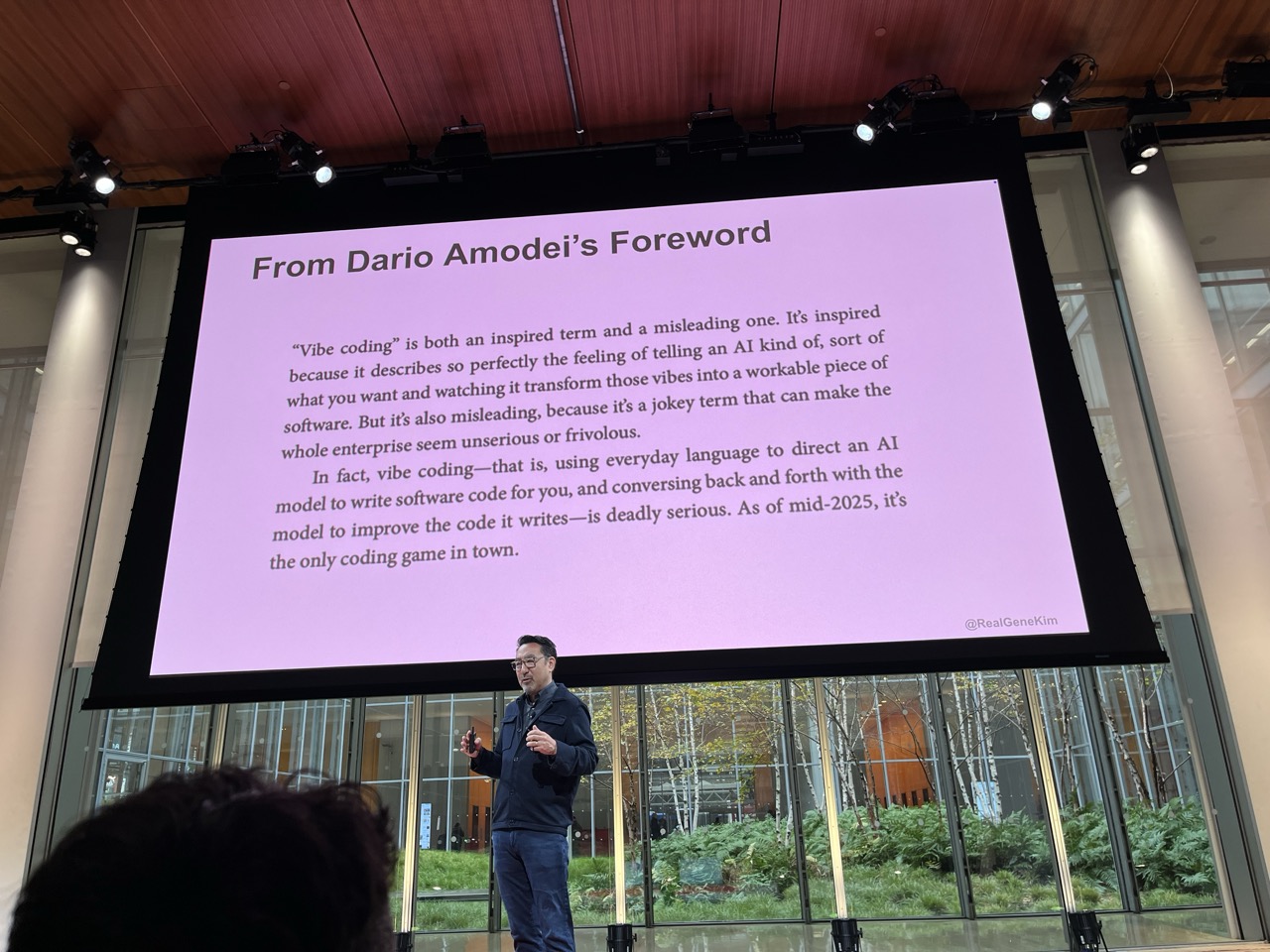

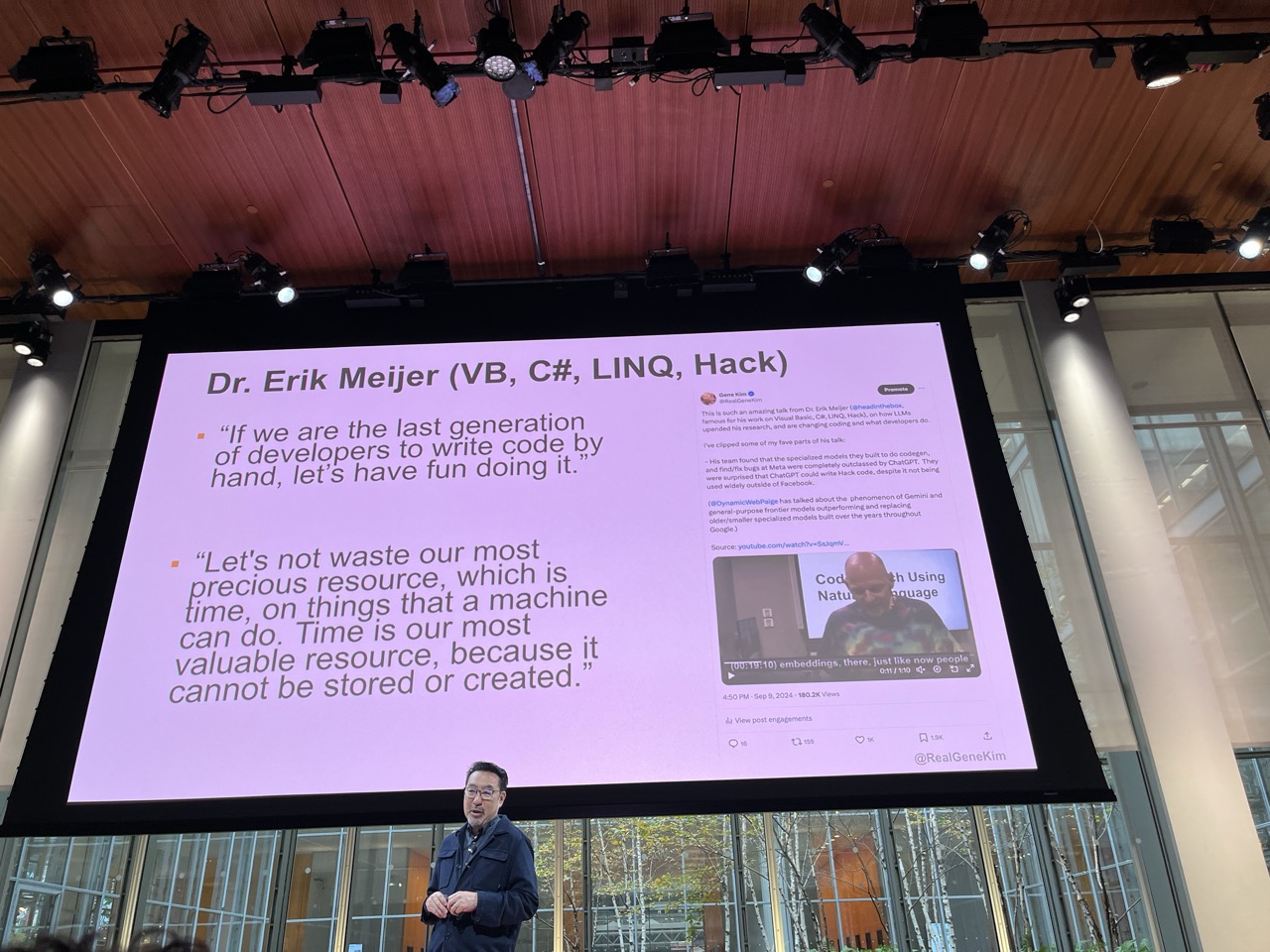

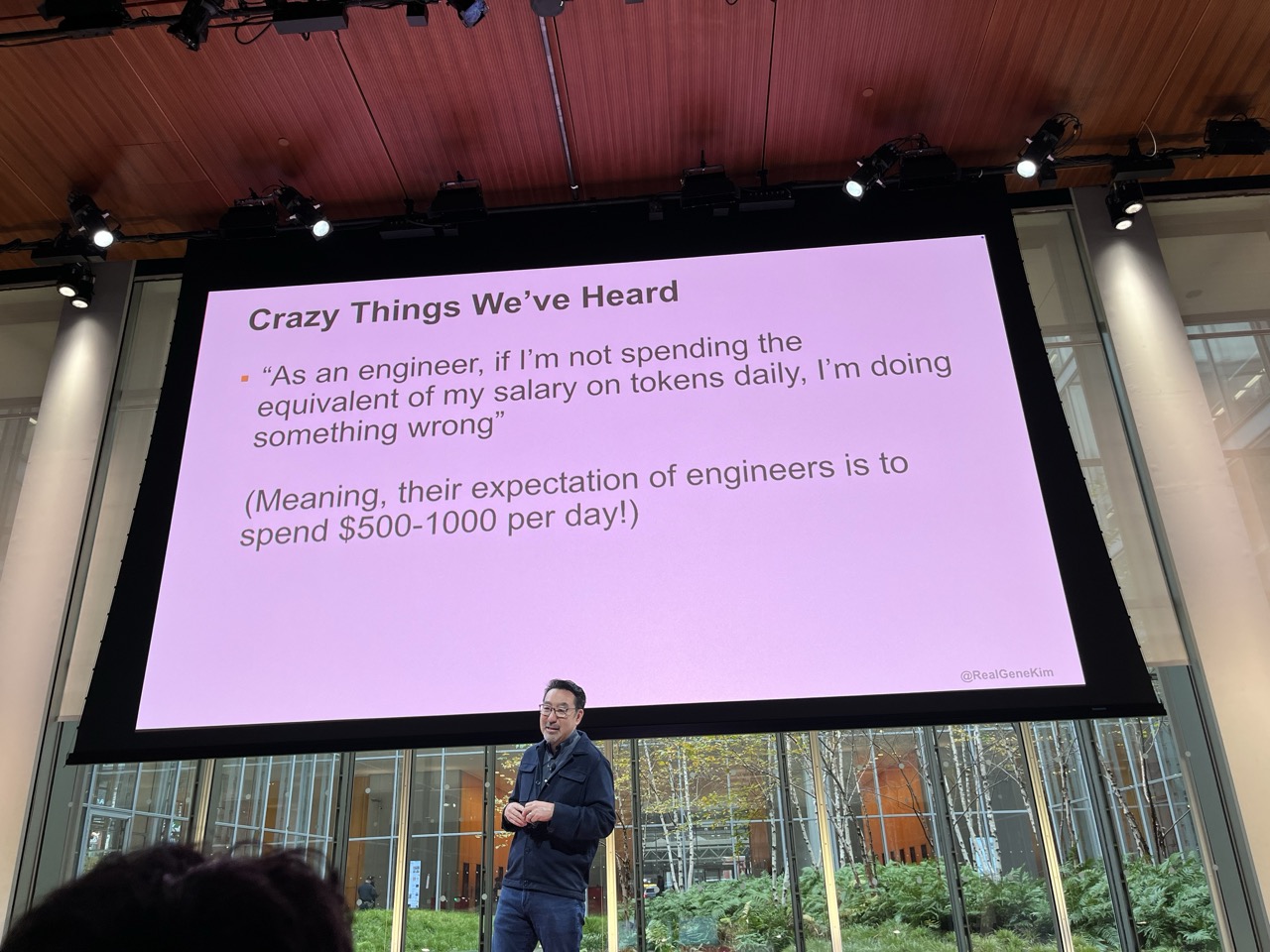

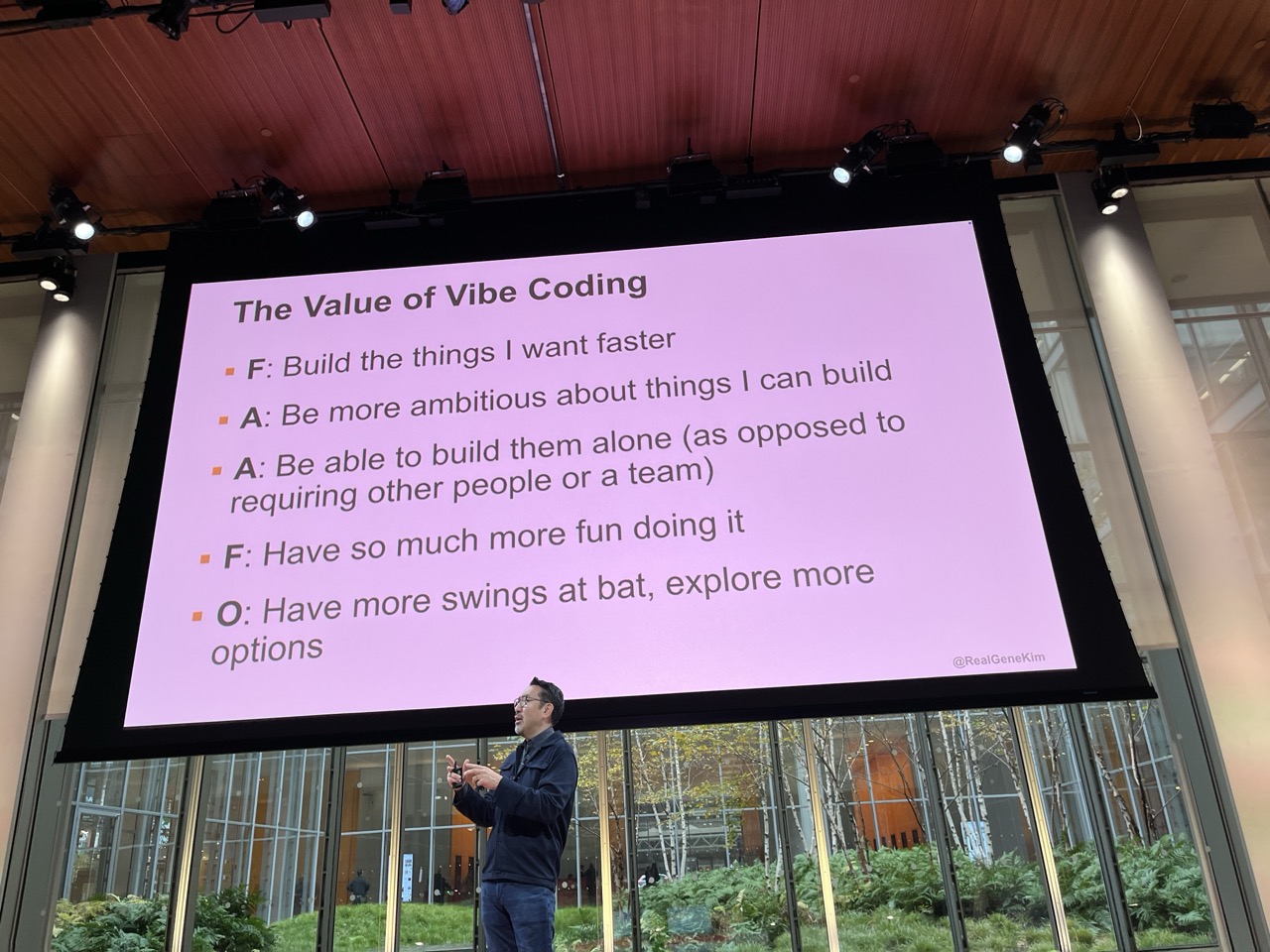

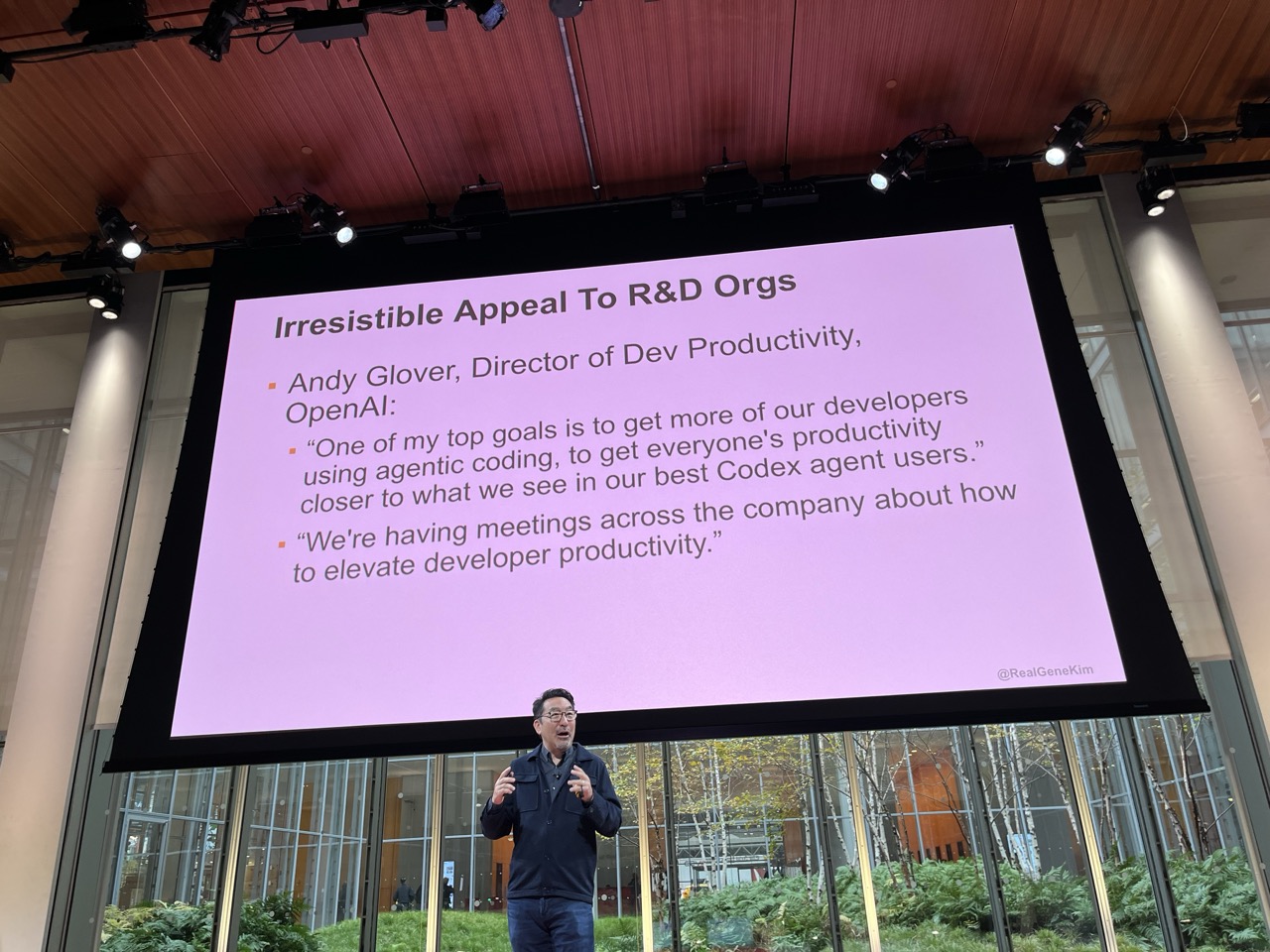

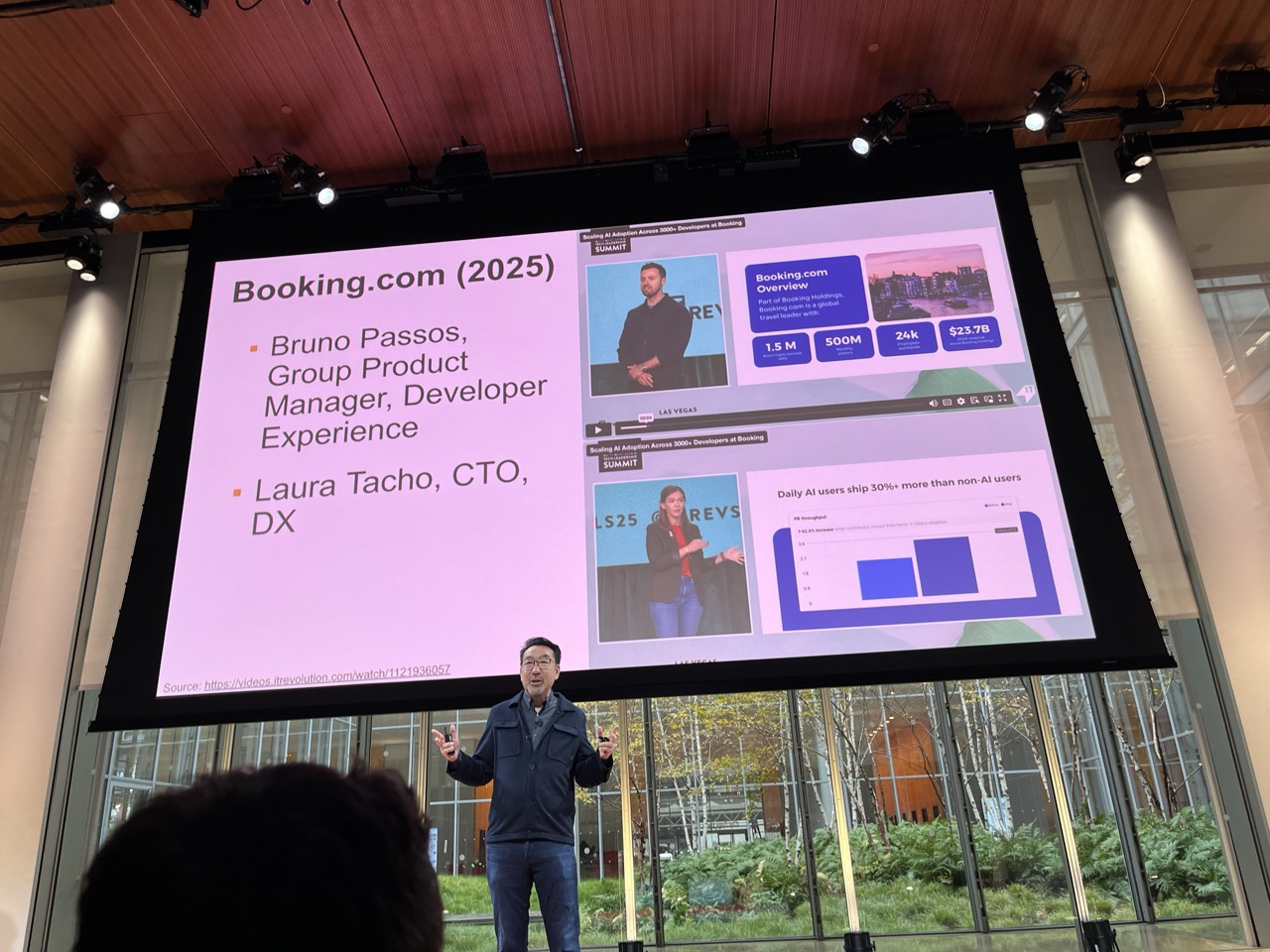

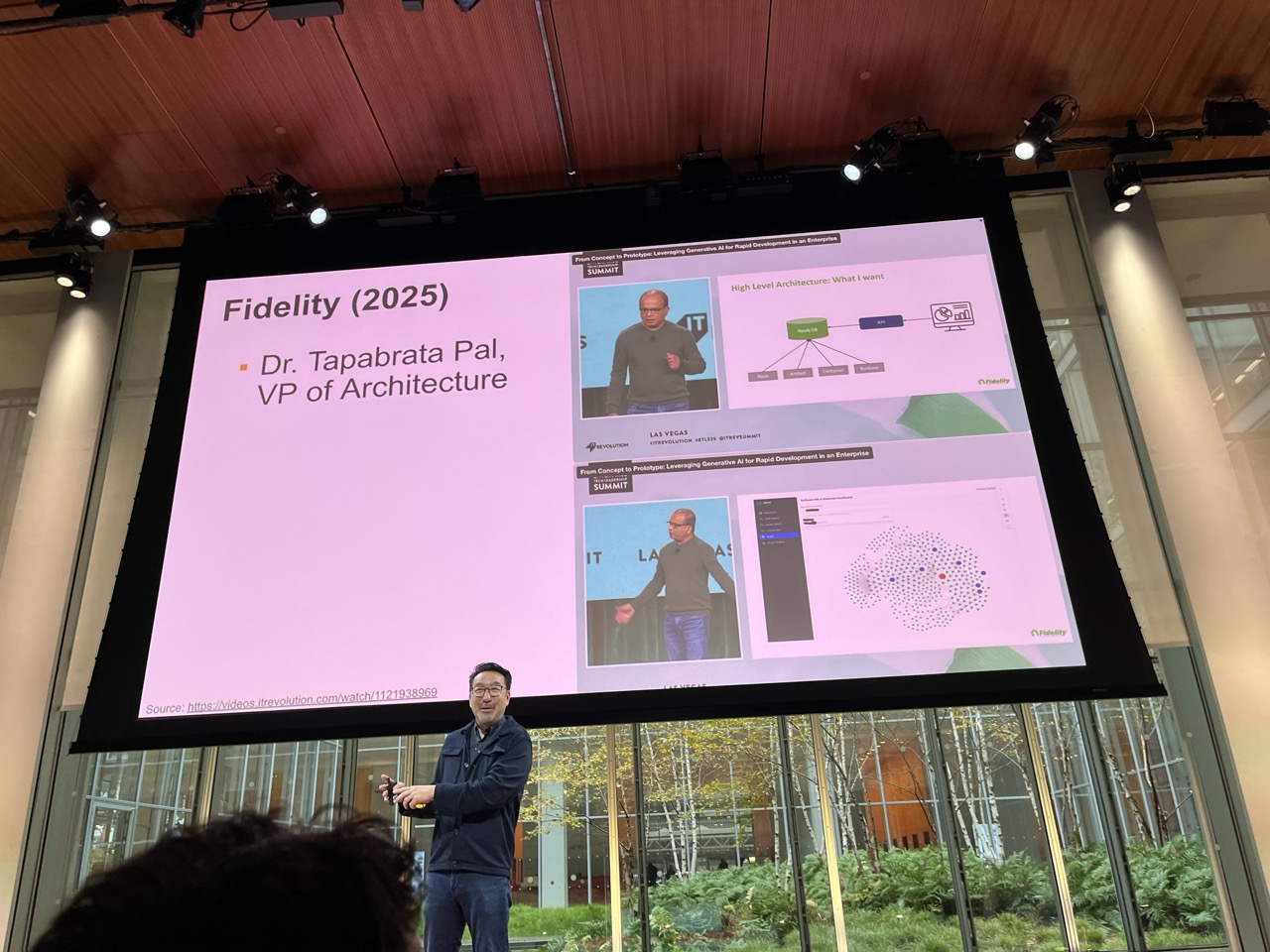

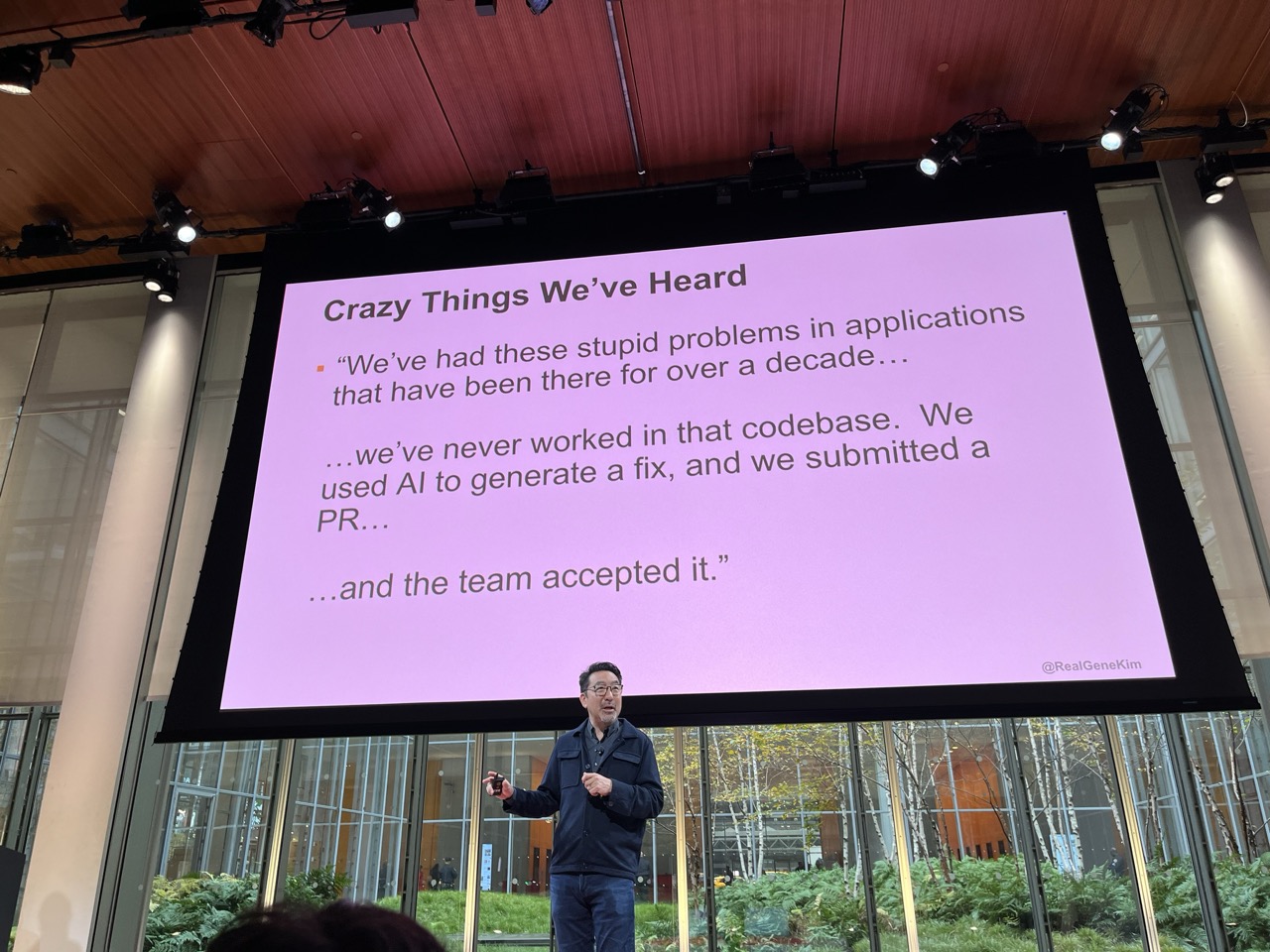

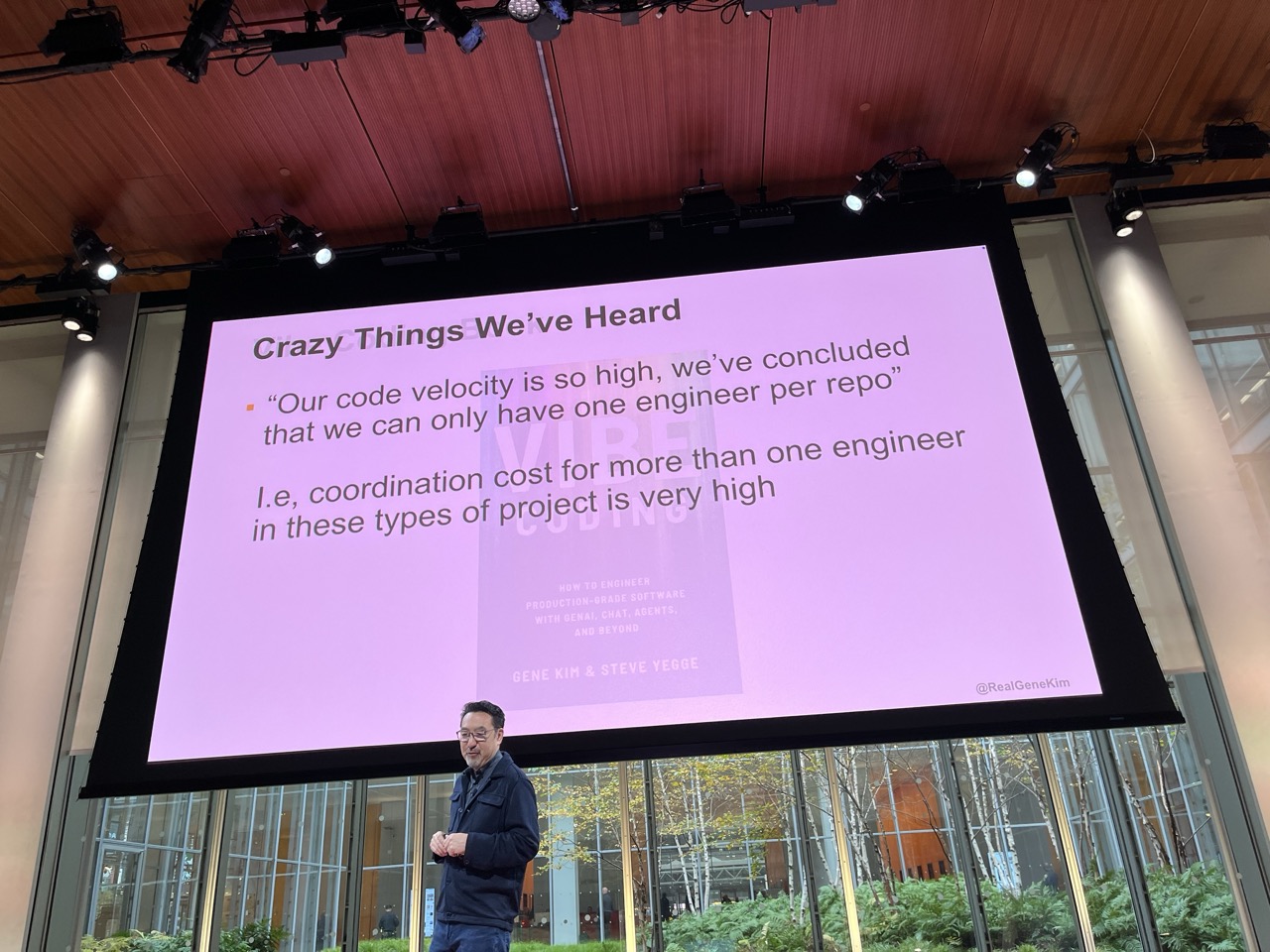

Vibe Coding

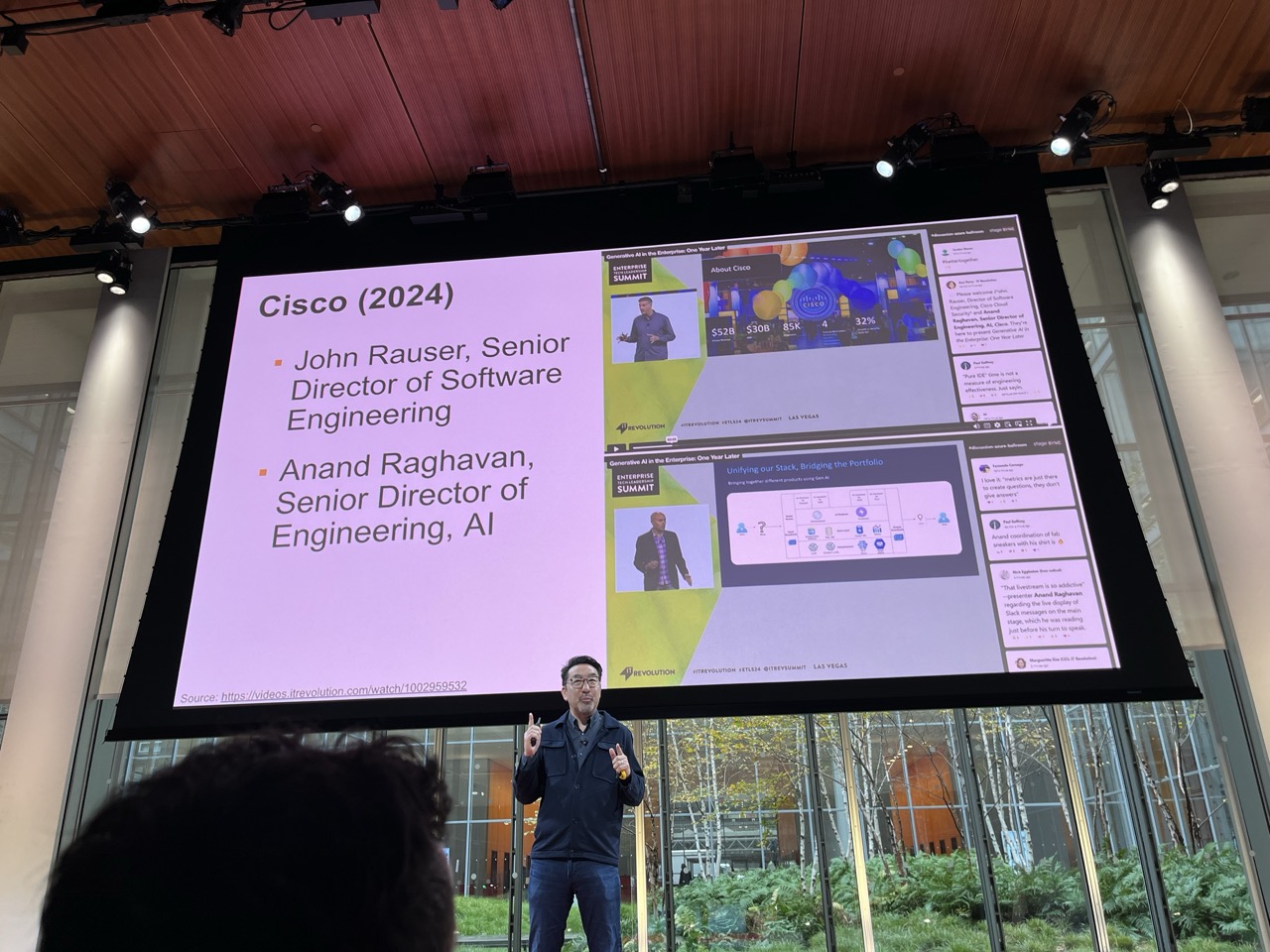

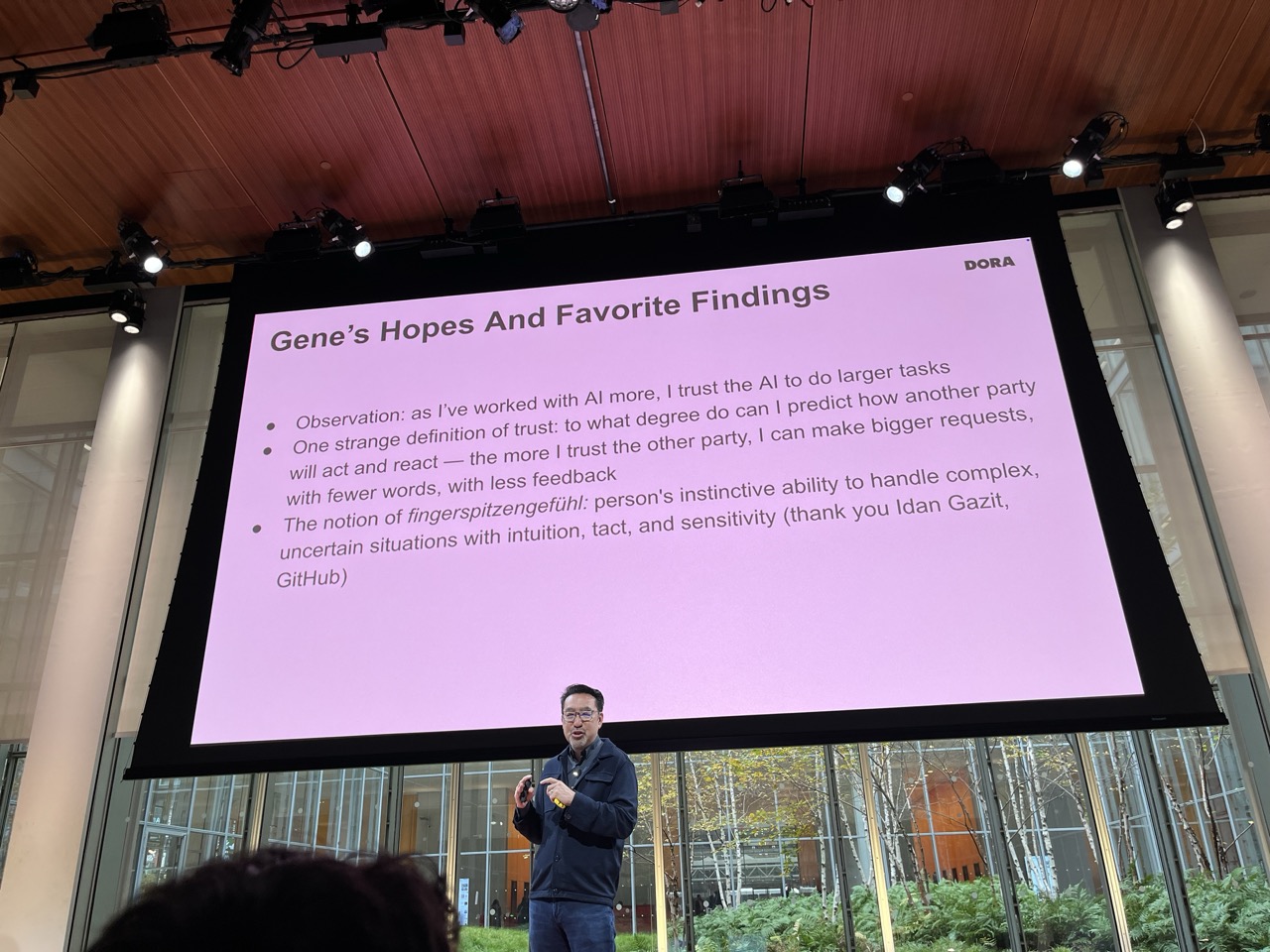

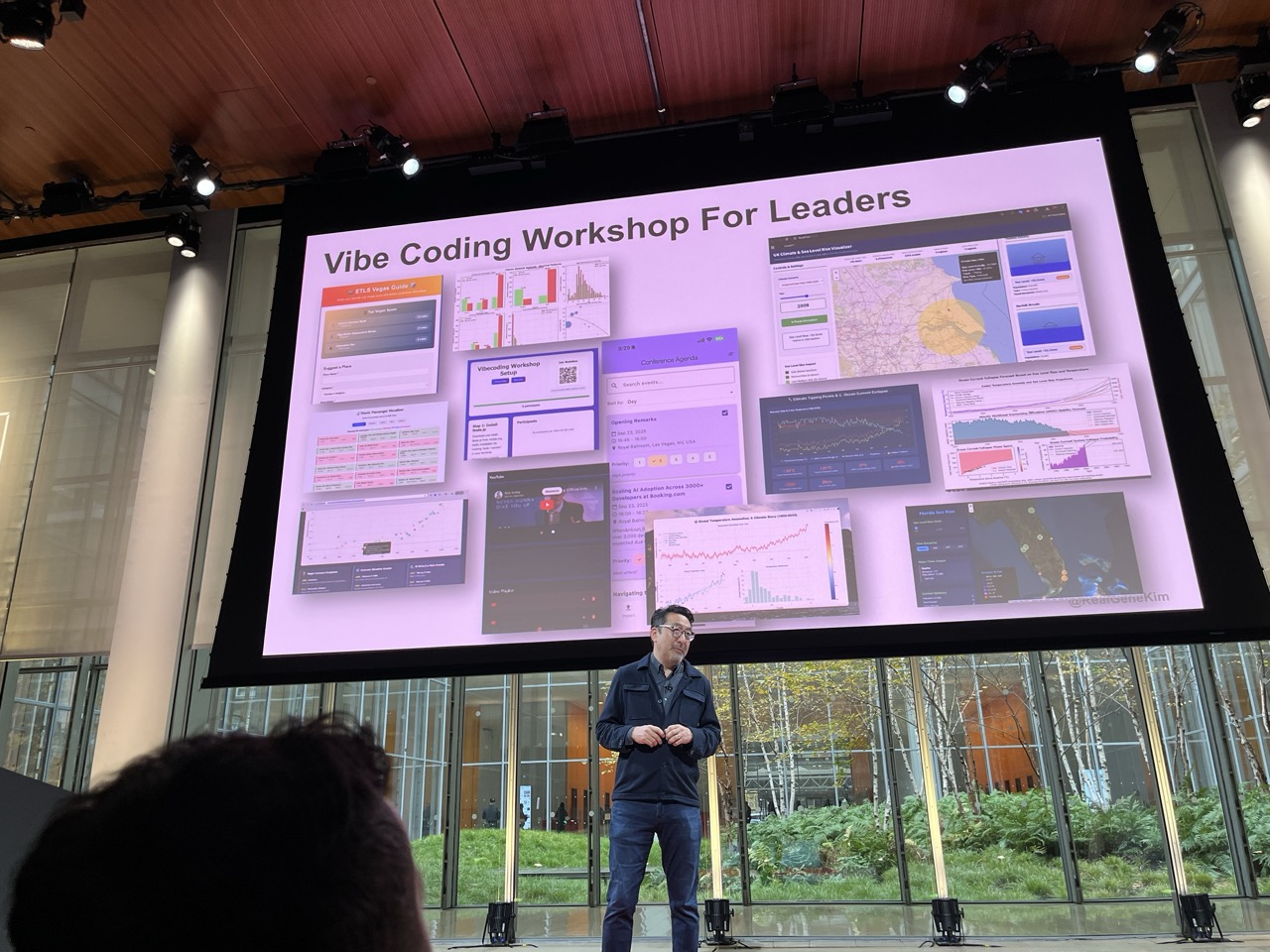

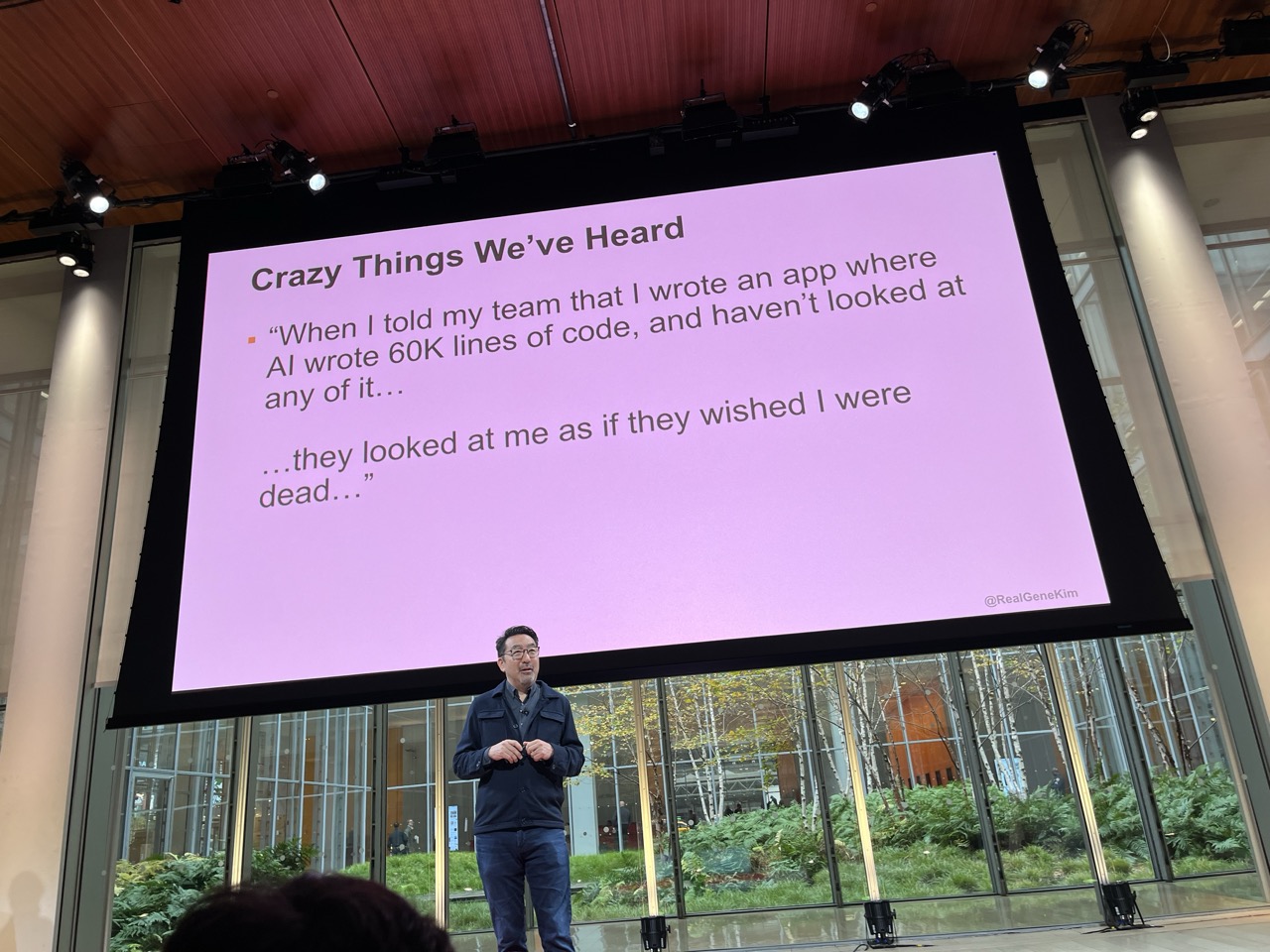

Steve Yegge / Sourcegraph/Amp, Gene Kim / IT Revolution

Great talk. Steve is a forceful advocate of adapting to change and letting our tools accelerate us: more agents, more speed. He is working to shape the Amp experience and is also the creator of beads, which we should check out and use.

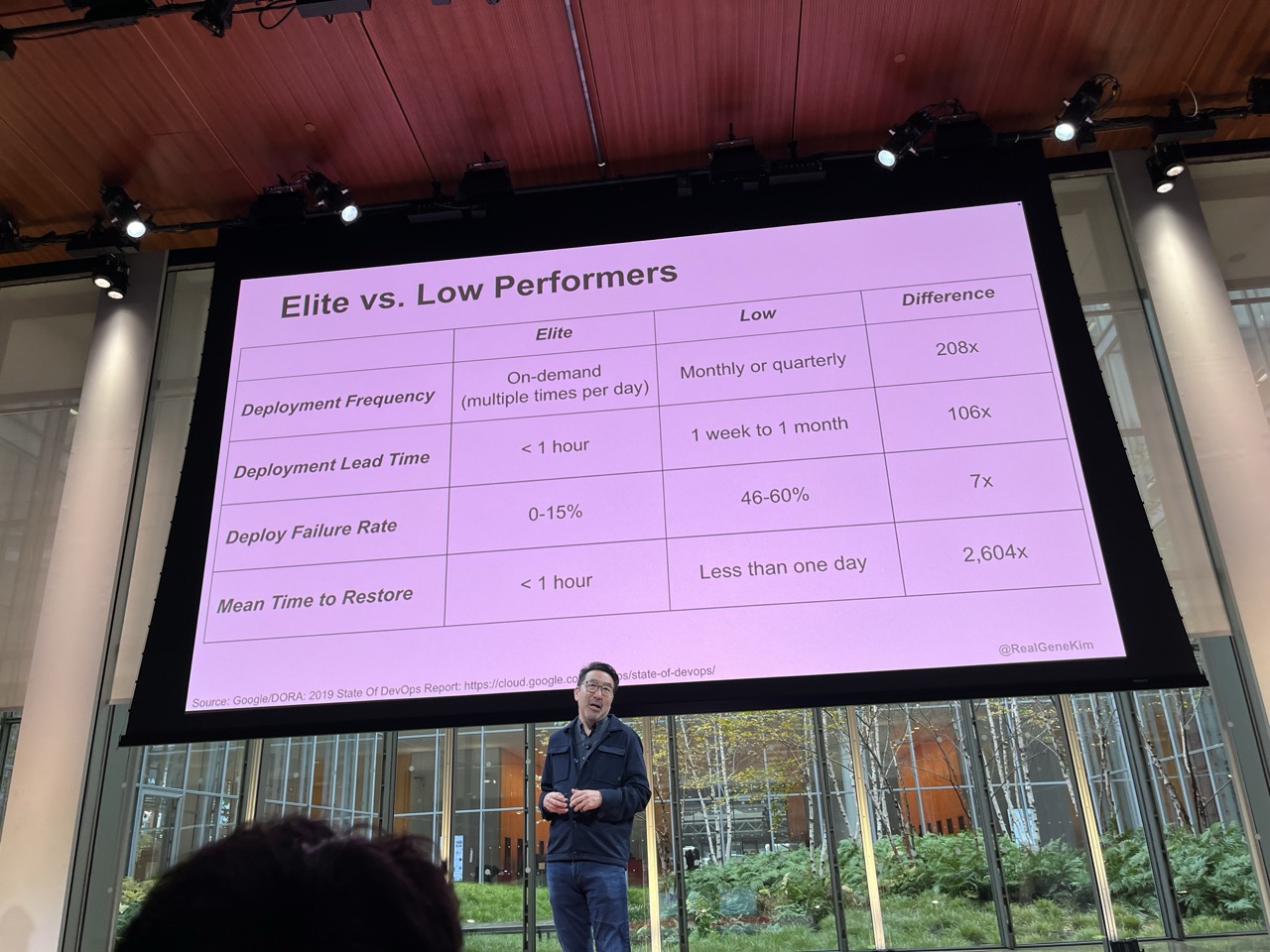

Gene talked about the organizational and industry-wide changes we are in the midst of. He has lived through DevOps and Agile, and said this is just the tip of the iceberg. Lots of vignettes from individuals and teams who are using Vibe Coding/Vibe Engineering.

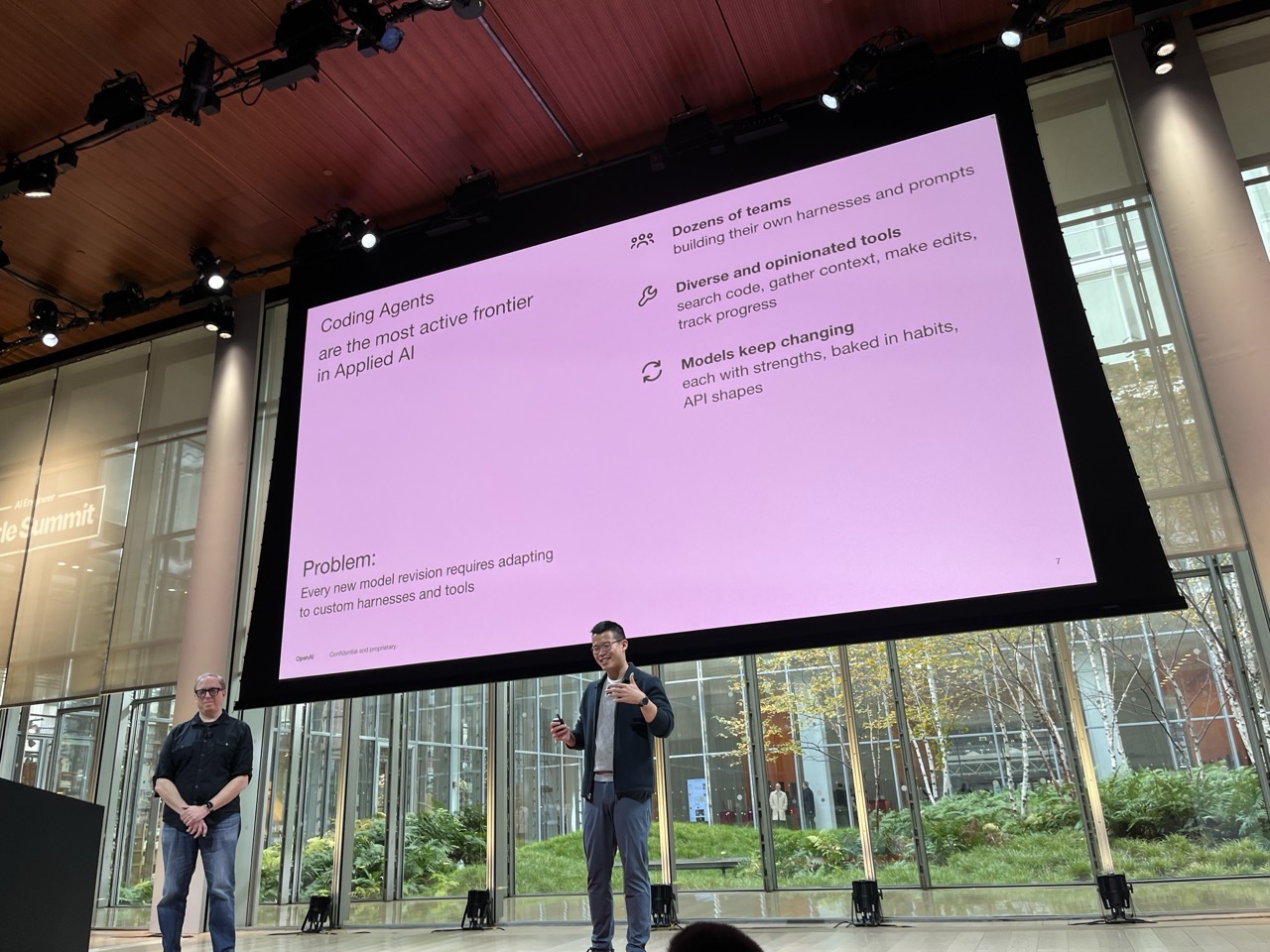

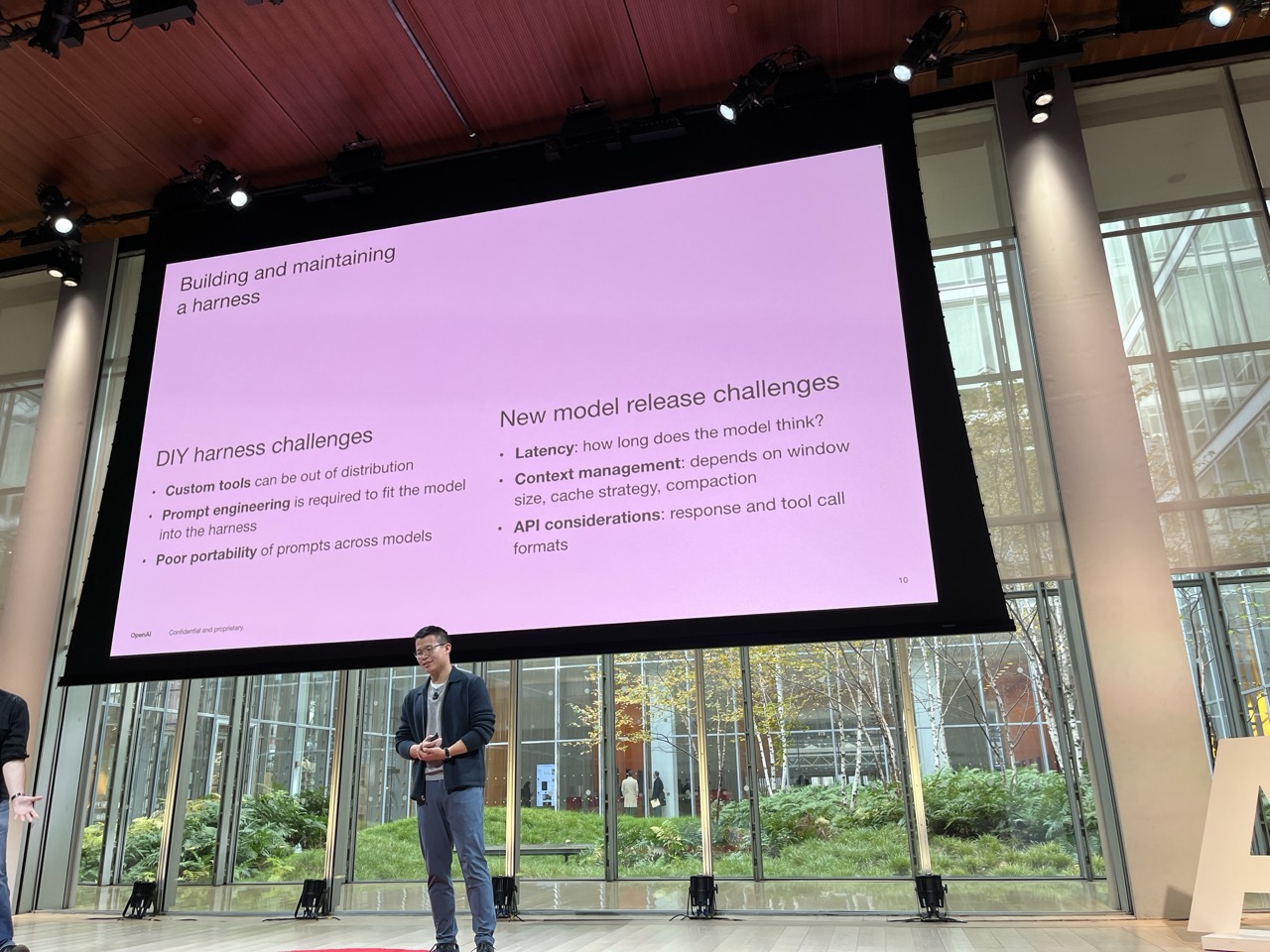

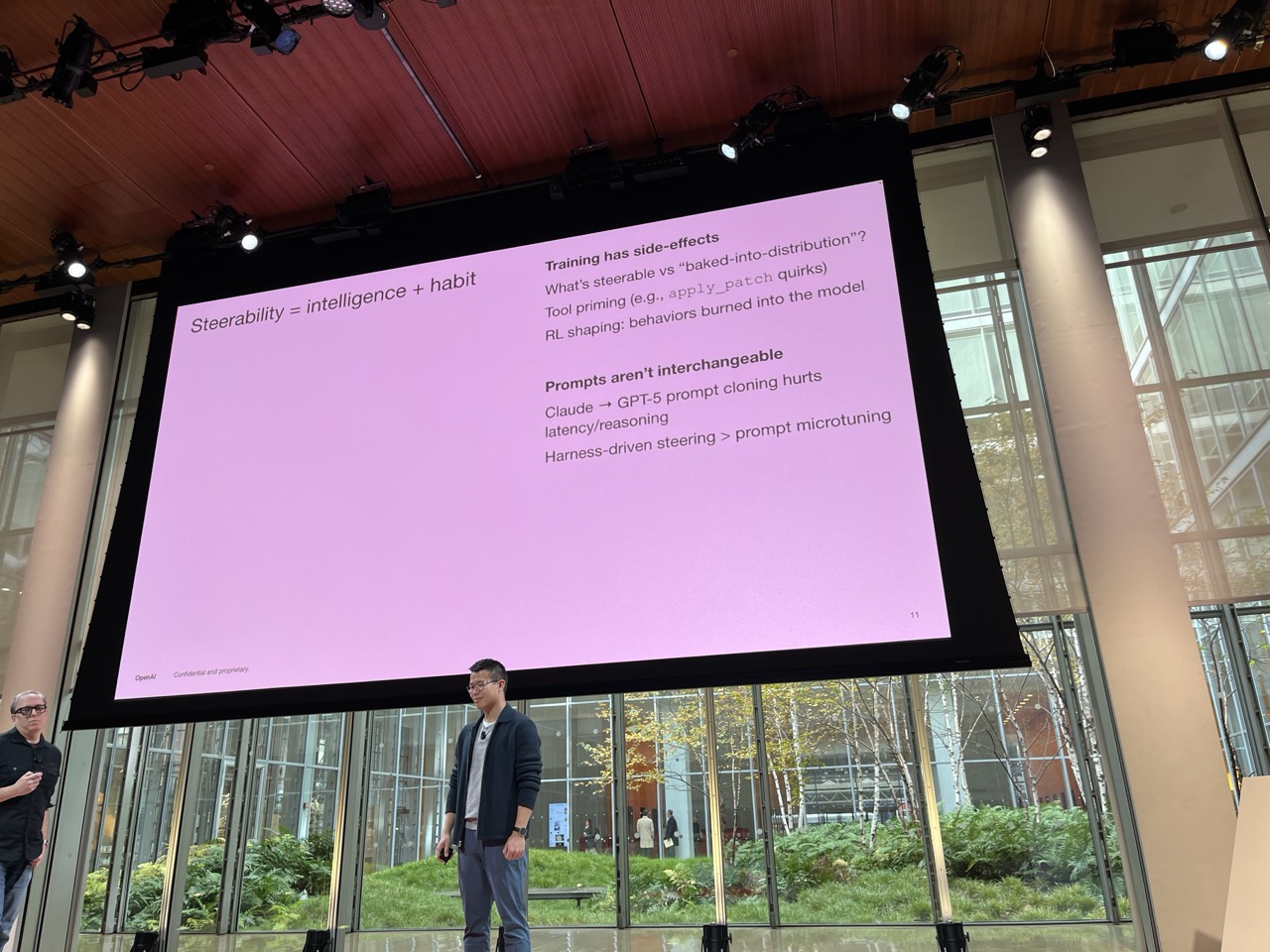

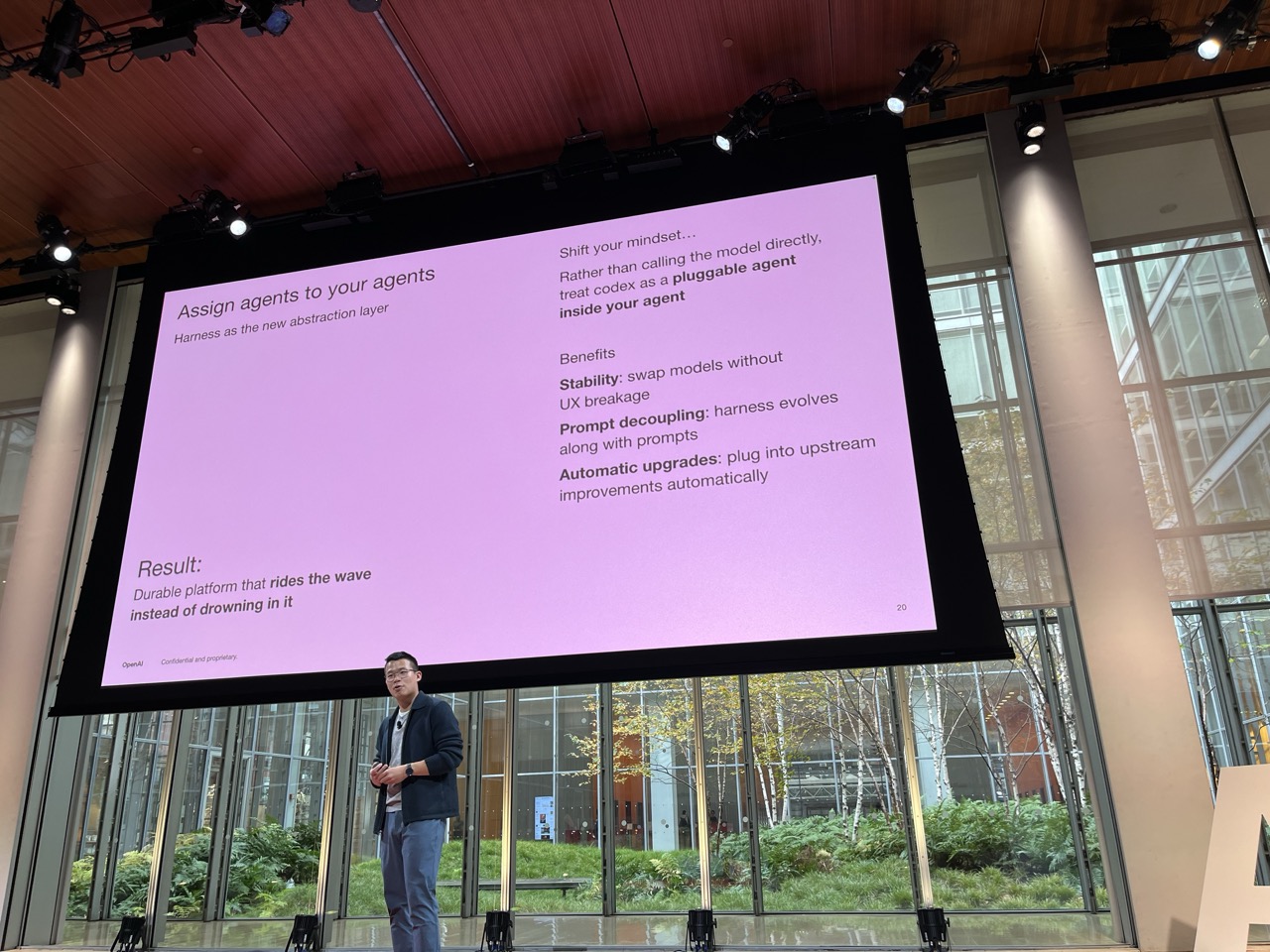

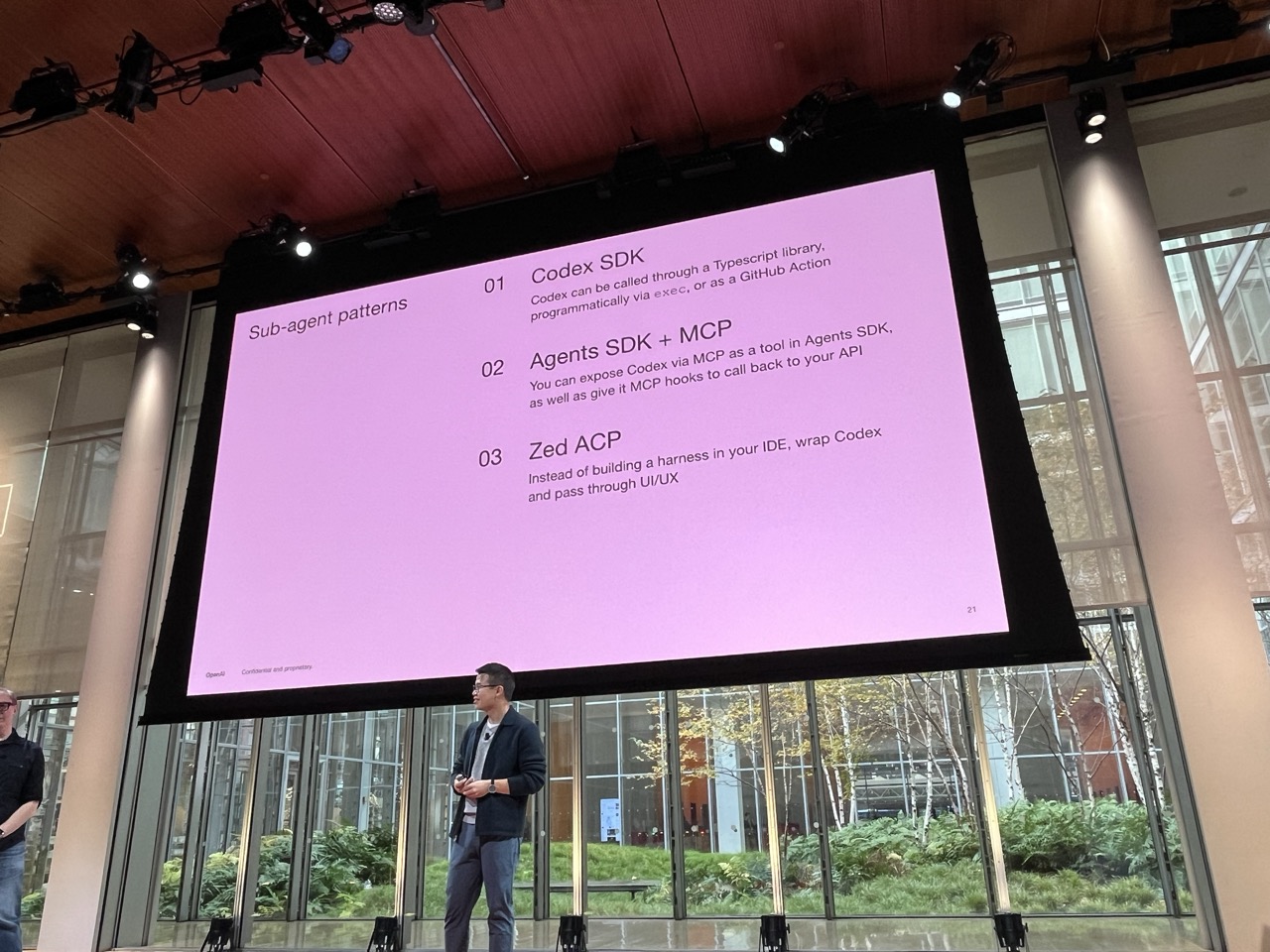

Future-Proof Coding Agents: Building Reliable Systems That Outlast Model

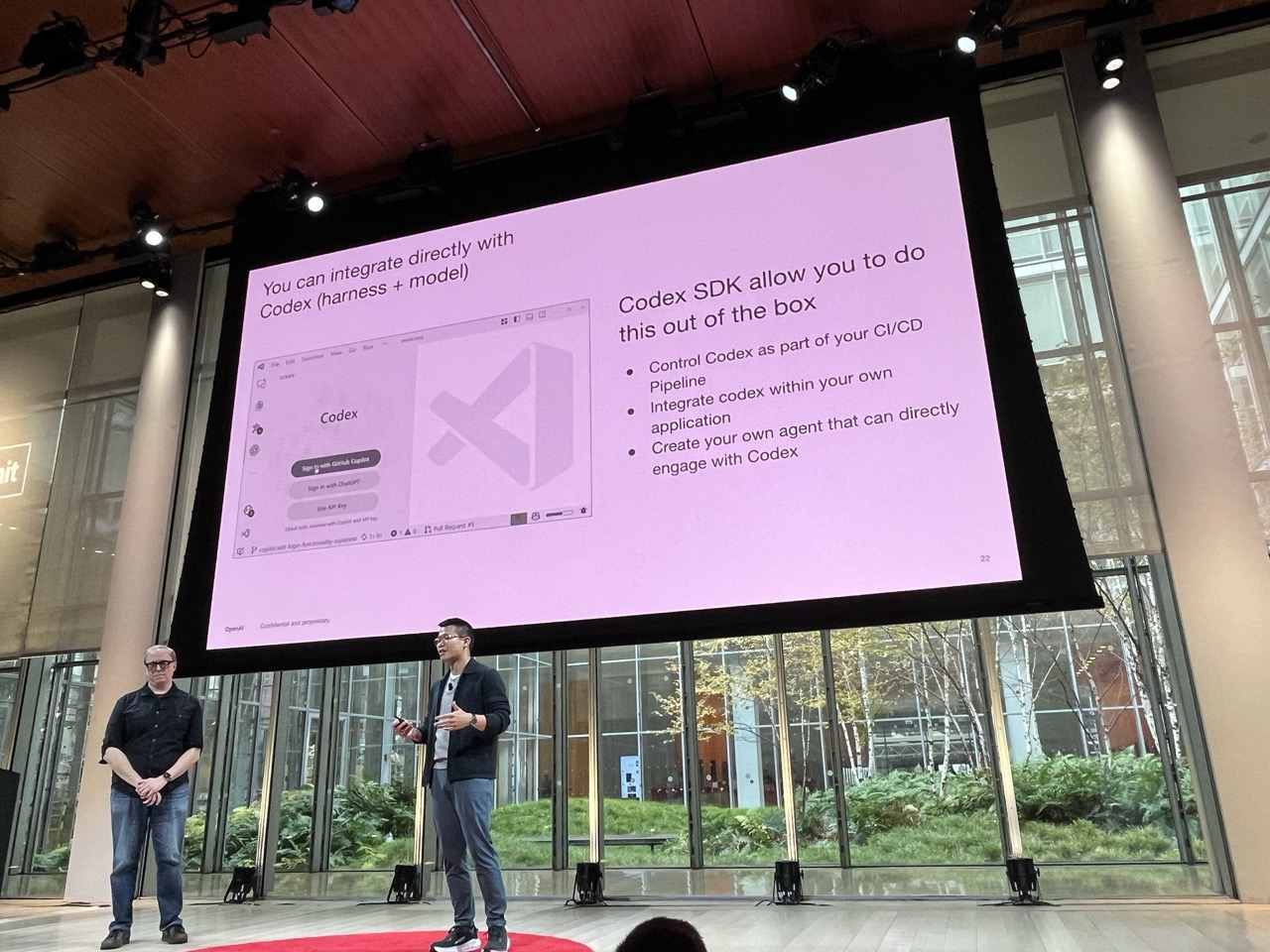

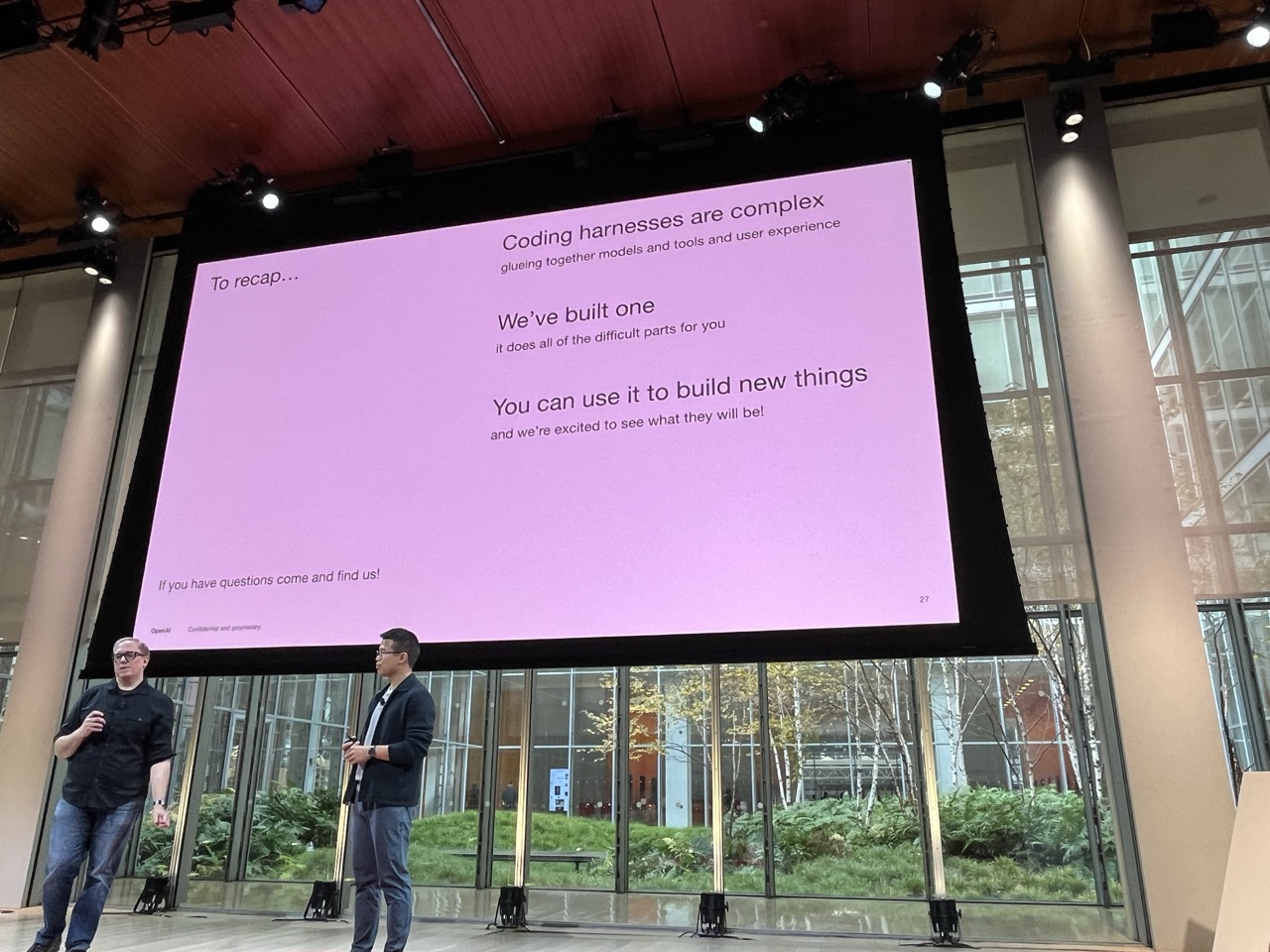

Bill Chen / OpenAI, Brian Fioca / OpenAI

Talked about the combination of utilities you give a model so it can take action, which they call the “harness”.

Talked about how important it is to match a model with the harness it was trained on.

Codex as an agent to delegate to other agents.

Subagent patterns:

- Codex SDK

- SDK + MCP

- ACP
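To make the “harness” term concrete, here is a sketch of the minimal loop around a model: the harness owns the tool set, executes the calls the model requests, and feeds results back until the model returns a final answer. The message and action formats are hypothetical, not the Codex SDK's API; the talk's point is that a model performs best inside the harness it was trained with.

```python
from typing import Any, Callable

Tool = Callable[..., str]
Model = Callable[[list[dict[str, Any]]], dict[str, Any]]


def run_harness(model: Model, tools: dict[str, Tool], task: str, max_steps: int = 10) -> str:
    """Minimal agent loop: the model returns a tool call or a final answer; the harness executes tools."""
    history: list[dict[str, Any]] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        tool = tools.get(action["tool"])
        result = tool(**action["args"]) if tool else f"error: unknown tool {action['tool']!r}"
        history.append({"role": "tool", "name": action["tool"], "content": result})
    return "error: step limit reached"
```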

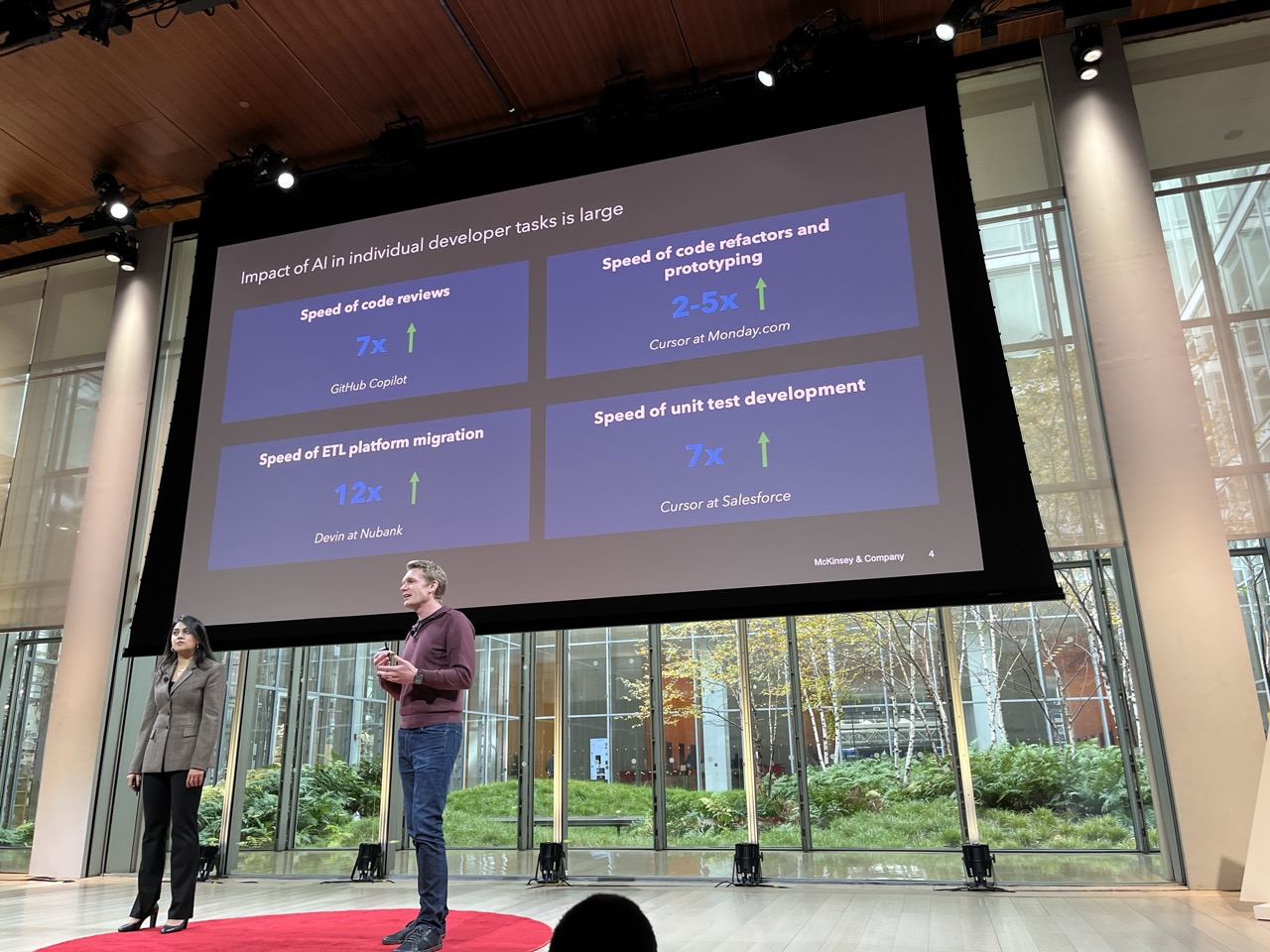

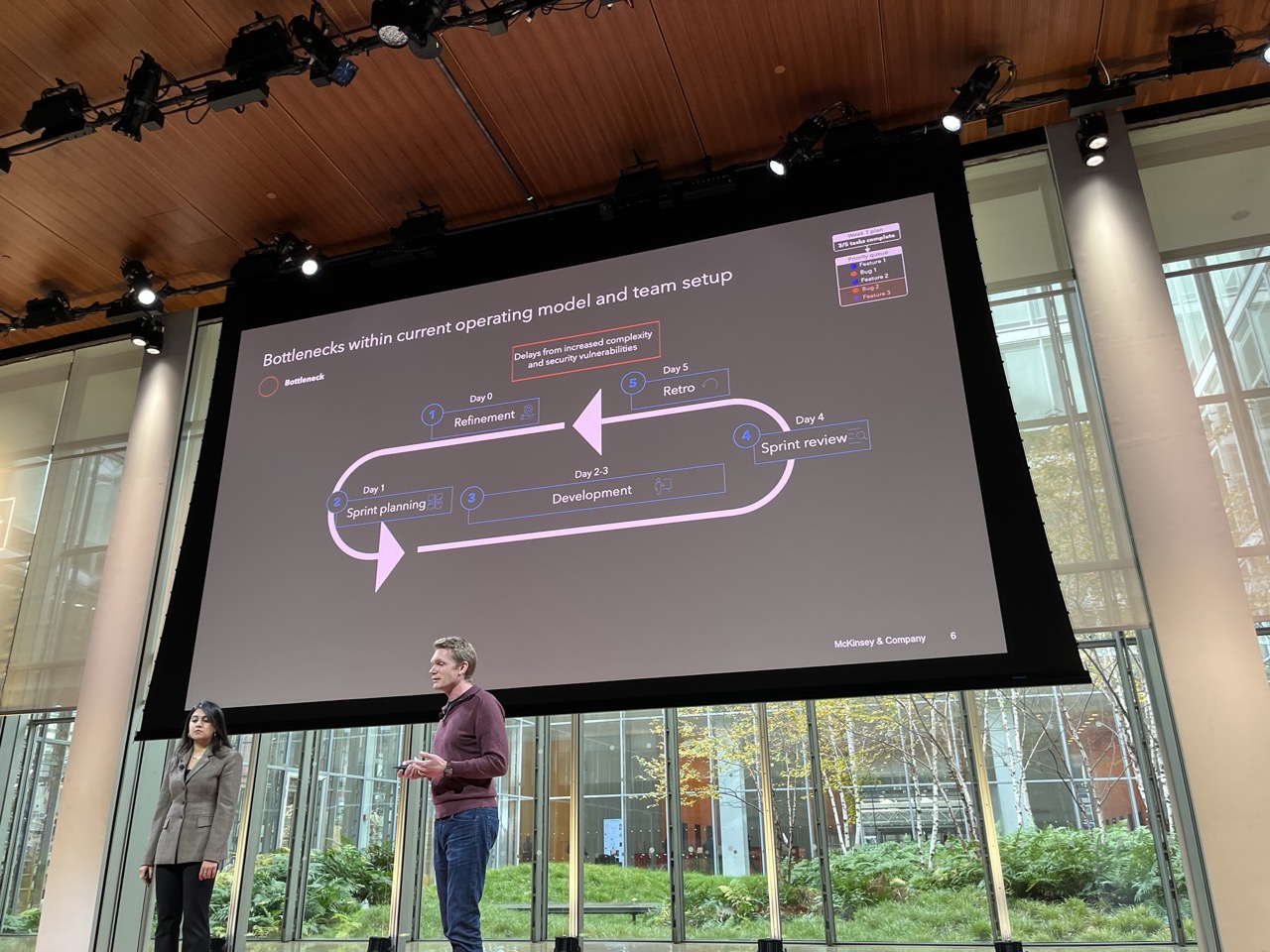

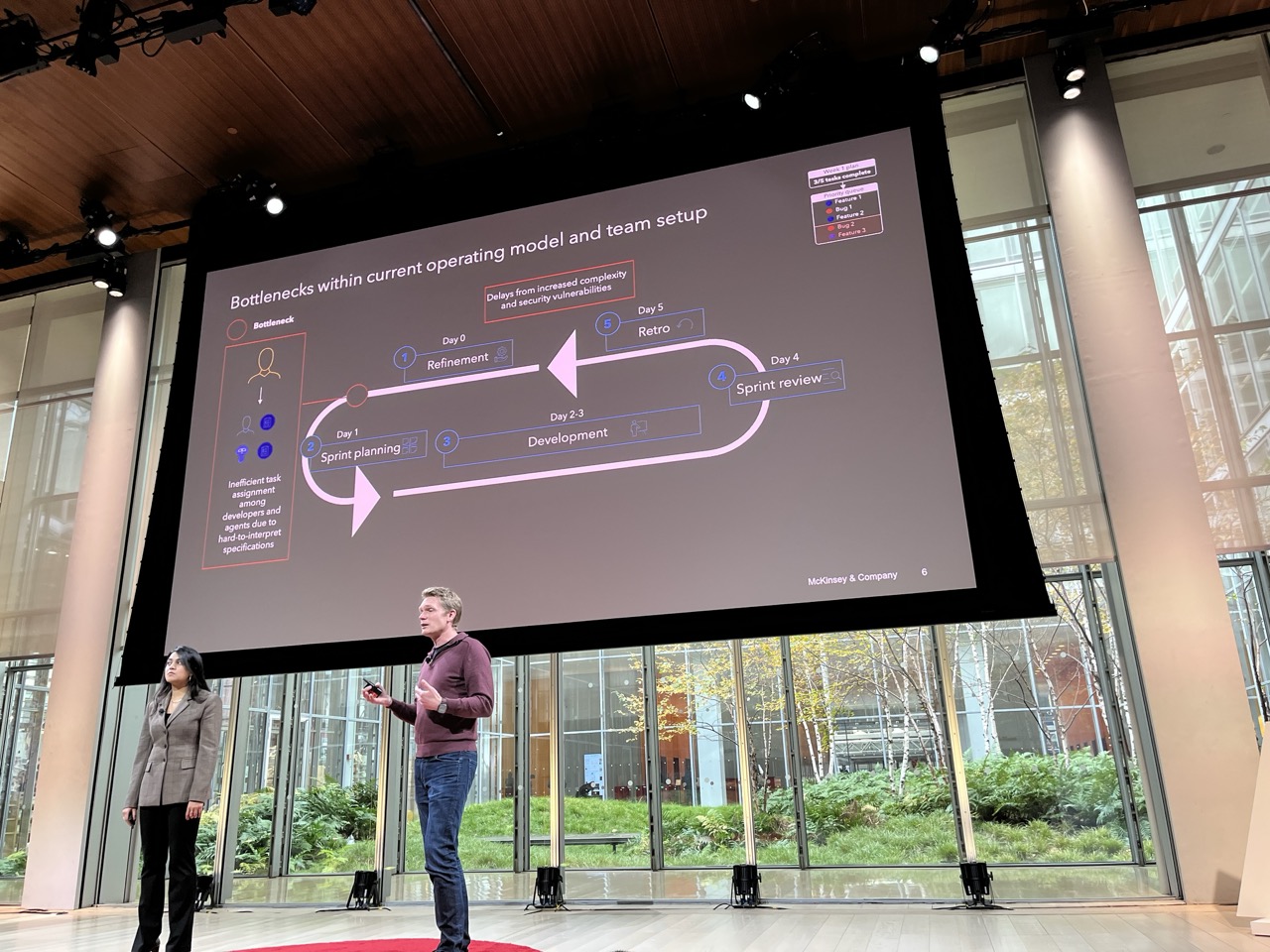

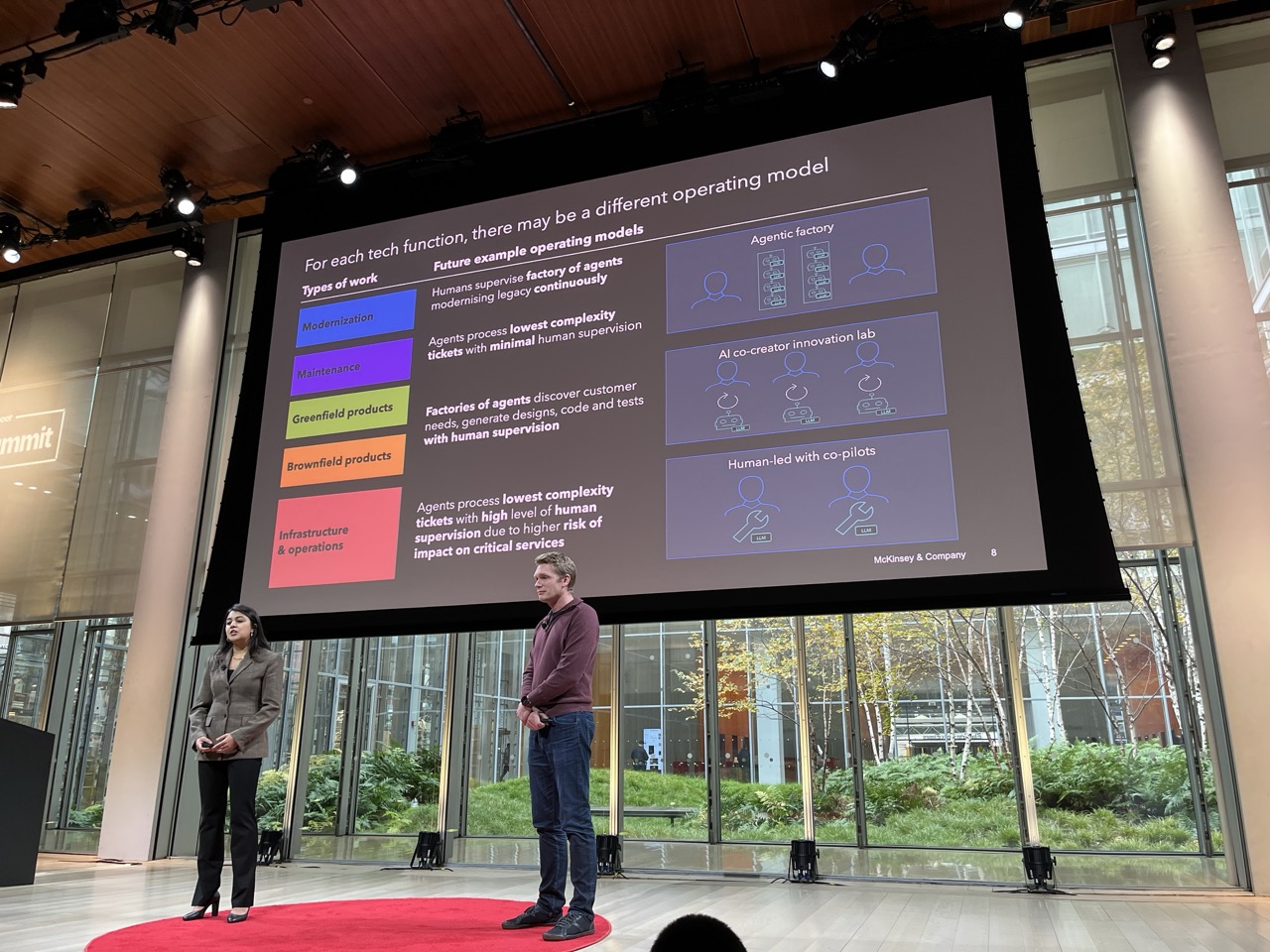

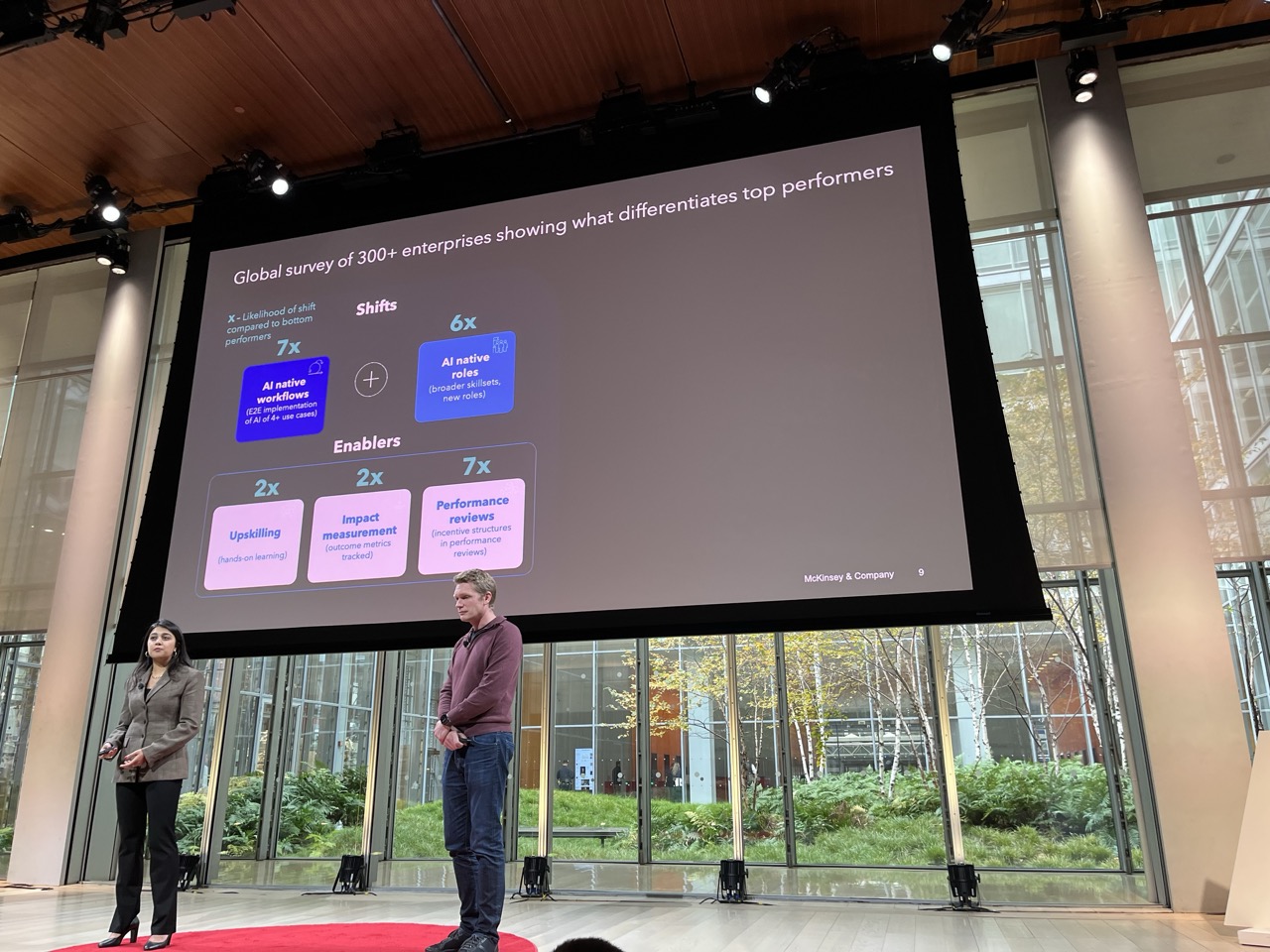

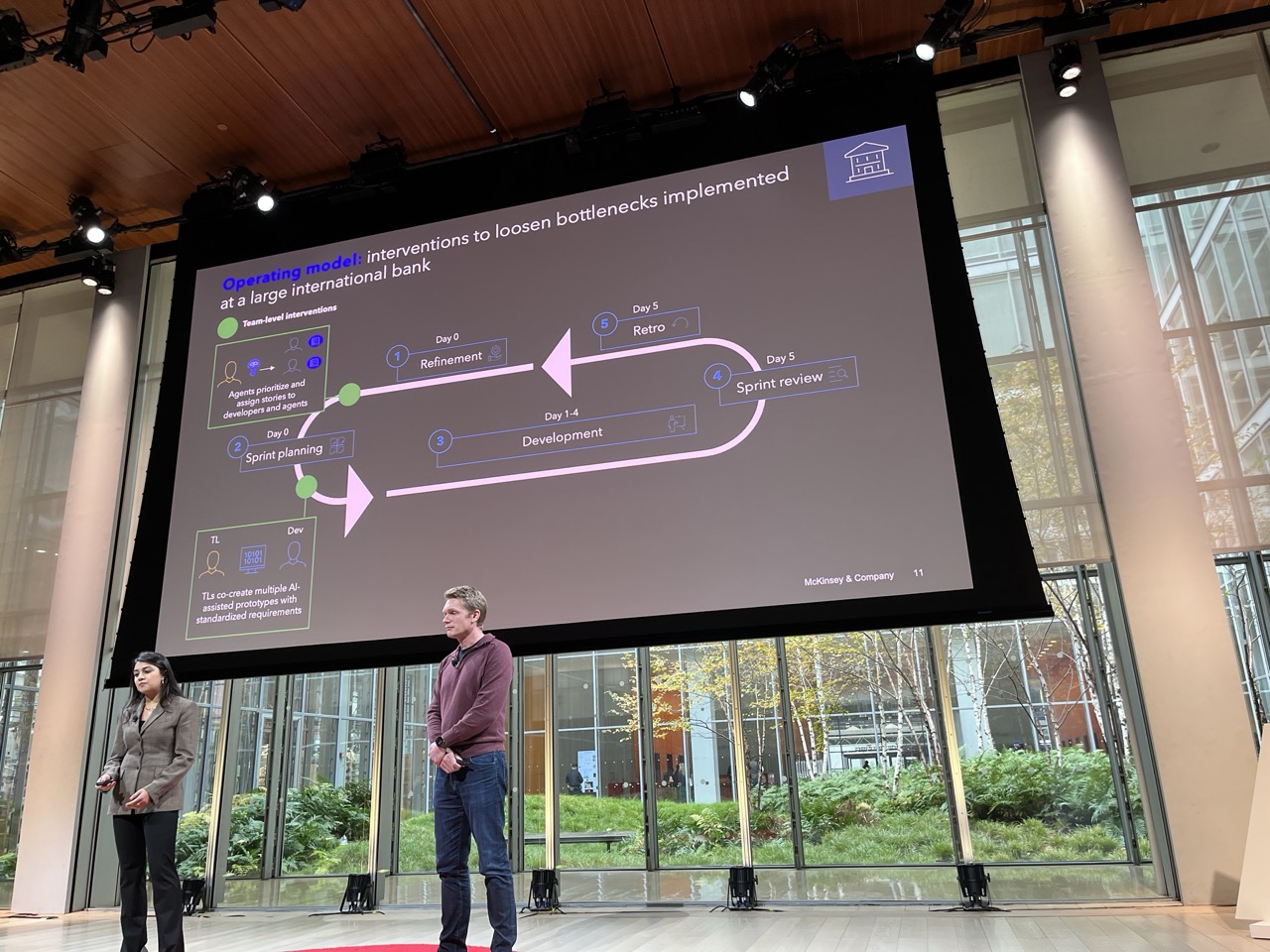

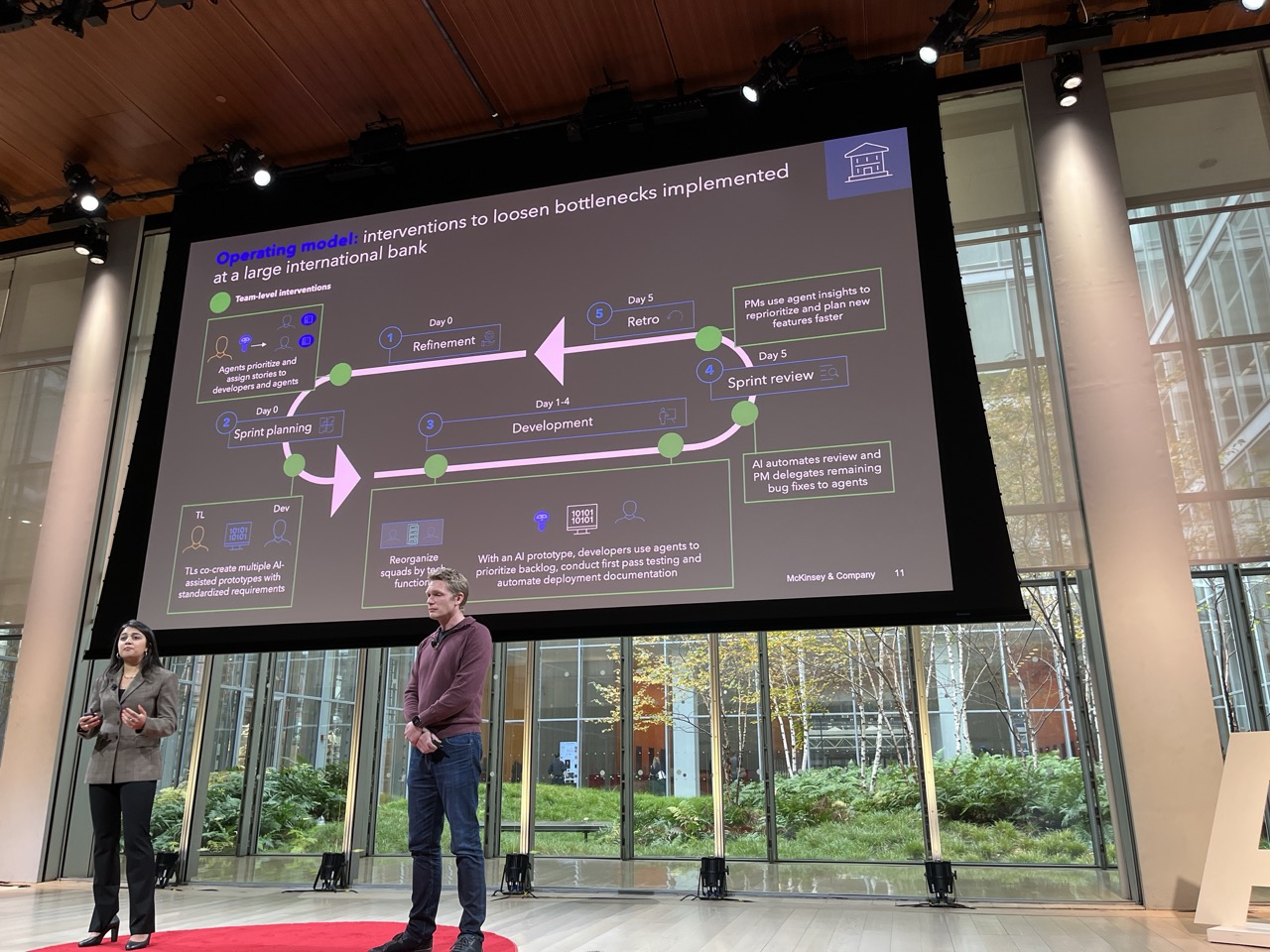

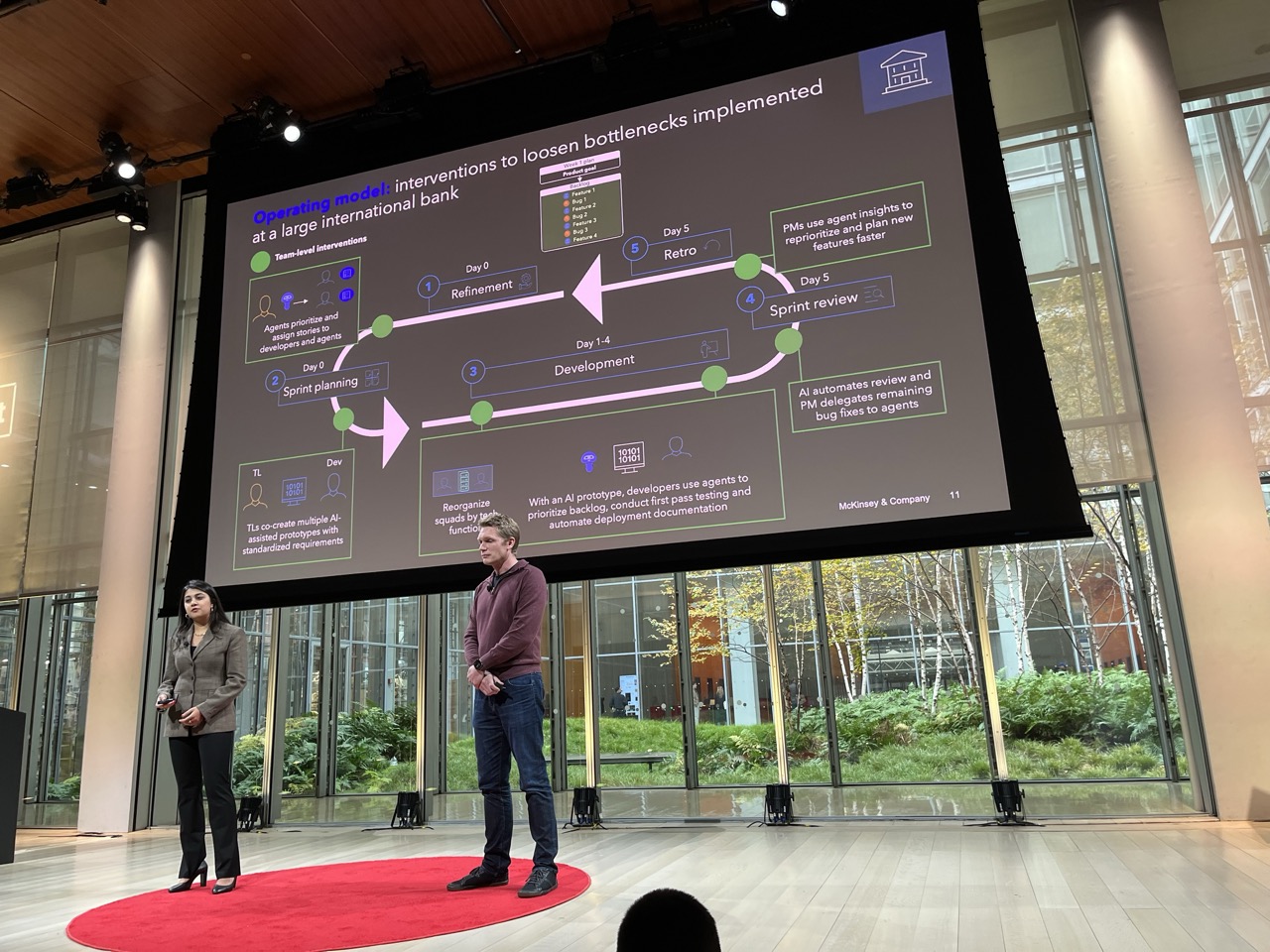

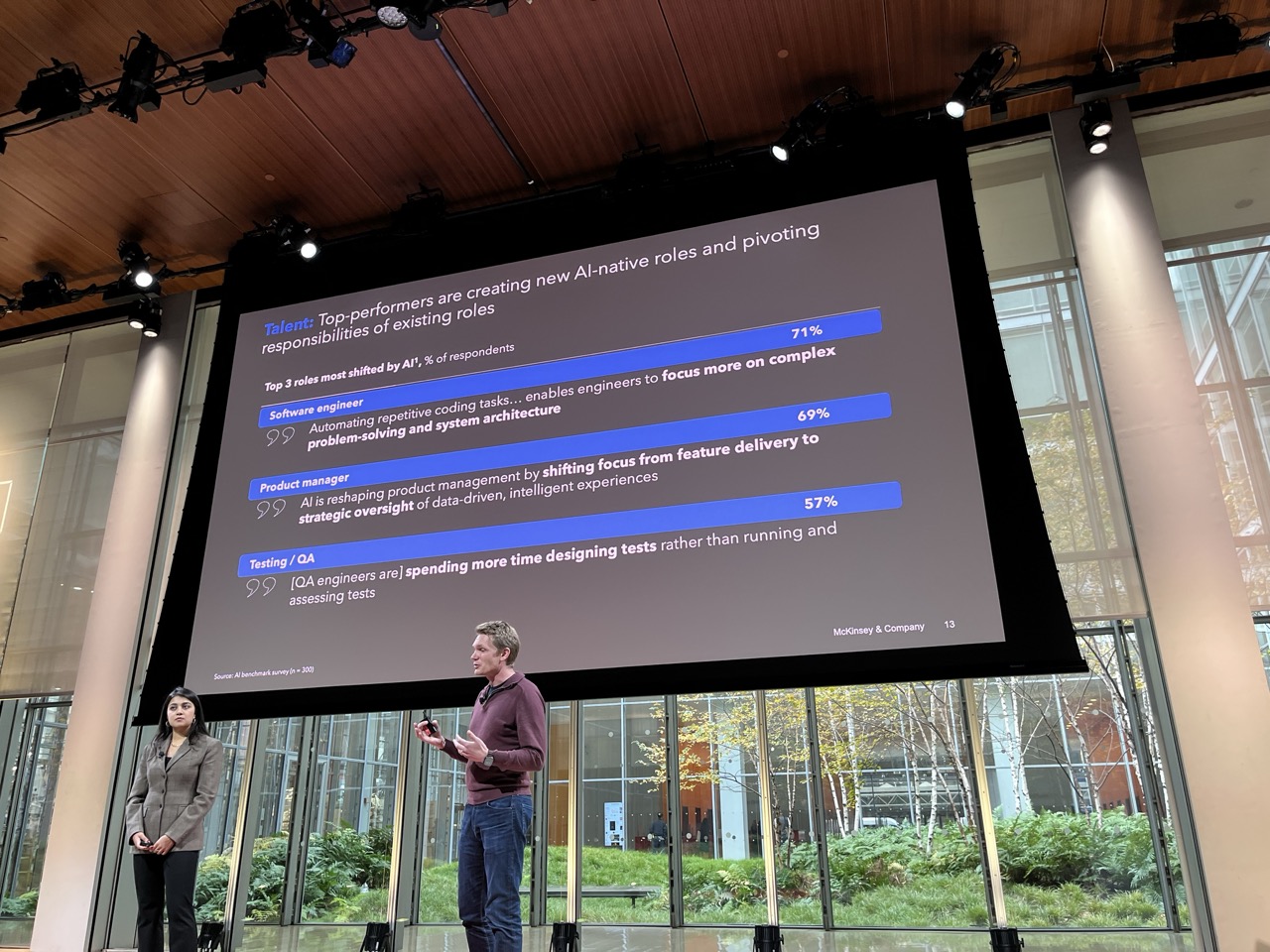

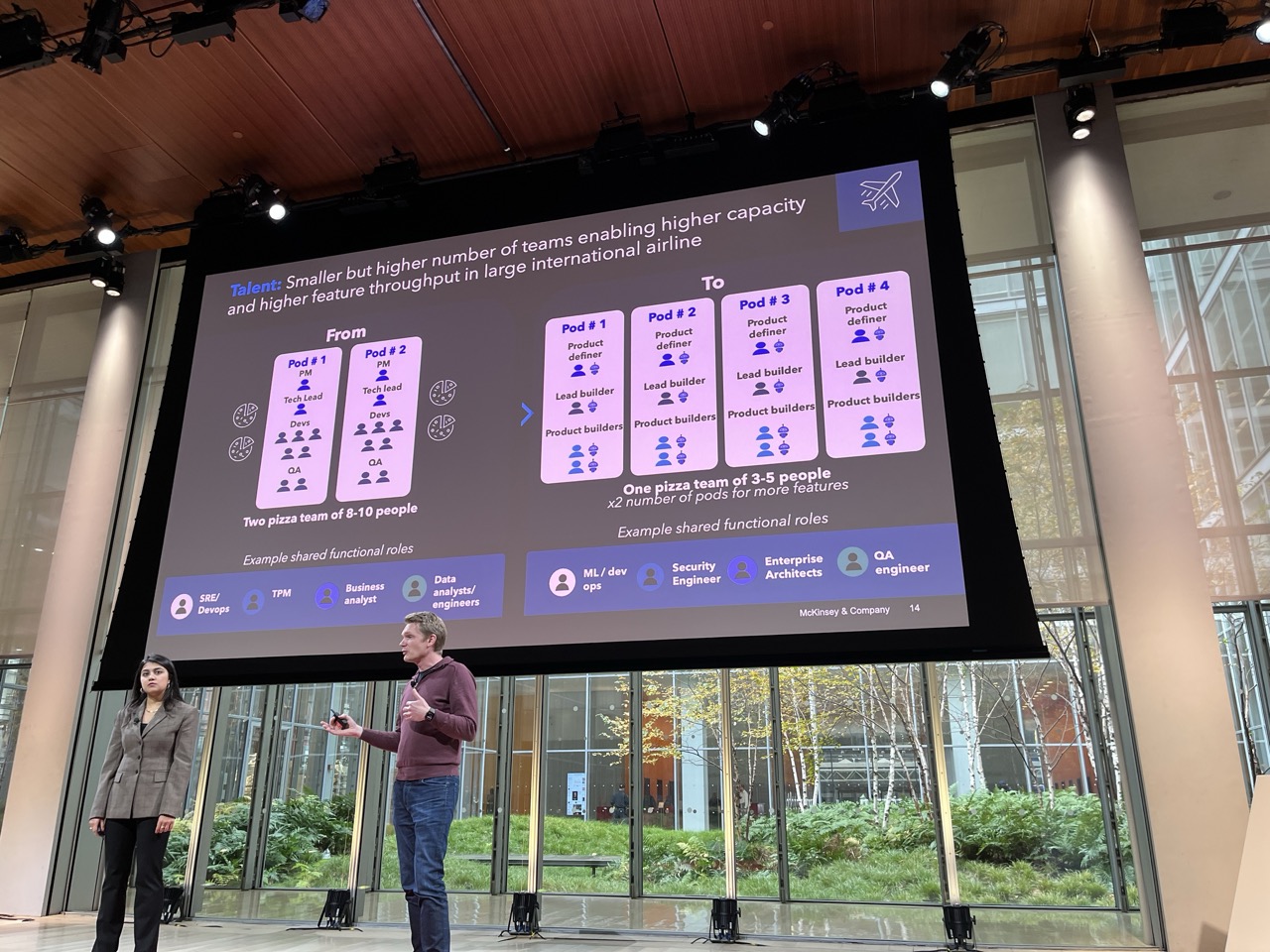

Moving away from Agile: What’s Next?

Martin Harrysson / McKinsey, Natasha Maniar / McKinsey

Brainstormed the post-Agile world: if you don’t speed up the whole cycle, you get pileups of unaddressable work, e.g. in the number of PRs.

Organizations are less productive than individuals.

“Agent Factories”

What do AI Native workflows and AI native roles look like?

AI Agents to automate all aspects of each process.

AI introduction into an org does not automatically lead to use. You need continuous improvement, training, and some successes.

Change management:

- Centralized communication

- Peer Slack channels for best practice sharing

- Role-based training

- Specific use cases

- Rewards

- Coaching / library

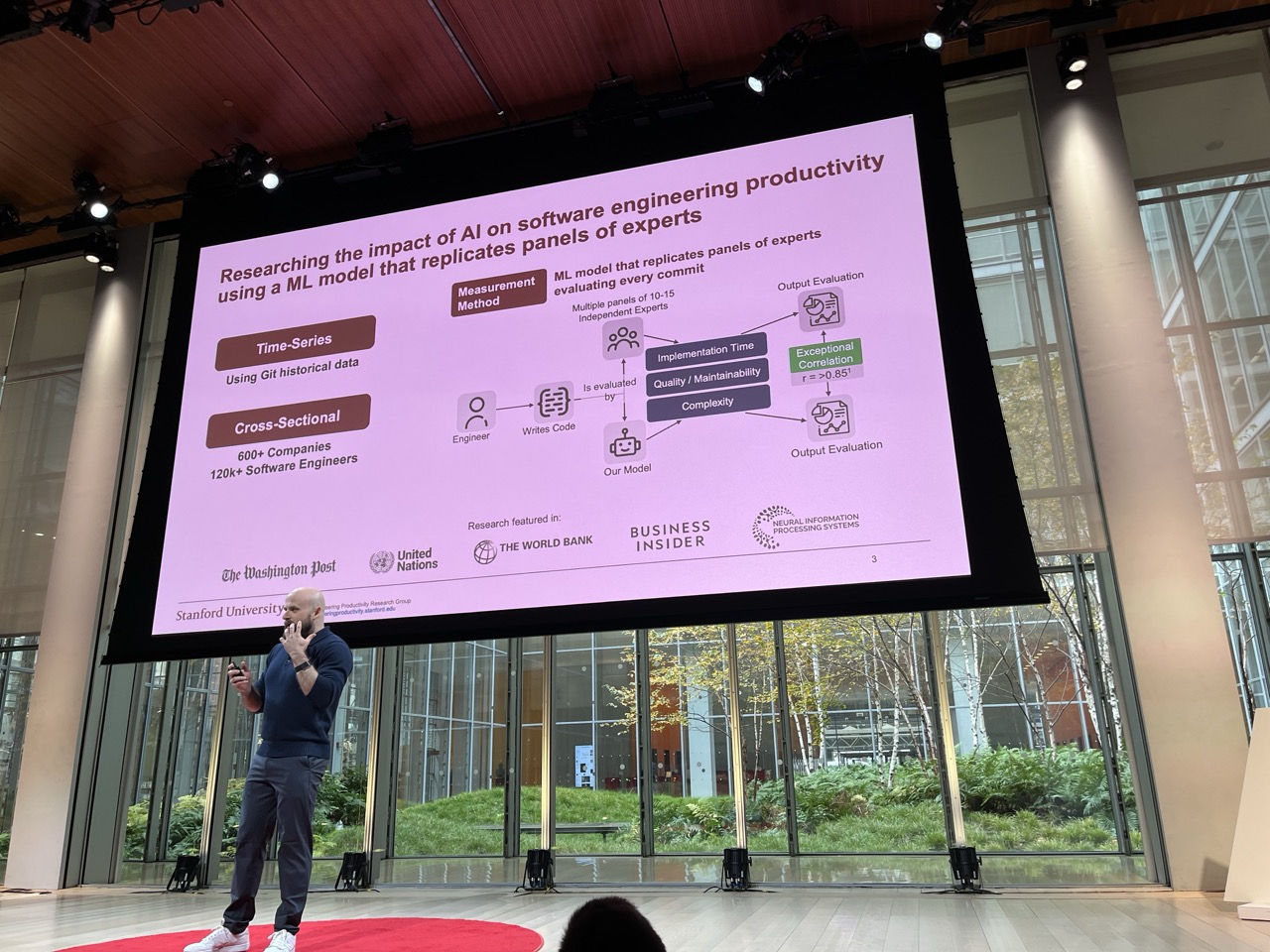

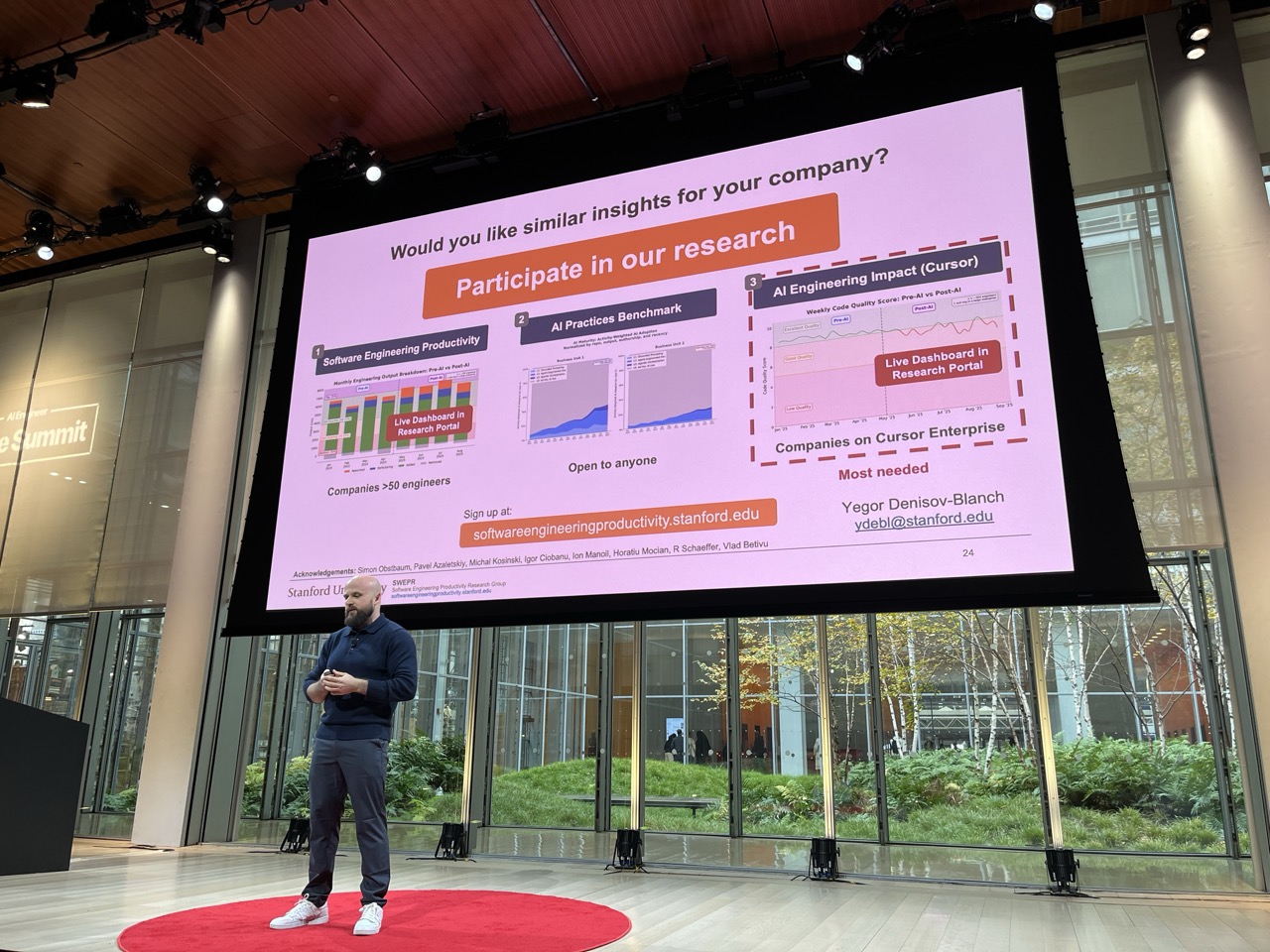

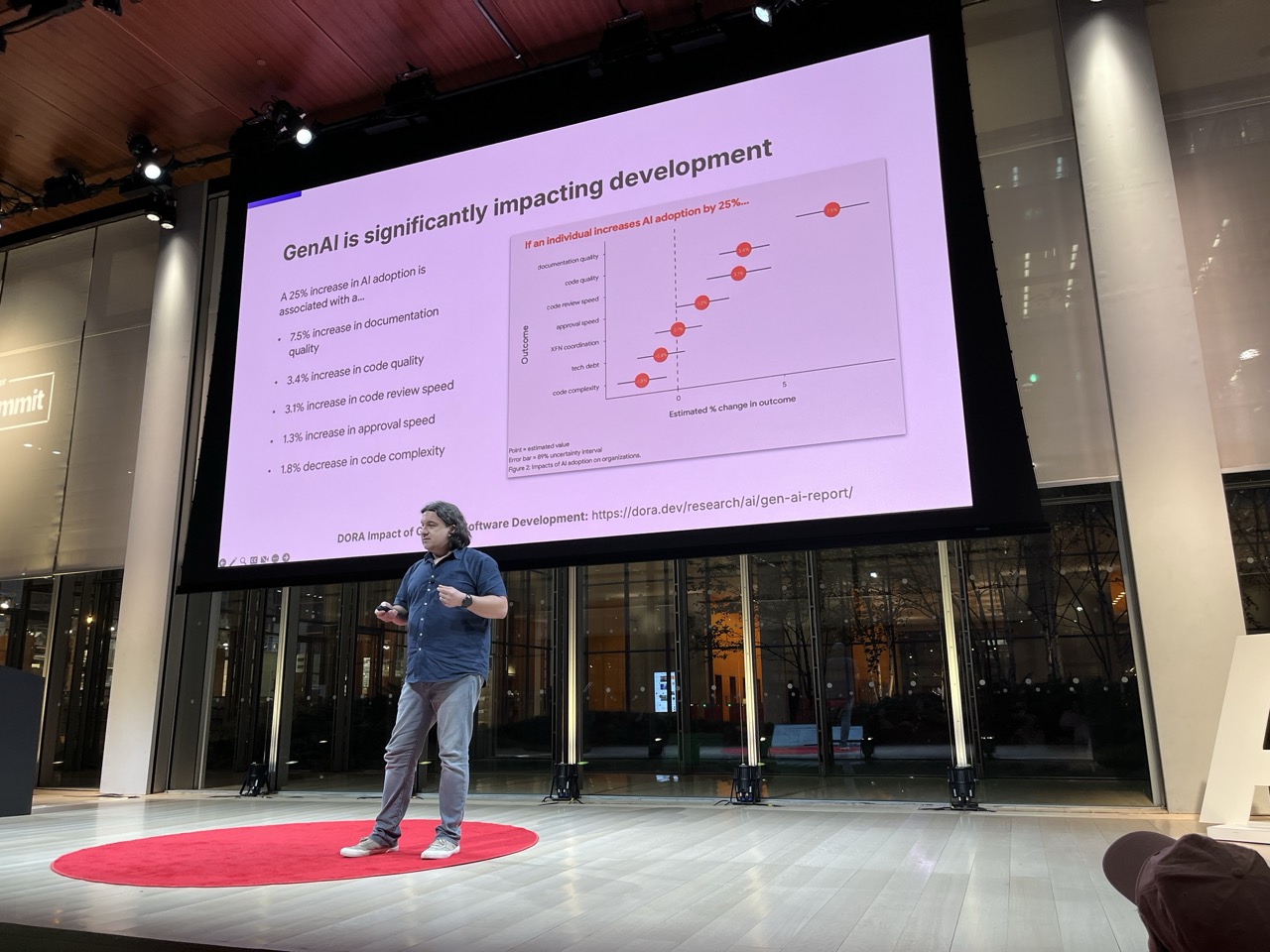

How to Quantify AI ROI in Software Engineering (Stanford Study)

Yegor Denisov-Blanch / Stanford

Measuring the impact of AI on coding

Clean coding environments lead to better outcomes:

- tests / types / docs / modularity

Tool access doesn’t lead to tool usage.

Engineering outcomes are easiest to measure.

Cursor gives per-line level data.

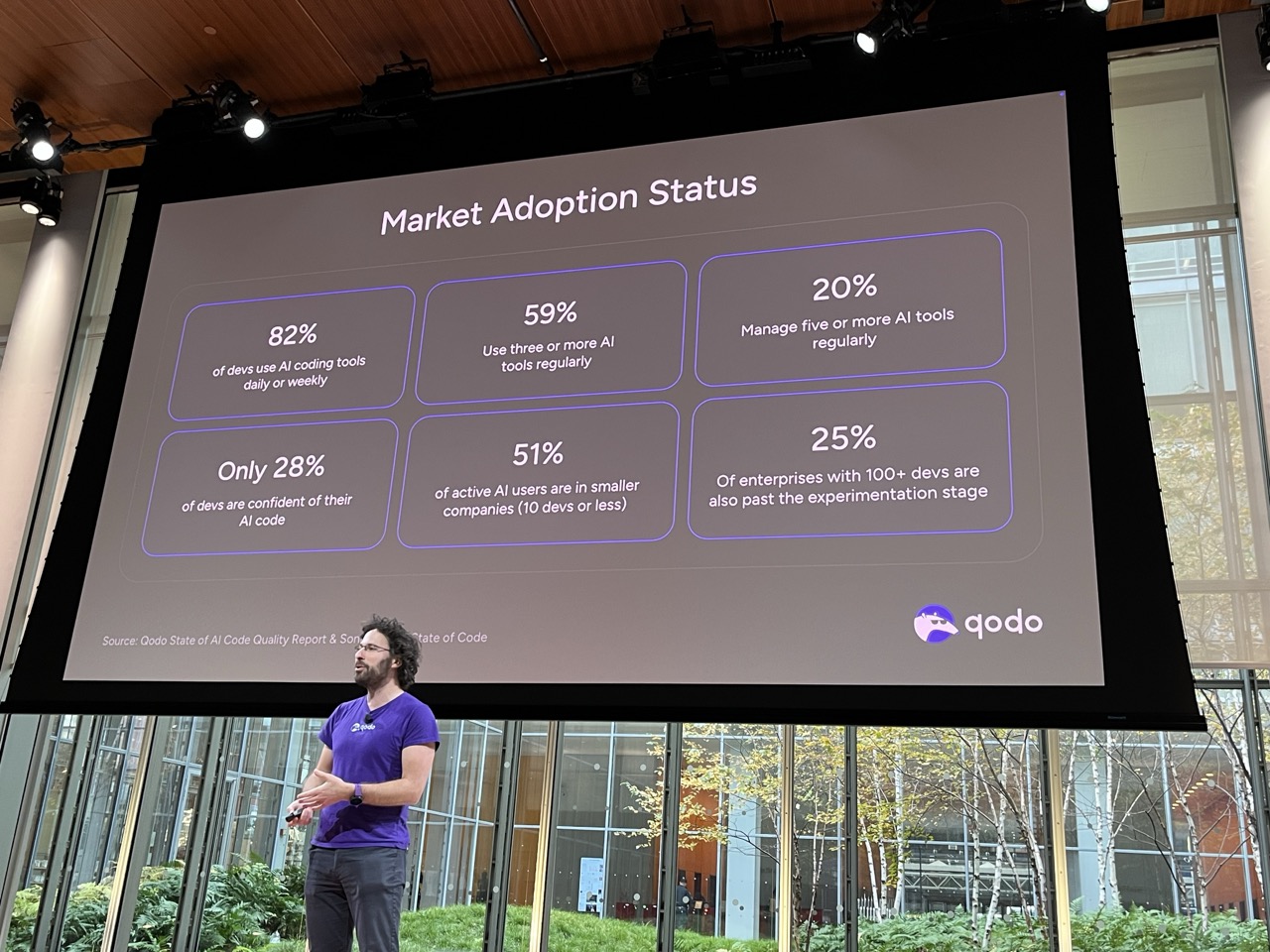

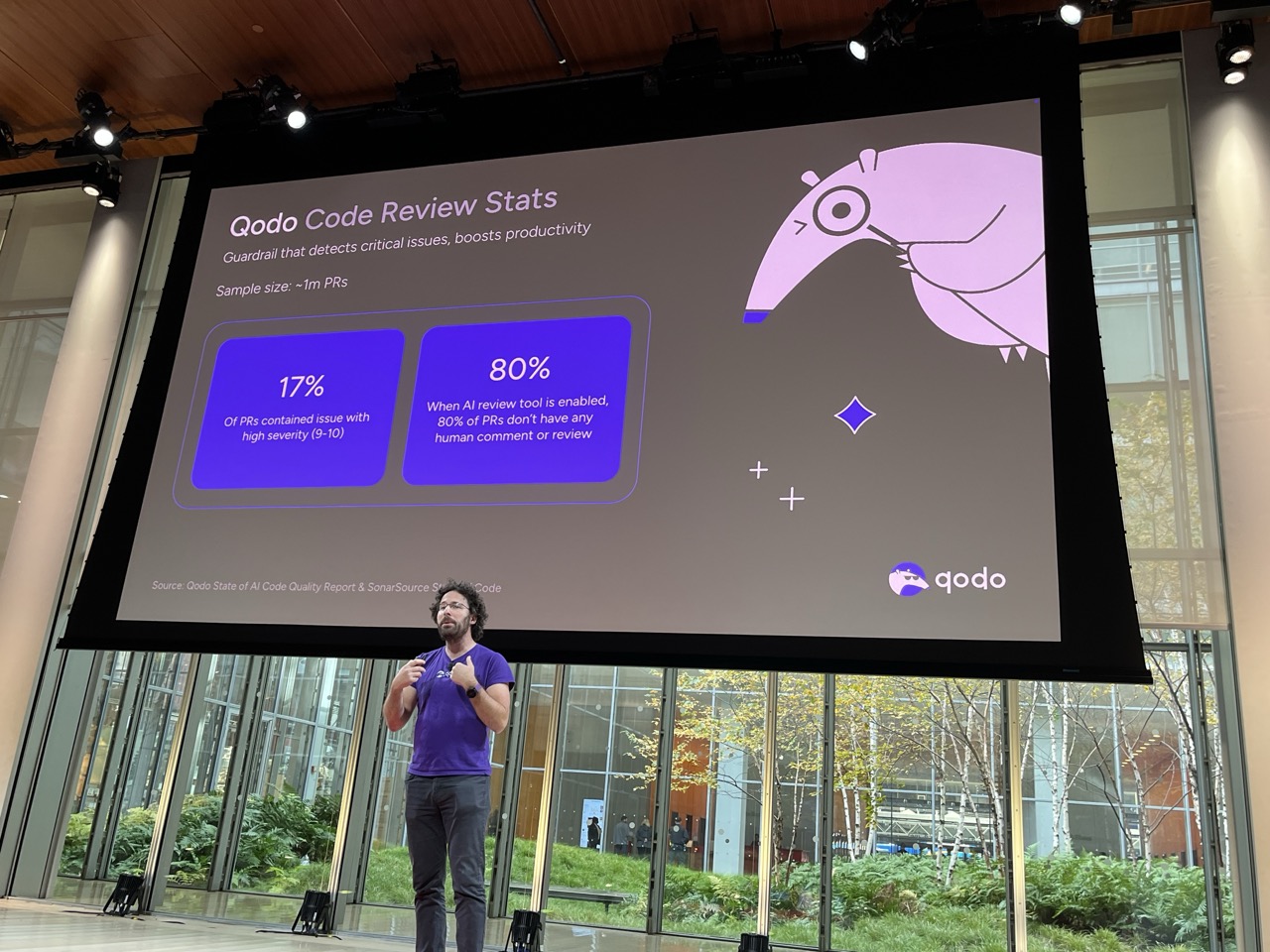

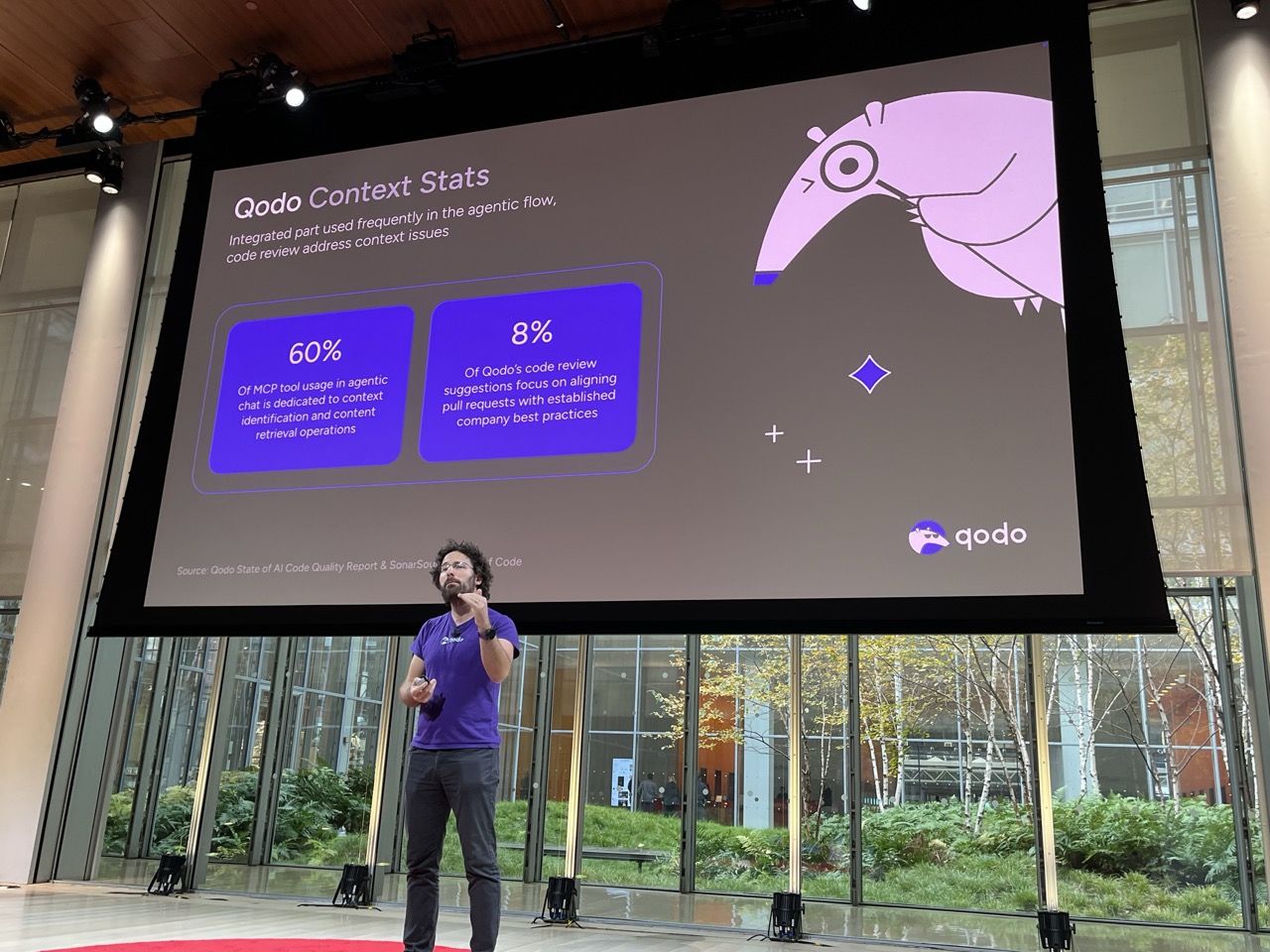

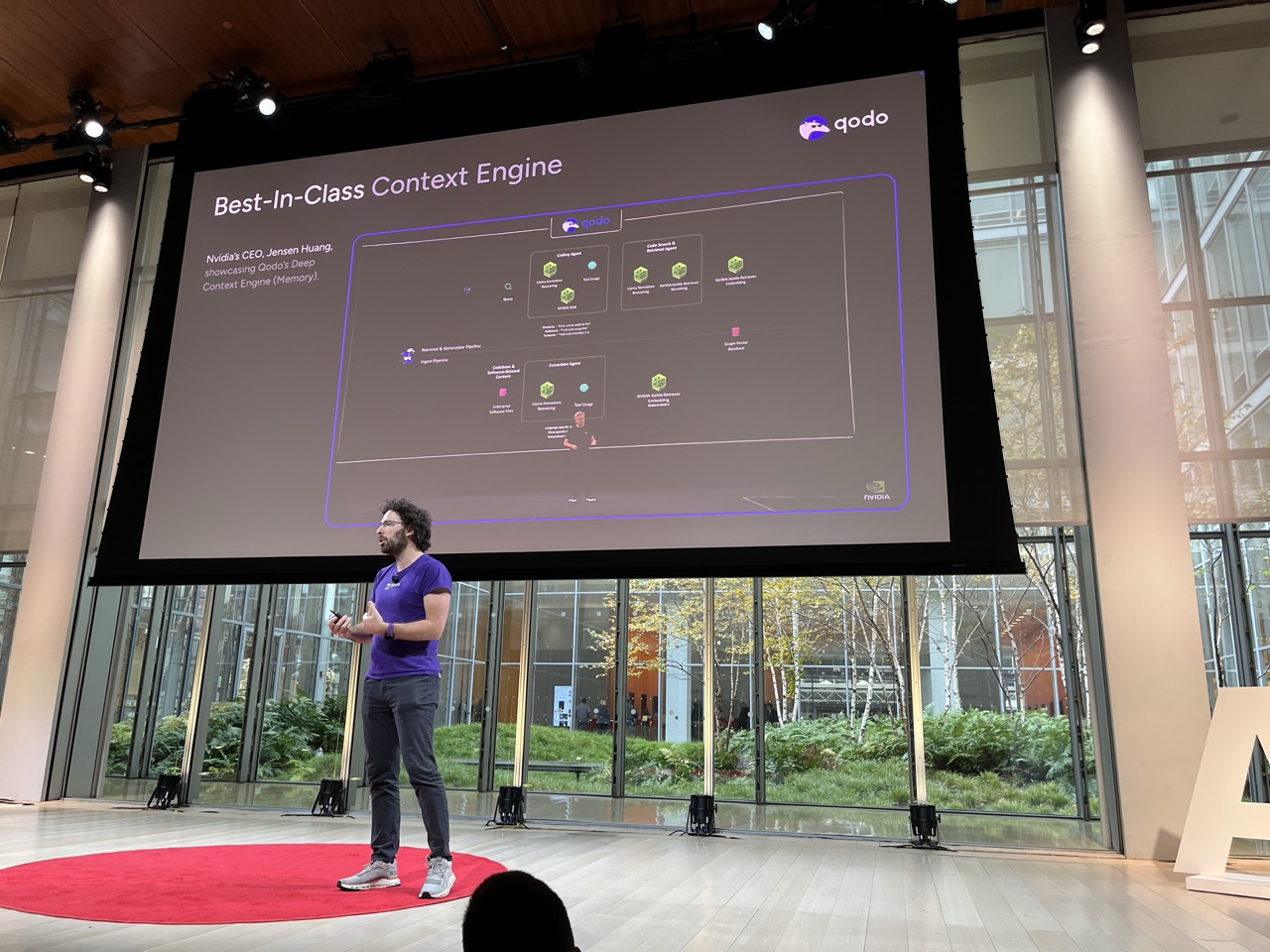

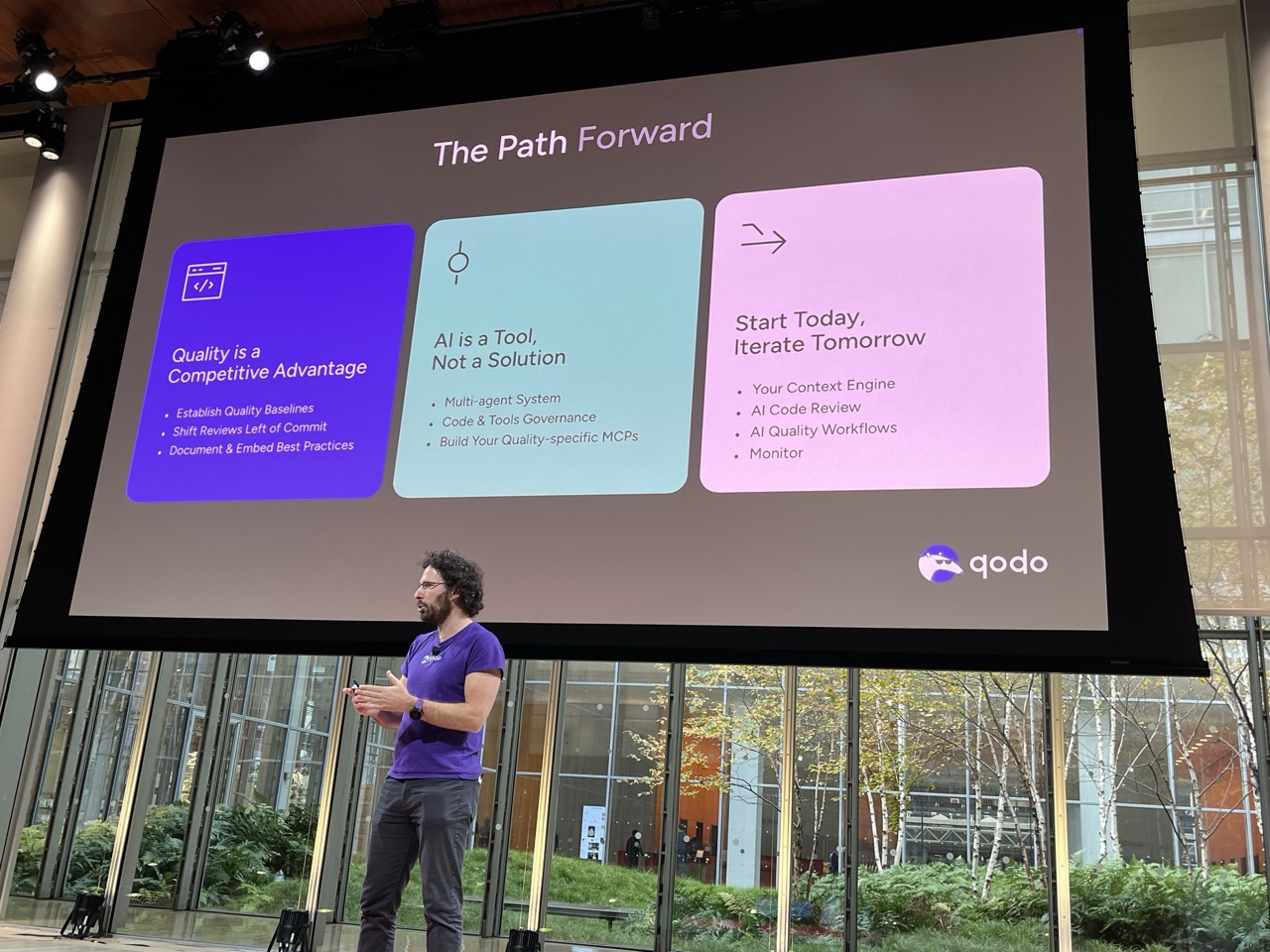

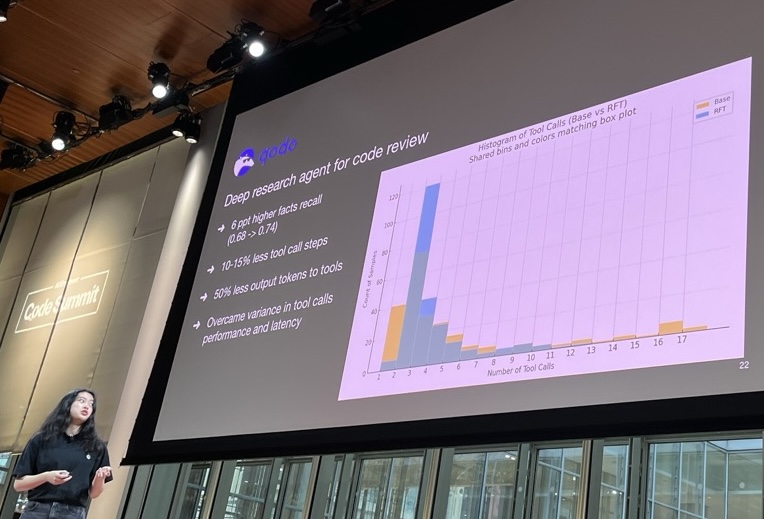

The State of AI Code Quality: Hype vs. Reality

Itamar Friedman / Qodo

Rules can get ignored.

Improve code via:

- code gen

- agentic code gen

- agentic QC

- learning feedback that provides QC

Lots of quality concerns around code produced by models.

Iceberg metaphor:

- Code Gen is only part of the story

- Code integrity is the long tail: maintenance, review, standards

- There are code-level problems (insecure code, etc.)

- There are Process-level problems: learning / verification / guardrails / standards

- Bad code leads to large long term costs on the team

Add AI testing to increase trust.

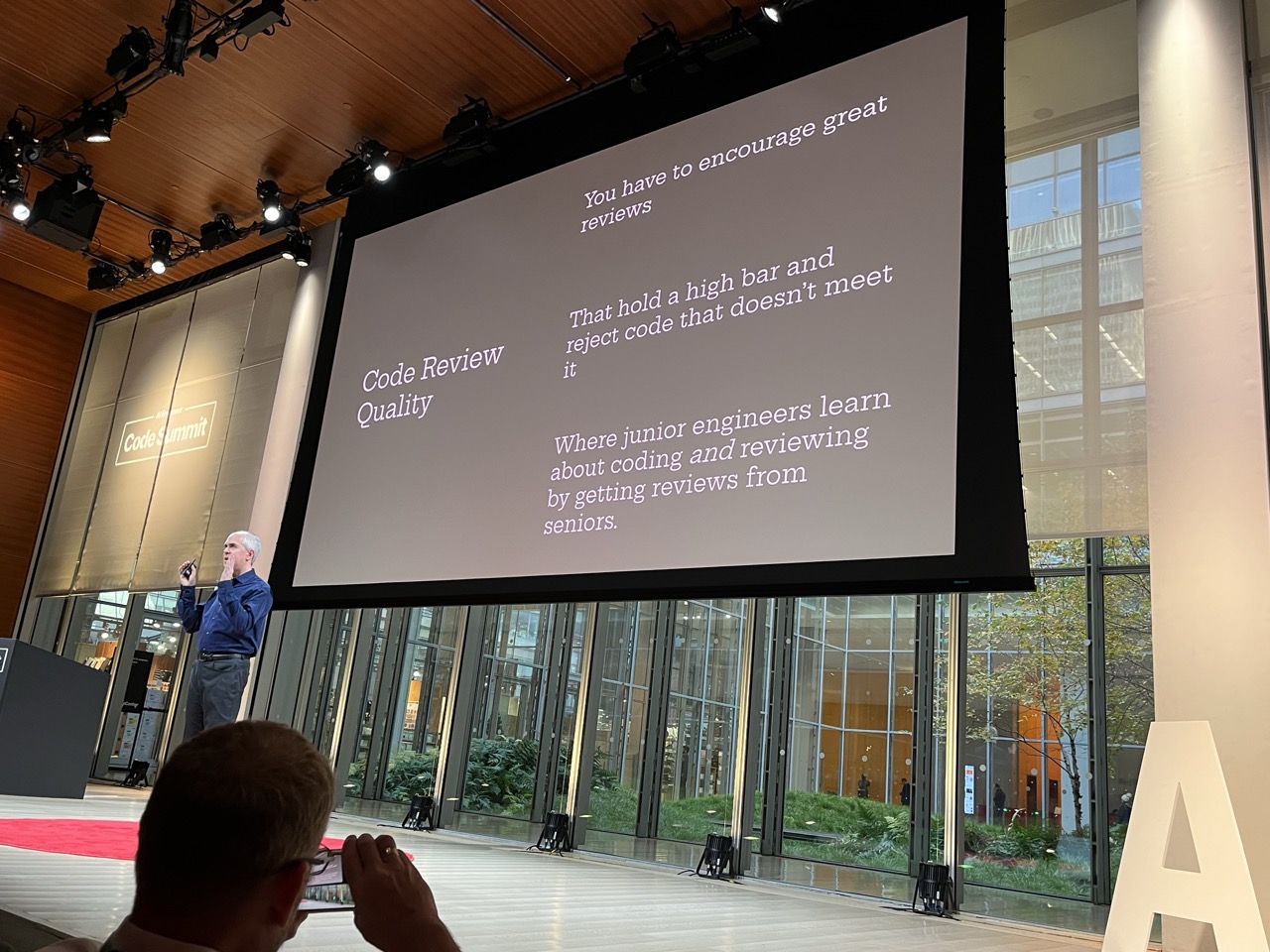

Make sure there is code review:

- code review isn’t ONLY for code quality, it’s also for learning the code together

Context engine to help know/understand the parts of the code that are relevant:

- context engine in the code review process

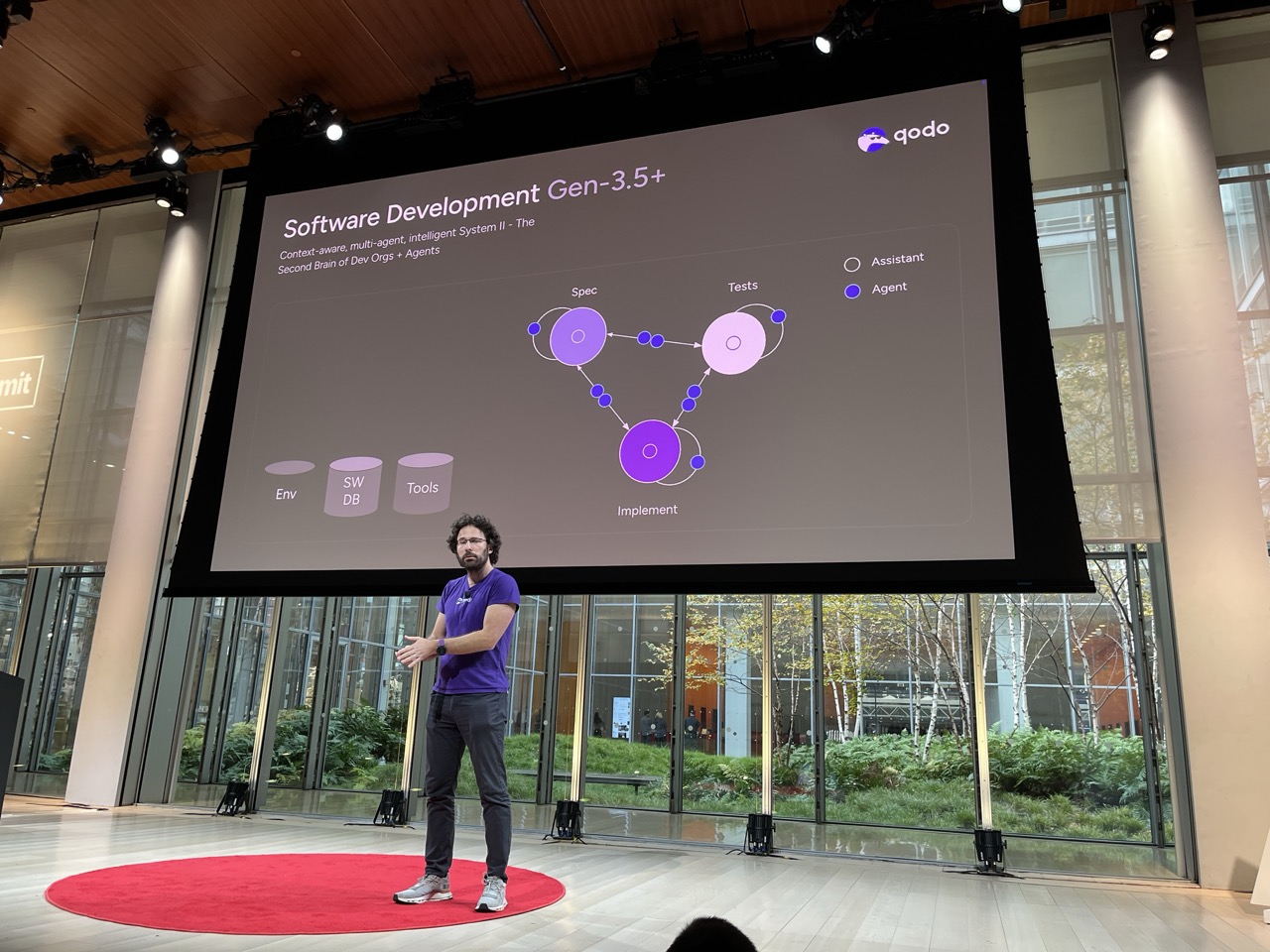

Software Dev Gen 3.5:

- Triangle: Spec / Implementation / Tests

- Agents for each part that also talk to one another

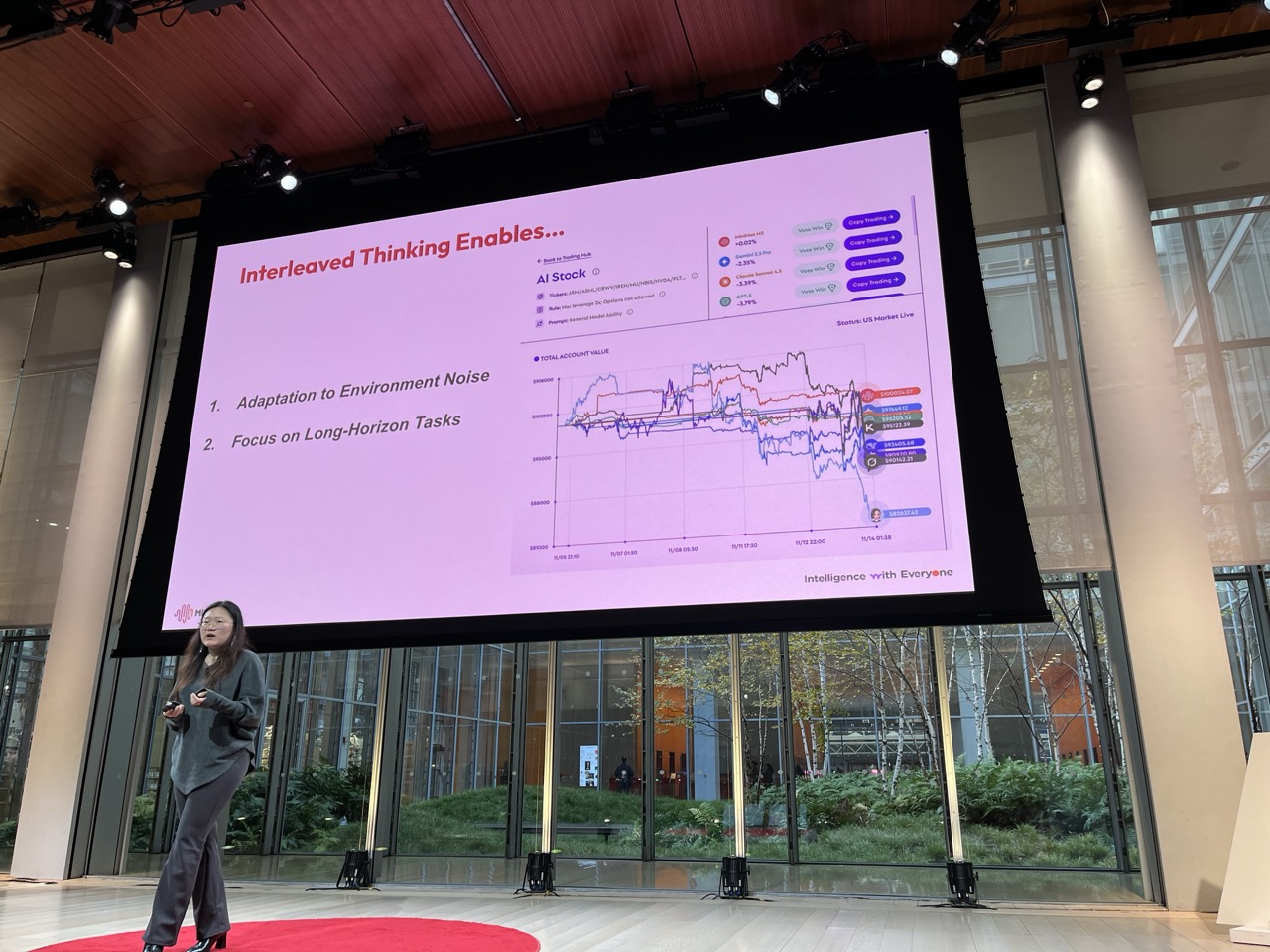

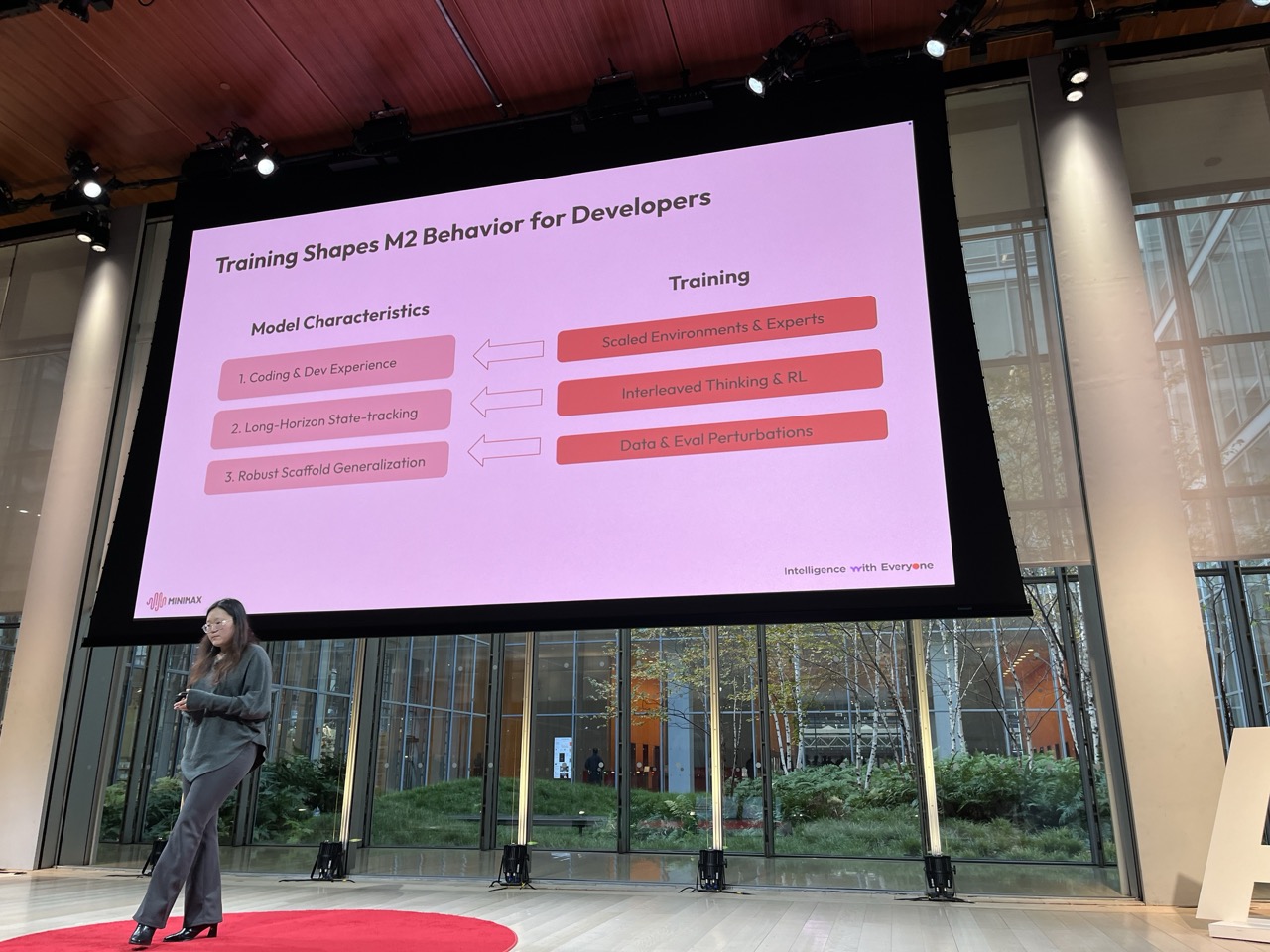

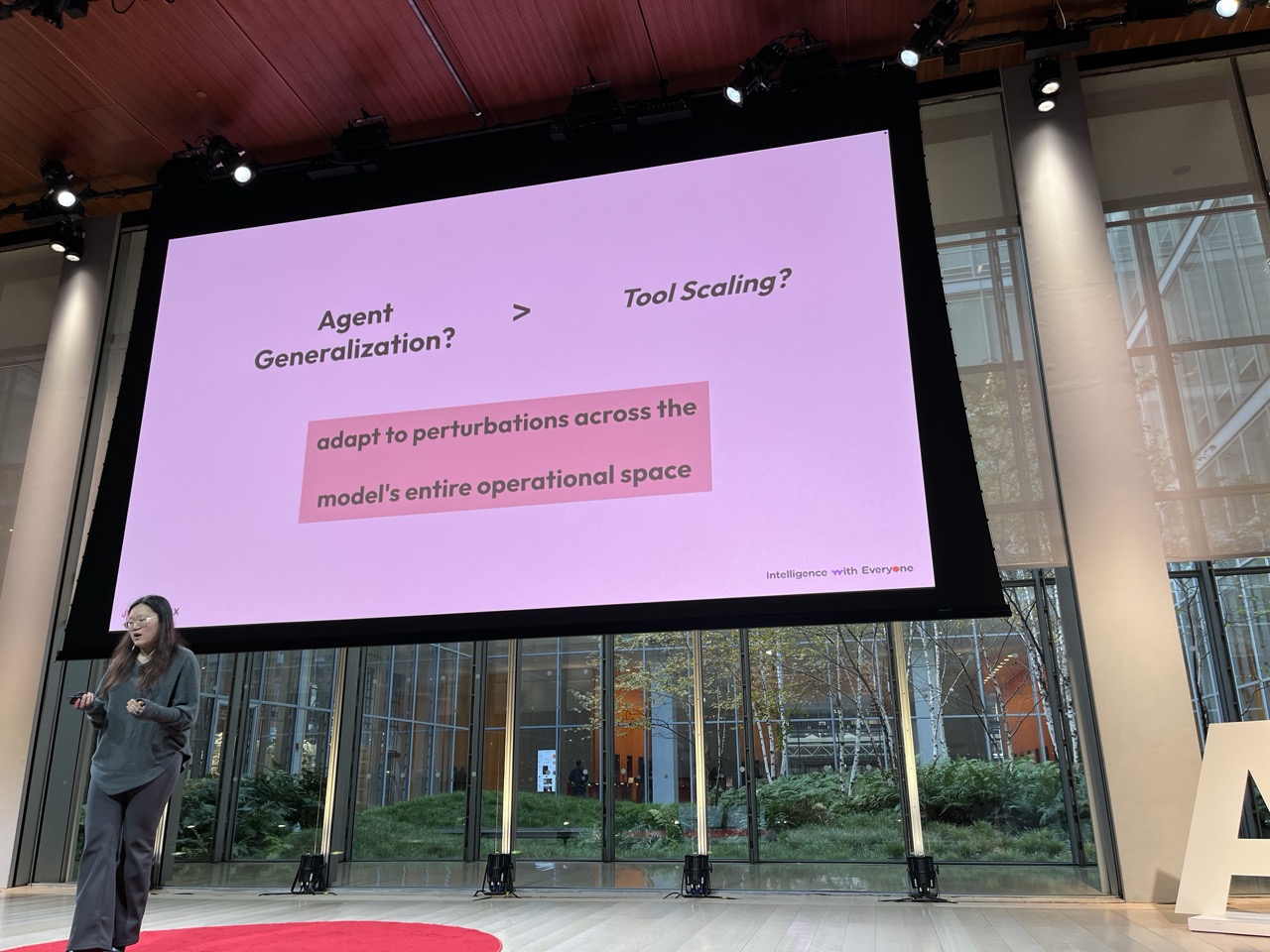

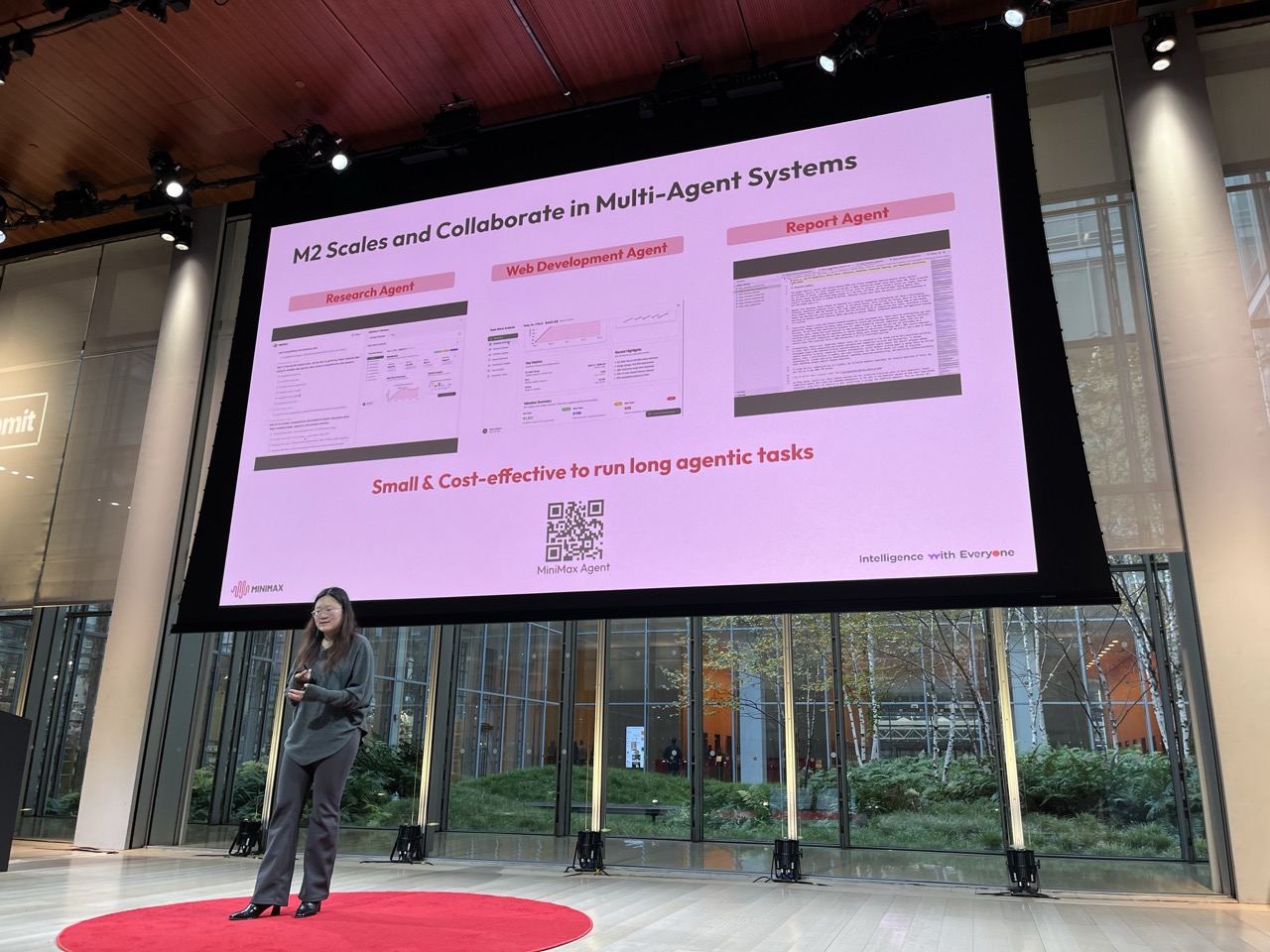

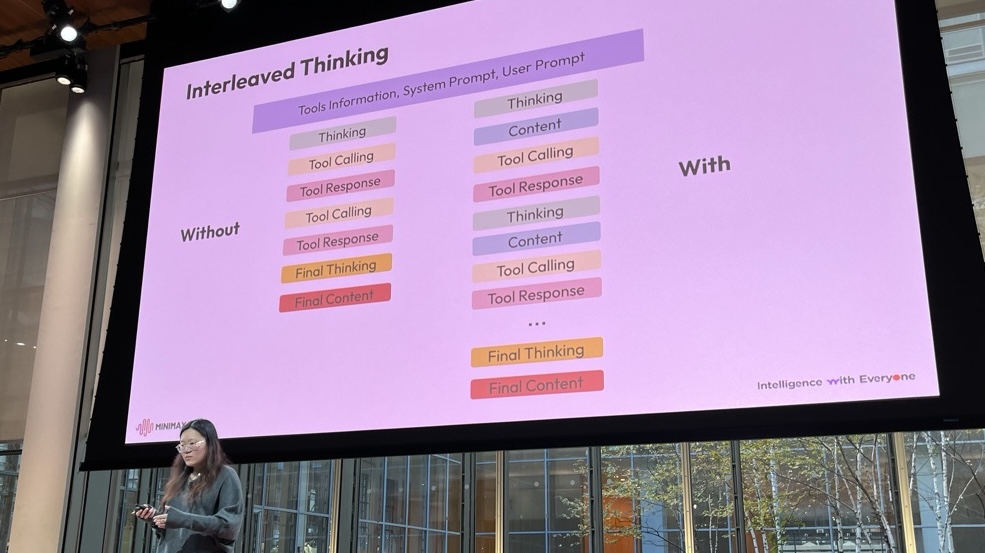

MiniMax M2

Olive Song / MiniMax

The model excels across many modalities and tool uses.

Interleaved thinking improves its performance.

Kath Korevec / Google Labs

Proactive Agents

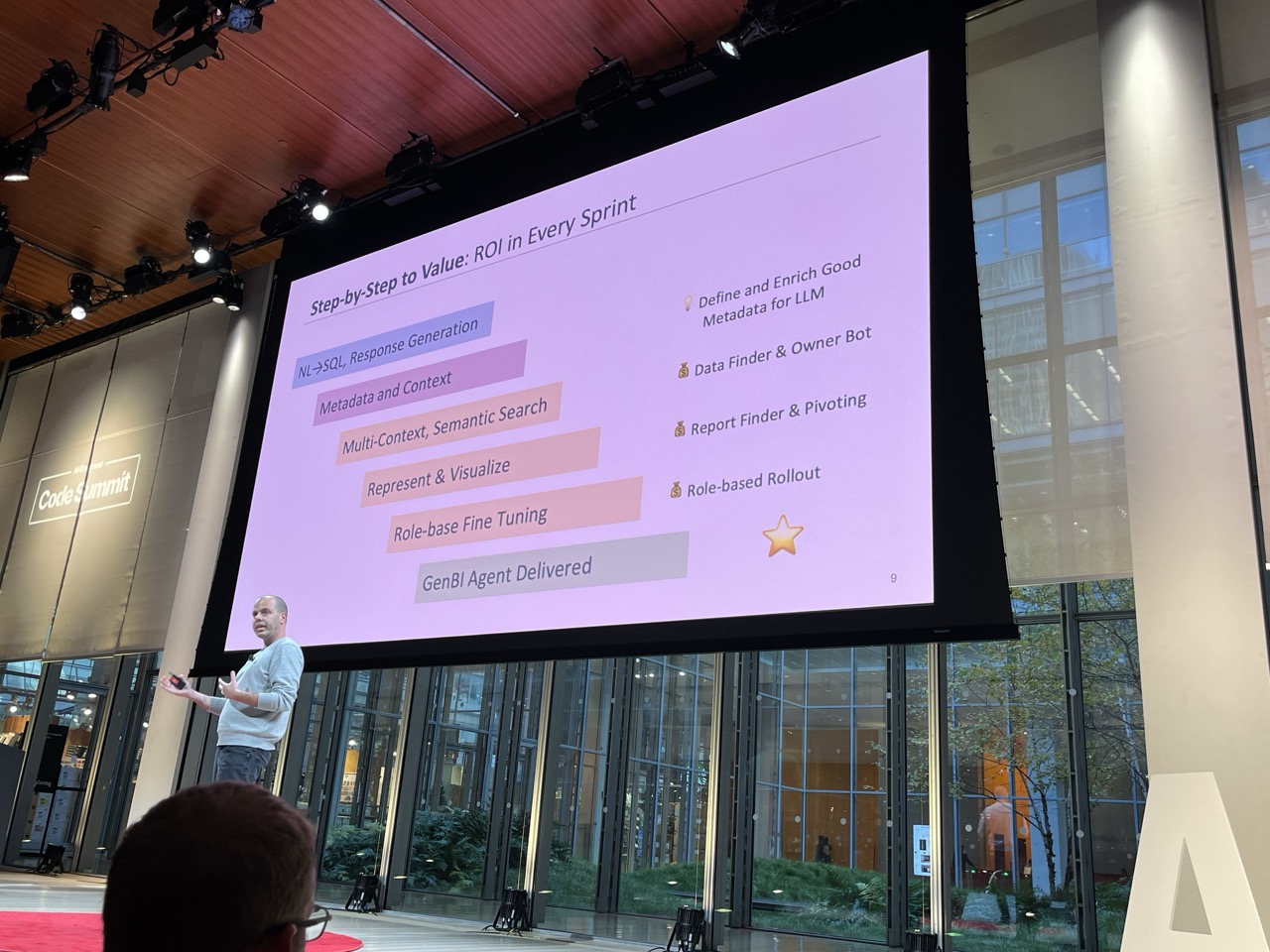

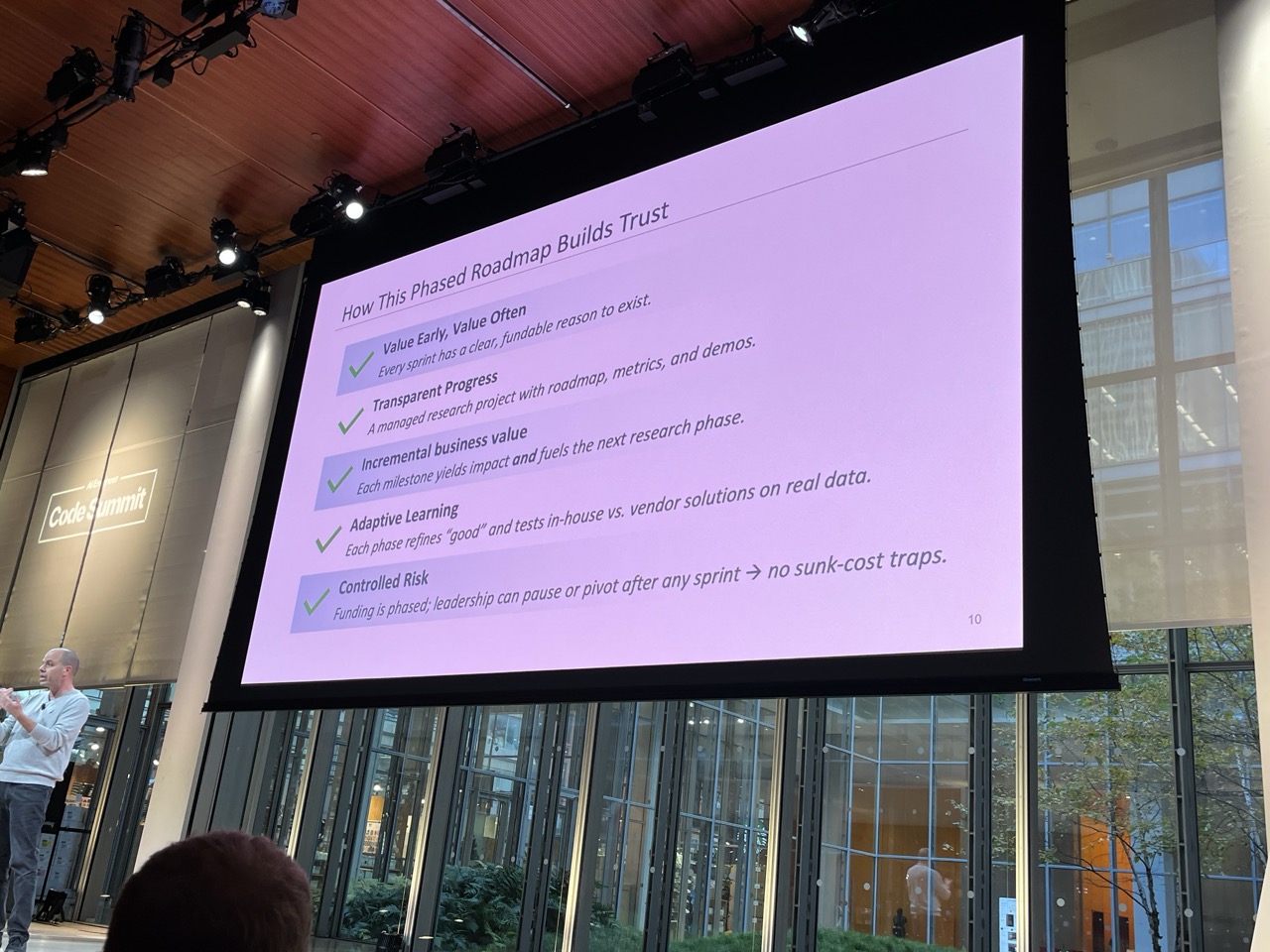

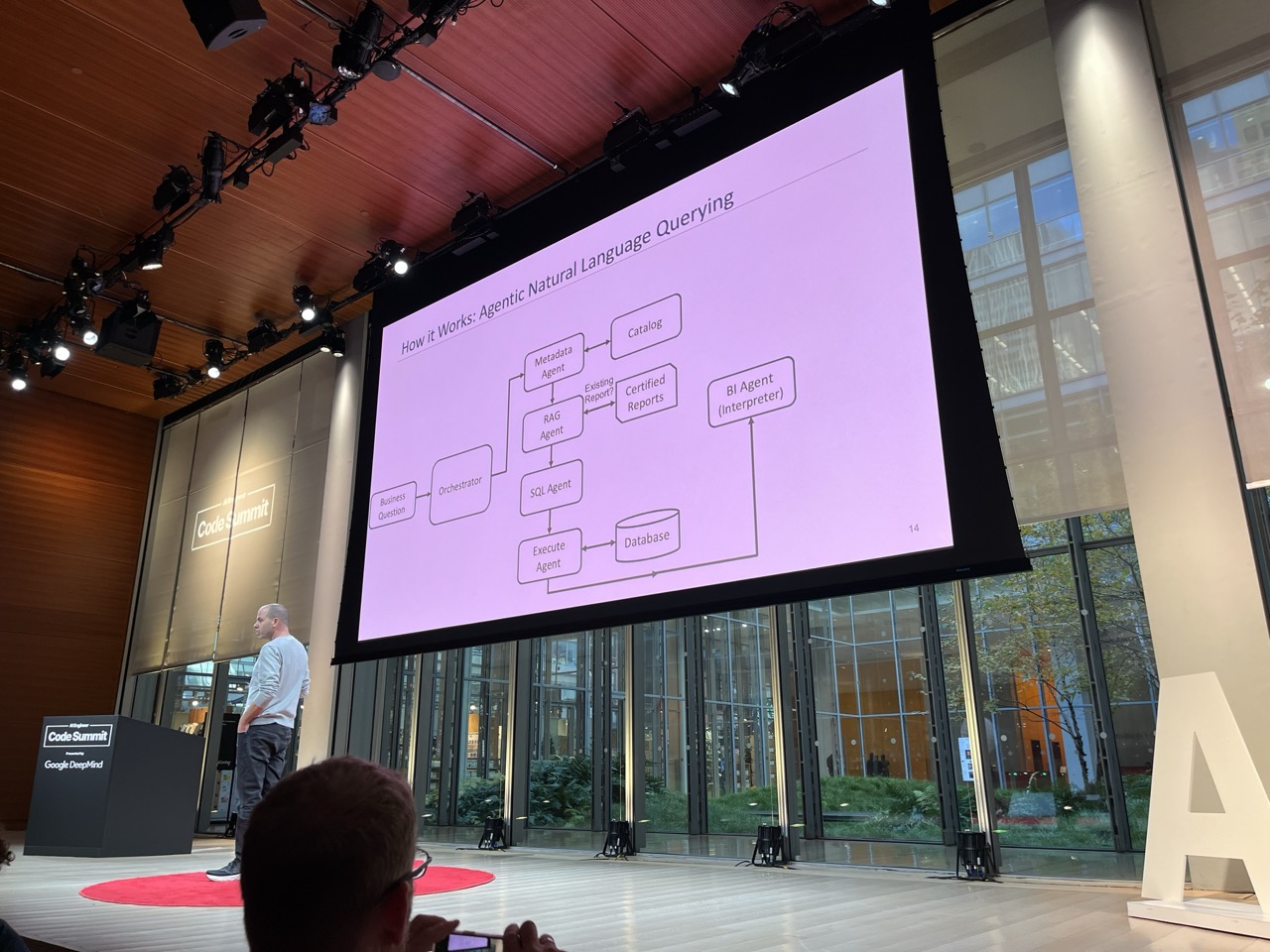

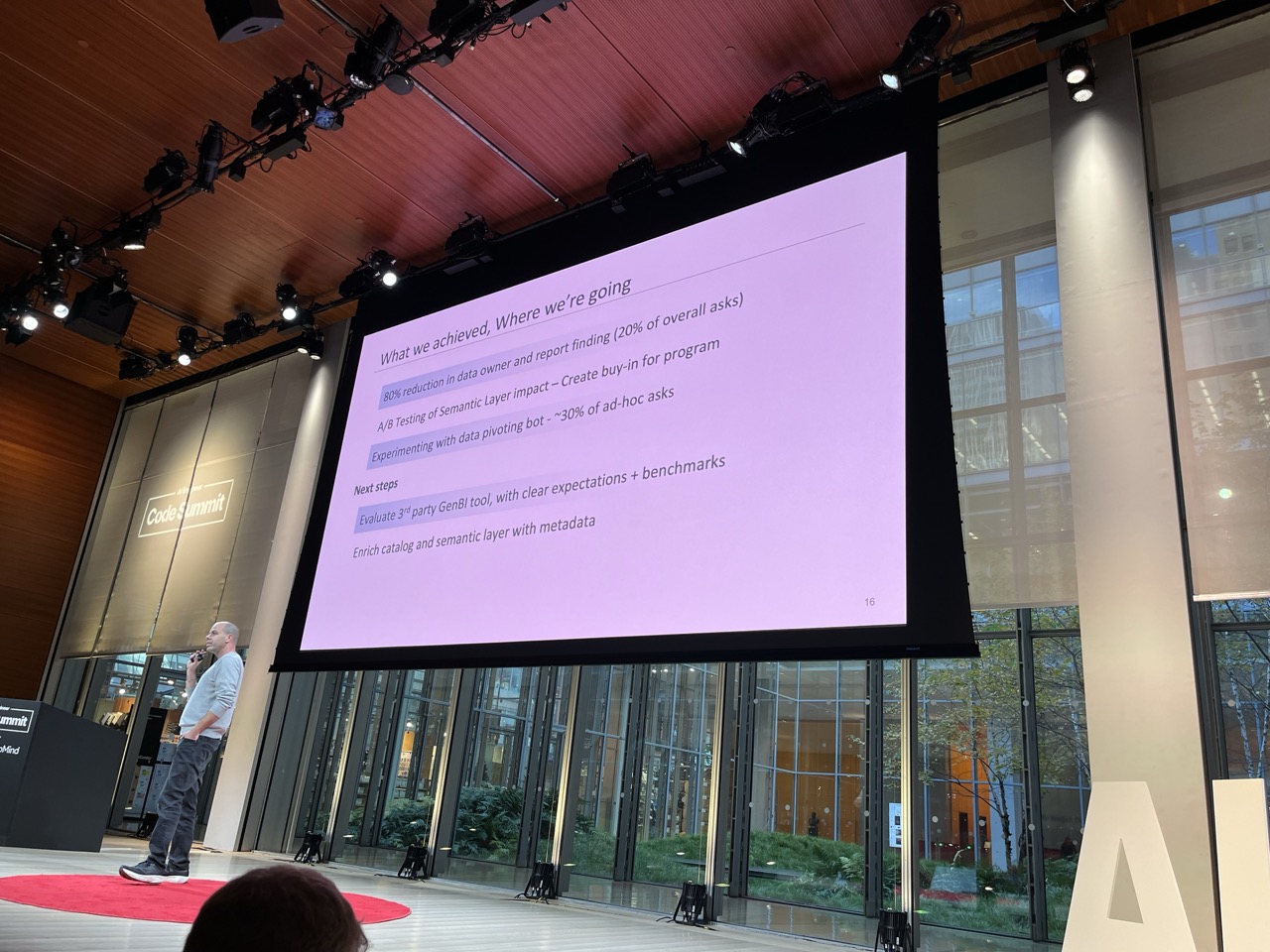

Small Bets, Big Impact: Building GenBI at a Fortune 100

Asaf Bord / Northwestern Mutual

GenBI tool. One simple win was to automate the retrieval of reports.

You need:

- LLM ready data fabric

- Invisible co-pilots inside Slack / CRM / BI

- Secure models

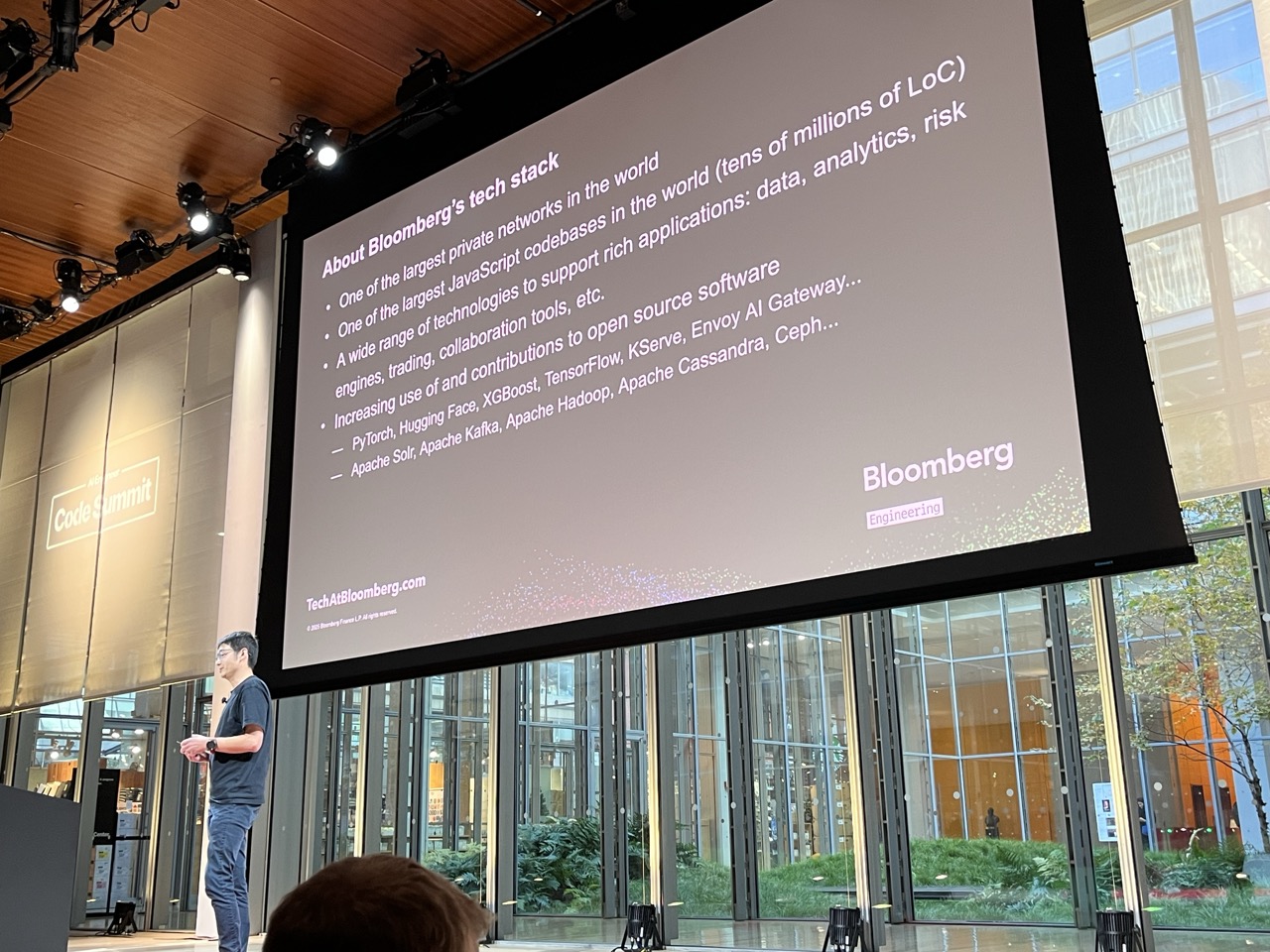

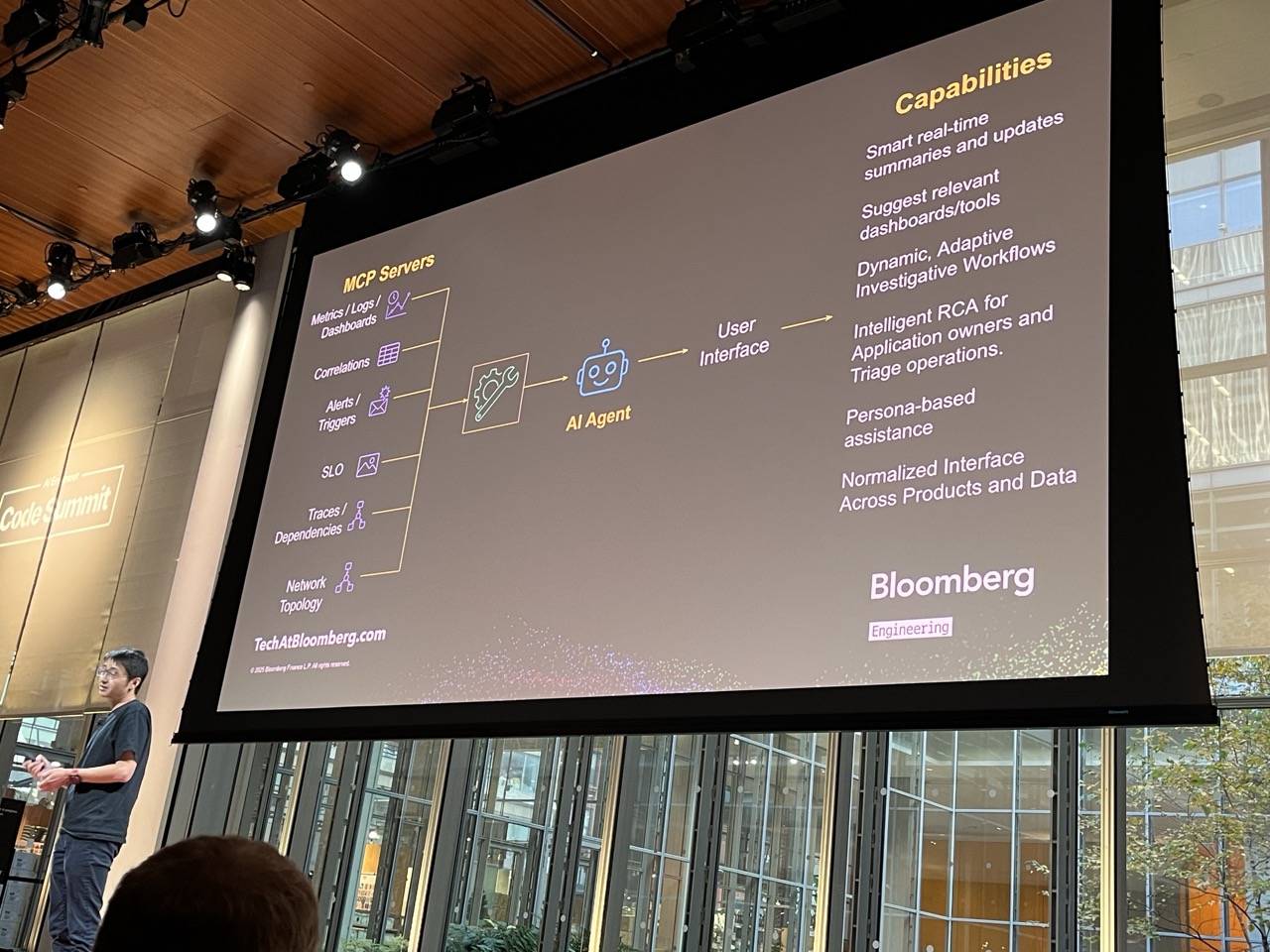

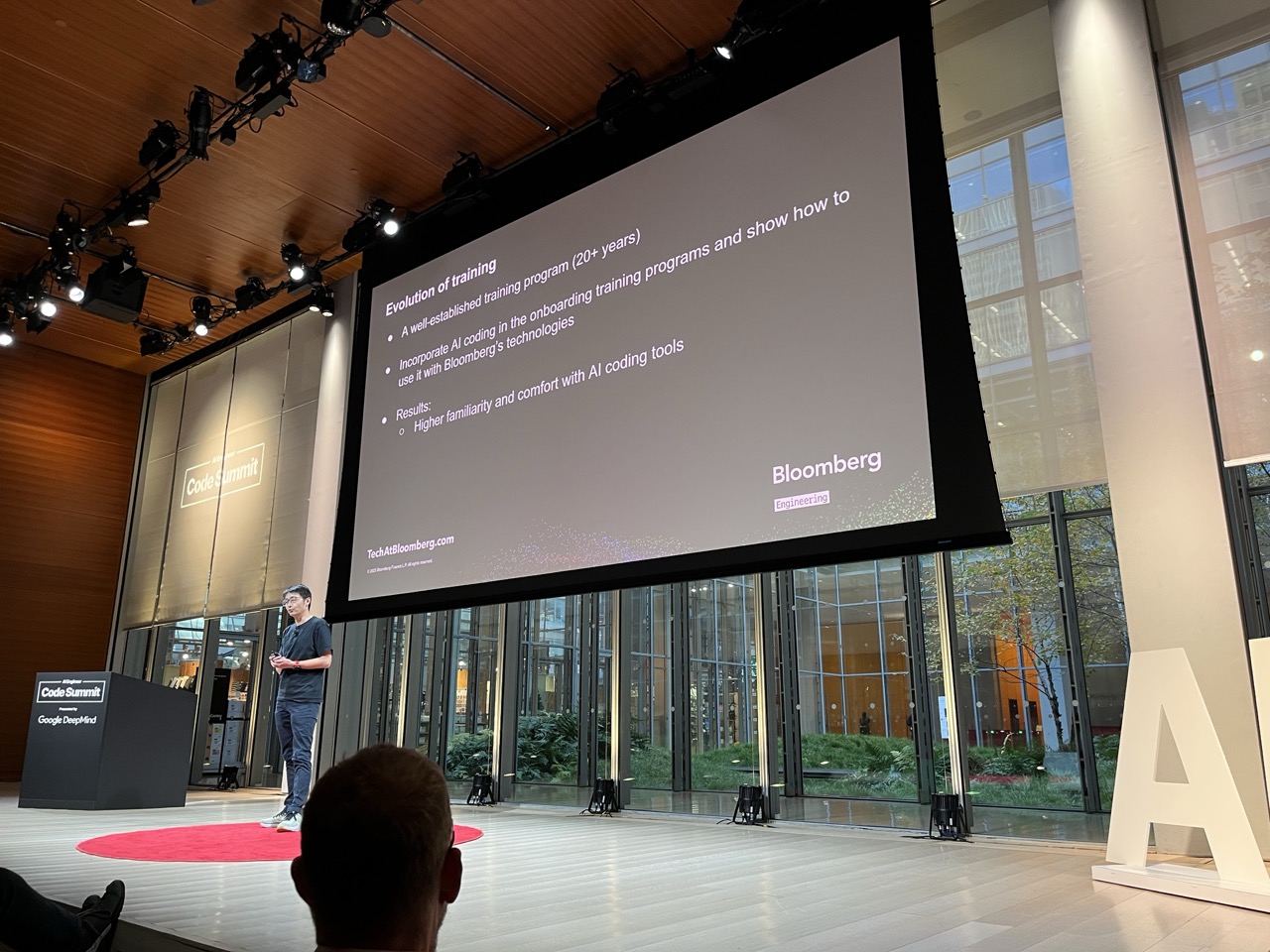

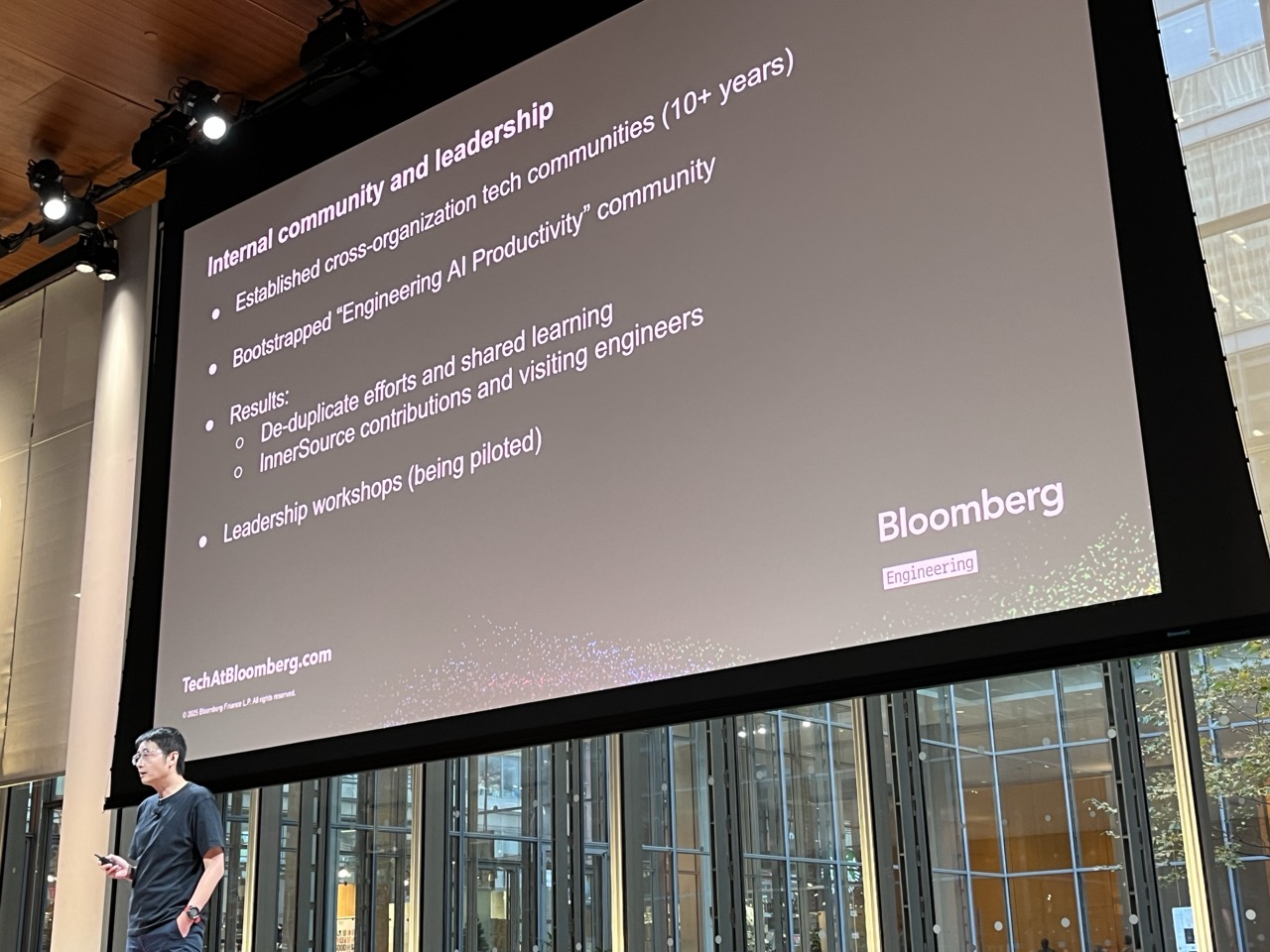

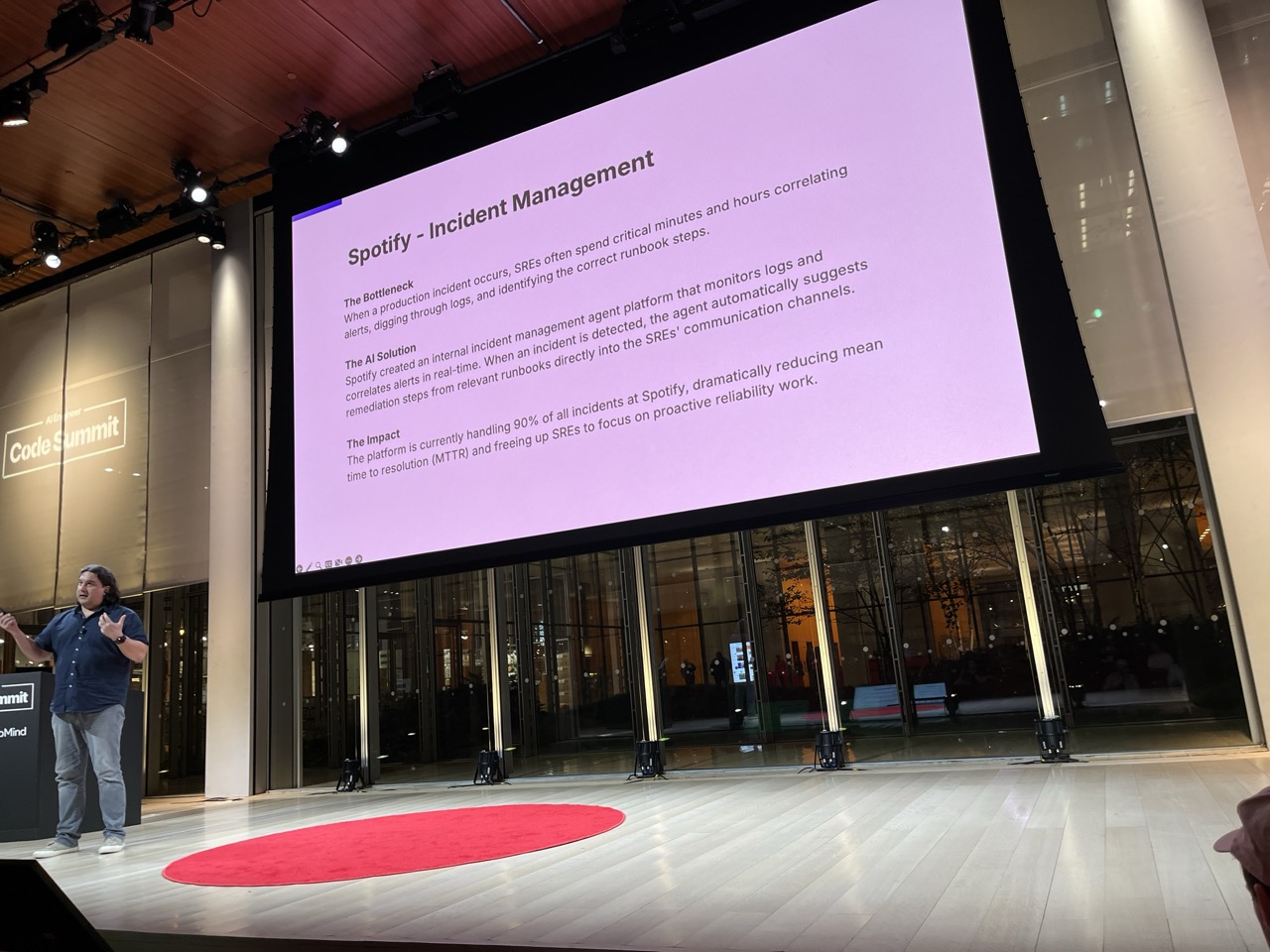

What We Learned Deploying AI within Bloomberg’s Engineering Organization

Lei Zhang / Bloomberg

9000+ engineers!!

Ideas:

- Uplift agents - take care of routine patching

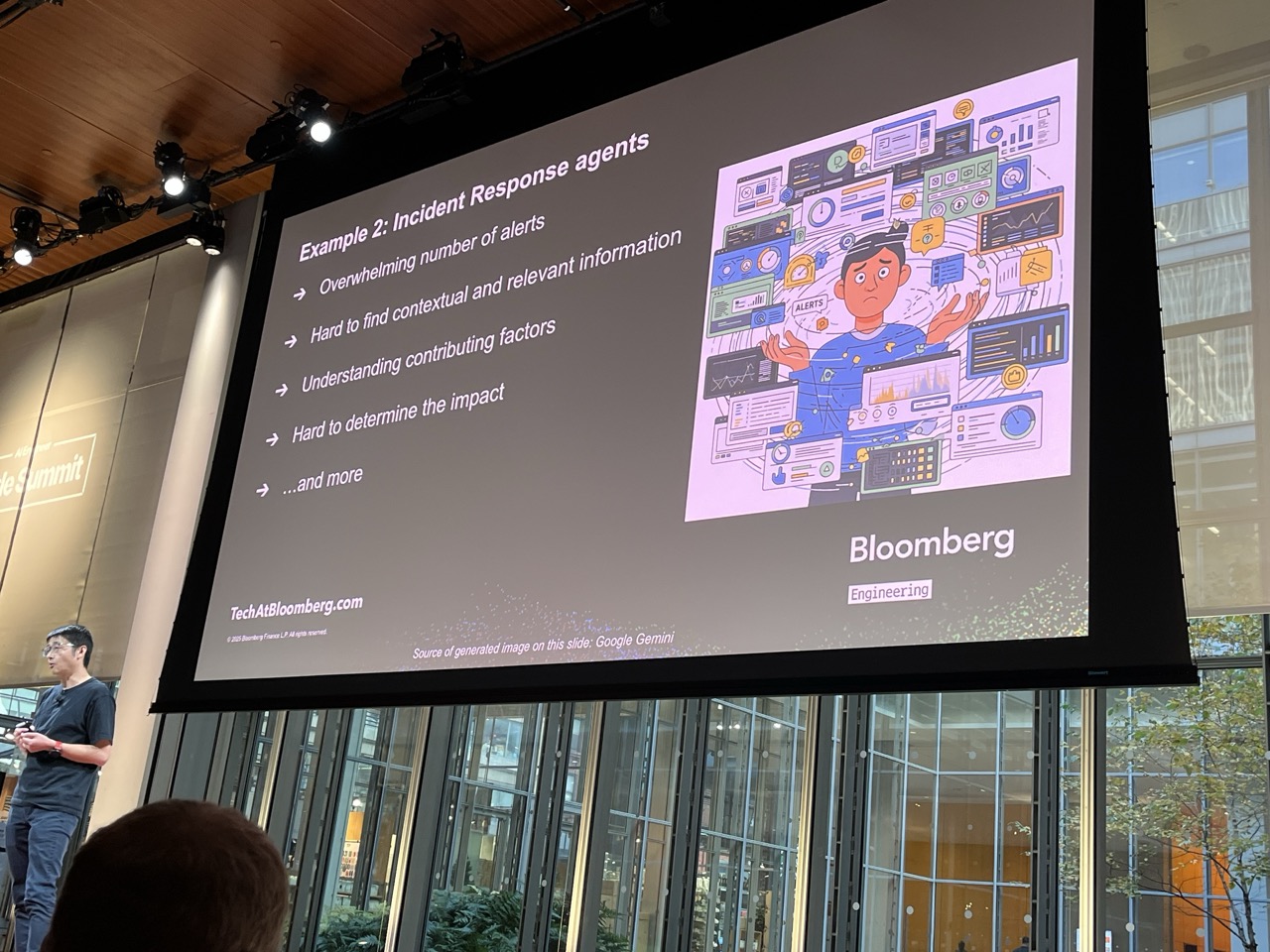

- Incident response agents

- All internal data accessible via MCPs so AI agents can be written against it

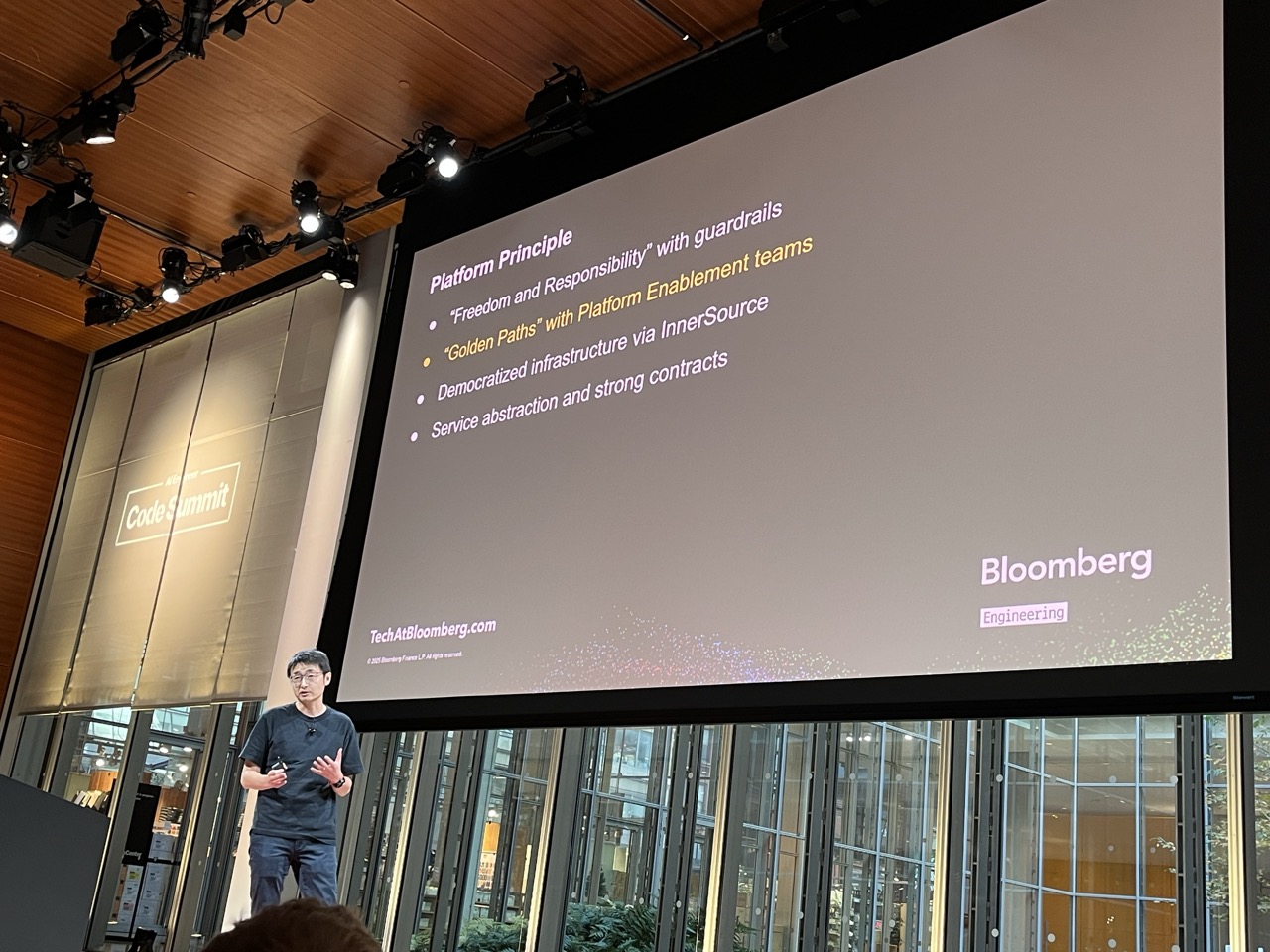

Bloomberg Principles:

- “Freedom and Responsibility” with guardrails

- “Golden Paths” with Platform Enablement

- Democratize infra via Inner Source

- Service abstraction and strong contracts

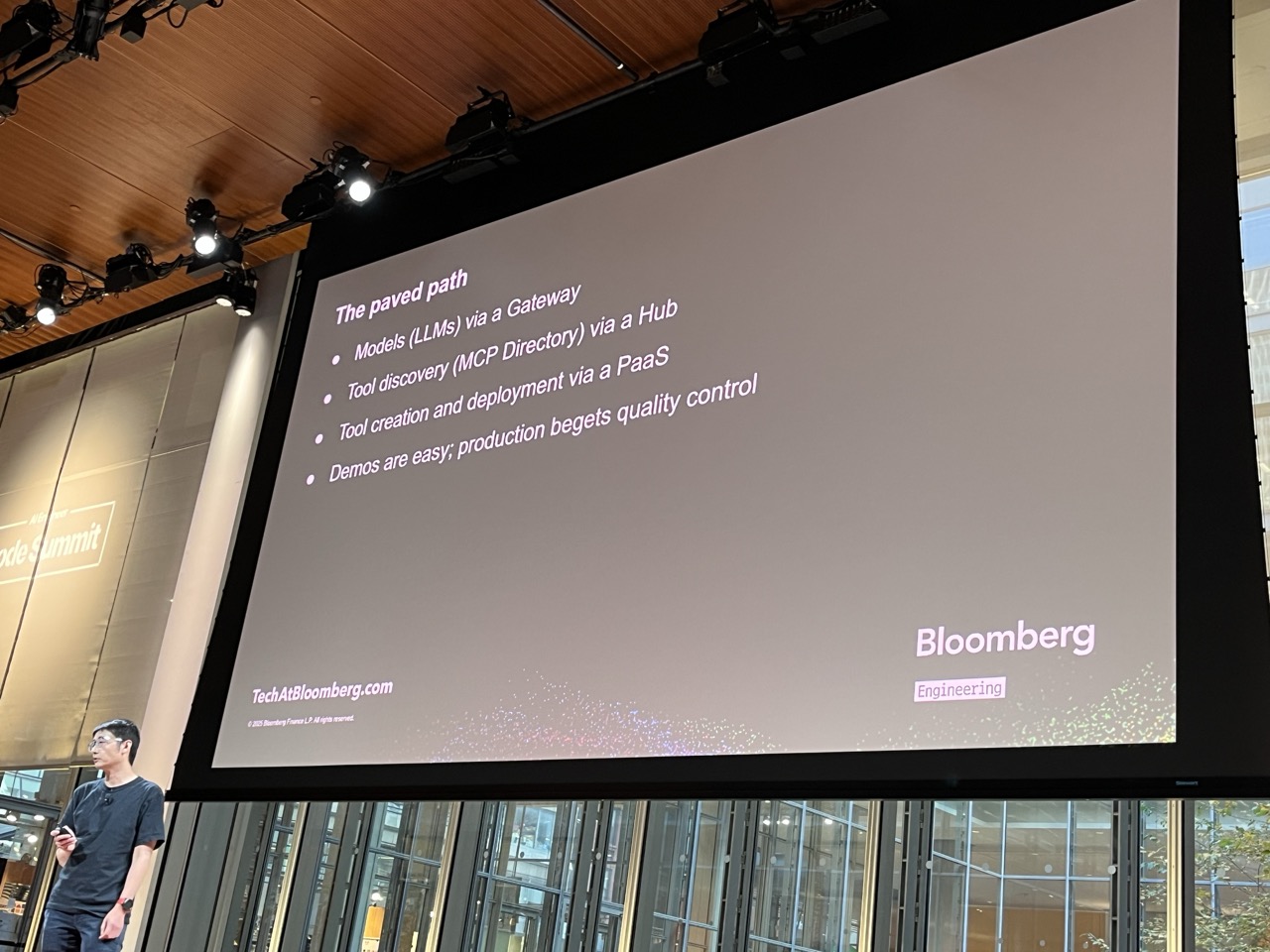

The paved path:

- Models via a gateway

- MCP directory via a hub

- Tool creation/deployment via PaaS

- Demos are easy; production requires QC

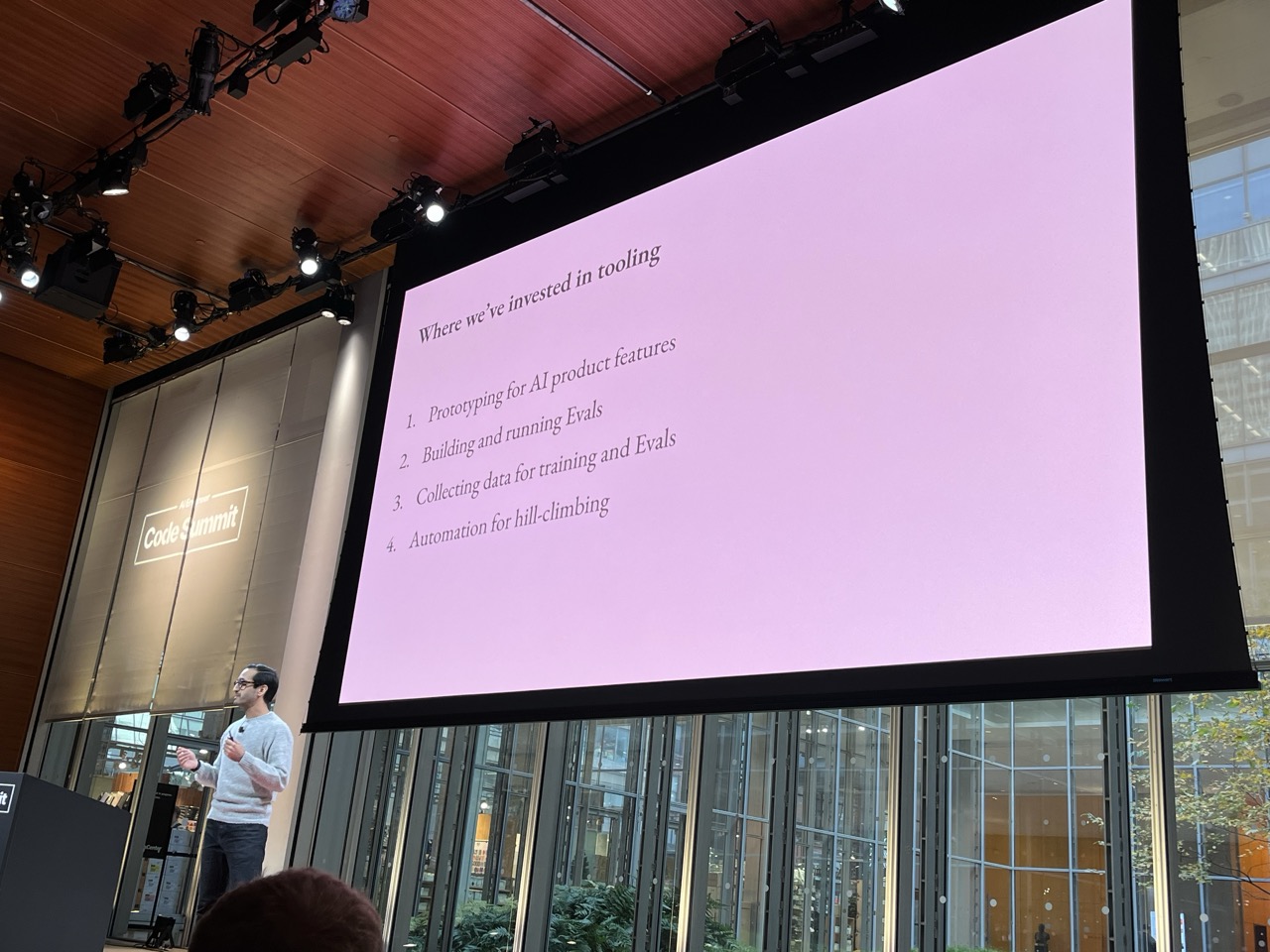

From Arc to Dia: Lessons learned in building AI Browser

Samir Mody / The Browser Company

Developer Experience in the Age of AI Coding Agents

Max Kanat-Alexander / Capital One

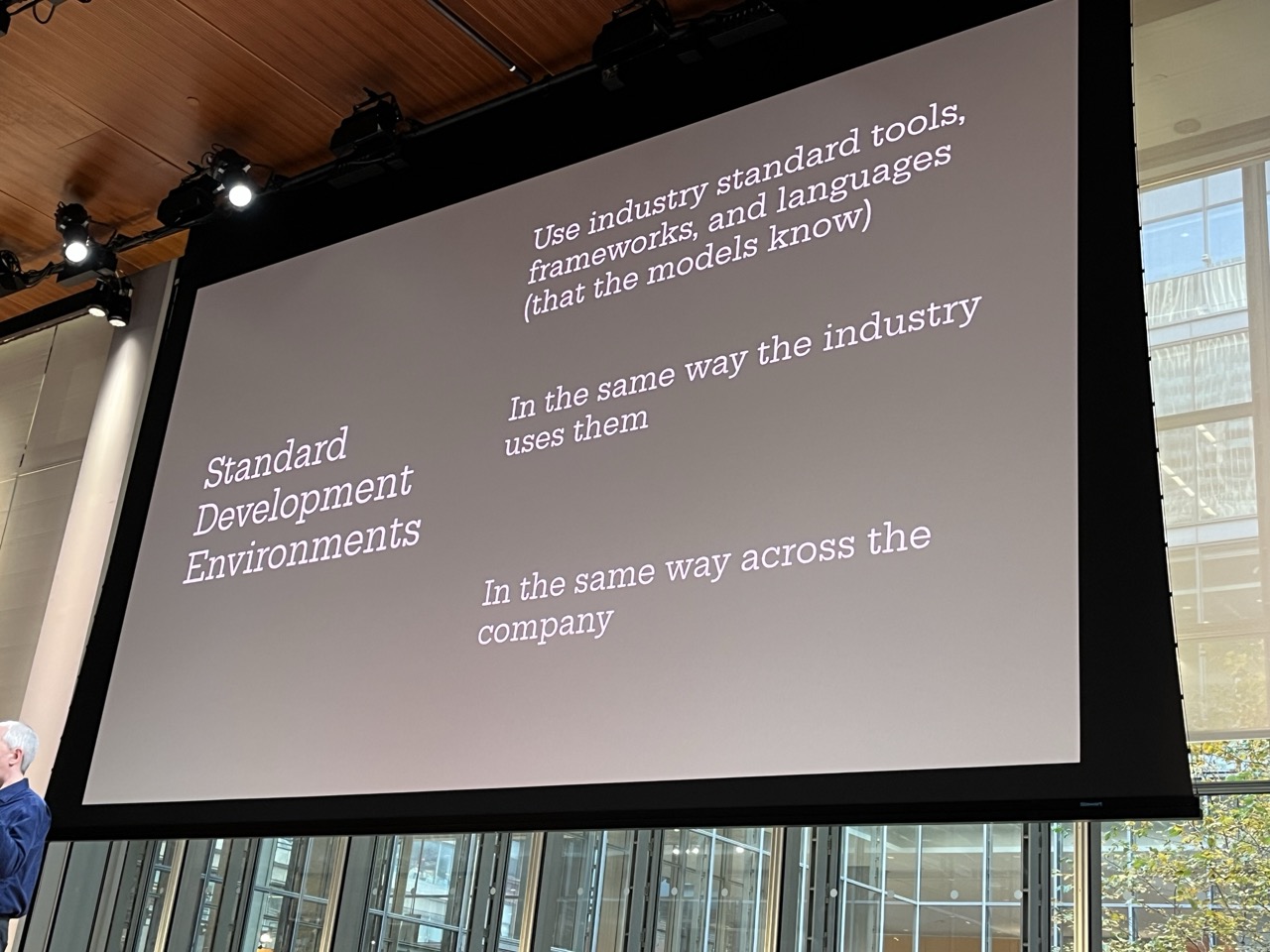

Future-proofing tools even as AI changes things.

Standardize development environments:

- frameworks - use them and use them the same way everyone else does

- and do it the same way across the company

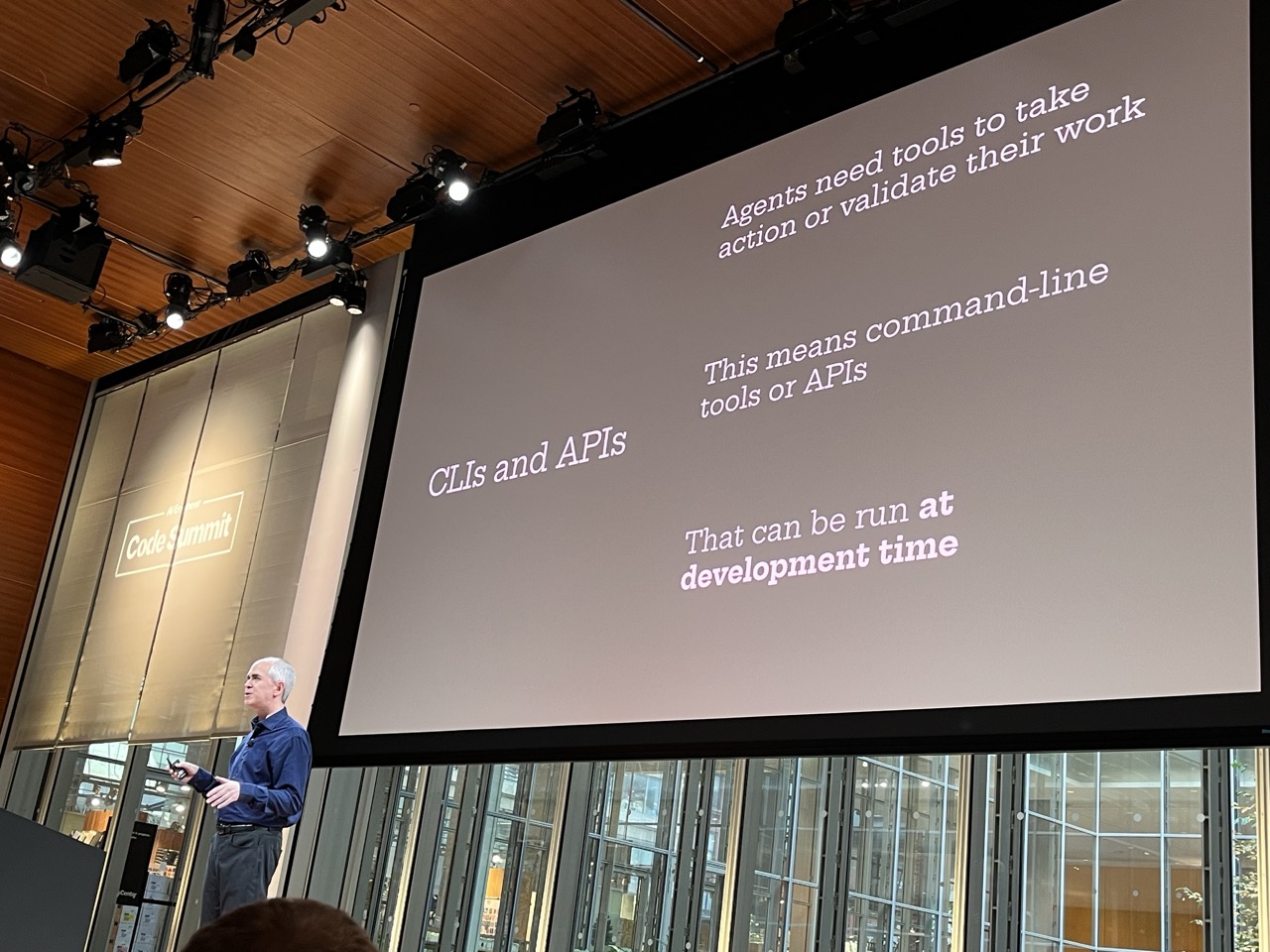

CLIs and APIs:

- make sure these things can talk to your systems!

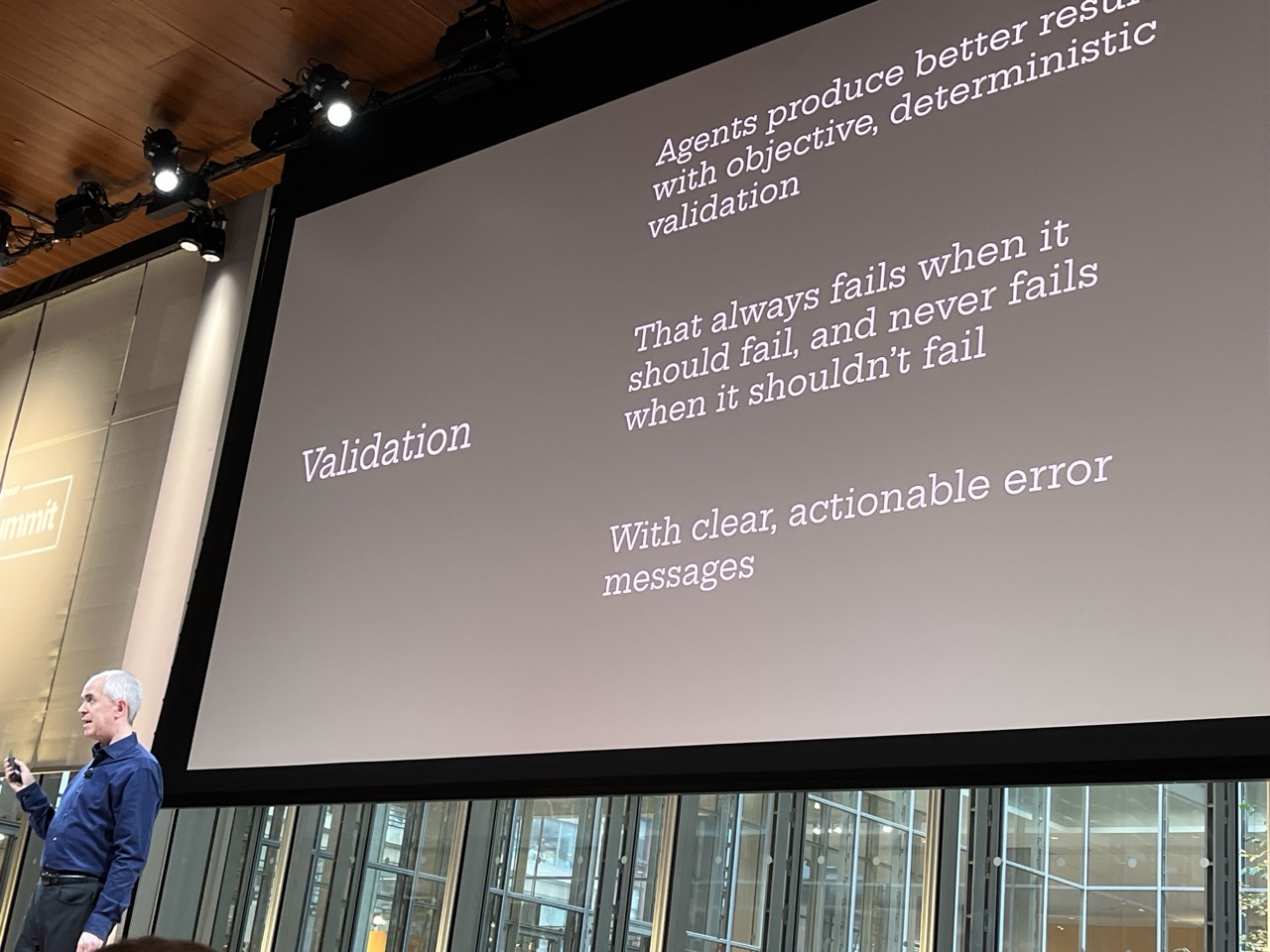

Validation Everywhere:

- deterministic control

- clear actionable error messages
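A small illustration of both bullets: a deterministic validator whose errors say what is wrong and how to fix it, which gives an agent something it can act on in its next step. The deploy config and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ValidationError:
    field: str
    problem: str
    fix: str  # the concrete next step, so an agent can act on it

    def __str__(self) -> str:
        return f"{self.field}: {self.problem}. Fix: {self.fix}"


ALLOWED_ENVS = {"dev", "staging", "prod"}


def validate_deploy_config(cfg: dict) -> list[ValidationError]:
    """Deterministic checks: the same config always yields the same errors, in the same order."""
    errors = []
    env = cfg.get("env")
    if env not in ALLOWED_ENVS:
        errors.append(ValidationError("env", f"got {env!r}",
                                      f"set env to one of {sorted(ALLOWED_ENVS)}"))
    replicas = cfg.get("replicas")
    # bool is a subclass of int in Python, so reject it explicitly
    if isinstance(replicas, bool) or not isinstance(replicas, int) or replicas < 1:
        errors.append(ValidationError("replicas", f"got {replicas!r}, expected a positive integer",
                                      "set replicas to an integer >= 1"))
    return errors
```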

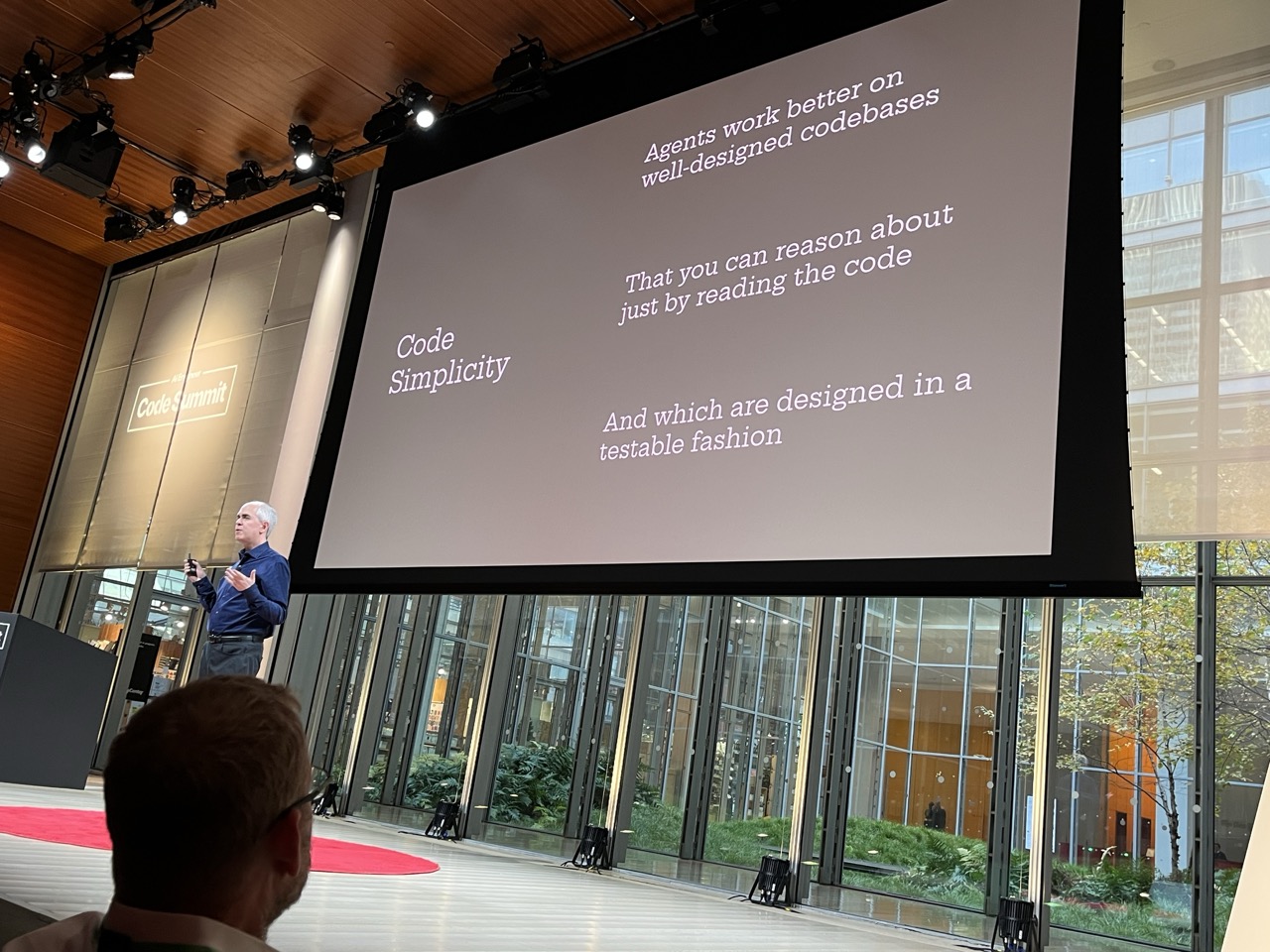

Code Simplicity:

- simple code is easier for agents to reason about, so they will produce better results

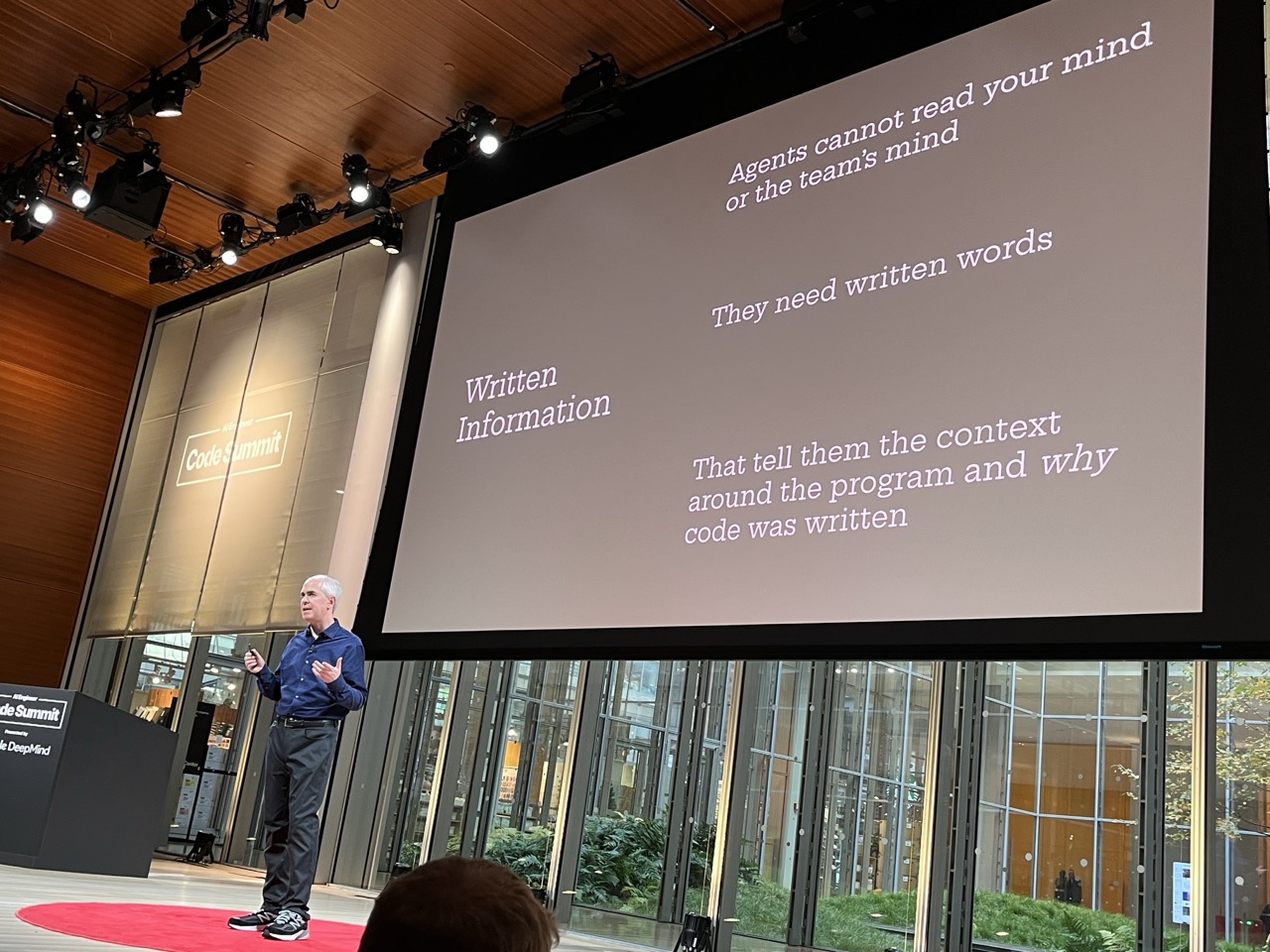

Written words:

- everything needs to be written down

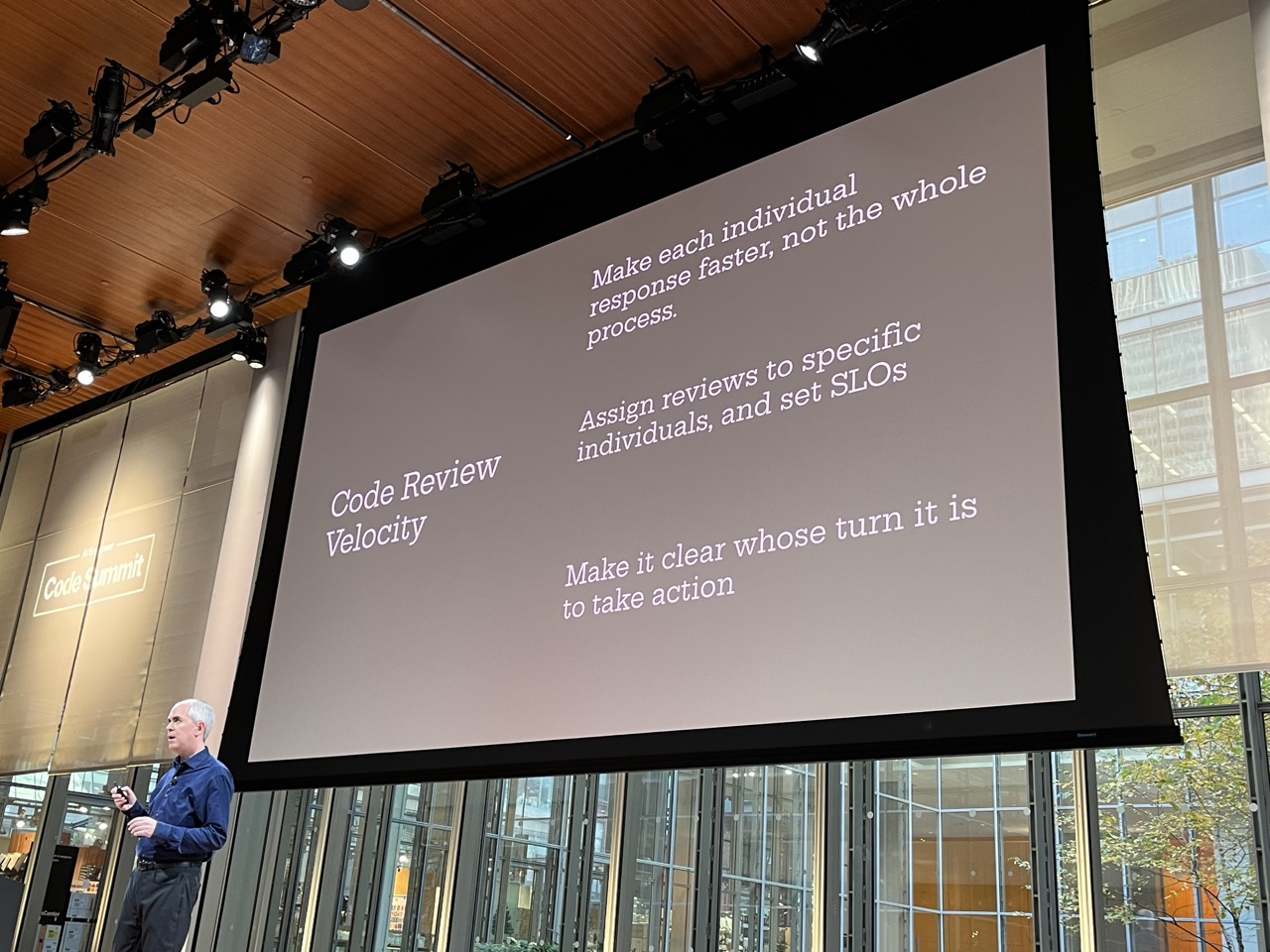

Code Review Velocity:

- assign specific reviewers, not a Slack channel or group

- make it clear whose turn it is to take action

Vicious vs virtuous cycle

AI Consulting in Practice

NLW / Super.ai

Paying Engineers like Salespeople: How Tenex Rebuilt the Incentive Stack for Modern Engineering

Arman Hezarkhani / Tenex

Pay engineers for story points. Incentivize them with direct cash.

Some on the team will earn $1M.

Successes across a number of clients/industries.

Leadership in AI-Assisted Engineering

Justin Reock / DX

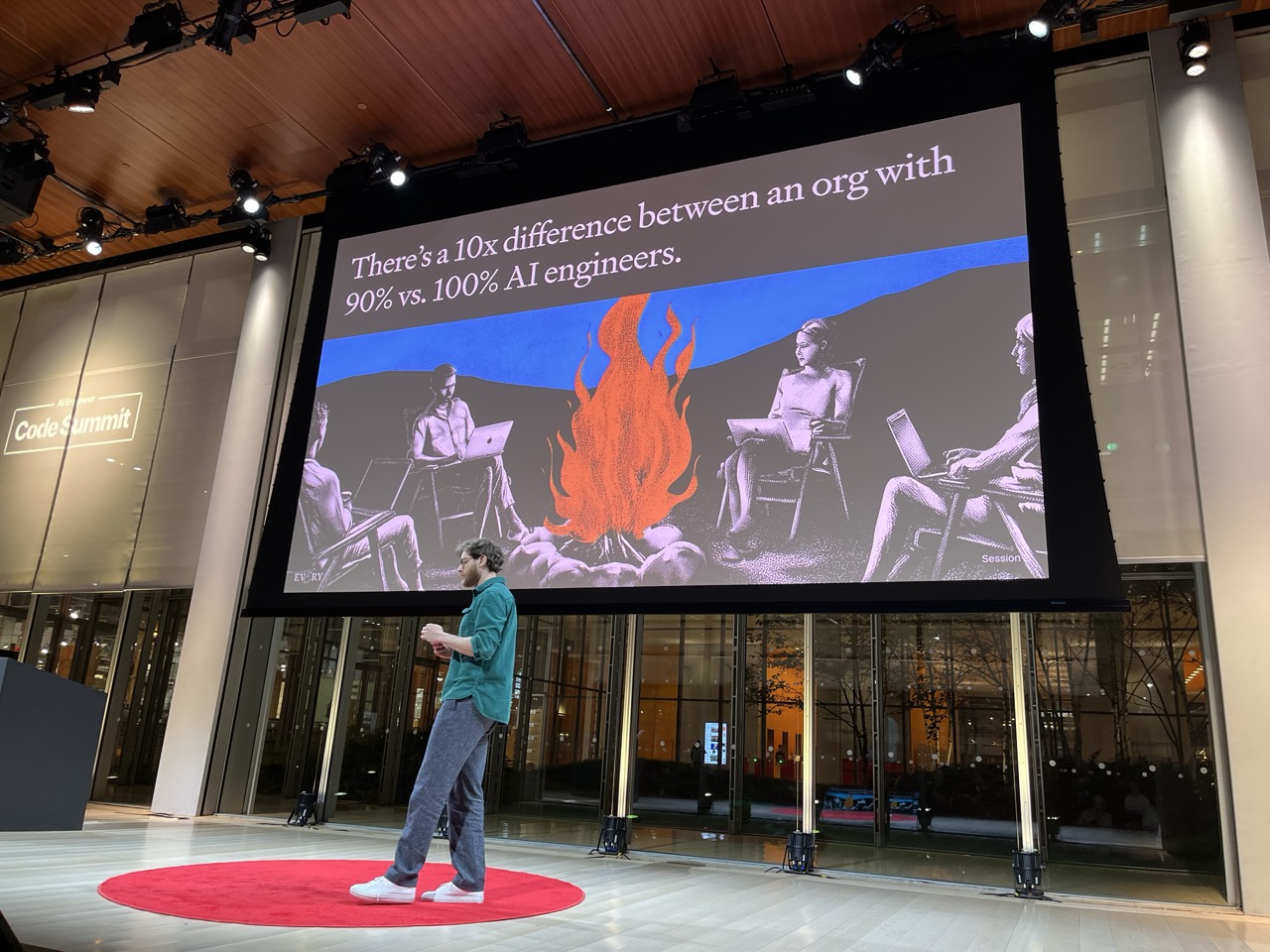

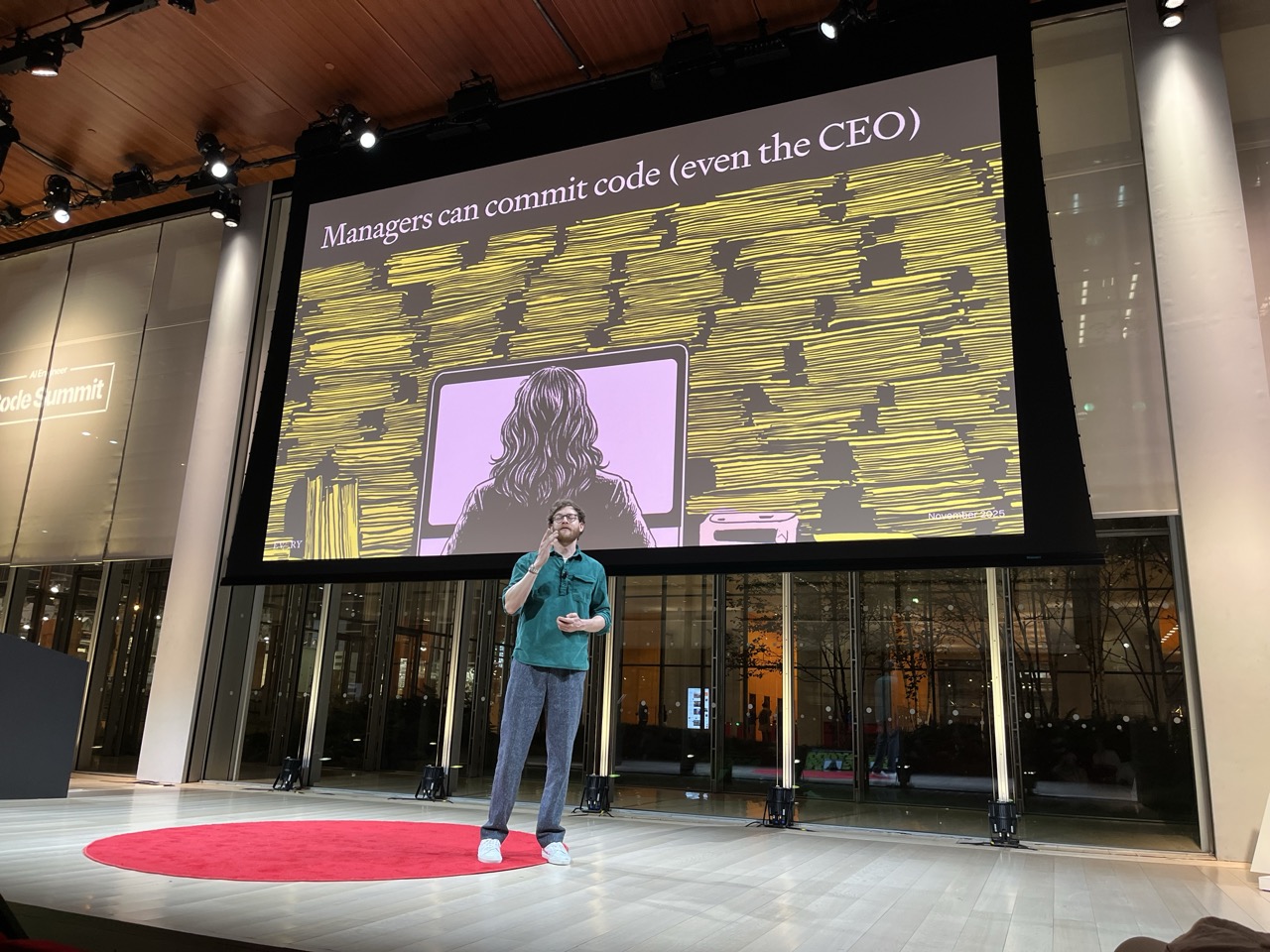

How to build an AI-native company (even if your company is 50 years old)

Dan Shipper / Every

There is no playbook for an AI company.

2-person teams.

A 15-person company with 6 apps and good ARR.

There is a 10x difference between everyone being “all-in” on AI and only 90% being all-in.

From written culture to a demo culture!

Compounding engineering:

- learning and sharing prompts as an organization

Steps:

- Plan

- Delegate

- Assess

- Codify ← this is the compounding
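The Codify step is what compounds: each lesson from Assess gets written into a shared file that every later prompt starts from. A hypothetical sketch; the file name, format, and helper names are assumptions for illustration, not Every's tooling.

```python
from pathlib import Path


def build_prompt(task: str, rules_file: Path) -> str:
    """Plan/Delegate: every new task starts from the team's accumulated rules."""
    rules = rules_file.read_text().strip() if rules_file.exists() else ""
    return f"{rules}\n\nTask: {task}" if rules else f"Task: {task}"


def codify(lesson: str, rules_file: Path) -> None:
    """Codify: append a lesson from the Assess step, skipping exact duplicates."""
    existing = rules_file.read_text().splitlines() if rules_file.exists() else []
    if lesson not in existing:
        with rules_file.open("a") as f:
            f.write(lesson + "\n")
```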

A single engineer should be able to build and maintain a complex, production product.

20251119 Opening Night

Networking Notes:

- Chatted with SWYX: He is planning on extending the Latent Space podcast with a science angle. Touch base with him next week re: guests

- Met Valentin Bercovici, Chief AI Officer at Weka. They released some new tools. Lots of life science customers. Wants to demo.

- Met the Jellyfish team: They are a startup doing observability for AI tools. Target engineering managers to view how well agentic tooling is delivering.

- Met guys from PropertyPilot: Chatted a bit about agentic tooling in their business. Their agentic experience allows the tools to compose bits of SQL but not at the SQL level - a bit higher up in template space.

- Met the Arize team: Fully loaded agent platform. Their goal is selling internal capabilities to enterprise customers. Lots of similarity to a node-graph platform, with a UI similar to n8n, although with quite a bit of customizability at the node level.

- Met the Modal team: They have been seeing an uptick in business ranging from occasional users to larger companies doing big inference. They have seen 2 new categories of use:

- LLM Inference - a bunch more people hosting their own models

- Code Execution in sandboxes

- I told him that I loved their user experience story but that Modal is not a fit for us because we have pre-existing GPU commits. I told him he could chat with more of the technical team at NeurIPS.

- Met Corey from Rexmore: An AI-native holding company that buys and builds businesses within verticals in Technology, Investments and Education. They buy companies and then run them for less using AI.

- Met Jesus from Studio 3 Marketing: They are using AI agents for HTML conversion, new client onboarding and other major internal tech projects.

- Met Gene Kim and Steve Yegge: Got a copy of their book Vibe Coding. Got a taste of Steve’s work at Sourcegraph on Amp. Not sure if there’s an experiment there, but he definitely has a much higher-level view of how agents will change and accelerate programming.

Steve Yegge blogs:

- Revenge of the Junior Developer

- The Death of the Junior Developer

- Cheese Wars: Rise of the Vibe Coder

Conference Photo Gallery