AI Engineering Code Summit Distilled

Organizations are rapidly assessing and adopting AI in their engineering practice. Some individuals are becoming insanely effective, which makes companies like Tenex possible: engineers there are paid based on story points delivered and so have a direct stake in product success. Similarly, Every has grown to millions of dollars in ARR with a small team in which each member has end-to-end ownership of an entire product application.

In their talk about their book Vibe Coding, Steve Yegge and Gene Kim pointed to many examples of companies embracing the speed and power that vibe coding/vibe engineering can provide, and they made a strong case that companies that embrace the zeitgeist will take market share from those that don’t.

At the same conference, however, a number of data points (the METR study, Stanford’s Software Engineering Study) showed that AI adoption leads to much higher variance in outcomes when measured in actual companies. The excitement was also tempered by senior engineers from large organizations (Bloomberg, Capital One) who are trying to increase the use of agents within their organizations in a more measured way.

Training and Designing Code Agents

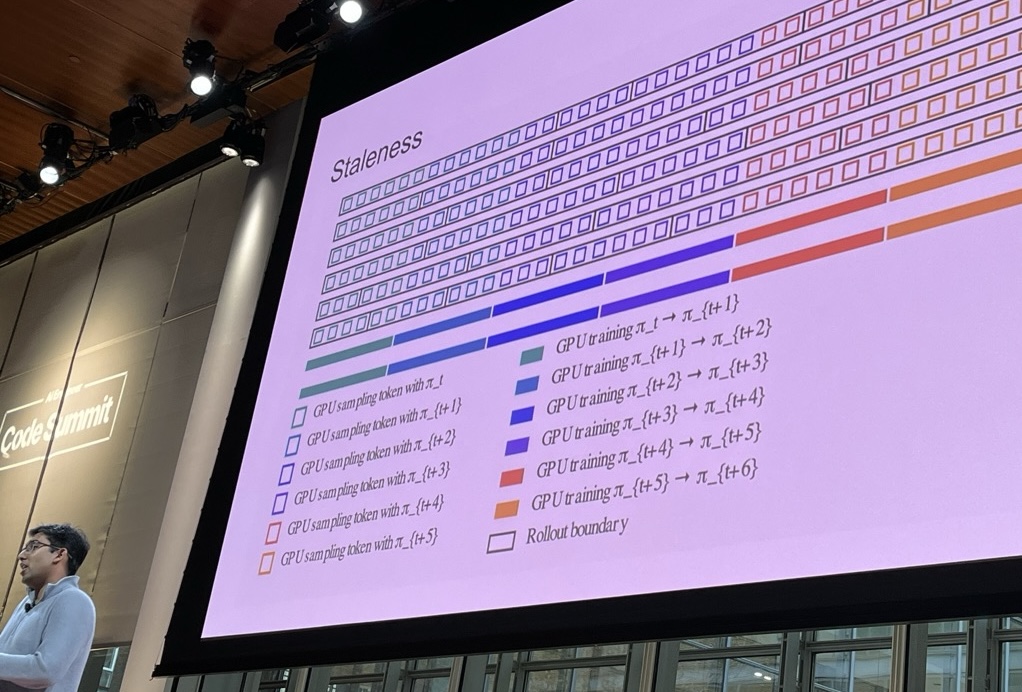

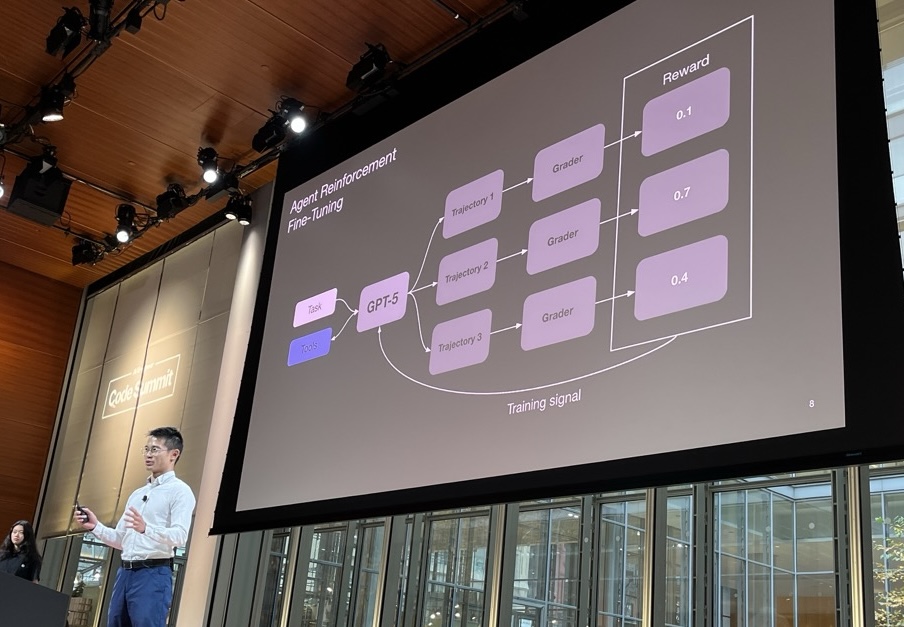

Most of the talks at the summit concerned software developers, and coding agents in particular. Software has the property of being verifiable and testable, which makes it a natural fit for RL, and we got some insight into how code agent labs like OpenAI (for Codex) and Cursor (for Composer) are gathering their data and training their models.

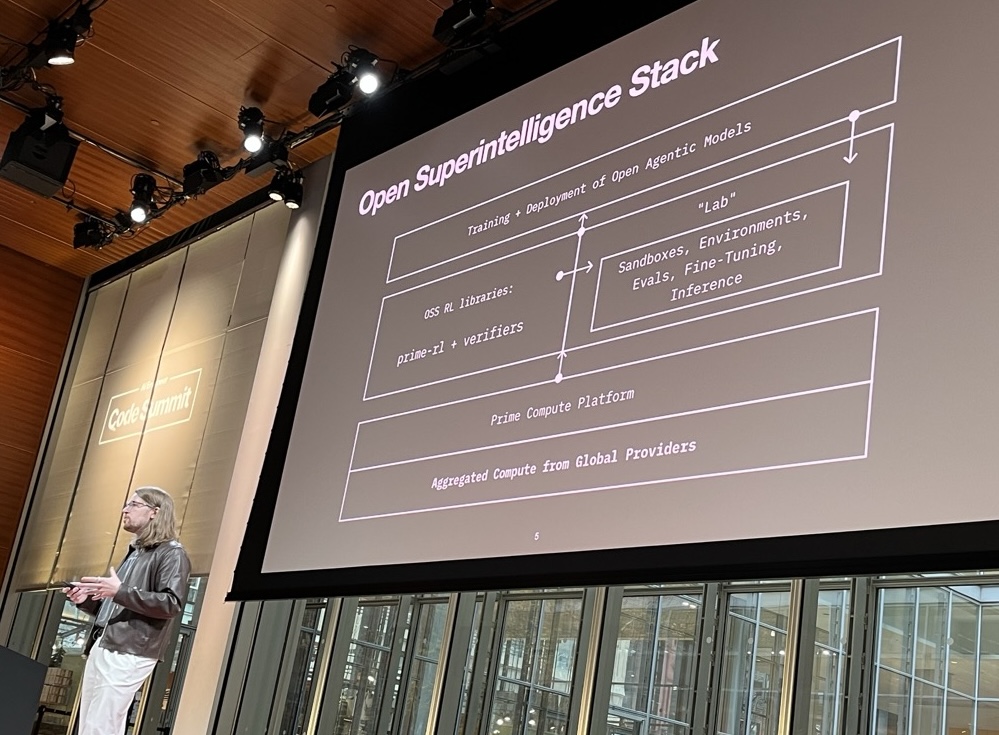

The scale of the data they are collecting is impressive, but the founder of Cline announced cline-bench, an effort to capture similar data and make it available for improving future open source models. Efforts like this are made easier by RL environment companies such as Prime Intellect, which provide standardized platforms for evaluation, and by Applied Compute, which wants to train models on YOUR data: internal RL for hire, if you will.

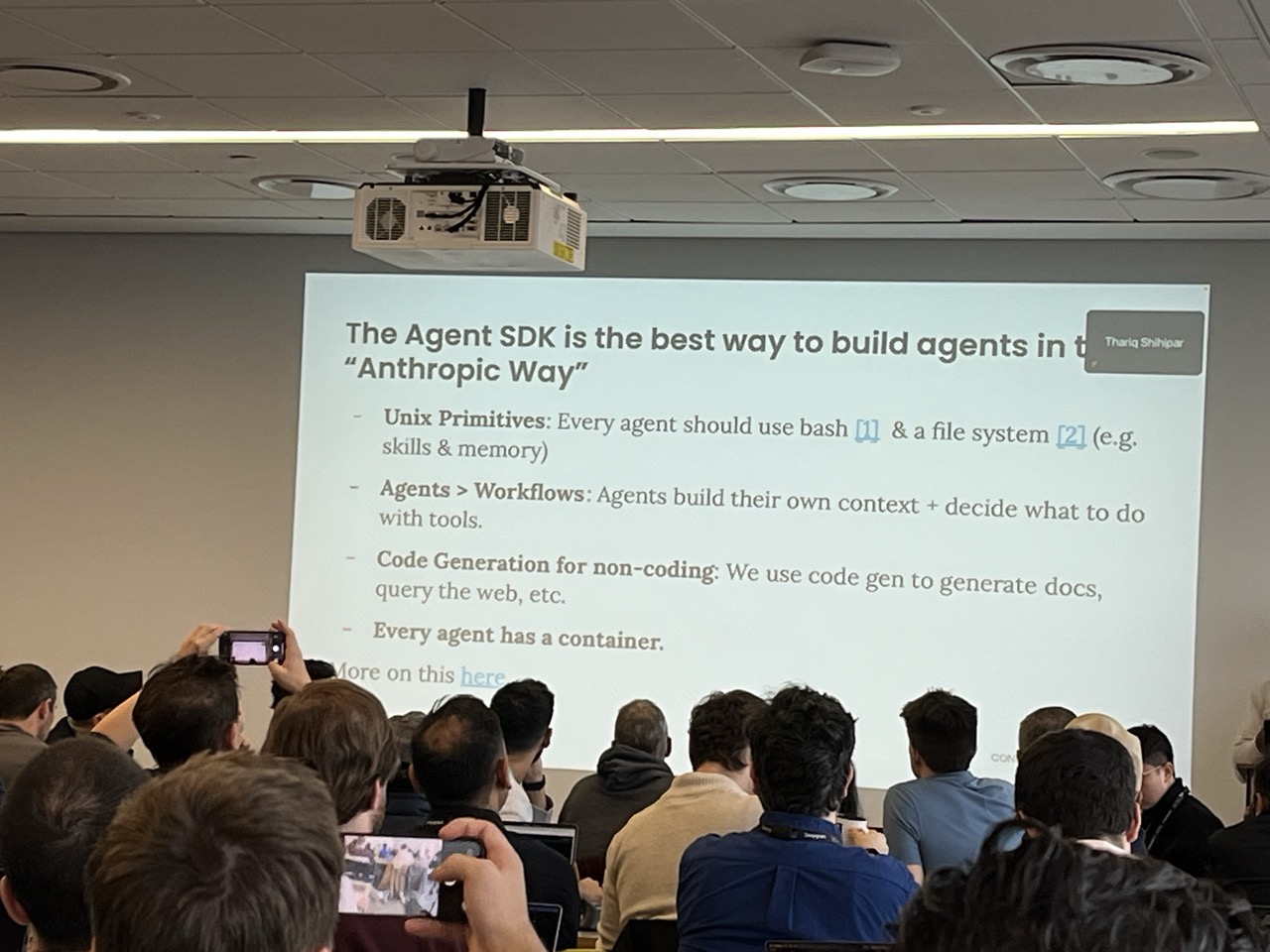

It’s an exciting time in the RL field as many of the techniques and tools are getting standardized and democratized for reuse. The models are getting better, but so is the broader “feel” of using them. We saw a few different approaches:

- GPT Codex - Aiming for an SDK that wraps their model, which is trained for tool use and coding tasks.

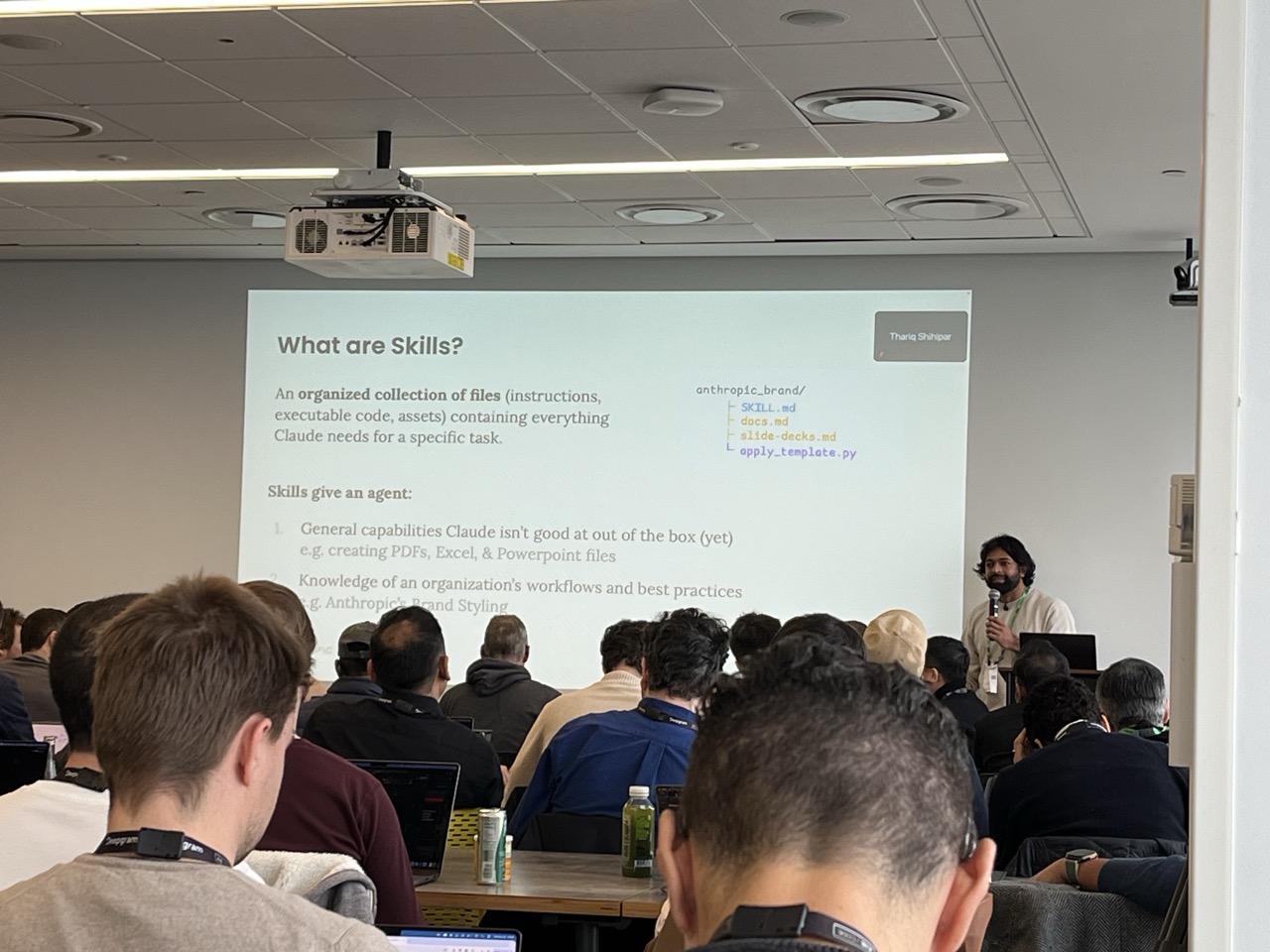

- Claude Code + Skills - Claude Code is “the OS” and Skills are the “Apps” that give Claude the domain knowledge.

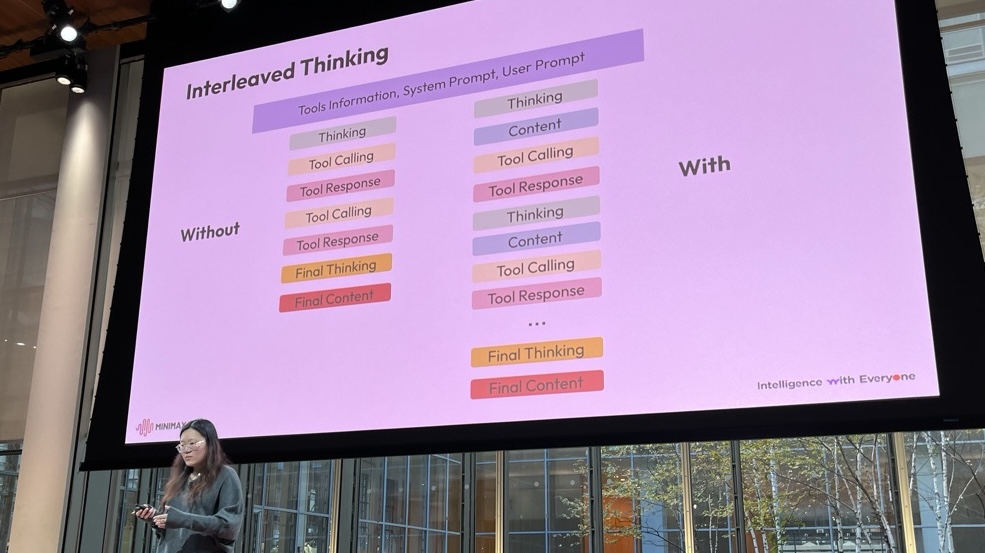

- Minimax 2 - Small model that competes with big models on most benchmarks including tool use.

- Amp - Opinionated delegation to subagents, optimizing for the overall feel and speed of coding. There are 4 main subagents, each good at a different kind of task, and the team is working to make them cooperate and to swap out pieces (models, tools, etc.) behind the scenes so the experience stays smooth, fast, and powerful.

- Amazon Kiro - An agent that pushes a structured, spec-driven approach, emphasizing planning and a written spec before diving into code.

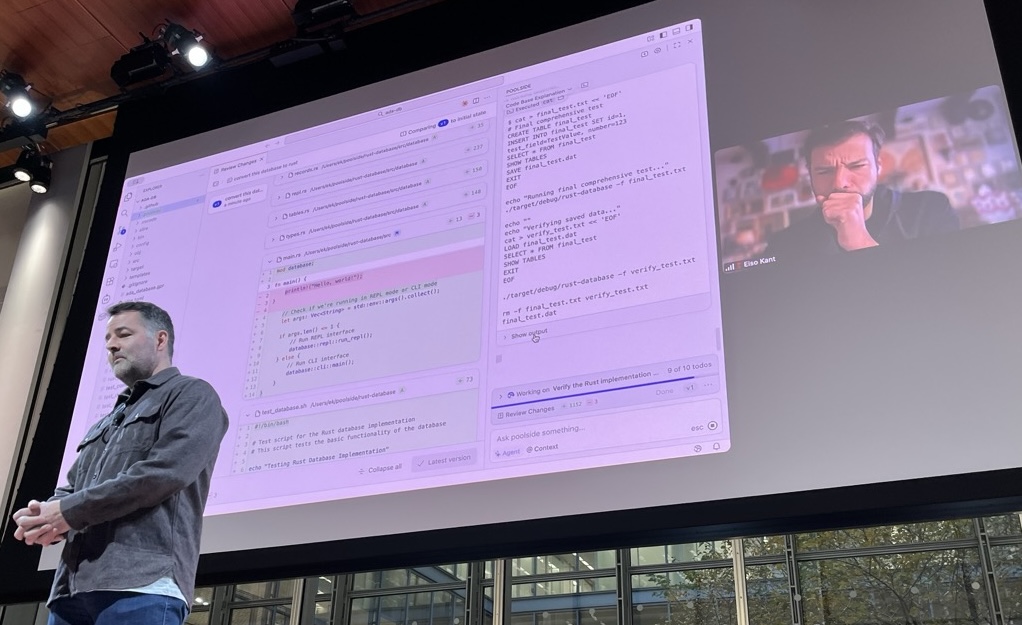

- Poolside - Proprietary model trained in secret and mostly used by the defense industry (?). Very fast in the demo.

- Beads - Not an agent per se, but a Steve Yegge invention worth trying: it handles memory and issue tracking so your agent can be much better at its job.

The overall trend: RL on good data can produce a really good model, and now a lot of innovation is going into engineering the entire coding experience so that agents cover broader use cases, use memory, work effectively with more autonomy, etc. It’s a very fertile time.

Agentic Code Across the Software Development Lifecycle

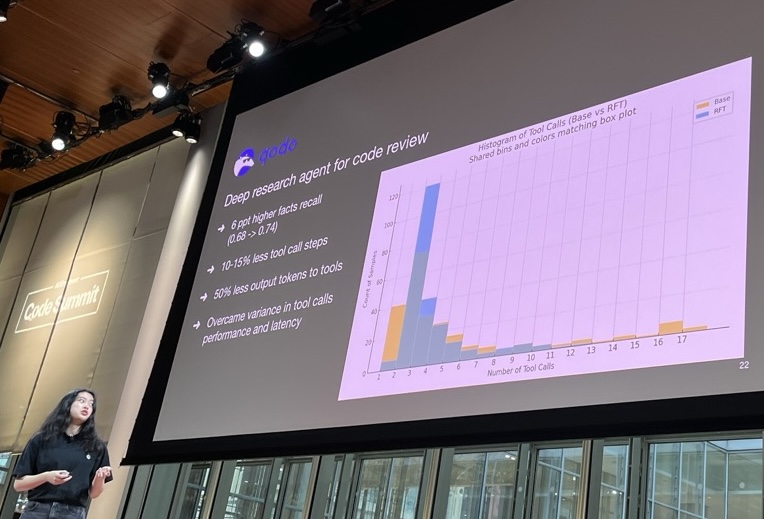

The CEO of Qodo gave a great talk that presented code generation as the tip of an iceberg whose submerged bulk is Code Integrity, which includes “review”, “standards”, “compliance”, “maintainability”, “reliability”, and “testing”.

Without deliberate effort to keep your code quality high, you risk entering a vicious cycle in which code quality decays, developer experience decays, and at some point your code becomes unmaintainable. This was a common refrain from a number of speakers, and Qodo is one of several companies attacking this problem at code review; the others include CodeRabbit, Greptile, and Baz.

One of Qodo’s claimed differentiators is a context engine that, when reviewing your code, gathers the relevant code and docs from across your organization in order to give a good critique and to TEACH YOU, both reviewer and reviewee! In other words, the technical solution of a code review agent is meant to solve a SOCIAL problem: wild amounts of code are being written and no one can keep on top of it all. The goal is to restore long-term agency to the human coder where it is relevant.

Max Kanat-Alexander also covered this human element well, describing what coders can do to future-proof their systems in the age of AI, no matter which tool they use. His recommendations are worth enumerating:

- Standardize your development environments

- Make CLIs and APIs available at dev time

- Improve deterministic validation (e.g., types/tests/linters)

- Refactor for testability and ease of reasoning

- Write down external context and intentions (aside: company-specific docs and standards can be exposed via MCP)

- Make each code review response faster

- Raise the bar on code review

These are excellent practices at the individual level. There were also a number of suggestions on how to manage AI code one step higher, at the systems level. Lei Zhang distilled his suggestions for creating a “paved path” for Bloomberg’s 9,000 engineers as follows:

- Models (LLMs) via a Gateway

- MCPs via a Hub

- Tool creation via PaaS

- Demos are easy; production demands quality control
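To make the “Models via a Gateway” idea concrete, here is a minimal Python sketch of what a gateway buys you: one entry point that checks which team may use which model and records every call. The `ModelGateway` class, its methods, and the team/model allowlist are hypothetical illustrations, not Bloomberg’s design; a real gateway would wrap provider HTTP APIs rather than plain functions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

# Hypothetical: a backend is any function from prompt to completion. A real
# gateway would wrap HTTP calls to model providers here.
Backend = Callable[[str], str]


@dataclass
class ModelGateway:
    """Single entry point for model calls (hypothetical sketch)."""

    backends: Dict[str, Backend] = field(default_factory=dict)
    allowlist: Dict[str, Set[str]] = field(default_factory=dict)  # team -> models
    audit_log: List[dict] = field(default_factory=list)

    def register(self, model: str, backend: Backend) -> None:
        self.backends[model] = backend

    def allow(self, team: str, model: str) -> None:
        self.allowlist.setdefault(team, set()).add(model)

    def complete(self, team: str, model: str, prompt: str) -> str:
        # Policy is enforced in one place instead of in every team's code.
        if model not in self.allowlist.get(team, set()):
            raise PermissionError(f"team {team!r} may not use model {model!r}")
        if model not in self.backends:
            raise KeyError(f"no backend registered for model {model!r}")
        response = self.backends[model](prompt)
        # Every call is recorded centrally, which later enables usage audits.
        self.audit_log.append(
            {"team": team, "model": model, "prompt_chars": len(prompt)}
        )
        return response
```

With this shape, swapping a provider means re-registering one backend rather than touching every team’s code, and the audit log is a natural starting point for the trace capture discussed later.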

At a high level, these two talks were linked by:

- Creating cultural practices that avoid AI-codegen-induced decay by intentionally raising the quality bar, and

- Providing centralized APIs and infrastructure so that agent developers have a safe, managed runtime to program against.

Throughout these talks, I liked the idea that we need to learn at the individual level as well as at the system level.

Case Studies and Implementation Examples

Rocket Mortgage

Rocket has been working to tighten the product/dev loop in feature delivery. An architect wanted to change a feature within the mortgage calculator and enlisted a PM and a tech lead to try a new process:

- PM draws the feature and writes out the delivery requirements

- Architect reviews and converts the written spec to a document within the repo

- Developer works off the product spec in the repo with AI coding assistance

It worked fantastically, and it started a new working relationship in which the product team keeps its specifications in Git. Product doc discussions happen in GitHub PRs, just like discussions about any other code. Once the spec is agreed on, the engineer implements it. The process has now been adopted across multiple teams: it speeds feature delivery, and the history of the product now also lives as text within the repo.

From a discussion with a Senior Architect at Rocket.

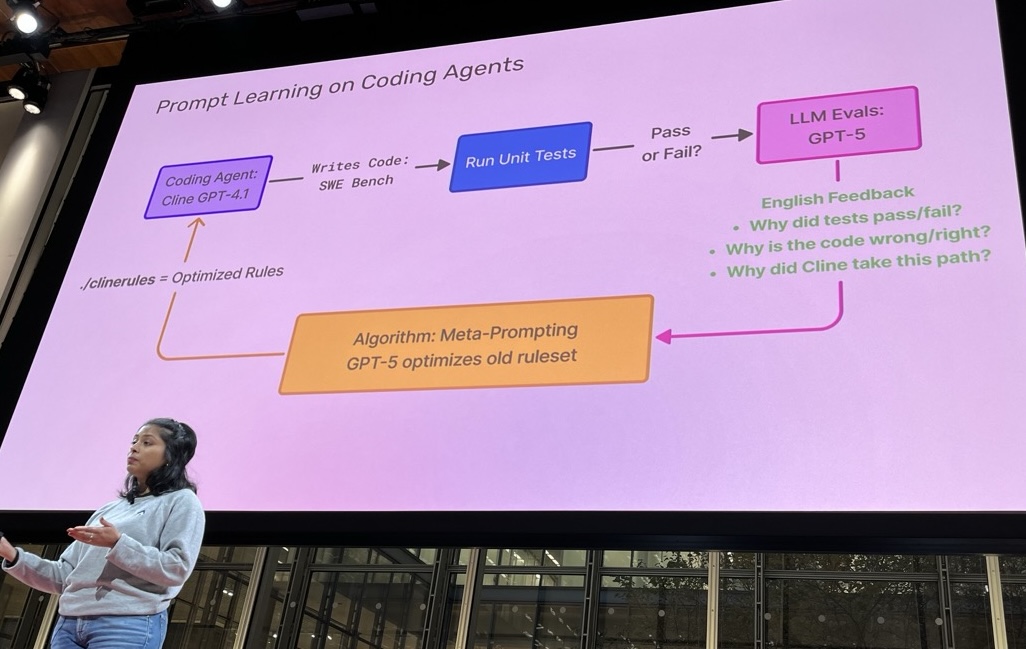

Prompt Learning

One of the interesting talks was by the CEO of Arize. She presented how they used prompt engineering to bring Cline + GPT within striking distance of Claude Sonnet (similar performance for 2/3 of the price). Basically, you set up a bunch of evals and use them to measure whether the results improve when the prompt is updated. This is a really lightweight form of learning and should be applicable to all types of agents, not just those related to code.
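The measure-and-compare core of that loop can be sketched in a few lines of Python: score each candidate prompt against a fixed set of evals and keep the best one. This is a minimal sketch, not Arize’s implementation; the agent is a stand-in function, and `EvalCase`, `score_prompt`, and `best_prompt` are hypothetical names. In a fuller setup, new candidate prompts would be generated from the eval failures and the loop repeated.

```python
from typing import Callable, List, Tuple

# Hypothetical types: an eval case pairs a task with a pass/fail checker,
# and an agent maps (system_prompt, task) -> output.
EvalCase = Tuple[str, Callable[[str], bool]]
Agent = Callable[[str, str], str]


def score_prompt(agent: Agent, prompt: str, evals: List[EvalCase]) -> float:
    """Fraction of eval cases whose output passes its checker."""
    passed = sum(1 for task, check in evals if check(agent(prompt, task)))
    return passed / len(evals)


def best_prompt(agent: Agent, candidates: List[str],
                evals: List[EvalCase]) -> Tuple[str, float]:
    """Score every candidate prompt; return the best (prompt, score) pair."""
    scored = [(p, score_prompt(agent, p, evals)) for p in candidates]
    # max() keeps the first candidate among ties, so list order encodes preference.
    return max(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Toy stand-in for a real agent: it only follows the "shout" instruction.
    def toy_agent(prompt: str, task: str) -> str:
        return task.upper() if "shout" in prompt else task

    evals = [("hi", lambda out: out == "HI"), ("ok", lambda out: out == "OK")]
    print(best_prompt(toy_agent, ["be calm", "shout your answer"], evals))
    # → ('shout your answer', 1.0)
```

The important design choice is that the evals stay fixed while the prompt changes, so score differences can be attributed to the prompt rather than to a moving target.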

I think this is something we could consider introducing, but it requires both technical and social elements:

Technical requirements:

- We need to define objective evaluations of success, ideally hundreds of them

- We need/want trace observability (are we doing this for our non-RL training envs?)

- Ideally, we route all of our agentic queries through a common portal

Social requirements:

- Commitment to revisit the questions, the evals, and the outputs at a regular cadence

- Commitment to updating all of the above to improve output

It would be great to do this across the entire company, so that we can centralize, monitor, and improve our agentic processes for all users.

The Goose Desktop Agent

For some time I have been on the lookout for a GUI agent that runs locally and also supports data visualization. A few months back I discovered Goose, and it fit the bill. I used it to teach several of our new scientists how to query our databases in natural language, and it took only a few minutes to set up and get someone productive.

So I was predisposed to like Goose when I met the Goose team at AIE, and what I learned there dramatically increased my appreciation of Goose and my estimate of its potential, both as a tool within Lila and as a tool for our Forward Deployed Engineers as we engage clients. Here is what I learned:

- Although Goose is an open source project, its core team are seasoned software engineers at Block

- Every person within Block has Goose installed (i.e., thousands of daily users within a corporate environment)

- Block’s internal IT department does the following:

  - Preconfigures LLM endpoints

  - Preconfigures MCP availability

  - Preconfigures the corporate identity provider

  - Preconfigures a COMMON recipe directory for agents that anyone in the company can use

This is amazing to me because it means that there is a way to create a rock-solid, extensible agent-focused desktop application that is:

- Easy to use - All configs are baked in at build time; users just use it

- Already maintained - Battle-tested by thousands of people, with dedicated staff devoted to its long-term maintenance

- Highly configurable for roles - Builds could disallow MCPs or local code execution OR they could contain additional role-specific capabilities

- Brandable - Perfect for applications that are installed on customer machines. We could imagine creating custom applications for a client that are preconfigured specifically for them (e.g., with endpoints for the Lila Model as the deep thinker and one or more MCPs that expose customer-specific actions)

- Extensible - Unless we are targeting our clients’ coders, we will want to extend or customize their experience in non-coding ways

- Centrally learnable - In the Prompt Learning section above I discussed how, over time, you want to capture your agentic traces so you can learn from them. Routing all of your agent calls through one place AND having a central recipe repository is one foundation for such a virtuous cycle
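As an illustration of what “centrally learnable” could look like, here is a minimal Python sketch that appends every agent run to a JSON Lines file, tagged with user and recipe, and computes per-recipe success rates from the runs that have been labeled. The `TraceLog` class and its fields are hypothetical; this is not how Goose or Block records traces.

```python
import json
from collections import defaultdict
from pathlib import Path
from typing import Dict, Optional


class TraceLog:
    """Hypothetical append-only JSON Lines log of agent runs."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def record(self, user: str, recipe: str, prompt: str, output: str,
               success: Optional[bool] = None) -> None:
        # success stays None until someone (or some eval) labels the run.
        entry = {"user": user, "recipe": recipe, "prompt": prompt,
                 "output": output, "success": success}
        with self.path.open("a", encoding="utf-8") as f:
            f.write(json.dumps(entry) + "\n")

    def success_rate_by_recipe(self) -> Dict[str, float]:
        """Share of labeled runs per recipe that were marked successful."""
        if not self.path.exists():
            return {}
        totals: Dict[str, int] = defaultdict(int)
        wins: Dict[str, int] = defaultdict(int)
        with self.path.open(encoding="utf-8") as f:
            for line in f:
                entry = json.loads(line)
                if entry["success"] is None:
                    continue  # unlabeled runs are skipped
                totals[entry["recipe"]] += 1
                wins[entry["recipe"]] += int(entry["success"])
        return {recipe: wins[recipe] / totals[recipe] for recipe in totals}
```

Keying traces by recipe is what makes the shared recipe directory useful for learning: a recipe with a falling success rate is an obvious candidate for the eval-driven prompt updates described above.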

I had some great chats with the Goose technical staff at AIE, and I will be meeting one of their DevRel engineers to learn more about Block’s internal release process. They are interested in exploring an open source repo that would demonstrate how to generate a custom, preconfigured build.

High Level Pictures

Codex

Emphasis on fitting a "harness" to a model.

Cursor

Holy moly these guys are good. Scary good.

Minimax2

The best model no one in the audience had heard of. Big adoption as measured by Hugging Face downloads. Excellent performance on tooling benchmarks.

Prime Intellect

A platform for environments, benchmarks, and RL. AI research for the masses.

Applied Compute

Cracked team making RL cheap and fast so they can do it inside companies on their private data. Imagine Merck biocatalysis data or Pfizer's medchem archive.

Cline

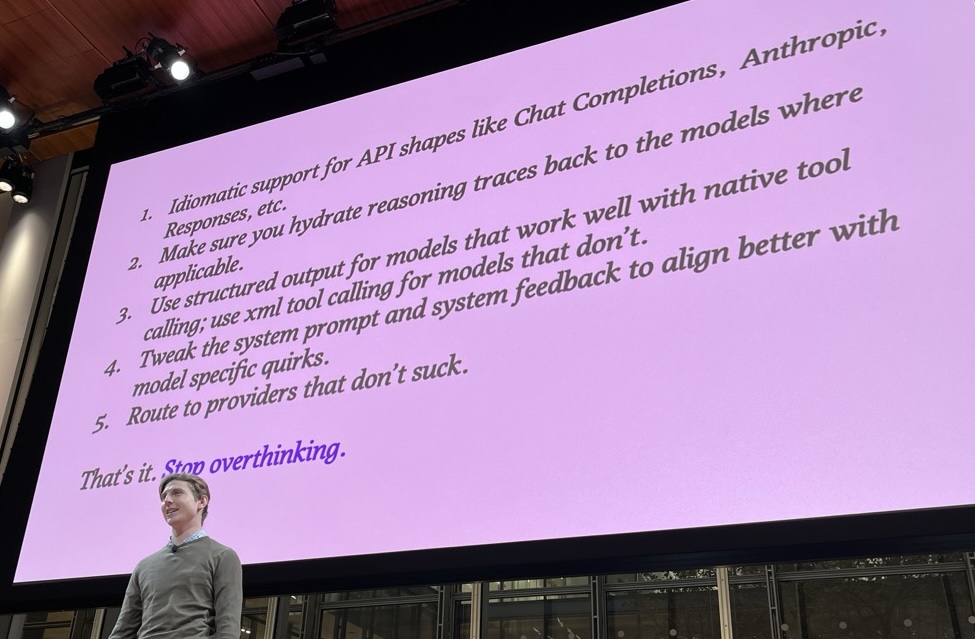

Don't overthink coding agents. Just get a good model and good real usage data and let RL work its magic. Introduced cline-bench.

Gimlet Labs

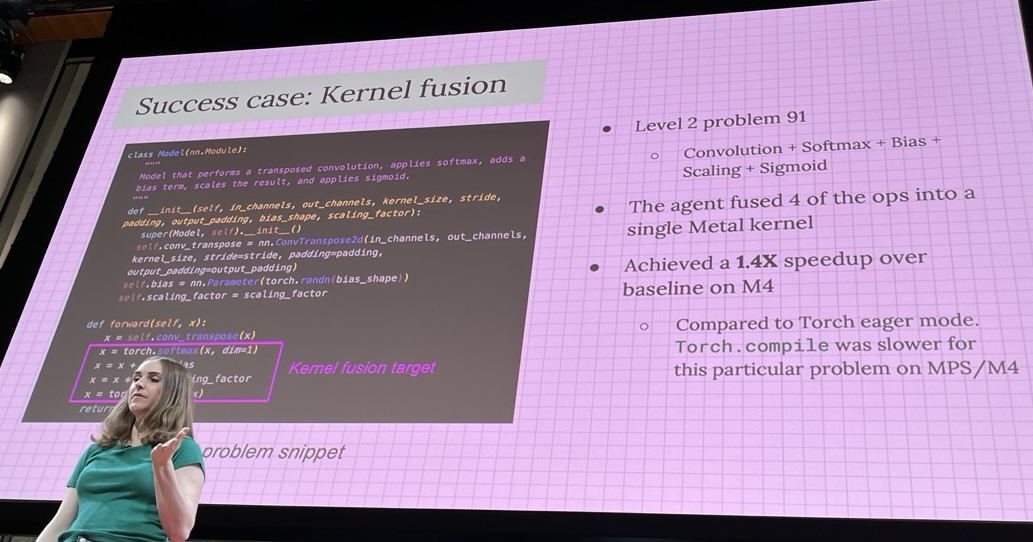

Agents to make fast GPU kernels.

Poolside

AI company you never heard of with a sick model and lots of military contracts. Coming out of stealth.

Arize AI

Enterprise agents. Optimize your prompts!

OpenAI AgentRL

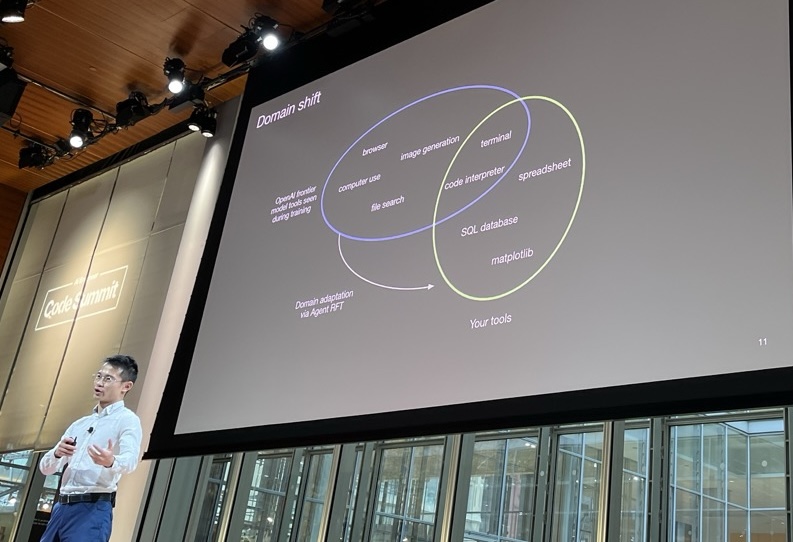

Environment drift is your enemy! A mismatch between the training env and the usage env is a potential source of pain.
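One cheap guard against drift is to diff a manifest of the training environment against the environment the agent actually runs in. The sketch below assumes each environment can be summarized as a hypothetical `name -> version` mapping of tools and packages; `env_drift` is an illustrative helper, not an OpenAI tool, and it flags anything added, missing, or at a different version.

```python
from typing import Dict, List


def env_drift(train_env: Dict[str, str], usage_env: Dict[str, str]) -> List[str]:
    """List differences between two environment manifests (name -> version)."""
    issues: List[str] = []
    # Sorted union of names so the report is deterministic.
    for name in sorted(set(train_env) | set(usage_env)):
        trained, used = train_env.get(name), usage_env.get(name)
        if trained is None:
            issues.append(f"{name}: present at usage ({used}) but not in training")
        elif used is None:
            issues.append(f"{name}: seen in training ({trained}) but missing at usage")
        elif trained != used:
            issues.append(f"{name}: {trained} in training vs {used} at usage")
    return issues
```

An empty list means the two manifests agree; any entry is a place where the agent may meet tools or behavior it was never trained on.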

OpenAI AgentRL - Tool Use

Tool use in code review optimized for Qodo. Note: special training for special agent.

OpenAI AgentRL - Rules of Thumb

Important rules of thumb that came up over and over: consistent environments and unhackable rewards/verifiers.
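To illustrate what a harder-to-game verifier might look like for code, here is a minimal Python sketch: the candidate solution runs in a separate process against hidden tests the agent never sees, and the reward is 1.0 only if the tests pass and a per-run random token is printed at the end, so exiting early with status 0 earns nothing. The `verify` function is hypothetical, not OpenAI’s setup, and it is not truly unhackable: code running in the same process could still, for example, read the script file to find the token, which is why real RL environments add stronger sandboxing.

```python
import secrets
import subprocess
import sys
import tempfile
from pathlib import Path


def verify(candidate_source: str, hidden_tests: str, timeout_s: float = 10.0) -> float:
    """Hypothetical binary reward: 1.0 if the hidden tests pass, else 0.0."""
    # Fresh random token per run; it is printed only after every test has run.
    token = secrets.token_hex(16)
    script_text = f"{candidate_source}\n\n{hidden_tests}\nprint({token!r})\n"
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "check.py"
        script.write_text(script_text, encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                text=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # hanging solutions earn nothing
    # Exit code 0 alone is not enough: sys.exit(0) before the tests would fake it.
    passed = result.returncode == 0 and token in result.stdout
    return 1.0 if passed else 0.0
```

For example, `verify("def add(a, b):\n    return a + b\n", "assert add(2, 3) == 5\n")` should return 1.0, while a candidate that simply calls `sys.exit(0)` gets 0.0.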

Skills - Tools

Tools are good but...

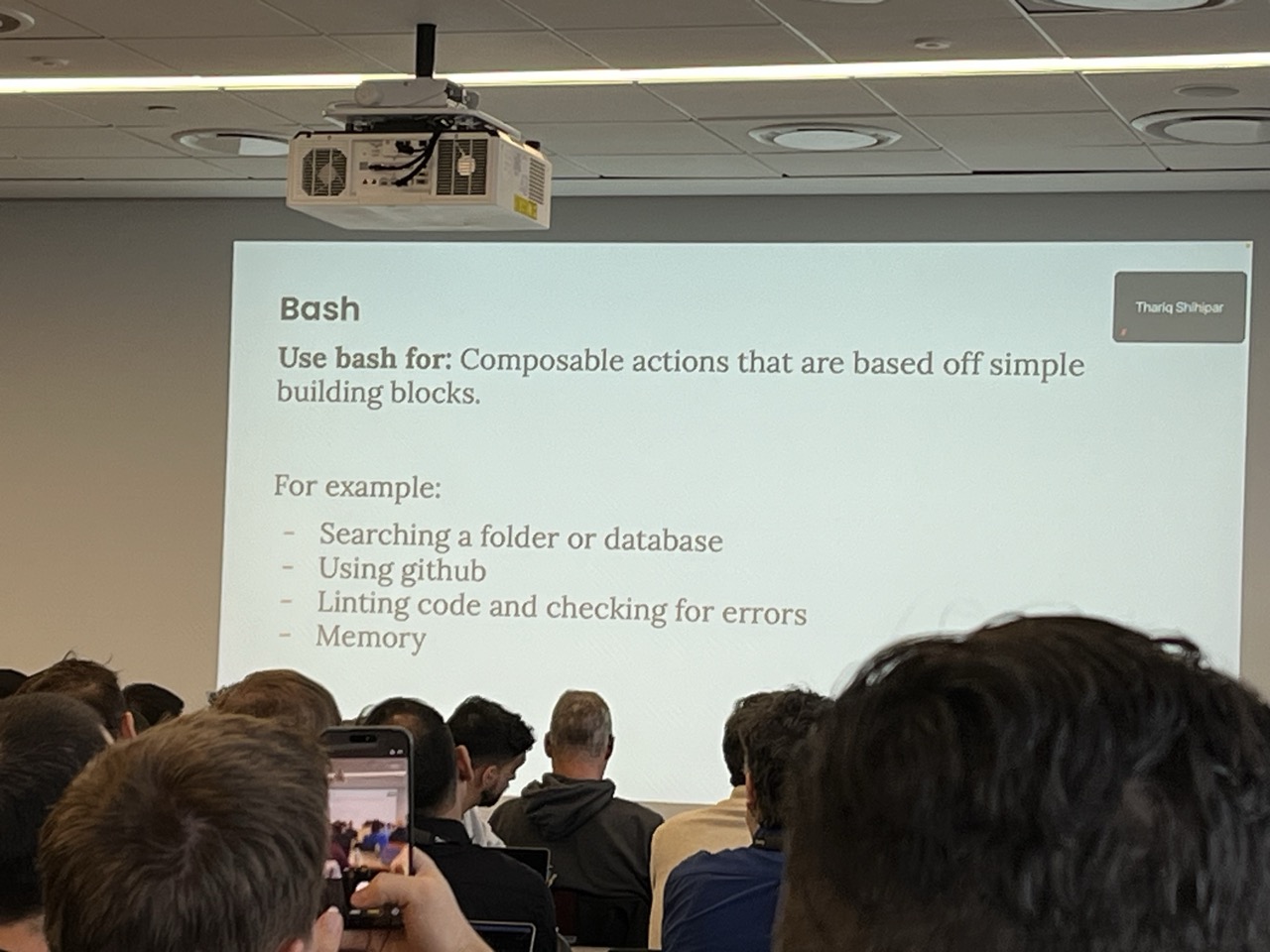

Skills - Bash

"Bash is all you need" ... unless...

Skills - Topical

You need to do topical stuff.